The Advent of Recursion & Logic in Computer Science



Recommended publications


Thomas Ströhlein's Endgame Tables: a 50th Anniversary

Thomas Ströhlein's Endgame Tables: a 50th Anniversary Article Supplemental Material The Festschrift on the 40th Anniversary of the Munich Faculty of Informatics Haworth, G. (2020) Thomas Ströhlein's Endgame Tables: a 50th Anniversary. ICGA Journal, 42 (2-3). pp. 165-170. ISSN 1389-6911 doi: https://doi.org/10.3233/ICG-200151 Available at http://centaur.reading.ac.uk/90000/ It is advisable to refer to the publisher’s version if you intend to cite from the work. See Guidance on citing. Published version at: https://content.iospress.com/articles/icga-journal/icg200151 To link to this article DOI: http://dx.doi.org/10.3233/ICG-200151 Publisher: The International Computer Games Association All outputs in CentAUR are protected by Intellectual Property Rights law, including copyright law. Copyright and IPR is retained by the creators or other copyright holders. Terms and conditions for use of this material are defined in the End User Agreement. www.reading.ac.uk/centaur CentAUR Central Archive at the University of Reading Reading’s research outputs online 40 Jahre Informatik in München: 1967 – 2007 Festschrift Herausgegeben von Friedrich L. Bauer unter Mitwirkung von Helmut Angstl, Uwe Baumgarten, Rudolf Bayer, Hedwig Berghofer, Arndt Bode, Wilfried Brauer, Stephan Braun, Manfred Broy, Roland Bulirsch, Hans-Joachim Bungartz, Herbert Ehler, Jürgen Eickel, Ursula Eschbach, Anton Gerold, Rupert Gnatz, Ulrich Güntzer, Hellmuth Haag, Winfried Hahn (†), Heinz-Gerd Hegering, Ursula Hill-Samelson, Peter Hubwieser, Eike Jessen, Fred Kröger, Hans Kuß, Klaus Lagally, Hans Langmaack, Heinrich Mayer, Ernst Mayr, Gerhard Müller, Heinrich Nöth, Manfred Paul, Ulrich Peters, Hartmut Petzold, Walter Proebster, Bernd Radig, Angelika Reiser, Werner Rüb, Gerd Sapper, Gunther Schmidt, Fred B. -

Edsger Dijkstra: the Man Who Carried Computer Science on His Shoulders

INFERENCE / Vol. 5, No. 3 Edsger Dijkstra: The Man Who Carried Computer Science on His Shoulders Krzysztof Apt

As it turned out, the train I had taken from Nijmegen to Eindhoven arrived late. To make matters worse, I was then unable to find the right office in the university building. When I eventually arrived for my appointment, I was more than half an hour behind schedule. The professor completely ignored my profuse apologies and proceeded to take a full hour for the meeting. It was the first time I met Edsger Wybe Dijkstra. At the time of our meeting in 1975, Dijkstra was 45 years old. The most prestigious award in computer science, the ACM Turing Award, had been conferred on him three years earlier. Almost twenty years his junior, I knew very little about the field—I had only learned what a flowchart was a couple of weeks earlier.

Edsger Dijkstra was born in Rotterdam in 1930. He described his father, at one time the president of the Dutch Chemical Society, as “an excellent chemist,” and his mother as “a brilliant mathematician who had no job.” In 1948, Dijkstra achieved remarkable results when he completed secondary school at the famous Erasmiaans Gymnasium in Rotterdam. His school diploma shows that he earned the highest possible grade in no less than six out of thirteen subjects. He then enrolled at the University of Leiden to study physics. In September 1951, Dijkstra’s father suggested he attend a three-week course on programming in Cambridge. It turned out to be an idea with far-reaching consequences.

A Politico-Social History of ALGOL (With a Chronology in the Form of a Log Book)

A Politico-Social History of ALGOL (With a Chronology in the Form of a Log Book) R. W. BEMER Introduction This is an admittedly fragmentary chronicle of events in the development of the algorithmic language ALGOL. Nevertheless, it seems pertinent, while we await the advent of a technical and conceptual history, to outline the matrix of forces which shaped that history in a political and social sense. Perhaps the author's role is only that of recorder of visible events, rather than the complex interplay of ideas which have made ALGOL the force it is in the computational world. It is true, as Professor Ershov stated in his review of a draft of the present work, that "the reading of this history, rich in curious details, nevertheless does not enable the beginner to understand why ALGOL, with a history that would seem more disappointing than triumphant, changed the face of current programming". I can only state that the time scale and my own lesser competence do not allow the tracing of conceptual development in requisite detail. Books are sure to follow in this area, particularly one by Knuth. A further defect in the present work is the relatively lesser availability of European input to the log, although I could claim better access than many in the U.S.A. This is regrettable in view of the relatively stronger support given to ALGOL in Europe. Perhaps this calmer acceptance had the effect of reducing the number of significant entries for a log such as this. Following a brief view of the pattern of events come the entries of the chronology, or log, numbered for reference in the text. -

Early Stored Program Computers

Stored Program Computers Thomas J. Bergin Computing History Museum American University 7/9/2012 Early Thoughts about Stored Programming • January 1944 Moore School team thinks of better ways to do things; leverages delay line memories from War research • September 1944 John von Neumann visits project – Goldstine’s meeting at Aberdeen Train Station • October 1944 Army extends the ENIAC contract research on EDVAC stored-program concept • Spring 1945 ENIAC working well • June 1945 First Draft of a Report on the EDVAC First Draft Report (June 1945) • John von Neumann prepares (?) a report on the EDVAC which identifies how the machine could be programmed (unfinished very rough draft) – academic: publish for the good of science – engineers: patents, patents, patents • von Neumann never repudiates the myth that he wrote it; most members of the ENIAC team contribute ideas; Goldstine note about “bashing” summer letters together • 1.0 Definitions – The considerations which follow deal with the structure of a very high speed automatic digital computing system, and in particular with its logical control…. – The instructions which govern this operation must be given to the device in absolutely exhaustive detail. They include all numerical information which is required to solve the problem…. – Once these instructions are given to the device, it must be able to carry them out completely and without any need for further intelligent human intervention…. • 2.0 Main Subdivision of the System – First: since the device is a computor, it will have to perform the elementary operations of arithmetics…. – Second: the logical control of the device is the proper sequencing of its operations (by…a control organ. -

Reinventing Education Based on Data and What Works • Since 1955

Reinventing Education Based on Data and What Works • Since 1955 Carnegie Mellon is reinventing education and the way we think about leveraging technology through its study of the science of learning – an interdisciplinary effort that we’ve been tackling for more than 50 years with both computer scientists and psychologists. CMU's educational technology innovations have inspired numerous startup companies, which are helping students to learn more effectively and efficiently.

1955: Allen Newell (TPR ’57) joins Prof. Herbert Simon’s research team as a Ph.D. student.
1956: CMU creates one of the world’s first university computation centers. With Prof. Alan Perlis (MCS ’42) as its head, it is a joint undertaking of faculty from the business, psychology, electrical engineering and mathematics departments, and the precursor to computer science.
1956: Simon creates a “thinking machine”—enacting a mental process by breaking it down into its simplest steps. Later that year, the term “artificial intelligence” is coined by a small group including Newell and Simon.
1956: Simon, Newell and J. -
1995: Prof. Kenneth R. Koedinger (HSS ’88,’90) and Anderson develop Practical Algebra Tutor. The program pioneers a new form of computer-aided instruction for high school students based on cognitive tutors.
1995: Prof. Jack Mostow (SCS ’81) develops Project LISTEN, an intelligent tutor that helps children learn to read. The National Science Foundation included Project LISTEN’s speech recognition system as one of its top 50 innovations from 1950-2000.
1995: The Center for Automated Learning and Discovery is formed, led by Prof. Thomas M. Mitchell.
1998: Spinoff company Carnegie Learning is founded by CMU scientists to expand -

Turing's Influence on Programming — Book Extract from “The Dawn of Software Engineering: from Turing to Dijkstra”

Turing's Influence on Programming: Book extract from “The Dawn of Software Engineering: from Turing to Dijkstra” Edgar G. Daylight Eindhoven University of Technology, The Netherlands Abstract Turing's involvement with computer building was popularized in the 1970s and later. Most notable are the books by Brian Randell (1973), Andrew Hodges (1983), and Martin Davis (2000). A central question is whether John von Neumann was influenced by Turing's 1936 paper when he helped build the EDVAC machine, even though he never cited Turing's work. This question remains unsettled up till this day. As remarked by Charles Petzold, one standard history barely mentions Turing, while the other, written by a logician, makes Turing a key player. Contrast these observations then with the fact that Turing's 1936 paper was cited and heavily discussed in 1959 among computer programmers. In 1966, the first Turing award was given to a programmer, not a computer builder, as were several subsequent Turing awards. An historical investigation of Turing's influence on computing, presented here, shows that Turing's 1936 notion of universality became increasingly relevant among programmers during the 1950s. The central thesis of this paper states that Turing's influence was felt more in programming after his death than in computer building during the 1940s. 1 Introduction Many people today are led to believe that Turing is the father of the computer, the father of our digital society, as also the following praise for Martin Davis's bestseller The Universal Computer: The Road from Leibniz to Turing suggests: At last, a book about the origin of the computer that goes to the heart of the story: the human struggle for logic and truth. -

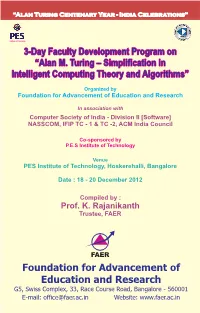

Alan M. Turing – Simplification in Intelligent Computing Theory and Algorithms

“Alan Turing Centenary Year - India Celebrations” 3-Day Faculty Development Program on “Alan M. Turing – Simplification in Intelligent Computing Theory and Algorithms” Organized by Foundation for Advancement of Education and Research In association with Computer Society of India - Division II [Software], NASSCOM, IFIP TC - 1 & TC - 2, ACM India Council Co-sponsored by P.E.S Institute of Technology Venue PES Institute of Technology, Hoskerehalli, Bangalore Date : 18 - 20 December 2012 Compiled by : Prof. K. Rajanikanth Trustee, FAER Foundation for Advancement of Education and Research G5, Swiss Complex, 33, Race Course Road, Bangalore - 560001 Website: www.faer.ac.in PREFACE Alan Mathison Turing was born on June 23rd 1912 in Paddington, London. Alan Turing was a brilliant original thinker. He made original and lasting contributions to several fields, from theoretical computer science to artificial intelligence, cryptography, biology, philosophy etc. He is generally considered the father of theoretical computer science and artificial intelligence. His brilliant career came to a tragic and untimely end in June 1954. In 1945 Turing was awarded the O.B.E. for his vital contribution to the war effort. In 1951 Turing was elected a Fellow of the Royal Society. -

The Advent of Recursion in Programming, 1950s-1960s

The Advent of Recursion in Programming, 1950s-1960s Edgar G. Daylight Institute of Logic, Language, and Computation, University of Amsterdam, The Netherlands Abstract. The term ‘recursive’ has had different meanings during the past two centuries among various communities of scholars. Its historical epistemology has already been described by Soare (1996) with respect to the mathematicians, logicians, and recursive-function theorists. The computer practitioners, on the other hand, are discussed in this paper by focusing on the definition and implementation of the ALGOL60 programming language. Recursion entered ALGOL60 in two novel ways: (i) syntactically with what we now call BNF notation, and (ii) dynamically by means of the recursive procedure. As is shown, both (i) and (ii) were introduced by linguistically-inclined programmers who were not versed in logic and who, rather unconventionally, abstracted away from the down-to-earth practicalities of their computing machines. By the end of the 1960s, some computer practitioners had become aware of the theoretical insignificance of the recursive procedure in terms of computability, though without relying on recursive-function theory. The presented results help us to better understand the technological ancestry of modern-day computer science, in the hope that contemporary researchers can more easily build upon its past. Keywords: recursion, recursive procedure, computability, syntax, BNF, ALGOL, logic, recursive-function theory 1 Introduction In his paper, Computability and Recursion [41], Soare explained the origin of computability, the origin of recursion, and how both concepts were brought together in the 1930s by Gödel, Church, Kleene, Turing, and others. -

Edsger W. Dijkstra: a Commemoration

Edsger W. Dijkstra: a Commemoration Krzysztof R. Apt (CWI, Amsterdam, The Netherlands and MIMUW, University of Warsaw, Poland) and Tony Hoare (Department of Computer Science and Technology, University of Cambridge and Microsoft Research Ltd, Cambridge, UK), editors. arXiv:2104.03392v1 [cs.GL] 7 Apr 2021 Abstract This article is a multiauthored portrait of Edsger Wybe Dijkstra that consists of testimonials written by several friends, colleagues, and students of his. It provides unique insights into his personality, working style and habits, and his influence on other computer scientists, as a researcher, teacher, and mentor. Contents: Preface, Tony Hoare, Donald Knuth, Christian Lengauer, K. Mani Chandy, Eric C.R. Hehner, Mark Scheevel, Krzysztof R. Apt, Niklaus Wirth, Lex Bijlsma, Manfred Broy, David Gries, Ted Herman, Alain J. Martin, J Strother Moore, Vladimir Lifschitz, Wim H. Hesselink, Hamilton Richards, Ken Calvert, David Naumann, David Turner, J.R. Rao, Jayadev Misra, Rajeev Joshi, Maarten van Emden, Two Tuesday Afternoon Clubs. Preface Edsger Dijkstra was perhaps the best known, and certainly the most discussed, computer scientist of the seventies and eighties. We both knew Dijkstra (though each of us in different ways) and we both were aware that his influence on computer science was not limited to his pioneering software projects and research articles. He interacted with his colleagues by way of numerous discussions, extensive letter correspondence, and hundreds of so-called EWD reports that he used to send to a select group of researchers. -

A Biased History of Programming Languages

A Biased History of Programming Languages Programming Languages: A Short History Fortran Cobol Algol Lisp Basic PL/I Pascal Scheme MacLisp InterLisp Franz C … Ada Common Lisp Roman Hand-Abacus. Image is from Museo Nazionale Romano (at Piazzi delle Terme, Rome) History • Pre-History : The first programmers • Pre-History : The first programming languages • The 1940s: Von Neumann and Zuse • The 1950s: The First Programming Language • The 1960s: An Explosion in Programming languages • The 1970s: Simplicity, Abstraction, Study • The 1980s: Consolidation and New Directions • The 1990s: Internet and the Web • The 2000s: Constraint-Based Programming Ramon Lull (1274) Raymondus Lullus Ars Magna et Ultima Gottfried Wilhelm Freiherr von Leibniz (1666) The only way to rectify our reasonings is to make them as tangible as those of the Mathematician, so that we can find our error at a glance, and when there are disputes among persons, we can simply say: Let us calculate, without further ado, in order to see who is right. Charles Babbage • English mathematician • Inventor of mechanical computers: – Difference Engine, construction started but not completed (until a 1991 reconstruction) – Analytical Engine, never built I wish to God these calculations had been executed by steam! Charles Babbage, 1821 Difference Engine No.1 Woodcut of a small portion of Mr. Babbage's Difference Engine No.1, built 1823-33. Construction was abandoned 1842. Difference Engine. Built to specifications 1991. It has 4,000 parts and weighs over 3 tons. Fixed two bugs. Portion of Analytical Engine (Arithmetic and Printing Units). Under construction in 1871 when Babbage died; completed by his son in 1906. -

Early Life: 1924–1945

An interview with FRITZ BAUER Conducted by Ulf Hashagen on 21 and 26 July, 2004, at the Technische Universität München. Interview conducted by the Society for Industrial and Applied Mathematics, as part of grant # DE-FG02-01ER25547 awarded by the US Department of Energy. Transcript and original tapes donated to the Computer History Museum by the Society for Industrial and Applied Mathematics © Computer History Museum Mountain View, California ABSTRACT: Professor Friedrich L. Bauer describes his career in physics, computing, and numerical analysis. Professor Bauer was born in Thaldorf, Germany near Kelheim. After his schooling in Thaldorf and Pfarrkirchen, he received his baccalaureate at the Albertinium, a boarding school in Munich. He then faced the draft into the German Army, serving first in the German labor service. After training in France and a deployment to the Eastern Front in Kursk, he was sent to officer's school. His reserve unit was captured in the Ruhr Valley in 1945 during the American advance. He returned to Pfarrkirchen in September 1945 and in spring of 1946 enrolled in mathematics and physics at the Ludwig-Maximilians-Universität, München. He studied mathematics with Oscar Perron and Heinrich Tietze and physics with Arnold Sommerfeld and Paul August Mann. He was offered a full assistantship with Fritz Bopp and finished his Ph.D. in 1951 writing on group representations in particle physics. He then joined a group in Munich led by a professor of mathematics Robert Sauer and the electrical engineer Hans Piloty, working with a colleague Klaus Samelson on the design of the PERM, a computer based in part on the Whirlwind concept. -

Oral History Interview with Friedrich L. Bauer

An Interview with FRIEDRICH L. BAUER OH 128 Conducted by William Aspray on 17 February 1987 Munich, West Germany Charles Babbage Institute The Center for the History of Information Processing University of Minnesota, Minneapolis Copyright, Charles Babbage Institute Friedrich L. Bauer Interview 17 February 1987 Abstract Bauer briefly reviews his early life and education in Bavaria through his years in the German army during World War II. He discusses his education in mathematics and theoretical physics at the University of Munich through the completion of his Ph.D. in 1952. He explains how he first came in contact with work on modern computers through a seminar in graduate school and how he and Klaus Samelson were led to join the PERM group in 1952. Work on the hardware design and on compilers is mentioned. Bauer then discusses the origins and design of the logic computer STANISLAUS, and his role in its development. The next section of the interview describes the European side of the development of ALGOL, including his work and that of Rutishauser, Samelson, and Bottenbrach. The interview concludes with a brief discussion of Bauer's work in numerical analysis in the 1950s and 1960s and his subsequent investigations of programming methodology. FRIEDRICH L. BAUER INTERVIEW DATE: 17 February 1987 INTERVIEWER: William Aspray LOCATION: Deutsches Museum, Munich, West Germany ASPRAY: This is an interview on the 17th of February, 1987 at the Deutsches Museum in Munich. The interviewer is William Aspray of the Charles Babbage Institute. The interview subject is Dr. F. L. Bauer. Let's begin by having you discuss very briefly your early career in education, say up until World War II.