SMART MOVEMENT DETECTION FOR ANDROID PHONES: A Degree Thesis Submitted to the Faculty of the Escola Tècnica d'Enginyeria de Tele

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

iPad Pro Just Might Replace Your Laptop 21 January 2016, by Jim Rossman, The Dallas Morning News

Review: iPad Pro just might replace your laptop 21 January 2016, by Jim Rossman, The Dallas Morning News For the first time, Apple made an iPad with a bigger screen. It also introduced a few accessories, like the Apple Pencil and the keyboard cover, that make it even more compelling. Would this be the model to replace my MacBook Pro? I'm still making that decision, but let me tell you how it's going so far. Every time a new iPad is introduced, I have the same conversation with myself. "Maybe this one will replace my laptop," I muse. But alas, each time I've been disappointed. "Not yet," I mutter. "Maybe someday." Third-party keyboard cases do a good job at helping the iPad look like a laptop, but a few basic things keep me from my dream. For my writing, I need a comfortable keyboard, a decently fast processor and screen, and a way to easily move, copy, and paste among my writing […]

BIG SCREEN The first thing you'll notice about the iPad Pro is its 12.9-inch screen. This is an iPad on steroids. Apple sells laptops with similarly sized screens. My own iPad is a 7.9-inch iPad mini, so stepping up almost 5 inches in screen size feels quite luxurious. The iPad Pro's screen has a resolution of 2,732 by 2,048 pixels (264 pixels per inch). It runs Apple's A9X processor and has 4 gigabytes of RAM. You can configure the iPad Pro with 32 or 128 gigabytes of internal storage. Pricing is $799 for the 32GB model and $949 for 128GB. -

Apple Launches iPad Pro: 12.9", Apple A9X, Smart Keyboard and Pencil

Apple launches iPad Pro: 12.9", Apple A9X, Smart Keyboard and Pencil - Last modified: Thursday, 10 September 2015 17:04 Published: Wednesday, 09 September 2015 20:16 Written by Laura Benedetti Apple iPad Pro is official. The 12.9-inch Retina maxi-tablet (2732 x 2048 pixels) with the Apple A9X will reach the market in November in three versions priced at $799, $949 and $1,079. The Apple Pencil and the Smart Keyboard are optional, at $99 and $169. The iPad Pro had been anticipated by numerous rumors, but only today, in the same Bill Graham Civic Auditorium where the Apple II was shown almost forty years ago, was it made official. It will complete Apple's tablet range, joining the 9.7-inch iPad Air and the 7.9-inch iPad mini, with a large 12.9-inch Retina display (2732 x 2048 pixels) showing more than 5.6 million pixels. And the diagonal is no accident: the long side of the iPad Air 2 equals the short side of the iPad Pro (coincidence?). It is not only the newest model in the iPad family and the largest launched to date, but also the most powerful; it integrates one of the fastest processors on the market and supports all the features missing from smaller iPads. After all, the name "Pro" speaks for itself: this is an iPad aimed at productivity, so it is at home in offices and in medical or graphic design studios. Apple iPad Pro is equipped with an Apple A9X processor, up to 1.8 times faster than an Apple A8X and with doubled graphics performance. -

A STUDY of MODERN MOBILE TABLETS by Jordan Kahtava A

A STUDY OF MODERN MOBILE TABLETS By Jordan Kahtava A thesis submitted in partial fulfillment of the requirements for the degree Bachelor of Computer Science Algoma University Gerry Davies, Thesis Advisor April 14th, 2016 Abstract Mobile tablets have begun playing a larger role in mobile computing because of their portability. To gather an understanding of what mobile computers can currently accomplish, Microsoft and Apple tablets were examined. In general this topic is very broad and hard to research because of the number of mobile devices and tablets. Examining this document should provide detailed insight into mobile tablets and their hardware, operating systems, and programming environment. Any developer can use the information to develop, publish, and set up the appropriate development environments for either Apple or Microsoft. The Incremental waterfall methodology was used to develop two applications that utilize the Accelerometer, Gyroscope, and Inclinometer/Attitude sensors. In addition, extensive research was conducted and combined to outline how applications can be published and the rules associated with each application store. The Apple application used Xcode and Objective-C while the Microsoft application used Visual Studio 2012, C-Sharp, and XAML. It was determined that developing for Apple is significantly easier because of the extensive documentation and examples available. In addition, Apple’s IDE Xcode can be used to develop, design, test, and publish applications without the need for other programs. It is hard to find easily understandable documentation from Microsoft regarding a particular operating system. Visual Studio 2012 or later must be used to develop Microsoft Store applications. -

Heterogeneous Resource Mobile Sensing: Computational Offloading

Heterogeneous resource mobile sensing: computational offloading, scheduling and algorithm optimisation Petko Georgiev Corpus Christi College University of Cambridge January 2017 This dissertation is submitted for the degree of Doctor of Philosophy Declaration This dissertation is the result of my own work and includes nothing which is the outcome of work done in collaboration except where specifically indicated in the text. This dissertation does not exceed the regulation length of 60 000 words, including tables and footnotes. Summary Important mobile apps such as digital assistants (Google Now, Apple Siri) rely on sensor data and require low-overhead accurate inferences. Although many sensor types are found in devices, the microphone with its high sampling rates arguably poses one of the greatest sensor-related mobile resource utilisation challenges and has similar generic problems faced by all sensors, e.g., efficient background operation, or obtaining accurate inferences in real time. Resource-heavy inference algorithms often employed by audio sensing apps, and the growing number of simultaneously running on-device audio background services, result in app deployments that routinely struggle to reach a day worth of operation alongside other existing services with a single battery charge. In this dissertation we address the challenges presented by emerging audio workloads on smartphone and wearable devices: we enable accurate and efficient multi-app microphone sensing by carefully managing the full range of heterogeneous processors available to high-end mobile platforms. We devise three general classes of resource optimisation techniques to empower microphone sensing apps with continuous, accurate, and more energy efficient operation. -

A Comparative Study of 64 Bit Microprocessors Apple, Intel and AMD

Special Issue - 2016 International Journal of Engineering Research & Technology (IJERT) ISSN: 2278-0181 ICACT - 2016 Conference Proceedings A Comparative Study of 64 Bit Microprocessors Apple, Intel and AMD Surakshitha S, Shifa A Ameen, Rekha P M (each: Information Science and Engineering, JSSATE, Bangalore, India) Abstract- In this paper, we draw a comparative study of microprocessors, using the three major processors: Apple, Intel and AMD. Although the philosophy of their microarchitecture is the same, they differ in their approaches to implementation. We discuss architecture issues, limitations, architectures and their features. We live in a world of constant competition dating to the historical words of Charles Darwin, 'Survival of the fittest'. As time passed by, applications needed more processing power and this led to an explosive era of research on the architecture of microprocessors. We present a technical & comparative study of these smart microprocessors. We study and compare two microprocessor families that have been at the core of the […]

[…] registers, even larger. The term may also refer to the size of low-level data types, such as 64-bit floating-point numbers. Most CPUs are designed so that the contents of a single integer register can store the address of any data in the computer's virtual […] considered an appropriate size to work with for another important reason: 4.29 billion integers are enough to assign unique references to most entities in applications like databases. Some supercomputer architectures of the 1970s and 1980s used registers up to 64 bits wide. In the mid-1980s, Intel i860 development began culminating in a 1989 release. -

Market Power, Regulation and the App Economy

GSR-16 Discussion paper THE RACE FOR SCALE: MARKET POWER, REGULATION AND THE APP ECONOMY Work in progress, for discussion purposes Comments are welcome! Please send your comments on this paper at: [email protected] by 30 May 2016 The views expressed in this paper are those of the author and do not necessarily reflect the opinions of ITU or its Membership. CONTENTS 1 EXECUTIVE SUMMARY 2 BACKGROUND AND DEFINITION 2.1 What is the app economy? 2.2 Defining the app economy and its ecosystem 3 THE APP ECONOMY VALUE CHAIN AND THE GLOBALISATION OF APP DEVELOPMENT 3.1 The structure of the app economy 4 THE ECONOMICS OF DISRUPTION 4.1 Transactions costs 4.2 Modes of digital disruption 4.3 Potential benefits of app disruption 4.4 The race for scale and -

CSRN 2902 (Eds.)

ISSN 2464–4617 (print) ISSN 2464–4625 (CD-ROM) CSRN 2902 (Eds.) Vaclav Skala University of West Bohemia, Czech Republic 27. International Conference in Central Europe on Computer Graphics, Visualization and Computer Vision WSCG 2019 Plzen, Czech Republic May 27 – 30, 2019 Proceedings WSCG 2019 Proceedings – Part II Computer Science Research Notes Vaclav Skala - UNION Agency This work is copyrighted; however all the material can be freely used for educational and research purposes if publication properly cited. The publisher, the authors and the editors believe that the content is correct and accurate at the publication date. The editor, the authors and the editors cannot take any responsibility for errors and mistakes that may have been taken. Computer Science Research Notes CSRN 2902 Editor-in-Chief: Vaclav Skala c/o University of West Bohemia Univerzitni 8 CZ 306 14 Plzen Czech Republic [email protected] http://www.VaclavSkala.eu Managing Editor: Vaclav Skala Publisher & Author Service Department & Distribution: Vaclav Skala - UNION Agency Na Mazinach 9 CZ 322 00 Plzen Czech Republic Reg.No. (ICO) 416 82 459 Published in cooperation with the University of West Bohemia Univerzitní 8, 306 14 Pilsen, Czech Republic ISSN 2464-4617 (Print) ISSN 2464-4625 (CD/DVD) ISBN 978-80-86943-38-1 (CD/-ROM) WSCG 2019 International Program Committee Manoharan,P. -

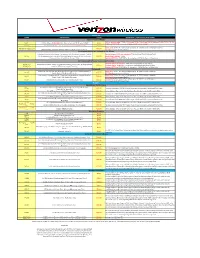

DMS-1011-008C Verizon Contract Pricing

Price Plans CODES DESCRIPTION ACCESS GUIDELINES & OPTIONAL PLAN FEATURES Nationwide Per Minute N/A on feature codes 77294, 77295, and 79740, 79407, and 72409. Must use 79789, 79790, 79791, 86680 $.049 Per Minute Nationwide Voice Plan-includes 600 M2M and 600 N&W $0.00 79780, or 79781. Please see smartphone feature codes below 80245 200 Text/Pix/Flix Messages-Optional on price plan 86680 only $0.00 Basic: (3G) 73749 - $0 Push To Talk Enterprise & 73814 General IP Address (required) 86678/94976 (Dura XV) $10.00 Unlimited Push to Talk w/ Unlimited Mobile 2 Mobile .049 per min rate PTT Plus-$0 (auto attaches-81814) Smartphone Standalone Nationwide Per Minute Unlimited Nationwide Blackberry/ Smartphone Data / Unlimited Domestic Text.Pix, Mobile Hotspot (5 GB auto attached)- 76404 (4G) and 76405 (3G) and 79048 93445 Flix Messaging, Unlimited Mobile 2 Mobile & Nights/Weekends ($.052 Per Minute $35.99 Block Mobile Hotspot-78485 Nationwide Voice Plan)/Mobile Hotspot-5GB) Optional-PTT Plus-$5 (80598 for all smartphones, 80590 for Kyocera Brigadier) Smartphone All Inclusive Nationwide Price Plan 3G Smartphone- $0 Hotspot-79441 (auto attached built into price plan) 86769 (4G) Unlimited Nationwide Calling w/ Unlimited Domestic Text/Pix/Flix Messaging; Email $51.00 4G Smartphone -$0 Hotspot- 76065 (auto attached built into the price plan) 86768 (3G) and Data, 5GB of data hotspot/Tethering Optional-PTT Plus-$5 (80598 for all smartphones, 80590 for Kyocera Brigadier) Nationwide Nationwide 250 Anytime Min ($.052 per min overage rate) w/ Unlimited Mobile -

Huawei's Mobile Processors

Huawei's mobile processors Dezső Sima Vers. 2.1 November 2018 Sima Dezső, 2018 Huawei's mobile processors • 1. Overview • 2. Examples: The Kirin 950 • 2.1 The Kirin 950 • 2.2 The Kirin 960 • 2.3 The Kirin 970 • 2.4 The Kirin 980 • 3. References 1. Overview 1. Overview (1) Huawei [1] • Huawei Technologies Co. Ltd. is a Chinese multinational networking and telecommunications equipment and services company headquartered in Shenzhen, China. • It was founded in 1987 and became the world's largest telecom equipment manufacturer. • The name Huawei (华为) means Chinese achievement. • It recently had about 180 000 employees. Figure: Huawei's logo [1] Figure: Huawei's headquarters in Shenzhen [1] 1. Overview (2) HiSilicon [2] • HiSilicon is a global fabless semiconductor and IC design company headquartered in Shenzhen, China. • It was founded in 2004 as a subsidiary of Huawei. • It has offices and research centers worldwide and about 7000 employees. • HiSilicon purchases licenses mainly from ARM and designs primarily application processors for mobiles. It filed over 5,000 patents. 1. Overview (3) Worldwide smartphone shipments by vendors Q4/2009 to Q4/2016 (million units) [3] 1. Overview (4) Worldwide market share of application processors in 2015 used in smartphones (based on revenue) [4] Market Processor lines Vendor Cores ISA share (examples) Qualcomm Snapdragon Qualcomm designed Krait cores ARMv7 42 % (USA) 200-800 ARM Cortex A line ARMv7/v8 Apple Apple A7-A9 Apple designed Cyclone core 21 % ARMv8 (USA) Apple A10 2xbig./4x LITTLE cores 8xARM Cortex A53 ARMv7 MediaTek Helio x10 (ARM big.LITTLE) 19 % (Taiwan) Helio X20 2xARM Cortex A72/8x A53 ARMv8 (ARM big.LITTLE) Samsung Exynos ARM Cortex A line ARMv7 (S. -

July, 2015 P.Gargini ITRS/RC

July, 2015 ITRS/RC P.Gargini ITRS Past, Present and Future Paolo Gargini Chairman ITRS Fellow IEEE, Fellow I-JSAP Agenda In the beginning Geometrical Scaling ITRS 1.0 Equivalent Scaling Post CMOS ITRS 2.0 3D Power Scaling Heterogeneous Integration Moore’s Law - 1965 2X/Year ~65,000 The Semiconductor Business in the 70s System Designer Product Proprietary Definition Product Definition Open Semiconductor Market Company Custom Standard Components Components System Integration The SX-70 The story of the struggle between Fairchild Instruments and Texas Instruments for the contract to supply the integrated circuitry for Polaroid's SX-70 camera, introduced in 1972, is related. Research and development work by both companies is described. The problems caused by Polaroid's secrecy regarding the overall camera design are highlighted. IEEE Spectrum archive Volume 26 Issue 5, May 1989 Phase 1 First Age of Scaling (Self-aligned Silicon Gate) MOS Transistor Scaling (1972) Scaled Constant Parameter Voltage Voltage S < 1 Supply Voltage (Vdd) S 1 Channel Length (Lg, Le) S S Channel Width (W) S S Gate Oxide Thickness (Tox) S S Substrate Doping (N) 1/s 1/s Drive Current (Id) S 1/s * Gate Capacitance (Cg) S S Gate Delay S S 2 Active Power S 3 S * Does Not Include Carrier Velocity Saturation R. -

Security Policy Module Version 10.0

Apple Inc. Apple corecrypto User Space Module for ARM (ccv10) FIPS 140-2 Non-Proprietary Security Policy Module Version 10.0 Prepared for: Apple Inc. One Apple Park Way Cupertino, CA 95014 www.apple.com Prepared by: atsec information security Corp. 9130 Jollyville Road, Suite 260 Austin, TX 78759 www.atsec.com ©2021 Apple Inc. This document may be reproduced and distributed only in its original entirety without revision Trademarks Apple’s trademarks applicable to this document are listed in https://www.apple.com/legal/intellectual-property/trademark/appletmlist.html. Other company, product, and service names may be trademarks or service marks of others. Last update: 2021-03-17 Version: 1.4 Table of Contents 1 Introduction 2 Purpose 2.1 Document Organization / Copyright 2.2 External Resources / References 2.2.1 Additional References 2.3 Acronyms 3 Cryptographic Module Specification -

Enjoy Flying Your TBM with the New E-COPILOT Reducing Pilot Workload

OWNERS AND PILOTS MAGAZINE SUMMER 2016 TBM SPECIAL EDITION FLYING THE LAST FOUR MINUTES IN CASE YOU HAVE TO DITCH Ditching automatically means you lose the airplane. Just try not to lose anything else Come visit us at Oshkosh! Booth # 2083 • Hangar B • Isle D See you there! SimCom is proud to have been the Exclusive Factory Authorized simulator training provider for the TBM fleet since 1999. Nothing sharpens your flying skills and prepares you for the unexpected like simulator training. Visit www.simulator.com to see a video describing why SIMCOM’s instructors, simulators and training locations will make your training experience special. BETTER TRAINING • SAFER PILOTS • GREATER VALUE ...Nothing Prepares You Like Simulator Training. Visit SIMCOM’s website at simulator.com Call 866.692.1994 to speak with one of our training advisors. © 2016 SIMCOM Aviation Training. All rights reserved. 7 LBS OF POWER START PAC ONE THE SMALLEST STARTER IN THE WORLD YOU NEED THE ONE • PATENT PENDING 7LB CABLE FREE DESIGN • FOR PISTON AND SMALL TO MEDIUM TURBINE ENGINES • 28V, 1500 PEAK AMPS, AND 10 AH CAPACITY • www.STARTPAC.com OR TOLL FREE 844.901.9987 TBM MAGAZINE 4 SUMMER 2016 TBM Owners & Pilots Magazine • Summer 2016 • Volume 6, Issue 2 8 12 18 30 DEPARTMENTS 6 Hello, Oshkosh By Frank J. McKee, TBMOPA chairman 8 New & Notable 10 Sky Gals Flying into history By P.J. Gold 40 From the Factory A busy and exciting springtime for the TBM By Nicolas Chabbert 42 Tax Talk The Taxpayer’s Holy Grail By Harry Daniels 46 MiPad The latest version of the iPad features a better screen, faster processor, new accessories and greater functionality By Wayne Rash Jr.