2 Oslo Stock Exchange 2

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

Report on the Aircraft Accident at Bodø Airport on 4 December 2003 Involving Dornier Do 228-202 LN-HTA, Operated by Kato Airline AS

SL Report 2007/23. REPORT ON THE AIRCRAFT ACCIDENT AT BODØ AIRPORT ON 4 DECEMBER 2003 INVOLVING DORNIER DO 228-202 LN-HTA, OPERATED BY KATO AIRLINE AS. This report has been translated into English and published by the AIBN to facilitate access by international readers. As accurate as the translation might be, the original Norwegian text takes precedence as the report of reference. June 2007. Accident Investigation Board Norway, P.O. Box 213, N-2001 Lillestrøm, Norway. Phone: +47 63 89 63 00. Fax: +47 63 89 63 01. http://www.aibn.no E-mail: [email protected] The Accident Investigation Board has compiled this report for the sole purpose of improving flight safety. The object of any investigation is to identify faults or discrepancies which may endanger flight safety, whether or not these are causal factors in the accident, and to make safety recommendations. It is not the Board’s task to apportion blame or liability. Use of this report for any other purpose than for flight safety should be avoided. INDEX: NOTIFICATION; SUMMARY; 1. FACTUAL INFORMATION; 1.1 History of the flight -

INGENIØRFORLAGET 10 ÅR

INGENIØRFORLAGET 10 ÅR (Ingeniørforlaget: 10 years). UDDEHOLM LAND, a realm of resources. Uddeholms Aktiebolag has grown slowly and organically out of the people of Värmland and the land that belongs to them. The group employs about 12,000 people and controls about 4.5 million decares of land and forest as well as modern production units. Together we manage a heritage many centuries old; the group was founded in 1668. We sell more tool steel to industry than anyone else, and we make blades for looms that are three times thinner than a human hair. We do our part to reduce the world's food problems by having solved one of the most difficult tasks steel producers have ever faced: developing new stainless steel grades used in the manufacture of artificial fertiliser. With our own mines, forests and power stations we are naturally in a stronger position than many others in the production of special steel, chemicals or kraft paper, both today and in the future. Our efforts have acted as a stimulus in the development of new quality criteria, which among other things include new processes in steel production to conserve energy and resources and new safeguards against pollution. We are proud of our companies and like to talk about them. Write or call us and ask for the brochure "Uddeholm om Uddeholm". UDDEHOLM STÅL A/S, Stålfjæra 1, Postboks 85 Kaldbakken, Oslo 9. Telephone (02) 256510, Telex 18473. Branch office Stavanger: Bergelandsgt. 38, 4000 Stavanger, telephone (045) 25 075. Contents. Ingeniørforlaget: At a milestone (Per Bjørnstad); Our mission (Bjarne Lien); Greetings from the Minister of Industry; Greetings from the owners; How the publishing house came about; 10 years of growth; Cooperation across national borders; What is Fagpressen?; The communities we work in; Our journals: Teknisk Ukeblad, Elektro, Kjemi, Maskin, Norsk Oljerevy, Plan og Bygg, Våre Veger. Petrochemicals, oil technology and plastics. Basic industry adapted to Norwegian conditions. -

Offshore Blow-Out Accidents - an Analysis of Causes of Vulnerability Exposing Technological Systems to Accidents

Offshore Blow-out Accidents - An Analysis of Causes of Vulnerability Exposing Technological Systems to Accidents. Thomas G Sætren [email protected] University of Oslo, Université Louis Pasteur. Assessing and communicating risks. Wordcount: 24983. Preface: This thesis is about understanding the causes of vulnerabilities leading to a specific type of accident on offshore oil and gas installations. Blow-out accidents have disastrous potential and exemplify accidents in advanced technological systems. The thesis aims to reveal dysfunctional mechanisms occurring within high-reliability systems, whether in organization or in socio-technical interaction. Technological systems hold a central place in technological development, and as such this thesis is placed in the technology and society part of the STS field, though it describes technological risks and accidents at group, organizational and industrial sector level. The contents are: a description of developments in offshore technological design, theories on how organisational vulnerabilities occur, empirical analysis of three major blow-out accidents, empirical analysis of one normal project for reference, a socio-technological historical description of development in the Norwegian offshore industry, and a final analysis. Keywords: Blow-out, Offshore, Vulnerabilities, Accident causes, Technological development, Social construction of technology, Bravo accident, West Vanguard, Snorre A, Ormen Lange. Acknowledgments: I am grateful for the help and advice I received from the researchers Ger Wackers (Univ of Maastricht/Univ of Oslo) and Knut Haukelid (Univ of Oslo) during the later stages of the project. I am also indebted to my two fellow students Marius Houm and for assistance and advice during the process. I owe the 14 interviewees many thanks for the time they spent talking to me, a novice in the offshore industry. -

Fields Listed in Part I. Group (8)

Chile Group (1) All fields listed in part I. Group (2) 2B. Recognized Medical Specializations (including, but not limited to: Anesthesiology, Audiology, Cardiography, Cardiology, Dermatology, Embryology, Epidemiology, Forensic Medicine, Gastroenterology, Hematology, Immunology, Internal Medicine, Neurological Surgery, Obstetrics and Gynecology, Oncology, Ophthalmology, Orthopedic Surgery, Otolaryngology, Pathology, Pediatrics, Pharmacology and Pharmaceutics, Physical Medicine and Rehabilitation, Physiology, Plastic Surgery, Preventive Medicine, Proctology, Psychiatry and Neurology, Radiology, Speech Pathology, Sports Medicine, Surgery, Thoracic Surgery, Toxicology, Urology and Virology) 2C. Veterinary Medicine 2D. Emergency Medicine 2E. Nuclear Medicine 2F. Geriatrics 2G. Nursing (including, but not limited to, registered nurses, practical nurses, physician's receptionists and medical records clerks) 2I. Dentistry 2M. Medical Cybernetics 2N. All Therapies, Prosthetics and Healing (except Medicine, Osteopathy or Osteopathic Medicine, Nursing, Dentistry, Chiropractic and Optometry) 2O. Medical Statistics and Documentation 2P. Cancer Research 2Q. Medical Photography 2R. Environmental Health Group (3) All fields listed in part I. Group (4) All fields listed in part I. Group (5) All fields listed in part I. Group (6) 6A. Sociology (except Economics and including Criminology) 6B. Psychology (including, but not limited to, Child Psychology, Psychometrics and Psychobiology) 6C. History (including Art History) 6D. Philosophy (including Humanities) -

Supplementary Report on the Bill Concerning Scandinavian Aviation Cooperation (Tillægsbet. o. lovf. vedr. det skandinaviske luftfartssamarbejde)

Supplementary report on the bill concerning Scandinavian aviation cooperation. To Bill No. L 108. Supplementary report submitted by the Committee on Public Works on 14 May 1987. Supplementary report on the Bill to extend Denmark's participation in the Scandinavian aviation cooperation. After the bill was referred back to the committee for renewed consideration at its second reading, the committee considered it at one meeting and, in that connection, held a consultation with the Minister for Public Works. The committee has also received written submissions from: Air Business, Air-X, Alkair, Braathens SAFE, Business Aviation, Business Flight Services, Cimber Air, Coast Aero Center, Conair, Delta Fly, Maersk Air, Metro Airways, Midtfly, MUK Air, Mørefly, Norsk Air, Norving, New Air, Partnair and Sterling Airways. During the consultation the minister ... direct air routes from the Jutland-Funen area to European destinations. The minister attaches importance to such routes being established where the necessary traffic base exists, and in such a way that there is a reasonable balance among the three Scandinavian countries in the service provided to regions outside the capital regions. The minister emphasised that if SAS, under these conditions, does not wish to exercise its right of first refusal, a concession may be granted to other companies. A majority (the committee members from Det Konservative Folkeparti, Venstre, Det Radikale Venstre, Centrum-Demokraterne and Kristeligt Folkeparti) has taken note of the minister's statement and recommends that the bill be passed unamended. A minority (the committee members from Socialdemokratiet and Socialistisk Folkeparti) recommends that the bill be passed unamended and refers to the same minority's statements in the report and during the second reading of the bill. The minority strongly requests that the minister, when considering the establishment of ... -

Utdrag Av Norges Luftfartøyregister Pr. 01. Januar 2000

NORGES LUFTFARTØYREGISTER NORWEGIAN CIVIL AIRCRAFT REGISTER UTDRAG AV NORGES LUFTFARTØYREGISTER PR. 01. JANUAR 2000 Summary of Norwegian Civil Aircraft Register showing the actual status on 01 January 2000 Type luftfartøy Antall Type of Aircraft Number Motordrevne fly 770 Engined-powered airplanes Helikoptre 125 Helicopters Seil-/motorfly 172 Gliders/motor-gliders Ballonger 19 Balloons Denne listen er en fortegnelse over samtlige luftfartøy registrert i Norges Luftfartøyregister. This is a list of all aircraft registered in the Norwegian Civil Aircraft Register. POSTBOKS 8050 DEP ., NO-0031 OSLO, NORWAY KONTORADRESSE : RÅDHUSGATA 2, OSLO TELEFON: +47 23 31 78 00, TELEFAKS: +47 23 31 79 95, TELEX: 77194 ENCA N E-POST: [email protected], INTERNETT: www.luftfartstilsynet.no, AFTN: ENCAYAYA BANKGIRO: 0826 05 69776, SWIFT: PGINNOKK, ORG.NR: 981 105 516 Side/Page - 2 - Registr. Owner Manufacturer Maximum Cert. of marks Aircraft type Weight (kg) Airw.ness Serial number expires LN-AAA Sundt AS Cessna Aircraft Company 9979 2000-12-31 Postboks 2801 Solli 650 0204 OSLO 650-0187 Operator Nor Aviation AS Postboks 50 1330 FORNEBU Tlf. 6759 0140/ Fax 6753 0450 LN-AAE Skjellerud Dag Endre Cessna Aircraft Company 1111 2000-03-31 Gotterud 175C 2830 RAUFOSS 175-57093 LN-AAF Handelsbanken Finans AB (publ) Cessna Aircraft Company 5670 2000-12-31 106 35 STOCKHOLM, Sverige 500 Operator 500-0311 H5 Air Service Norway AS Postboks 28 2061 GARDERMOEN Tlf. 6397 8555/ Fax 6397 8086 LN-AAJ Fagerhaug Terje Lake Aircraft Corporation 1180 2000-09-30 Johnsen Yngve -

Knowledge Based Oil and Gas Industry

Knowledge Based Oil and Gas Industry by Amir Sasson and Atle Blomgren Research Report 3/2011 BI Norwegian Business School Department of Strategy and Logistics Amir Sasson and Atle Blomgren: Knowledge Based Oil and Gas Industry © Amir Sasson and Atle Blomgren 2011 Forskningsrapport 03/2011 ISSN: 0803‐2610 BI Norwegian Business School N‐0442 Oslo Phone: 4641 0000 www.bi.no Print: Nordberg BI’s Research Reports may be ordered from our website www.bi.no/en/research‐publications. Executive Summary This study presents the Norwegian upstream oil and gas industry (defined as all oil and gas- related firms located in Norway, regardless of ownership) and evaluates the industry according to the underlying dimensions of a global knowledge hub - cluster attractiveness, education attractiveness, talent attractiveness, R&D and innovation attractiveness, ownership attractiveness, environmental attractiveness and cluster dynamics. The Norwegian oil and gas industry was built upon established Norwegian competences in mining (geophysics), maritime operations and maritime construction (yards), with invaluable inputs from foreign operators and suppliers. At present, the industry is a complete cluster of 136,000 employees divided into several sectors: Operators (22,000), Geo & Seismics (4,000), Drill & Well (20,000), Topside (43,000), Subsea (13,000) and Operations Support (34,000). The value creation from operators and suppliers combined represents one-third of total Norwegian GDP (2008). The Norwegian oil and gas industry has for many years been a highly attractive cluster in terms of technical competence. The last ten years have also seen improvements in terms of access to capital and quality of financial services provided. -

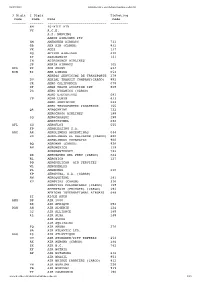

3 Digit 2 Digit Ticketing Code Code Name Code ------6M 40-MILE AIR VY A.C.E

06/07/2021 www.kovrik.com/sib/travel/airline-codes.txt

| 3 Digit Code | 2 Digit Code | Name | Ticketing Code |
|---|---|---|---|
| | 6M | 40-MILE AIR | |
| | VY | A.C.E. | |
| | | A.S. NORVING | |
| | | AARON AIRLINES PTY | |
| | SM | ABERDEEN AIRWAYS | 731 |
| | GB | ABX AIR (CARGO) | 832 |
| | VX | ACES | 137 |
| | XQ | ACTION AIRLINES | 410 |
| | ZY | ADALBANAIR | 121 |
| | IN | ADIRONDACK AIRLINES | |
| | JP | ADRIA AIRWAYS | 165 |
| REA | RE | AER ARANN | 684 |
| EIN | EI | AER LINGUS | 053 |
| | | AEREOS SERVICIOS DE TRANSPORTE | 278 |
| | DU | AERIAL TRANSIT COMPANY(CARGO) | 892 |
| | JR | AERO CALIFORNIA | 078 |
| | DF | AERO COACH AVIATION INT | 868 |
| | 2G | AERO DYNAMICS (CARGO) | |
| | | AERO EJECUTIVOS | 681 |
| | YP | AERO LLOYD | 633 |
| | | AERO SERVICIOS | 243 |
| | | AERO TRANSPORTES PANAMENOS | 155 |
| | QA | AEROCARIBE | 723 |
| | | AEROCHAGO AIRLINES | 198 |
| | 3Q | AEROCHASQUI | 298 |
| | | AEROCOZUMEL | 686 |
| AFL | SU | AEROFLOT | 555 |
| | FP | AEROLEASING S.A. | |
| ARG | AR | AEROLINEAS ARGENTINAS | 044 |
| | VG | AEROLINEAS EL SALVADOR (CARGO) | 680 |
| | | AEROLINEAS URUGUAYAS | 966 |
| | BQ | AEROMAR (CARGO) | 926 |
| | AM | AEROMEXICO | 139 |
| | | AEROMONTERREY | 722 |
| | XX | AERONAVES DEL PERU (CARGO) | 624 |
| | RL | AERONICA | 127 |
| | PO | AEROPELICAN AIR SERVICES | |
| | WL | AEROPERLAS | |
| | PL | AEROPERU | 210 |
| | 6P | AEROPUMA, S.A. (CARGO) | |
| | AW | AEROQUETZAL | 291 |
| | XU | AEROVIAS (CARGO) | 316 |
| | | AEROVIAS COLOMBIANAS (CARGO) | 158 |
| | | AFFRETAIR (PRIVATE) (CARGO) | 292 |
| | | AFRICAN INTERNATIONAL AIRWAYS | 648 |
| | ZI | AIGLE AZUR | |
| AMM | DP | AIR 2000 | |
| | RK | AIR AFRIQUE | 092 |
| DAH | AH | AIR ALGERIE | 124 |
| | 3J | AIR ALLIANCE | 188 |
| | 4L | AIR ALMA | 248 |
| | | AIR ALPHA | |
| | | AIR AQUITAINE | |
| | FQ | AIR ARUBA | 276 |
| | 9A | AIR ATLANTIC LTD. | |
| AAG | ES | AIR ATLANTIQUE | |
| | OU | AIR ATONABEE/CITY EXPRESS | 253 |
| | AX | AIR AURORA (CARGO) | 386 |
| | ZX | AIR B.C. | 742 |
| | KF | AIR BOTNIA | |
| | BP | AIR BOTSWANA | 636 |
| | | AIR BRASIL | 853 |
| | | AIR BRIDGE CARRIERS (CARGO) | 912 |
| | VH | AIR BURKINA | 226 |
| | PB | AIR BURUNDI | 919 |
| | TY | AIR CALEDONIE | 190 |
| | SB | AIR CALEDONIE INTERNATIONAL | 063 |
| ACA | AC | AIR CANADA | 014 |
| | XC | AIR CARIBBEAN | 918 |
| | SF | AIR CHARTER | |
| | | AIR CHARTER (CHARTER) | |
| | | AIR CHARTER SYSTEMS | 272 |
| CCA | CA | AIR CHINA | 999 |
| | CE | AIR CITY S.A. | |

-

Competition Policy and International Airport Services, 1997

Competition Policy and International Airport Services 1997 The OECD Competition Committee debated competition policy and international airport services in June 1997. This document includes an executive summary, an analytical note by the OECD staff and written submissions from Australia, Austria, Canada, the European Commission, Germany, Hungary, Italy, Japan, Korea, Norway, Poland, Sweden, Switzerland, the United Kingdom, the United States and BIAC, as well as an aide-memoire of the discussion. Although airlines have long sought to enter alliances, an important new development in the last decade has been the crystallization of international airline alliances around major airline groupings. The scope and nature of these alliances differ, but there is a tendency towards deeper alliances involving co-operation on all aspects of the airline business. These super-alliances are coming as close to actual mergers as aviation’s Byzantine regulations allow, raising fundamental questions for competition policy-makers and enforcers. Alliances have the potential both to enhance the level and quality of services offered to consumers and to significantly restrict competition. Why do airlines seek to enter such alliances? What are the benefits to the airlines or consumers? How do alliances restrict competition? What is the role played by frequent-flyer programmes and other loyalty schemes? What remedies should competition authorities consider to alleviate the harmful effects of alliances? What is the appropriate role for international co-operation between authorities? Structural Reform in the Rail Industry (2005) Competition Issues in Road Transport (2000) Competition in Local Services (2000) Airlines Mergers and Alliances (1999) Promoting Competition in Postal Services (1999) Unclassified DAFFE/CLP(98)3 Organisation de Coopération et de Développement Economiques OLIS : 07-May-1998 Organisation for Economic Co-operation and Development Dist. -

Asian Breeze (33)

Asian Breeze (33) (亜細亜の風) A Happy Spring to you all. 4 April, 2014. Dear Coordinators and Facilitators in the Asia/Pacific region, The long-awaited spring has finally come to Tokyo, with the "Sakura", or cherry trees, in full bloom. Since this winter was very cold with a lot of snow, the Sakura bloom was awaited especially impatiently. April is the start of everything in Japan, such as the school year, entry into companies and government offices, and the new fiscal year. Sakura seems to bless this new start, as if spring refreshes everything. The following illustration is a typical image of an elementary school entrance ceremony with the Sakura in bloom. One-point Japanese lesson: 「入学式」 in this illustration means the entrance ceremony at school. In this issue, we have received a wonderful contribution from Norway featuring Airport Coordination Norway (ACN), the four Level 3 airports of Oslo Gardermoen Airport (OSL), Stavanger Airport, Sola (SVG), Bergen Airport, Flesland (BGO) and Trondheim Airport, Værnes (TRD), and the one Level 2 airport of Kirkenes Airport, Høybuktmoen (KKN). I hope you will enjoy reading it. Airport Coordination Norway (ACN) Airport Coordination Norway AS is a registered limited company under Norwegian private law. Airport Coordination Norway Ltd is a non-profit company in charge of slot allocation for Oslo-Gardermoen, Stavanger, Bergen and Trondheim airports, and schedule facilitation for Kirkenes airport. Members The shares are owned 50% by coordinated airports and 50% by Norwegian licensed scheduled air carriers. The Board has representatives from airport operators Avinor and Oslo Airport, as well as the airlines SAS and Norwegian Air Shuttle. -

Norges Luftfartøyregister Utdrag Av Norges Luftfartøyregister Pr. 25. August 2000

NORGES LUFTFARTØYREGISTER NORWEGIAN CIVIL AIRCRAFT REGISTER UTDRAG AV NORGES LUFTFARTØYREGISTER PR. 25. AUGUST 2000 Summary of Norwegian Civil Aircraft Register showing the actual status on 25 August 2000 Type luftfartøy Antall Type of Aircraft Number Motordrevne fly 783 Engined-powered airplanes Helikoptre 132 Helicopters Seil-/motorfly 173 Gliders/motor-gliders Ballonger 16 Balloons Denne listen er en fortegnelse over samtlige luftfartøy registrert i Norges Luftfartøyregister. This is a list of all aircraft registered in the Norwegian Civil Aircraft Register. POSTBOKS 8050 DEP ., NO-0031 OSLO, NORWAY KONTORADRESSE : RÅDHUSGATA 2, OSLO TELEFON: +47 23 31 78 00, TELEFAKS: +47 23 31 79 95, TELEX: 77194 ENCA N E-POST: [email protected], INTERNETT: www.luftfartstilsynet.no, AFTN: ENCAYAYA BANKGIRO: 0826 05 69776, SWIFT: PGINNOKK, ORG.NR: 981 105 516 Side/Page - 2 - Registr. Owner Manufacturer Maximum Cert. of marks Aircraft type Weight (kg) Airw.ness Serial number expires LN-AAA Sundt AS Cessna Aircraft Company 9979 31.12.2000 Postboks 2801 Solli 650 0204 OSLO 650-0187 Operator Sundt Air AS Postboks 31 2061 GARDERMOEN Tlf. 6392 9660/ Fax 6392 9670 LN-AAE Skjellerud Dag Endre Cessna Aircraft Company 1111 31.03.2001 Gotterud 175C 2830 RAUFOSS 175-57093 LN-AAG * Gjengedal Hans Piper Aircraft Corporation 1999 30.04.2001 Gjengedal Ole PA -34-220T * Postboks 44 34-48070 3551 GOL LN-AAI Brigantina A/S Cessna Aircraft Company 1698 31.07.2001 Harbitzalleen 2 A TU 206G 0275 OSLO U206-06863 Operator Coronet Norge AS Postboks 2486 Solli 0202 OSLO -

PROSPECTUS Seadrill Limited (An Exempted Company Limited by Shares Incorporated Under the Laws of Bermuda) Listing of the Compan

PROSPECTUS Seadrill Limited (An exempted company limited by shares incorporated under the laws of Bermuda) Listing of the Company's common shares on the Oslo Stock Exchange This prospectus (the "Prospectus") has been prepared in connection with the listing (the "Listing") by Seadrill Limited, an exempted company limited by shares incorporated under the laws of Bermuda with registration number 53439 (the "Company", "Seadrill" or the "Successor" and from the Effective Date (as defined herein) together with its consolidated subsidiaries, the "Group"), on Oslo Børs, a stock exchange operated by Oslo Børs ASA (the "Oslo Stock Exchange") of 100,000,000 common shares in the Company with a par value of USD 0.10 (the "Shares"). The Company applied for the Shares to be admitted for trading and listing on the Oslo Stock Exchange on 19 July 2018, and the board of directors of the Oslo Stock Exchange approved the listing application of the Company on 24 July 2018. Trading in the Shares on the Oslo Stock Exchange is expected to commence on or about 26 July 2018, under the ticker code "SDRL". The Company has with effect from the Effective Date, being the date when the Debtors (as defined herein) emerged from Chapter 11 Proceedings (as defined herein), served as the ultimate parent holding company of the Group. Certain information described herein relates to the period prior to the Effective Date when the Company's predecessor Seadrill Limited, a company in which the economic interests in its shares were extinguished on the Effective Date with registration number 36832, (referred to herein as "Old Seadrill" or the "Predecessor", and together with its consolidated subsidiaries prior to the Effective Date the "Group"), and not the Company, was the parent holding company of the Group.