* I'm Somewhat Familiar with Ramachandran's Mirror Boxes By

Recommended publications

11Eyes Achannel Accel World Acchi Kocchi Ah! My Goddess Air Gear Air

11eyes; A-Channel; Accel World; Acchi Kocchi; Ah! My Goddess; Air Gear; Air Master; Amaenaideyo; Angel Beats; Angelic Layer; Another; Ao No Exorcist; Appleseed XIII; Aquarion; Arakawa Under The Bridge; Argento Soma; Asobi ni Iku yo; Astarotte no Omocha; Asu no Yoichi; Asura Cryin'; B Gata H Kei; Baka to Test; Bakemonogatari (and sequels); Baki the Grappler; Bakugan; Bamboo Blade; Banner of Stars; Basquash; BASToF Syndrome; Battle Girls: Time Paradox; Beelzebub; BenTo; Betterman; Big O; Binbougami ga; Black Blood Brothers; Black Cat; Black Lagoon; Blassreiter; Blood Lad; Blood+; Bludgeoning Angel Dokurochan; Blue Drop; Bobobo; Boku wa Tomodachi ga Sukunai; Brave 10; Btooom; Burst Angel; Busou Renkin; Busou Shinki; C3; Campione; Cardfight Vanguard; Casshern Sins; Cat Girl Nuku Nuku; Chaos;Head; Chobits; Chrome Shelled Regios; Chuunibyou demo Koi ga Shitai; Clannad; Claymore; Code Geass; Cowboy Bebop; Coyote Ragtime Show; Cuticle Tantei Inaba; DFrag; Dakara Boku wa, H ga Dekinai; Dan Doh; Dance in the Vampire Bund; Danganronpa; Danshi Koukousei no Nichijou; Daphne in the Brilliant Blue; Darker Than Black; Date A Live; Deadman Wonderland; DearS; Death Note; Dennou Coil; Denpa Onna to Seishun Otoko; Densetsu no Yuusha no Densetsu; Desert Punk; Detroit Metal City; Devil May Cry; Devil Survivor 2; Diabolik Lovers; Disgaea; Dna2; Dokkoida; Dog Days; Dororon EnmaKun Meeramera; Ebiten; Eden of the East; Elemental Gelade; Elfen Lied; Eureka 7; Eureka 7 AO; Excel Saga; Eyeshield 21; Fight Ippatsu! JuudenChan; Fooly Cooly; Fruits Basket; Full Metal Alchemist; Full Metal Panic; Futari Milky Holmes; GaRei Zero; Gatchaman Crowds; Genshiken; Getbackers; Ghost […]

Aachi Wa Ssipak Afro Samurai Afro Samurai Resurrection Air Air Gear

1001 Nights; AA! Megami-sama (2005); Aachi wa Ssipak; Afro Samurai; Afro Samurai Resurrection; Air; Air Gear; Akira; Angel Beats!; Animatrix, The; Ano Natsu de Matteru; Ao no Exorcist; Appleseed +(2004); Appleseed Saga Ex Machina; Argento Soma; Aria the Animation; Asobi ni Iku yo! +Ova; Ayakashi: Samurai Horror Tales; Azumanga Daioh; B Gata H Kei; Baccano!; Baka to Test to Shoukanjuu; Bakemonogatari; Bakuman.; Bakumatsu Kikansetsu Irohanihoheto; Bakuretsu Tenshi +Ova; Bamboo Blade; Bartender; Basilisk: Kouga Ninpou Chou; Beck […] Burn Up! Excess; Busou Renkin; Byousoku 5 Centimeter; C; Canaan; Card Captor Sakura; Casshern Sins; Chaos;Head; Chihayafuru; Chii's Sweet Home; Chii's Sweet Home: Atarashii O'uchi; Chobits; Choujuushin Gravion; Choujuushin Gravion Zwei; Chrno Crusade; Chuunibyou demo Koi ga Shitai!; Clannad; Clannad After Story; Claymore; Code Geass Hangyaku no Lelouch; Code Geass Hangyaku no Lelouch R2; Colorful; Cossette no Shouzou; Cowboy Bebop; Coyote Ragtime Show; Cyber City Oedo 808; D.Gray-man; D.N. Angel; Dance in […] Dragon Ball Z Movies 3; Druaga no Tou: the Aegis of Uruk; Druaga no Tou: the Sword of Uruk; Durarara!!; Dwaejiui Wang; Edens Bowy; El Cazador de la Bruja; Elfen Lied; Erementar Gerad; Evangelion; Evangelion Shin Gekijouban: Ha; Evangelion Shin Gekijouban: Jo; Fate/Stay Night; Fate/Stay Night: Unlimited Blade Works; Figure 17: Tsubasa & Hikaru; Final Fantasy; Final Fantasy Unlimited; Final Fantasy VII: Advent Children; Final Fantasy: The Spirits Within; Freedom; Fruits Basket; Full Metal Panic!; Full Metal Panic? Fumoffu + TSR; Furi Kuri; Fushigi Yuugi; Gad Guard; Gakuen Mokushiroku: High School […]

Newsletter 11/08 Digital Edition No.

ISSN 1610-2606. Newsletter 11/08, Digital Edition No. 231, June 2008. Michael J. Fox, Christopher Lloyd. LASER HOTLINE, proprietor Dipl.-Ing. (FH) Wolfram Hannemann, MBKS, Talstr. 3, 70825 Korntal. Phone: 0711-832188, Fax: 0711-8380518, E-mail: [email protected], Web: www.laserhotline.de

Editorial. Hello laserdisc and DVD fans, dear film lovers! The bad news first: our flat shipping rate for standard parcels goes up on 1 July 2008. A regular parcel will then cost EUR 6 (previously EUR 4), and a cash-on-delivery shipment rises to EUR 13 (previously EUR 9.50). Unfortunately this price increase has become necessary because our shipping partner DHL is raising its transport charges on 1 July 2008.

Whether the subject is current films, film festivals she has attended, or even a nostalgic look back at the much-loved LaserDisc, the aspiring journalist knows what she is writing about. We met Anna during her visit to the 1st Widescreen Weekend at the Schauburg in Karlsruhe. One word led to another, and the young woman was soon eager to write for the Laser Hotline Newsletter. We […]

[…] year and, a few weeks ago, even reached German cinemas on general release. Anyone who missed the film there, or (understandably) simply wants to see it again, can add the US DVD of the title to their collection from September. A date for a German release has not yet been announced, but OUTSOURCED will certainly come out in Germany this year as well.

Ghost Hound (Netflix Instant Watch)

From the creator of "Ghost in the Shell" comes this paranormal anime about how the lives of three high school boys with seemingly little in common become intertwined. A visit to an abandoned hospital unlocks their ability to have out-of-body experiences. In spirit form, they resemble "Casper the Friendly Ghost" and can fly around unseen, until they encounter other spirits and certain people who can see and hear them. When Taro, the central character (left), was very young, he and his sister Mizuka were kidnapped; Taro was the sole survivor of the ordeal, something that still haunts him. Masayuki (center) is the wiseguy who lives with the knowledge that his bullying caused a former classmate to curse him before jumping to his death from the school rooftop. Makoto (right), the antisocial one, has never been able to get his father's suicide, or his mother's abandonment of him, out of his head. Enter Miyako, a mysterious girl who lives at the local shrine with her father. Her sensitivity allows her to see the boys when they are in spirit form, but it also makes her susceptible to possession. Eventually, Taro begins to suspect that she is the reincarnation of his sister. This is a mostly serious anime, sometimes creepy, that devotes entire episodes to illustrating certain psychological facts or theories and how they relate to advancing the plot. 22 episodes; worth a look, but nothing special. -Walt.

MAD SCIENCE! Ab Science Inc

Program guidebook, "Leaders in Industry." WARNING! MAY CONTAIN: Highly Volatile Materials, Evil Sentient Robots, Violations of Space-Time Laws. April 2-4, 2010.

Table of contents: Letters from the Co-Chairs, 3; Guests of Honor, 4; Events, 10; Video Programming, 15; Panels & Workshops, 18; Artists' Alley, 28; Dealers Room, 32; Room Directory, 34; Maps, 35; Where to Eat, 41; Tipping Guide, 48; Getting Around, 49; Rules, 50; Volunteering, 55; Staff, 58; Sponsors, 61; Fun & Games, 62; Autographs, 64.

In memory of Todd MacDonald: "We will miss and love you always, Todd. Thank you so much for being a friend, a staffer, and for the support you've always offered, selflessly and without hesitation." -Andrea Finnin

Letters from the Co-Chairs

Anime Boston has given me unique opportunities for growth, and I have become closer to people I already knew outside of the convention. This strengthening of bonds brought me back each year, but 2010 is different. In the summer of 2009, Anime Boston lost a dear friend and veteran staffer when Todd MacDonald passed away. When Todd joined staff in 2002, it was only because I begged. Few on staff imagined that our three-day convention was going to be such an amazing success.

Hello everyone, welcome to Anime Boston! I hope you all had a good year, though I know most of us had a pretty bad one, what with the economy, rising healthcare costs and natural disasters (donate to Haiti!). At Anime Boston, I hope we can provide you with at least a little enjoyment. We've been working long and hard to get composer Nobuo Uematsu, best known for scoring most of the music for the Final Fantasy games as well as other Square Enix games such […]

4. Ghost in the Shell. Story and art: Masamune Shirow. Planeta DeAgostini. By Antoni Guiral

The author. Masamune Shirow (pen name of Masanori Ota; born in Kobe, Japan, in 1961) dislikes celebrity: he gives no interviews, hardly ever travels and, moreover, works alone, without a studio behind him, unlike most manga authors (mangaka) of some renown. His professional career began in 1985 at Seishinsha with the series Black Magic; already in this first work he showed a particular interest in technology and its relationship with human beings and with politics. The series Appleseed, published between 1985 and 1989, was followed by others such as Dominion (one of his most humorous works, from 1986), Exon Depot (a strange wordless manga, closer to an illustrated book than to a comic, from 1990), Ghost in the Shell (1991) and Orion (1993). These works made him one of the manga authors most highly rated by readers and critics, both in Japan and in Europe, where he enjoys great prestige as a manga author halfway between the more commercial genre stories and more personal works. In 1995 he published the second part of Dominion, Dominion Conflict, and in 2001 the sequel to Ghost in the Shell, Man-Machine Interface, but since 1992 he has turned his professional and artistic interest more toward illustration (a field in which he has published several books) and toward design for animated series (Gundress) and computer games (where he worked on Orion and Sampaguita).

Edition reviewed: Ghost in the Shell, Planeta DeAgostini, 2002, 351 pages. Retail price: €11.95.

Prices May Change at Any Time

Prices may change at any time, so before ordering please contact us to check the latest prices and stock. The listed prices are for drives supplied fully loaded (full isi)!!!! HDD prices as of 14-02-2016. Promo valid while stocks last!!!

External
My Passport Ultra (new model): 1 TB Rp 1,040,000; 2 TB Rp 1,560,000; 3 TB Rp 2,500,000
WD Elements (new): 500 GB Rp 735,000; 1 TB Rp 990,000; 2 TB (no price listed)
WD My Book Premium Storage (external 3.5", uses adapter): 2 TB Rp 1,650,000; 3 TB Rp 2,070,000; 4 TB Rp 2,700,000; 6 TB Rp 4,200,000
WD Elements Desktop (new model): 2 TB (no price listed); 3 TB Rp 1,950,000
Seagate Falcon Desktop (uses adapter; new model): 2 TB Rp 1,500,000; 3 TB Rp -; 4 TB Rp -
Hitachi Touro Desk PRO: 4 TB (no price listed)
Seagate Falcon: 500 GB Rp 715,000; 1 TB Rp 980,000; 2 TB Rp 1,510,000
Seagate Slim: 500 GB Rp 750,000; 1 TB Rp 1,000,000; 2 TB Rp 1,550,000
Seagate Wireless Up: 1 TB, 2 TB (no prices listed)
Hitachi Touro: 500 GB Rp 740,000; 1 TB Rp 930,000
Hitachi Touro S 7200 rpm: 500 GB Rp 810,000; 1 TB Rp 1,050,000
Transcend 25H3 (anti-shock): 500 GB (no price listed); 1 TB Rp 1,040,000; 2 TB Rp 1,725,000
ADATA HD710 (anti-shock & waterproof): 750 GB (no price listed); 1 TB Rp 1,000,000; 2 TB (no price listed)

Internal
WD Blue: 500 GB Rp 710,000; 1 TB Rp 840,000
WD Green: 2 TB Rp 1,270,000; 3 TB Rp 1,715,000; 4 TB Rp 2,400,000; 5 TB Rp 2,960,000; 6 TB Rp 3,840,000
WD Black: 500 GB Rp 1,025,000; 1 TB Rp 1,285,000; 2 TB Rp 2,055,000; 3 TB Rp 2,680,000; 4 TB Rp 3,460,000
Seagate internal: 500 GB Rp 685,000; 1 TB Rp 835,000; 2 TB Rp 1,215,000; 3 TB Rp 1,655,000; 4 TB Rp 2,370,000
Hitachi internal: 500 GB, 1 TB (no prices listed)
Toshiba internal: 500 GB Rp 630,000; 1 TB (no price listed); 2 TB Rp 1,155,000; 3 TB Rp 1,585,000

For those who want […]

Protoculture Addicts #97 Contents

Anime News Network's Protoculture Addicts (プロトカルチャー), Issue #97 (July/August 2008)

Spotlight: 14 Gurren Lagann: The Nuts and Bolts of Gurren Lagann, by Paul Hervanack; 18 Maria-sama ga Miteru: Ave Maria; 21 Kujibiki Unbalance; 24 Aria: The Animation.
Preview: 43 Ghost Talker's Daydream.
Anime Stories: 44 Black Cat; 47 Claymore; 50 Doujin Work, by Miyako Matsuda; 53 Kashimashi: Girl Meets Girl, by Miyako Matsuda; 56 Mokke, by Miyako Matsuda; 58 Mononoke, by Miyako Matsuda; 60 Shigurui: Death Frenzy, by Miyako Matsuda.
Anime World: 66 A Production Nightmare: Little Nemo's Misadventures in Slumberland, by Brian Hanson; 69 Anime Beat & J-Pop Treat (The Underneath: Moon Flower); 70 Montreal World Film Festival (Oh-Oku: The Women of the Inner Palace; The Mamiya Brothers), by M. Matsuda & CJ Pelletier.
Anime Voices: 4 Letter From The Editor; 5 Page 5 Editorial; 6 Contributors' Spotlight; 98 Letters.
News: 7 Anime & Manga News; 92 Anime Releases; 94 Related Products Releases; 95 Manga Releases.
Reviews: 73 Live-Action Movies; 76 Books; 77 Novels; 78 Manga; 82 Anime.
Also credited: Jason Green, Bamboo Dong, Rachael Carothers, Miyako Matsuda.

Gurren Lagann © GAINAX • KAZUKI NAKASHIMA / Aniplex • KDE-J • TV TOKYO • DENTSU. Claymore © Norihiro Yago / Shueisha • "Claymore" Production Committee.

Letter from the Editor. Twenty years ago, Claude J. Pelletier and a few of his friends decided to start a Robotech fanzine, while ten years later, in 1998, Justin Sevakis decided to start an anime news website. Yes, 2008 marks both the 20th anniversary of Protoculture Addicts and the 10th anniversary of Anime News Network.

1 07 Ghost 2 11 Eyes 3 801 TTS Airbat 4 Abenobashi Mahou

1. 07 Ghost
2. 11 Eyes
3. 801 TTS Airbat
4. Abenobashi Mahou Shoutengai
5. Abrazo de Primavera (Yaoi)
6. Accelerado (Hentai)
7. AD Police
8. Afro Samurai Resurrection
9. Afro Samurai
10. After the School in the Teacher's Lounge
11. Agent Aika
12. Ah! Megamisama
13. Ah! Megamisama (Movie)
14. Ah! Megamisama (OVA)
15. Ai no Kusabi (Yaoi)
16. Ai Yori Aoshi
17. Ai Yori Aoshi - Enishi -
18. Aika R-16
19. Aika Zero
20. Air
21. Air (OVA)
22. Air Gear
23. Air Tonelico (Movie)
24. Aiso Tsukash (Manga - Yaoi)
25. Aitsu no Daihonmei (Manga - Yaoi)
26. Aitsu no Heart Uihi wo Tsukeru (Manga - Yaoi)
27. Akane Maniac
28. Akane-Iro ni Somaru Saka
29. Akira
30. Akuma no Himitsu (Manga - Yaoi)
31. Amaenaideyo
32. Amaenaideyo - Especial - (OVA)
33. Amaenaideyo Katsu
34. Amagami SS
35. Amame Pecaminosamente (Manga - Yaoi)
37. Amnesia (Movie)
38. Angel Beats
39. Angel Sanctuary
40. Angel's Feathers (Yaoi)
41. Antique Bakery
42. Ao no Exorcist
43. Aoi Bungaku
44. Aoi Hana
45. Appleseed
46. Aquarian Age
47. Araiso (Yaoi)
48. Arakawa Under The Bridge
49. Arashi no Ato (Manga - Yaoi)
50. Argento Soma
51. Aria -Arietta- (OVA)
52. Aria The Animation
53. Aria The Navegation
54. Aria The Origination
55. Aria The Scarlet Ammo
56. Armitage III
57. Asterix El Galo (Movie)
58. Asterix y Cleopatra (Movie)
59. Asterix y La Sorpresa del Cesar (Movie)
60. Asterix y las 12 Pruebas (Movie)
61. Asterix y Los Bretones (Movie)
62. Asterix y Los Vikingos (Movie)
63. Asu no Yoichi
64. Asura Cryin'
65. Asura Cryin' II
66. Asuza
67. Avatar Libro 1 Agua
68. Avatar Libro 2 Tierra
69. Avatar Libro 3 Fuego
70. Avenger
71. Ayakashi Japanese Classic Horror

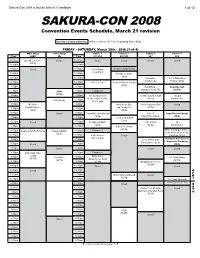

Sakura-Con 2008 Schedule, March 21 Revision 1 of 12

Sakura-Con 2008, Friday-Saturday, March 28th-29th (pages 1-4 of 4), schedule revision of March 21.

Tracks: Panels 1-5; Autographs; Console, Arcade and Music Gaming; Workshop 1; Workshop 2; Karaoke; Main Anime Theater; Classic Anime Theater; Live-Action Theater; Anime Music Video; FUNimation Theater; Omake Anime Theater; Fansub Theater; Miniatures Gaming; Tabletop Gaming; Roleplaying Gaming; Collectible Card; Youth Matsuri; Convention Events; Main Stage; Turbo Stage; Open Mic.

Rooms: 3A, 4B, 615-617, Level 6 West Lobby, 206, 205, 2A, 4C-1, 4C-2, 4C-3, 4C-4, 3B, 2B, 310, 304, 305, 306, 307-308, 211.

Bold text in a heavy outlined box indicates revisions to the Pocket Programming Guide schedule.

Most rooms are listed as closed at 7:00am and 7:30am. Programming listed between 8:00am and 9:00am includes: Karaoke setup; The Melancholy of Voltron 1-4; Train Man: Densha Otoko; Tenchi Muyo GXP 1-3; Shana 1-4; Kirarin Revolution 1-5; Haruhi Suzumiya 1-4; Karaoke; AMV Showcase; Fruits Basket 1-3; Pokémon Trading Figure […]; Pirates of the Cursed Seas. Ratings shown include (SC-PG V, dub), (SC-PG D), (SC-14 VSD, dub), (SC-G) and (SC-PG SD, dub).

Sentai Filmworks

Sentai Filmworks DVD Release Checklist (# to C): a complete list of all 325 anime title releases and their 617 volumes and editions, with catalog numbers when available.

11 Eyes
- Complete Collection (SF-EE100)
A Little Snow Fairy Sugar
- Collection 1 (SF-LSF100)
- Collection 2 (SF-LSF200)
- Complete Collection (Sentai Selects) (SFS-LSF110)
A-Channel: The Animation
- Complete Collection (SF-AC100)
Ajin: Demi-Human
- Complete Collection (SF-AJN100)
- Limited Edition (BD/DVD Combo) (SFC-AJN100)
- Season 2: Complete Collection (SF-AJN200)
- Season 2: Limited Edition (BD/DVD Combo) (SFC-AJN200)
Akame ga Kill!
- Collection 1 (SF-AGK101)
- Collection 1: Limited Edition (BD/DVD Combo) (SFC-AGK101)
- Collection 2 (SF-AGK102)
- Collection 2: Limited Edition (BD/DVD Combo) (SFC-AGK102)
Akane Iro ni Somaru Saka
- Complete Collection (SF-AIS100)
AKB0048
- Season One: Complete Collection (SF-AKB100)
- Next Stage: Complete Collection […]
Azumanga Daioh: The Animation
- Complete Collection (Sentai Selects) (SFS-AZD100)
Bakuon!!
- Complete Collection (SF-BKN100)
Battle Girls: Time Paradox
- Complete Collection (SF-SGO100)
Beautiful Bones: Sakurako's Investigation
- Complete Collection (SF-BBS100)
Beyond the Boundary
- Complete Collection (SF-BTB100)
- Complete Collection: Limited Edition (BR/DVD Combo) (SFC-BTB100)
- I'll Be Here (BD/DVD Combo) (SFC-BTB001)
Black Bullet
- Complete Collection (SF-BKB100)
Blade & Soul
- Complete Collection (SF-BAS100)
Blade Dance of the Elementalers
- Complete Collection (SF-BDE100)
Blue Drop
- The Complete Series (2009, subbed)

Saturday

Saturday schedule, in hourly slots from 10:00 to 22:00. Video rooms, in running order:

Video 1 (524b): Otogi Jushi Akazukin (F), Oh Edo Rocket (F), Macross Frontier (F), Lovely Complex (F), Rental Magica (F), Sisters of Wellber (F), Minami-ke (F), Moyashimon (F), ICE (F), Baccano! (F), Dragonaut (F), Night Wizard (F)
Video 2 (524c): Shiawase Sou no Okojo-san (J), Japanese Game Shows (J)**, Spice and Wolf (J), This is Otakudom (E), Ultimate Sushi Restaurants Pt 1 (LA), Fan Parodies**, S.T.E.A.M. The Movie (E), Macross 25th Anniversary Special (J) (until 00:00)**
Video 3 (525b): Neo Angelique Abyss (J), Myself; Yourself (J), Slayers Revolution (J), Sexy Voice and Robo (LA), Tantei Gakuen Q (LA), Dragonzakura (LA), One Missed Call (LA), Blue Comet SPT (J), Maple Story (J), Doraemon: Nobita's Dinosaur (J), RAIFU (LA), Ecchi Fanservice (J)
Video 4 (525a): H2O - Footprints in the Sand (J), Jyuushin Enbu (J), Yawaraka Shangokush (J), Hatenko Yugi (J), Persona (J), Nabari no Ou (J), Shounen-ai (Yaoi) Celebration (J)** (14+) (until 00:00)**
Video 5 (522bc): School Rumble (J), Kujibiki Unbalance (J), xxxHolic & Tsubasa Chronicles Movies (E), Welcome to the NHK (J), Black Blood Brothers (J), Family Friendly Anime (E)**, Peach Girl (J), Fruits Basket (J), Gurren Lagann (J)

Main Events Room: OP Ceremonies, The 404s: Saturday, Live J-Rock