Mining Source Code to Automatically Split Identifiers for Software Analysis

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Program #6: Word Count

CSc 227 - Program Design and Development, Spring 2014 (McCann) http://www.cs.arizona.edu/classes/cs227/spring14/ Program #6: Word Count. Due Date: March 11th, 2014, at 9:00 p.m. MST. Overview: The UNIX operating system (and its variants, of which Linux is one) includes quite a few useful utility programs. One of those is wc, which is short for Word Count. The purpose of wc is to give users an easy way to determine the size of a text file in terms of the number of lines, words, and bytes it contains. (It can do a bit more, but that’s all of the functionality that we are concerned with for this assignment.) Counting lines is done by looking for “end of line” characters (\n (ASCII 10) for UNIX text files, or the pair \r\n (ASCII 13 and 10) for Windows/DOS text files). Counting words is also straightforward: Any sequence of characters not interrupted by “whitespace” (spaces, tabs, end-of-line characters) is a word. Of course, whitespace characters are characters, and need to be counted as such. A problem with wc is that it generates a very minimal output format. Here’s an example of what wc produces on a Linux system when asked to count the content of a pair of files; we can do better! $ wc prog6a.dat prog6b.dat 2 6 38 prog6a.dat 32 321 1883 prog6b.dat 34 327 1921 total Assignment: Write a Java program (completely documented according to the class documentation guidelines, of course) that counts lines, words, and bytes (characters) of text files.
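The counting rules described in this excerpt can be sketched in a few lines. The following is a minimal illustration in Python rather than the Java the assignment asks for; the function name `count_text` is hypothetical, and the sketch assumes the file content is already in memory as bytes.

```python
from typing import Tuple


def count_text(data: bytes) -> Tuple[int, int, int]:
    """Return (lines, words, bytes) for raw file content, in the spirit of wc."""
    # Every end-of-line marker contains exactly one \n, so counting \n
    # handles both UNIX (\n) and Windows/DOS (\r\n) text files.
    lines = data.count(b"\n")
    # bytes.split() with no argument splits on runs of ASCII whitespace
    # (spaces, tabs, \r, \n), so each remaining piece is one word.
    words = len(data.split())
    # Whitespace bytes are bytes too, so the byte count is the full length.
    return lines, words, len(data)


if __name__ == "__main__":
    sample = b"hello world\nfoo bar baz qux\n"
    print(count_text(sample))  # → (2, 6, 28)
```

Reading the file in binary mode (`open(path, "rb").read()`) before calling the function keeps the byte count faithful to what is on disk.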

Administering UniData on UNIX Platforms

C:\Program Files\Adobe\FrameMaker8\UniData 7.2\7.2rebranded\ADMINUNIX\ADMINUNIXTITLE.fm March 5, 2010 1:34 pm Beta. UniData: Administering UniData on UNIX Platforms. UDT-720-ADMU-1. Notices. Edition: Publication date: July, 2008. Book number: UDT-720-ADMU-1. Product version: UniData 7.2. Copyright © Rocket Software, Inc. 1988-2010. All Rights Reserved. Trademarks: The following trademarks appear in this publication (trademark, then trademark owner): Rocket Software™, Dynamic Connect®, RedBack®, SystemBuilder™, UniData®, UniVerse™, U2™, U2.NET™, U2 Web Development Environment™, wIntegrate®: Rocket Software, Inc.; Microsoft® .NET, Microsoft® Office Excel®, Outlook®, Word, Windows®, Windows® 7, Windows Vista®: Microsoft Corporation; Java™ and all Java-based trademarks and logos: Sun Microsystems, Inc.; UNIX®: X/Open Company Limited. The above trademarks are property of the specified companies in the United States, other countries, or both. All other products or services mentioned in this document may be covered by the trademarks, service marks, or product names as designated by the companies who own or market them. License agreement: This software and the associated documentation are proprietary and confidential to Rocket Software, Inc., are furnished under license, and may be used and copied only in accordance with the terms of such license and with the inclusion of the copyright notice.

Classical Quotient Rings of PWD's Robert Gordon

PROCEEDINGS of the AMERICAN MATHEMATICAL SOCIETY Volume 36, Number 1, November 1972 CLASSICAL QUOTIENT RINGS OF PWD'S ROBERT GORDON Abstract. Piecewise domains which are right orders in semiprimary rings are characterized. An example is given showing the result obtained is "best possible". A further example is obtained of a prime right Goldie ring possessing a regular element which becomes a left zero divisor in some prime overring. This example leads to the construction of a PWD R not satisfying the regularity condition, but for which R/N(R) is right Goldie. Introduction. The principal result of this note is a characterization of PWD's (piecewise domains) which are right orders in semiprimary rings. The main device employed here is L. W. Small's concept of exhaustive set. Probably the most important consequence of the characterization is that a right noetherian PWD is a right order in a right artinian PWD. In the more general setting our result is less satisfactory because an assumption is made about each of the factor rings R/T^R) (see the theorem in §1). However, an example given at the end of §1 shows that this is unavoidable: There we exhibit a right Goldie PWD R having R/N(R) right Goldie which is not a right order in a semiprimary ring. In §2, as a problem closely related to the existence of classical quotient rings, we study the regularity condition in PWD's. Necessary and sufficient conditions are obtained for a PWD R to satisfy the regularity condition in terms of the torsion-freeness of R as an R/N(R)-module.

Grundfos LS Split Case Pumps 50Hz: 37-2240kW / 60Hz: 75-2500kW

GRUNDFOS LS SPLIT CASE PUMPS 50HZ: 37-2240KW / 60HZ: 75-2500KW. INCREASED PUMP PERFORMANCE AND SYSTEM EFFICIENCY. INTRODUCTION: HIGH EFFICIENCY SPLIT-CASE PUMPS. GRUNDFOS LS LONG-COUPLED, HORIZONTAL SPLIT-CASE, DOUBLE SUCTION PUMPS ARE SINGLE-STAGE, NON-SELF-PRIMING, CENTRIFUGAL VOLUTE PUMPS. The LS pumps are designed especially for water utility applications and manufactured according to the highest Grundfos quality standards. These high efficiency pumps have a wide efficiency range and very low NPSHr, which ensures safe and economic operation even when the actual flow deviates from the designed duty point. APPLICATIONS. WATER SUPPLY: water intake; water boosting; water transportation; backwashing. IRRIGATION: field irrigation (flooding); sprinkler irrigation. QUALITY DESIGN AND VERSATILE: Grundfos LS pumps are in-line design with a radial suction port and radial discharge port. The flanges are in accordance with DIN standard. Pump performance is in accordance with ISO9906 G2. Double volute design: The compensated double-volute design virtually eliminates radial forces on the shaft and ensures smooth performance throughout the entire operating range. Low NPSHr: The NPSHr of the LS pumps at rated point is about 2m-5m and this fits most customer demands. In addition, Grundfos provides customised solutions for special requirements. High efficiency: LS pumps have a very high efficiency, not only at the rated flow but also within a wide flow range. The pump retains high efficiency even when the actual flow deviates by +/-20% of rated flow. The feature enables the system to operate at high efficiency

Hadoop Distributed File System (HDFS)

CHAPTER 1 Hadoop Distributed File System (HDFS). The Hadoop Distributed File System (HDFS) is a Java-based distributed, scalable, and portable filesystem designed to span large clusters of commodity servers. The design of HDFS is based on GFS, the Google File System, which is described in a paper published by Google. Like many other distributed filesystems, HDFS holds a large amount of data and provides transparent access to many clients distributed across a network. Where HDFS excels is in its ability to store very large files in a reliable and scalable manner. HDFS is designed to store a lot of information, typically petabytes (for very large files), gigabytes, and terabytes. This is accomplished by using a block-structured filesystem. Individual files are split into fixed-size blocks that are stored on machines across the cluster. Files made of several blocks generally do not have all of their blocks stored on a single machine. HDFS ensures reliability by replicating blocks and distributing the replicas across the cluster. The default replication factor is three, meaning that each block exists three times on the cluster. Block-level replication enables data availability even when machines fail. This chapter begins by introducing the core concepts of HDFS and explains how to interact with the filesystem using the native built-in commands. After a few examples, a Python client library is introduced that enables HDFS to be accessed programmatically from within Python applications. Overview of HDFS: The architectural design of HDFS is composed of two processes: a process known as the NameNode holds the metadata for the filesystem, and one or more DataNode processes store the blocks that make up the files.
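The block-structured design described in this excerpt can be illustrated with a toy model. The sketch below is not HDFS code and does not use any HDFS client library; the function names, the node names, and the round-robin placement are all assumptions made for illustration (real HDFS placement is decided by the NameNode and is more involved). Only the replication factor of three comes from the excerpt.

```python
from typing import Dict, List


def split_into_blocks(file_size: int, block_size: int) -> List[int]:
    """Return the sizes of the fixed-size blocks a file is split into.

    Every block is block_size bytes except possibly the last one.
    """
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size must be > 0")
    full_blocks, remainder = divmod(file_size, block_size)
    return [block_size] * full_blocks + ([remainder] if remainder else [])


def place_replicas(num_blocks: int, nodes: List[str],
                   replication: int = 3) -> Dict[int, List[str]]:
    """Assign each block to `replication` distinct nodes.

    Hypothetical round-robin placement, used only to show that the
    replicas of one block land on different machines.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        block: [nodes[(block + r) % len(nodes)] for r in range(replication)]
        for block in range(num_blocks)
    }


if __name__ == "__main__":
    # Block size of 128 units is an assumed value, not taken from the excerpt.
    blocks = split_into_blocks(file_size=300, block_size=128)
    print(blocks)  # → [128, 128, 44]
    print(place_replicas(len(blocks), ["node1", "node2", "node3", "node4"]))
```

With four nodes and three replicas per block, any single node can fail and every block still has at least two surviving copies, which is the availability property the excerpt describes.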

Unix/Linux Command Reference

Unix/Linux Command Reference. System Info: date – show the current date and time; cal – show this month's calendar; uptime – show current uptime; w – display who is online; whoami – who you are logged in as; finger user – display information about user; uname -a – show kernel information; cat /proc/cpuinfo – cpu information; cat /proc/meminfo – memory information; man command – show the manual for command; df – show disk usage; du – show directory space usage; free – show memory and swap usage; whereis app – show possible locations of app; which app – show which app will be run by default. Compression: tar cf file.tar files – create a tar named file.tar containing files; tar xf file.tar – extract the files from file.tar; tar czf file.tar.gz files – create a tar with. File Commands: ls – directory listing; ls -al – formatted listing with hidden files; cd dir – change directory to dir; cd – change to home; pwd – show current directory; mkdir dir – create a directory dir; rm file – delete file; rm -r dir – delete directory dir; rm -f file – force remove file; rm -rf dir – force remove directory dir *; cp file1 file2 – copy file1 to file2; cp -r dir1 dir2 – copy dir1 to dir2; create dir2 if it doesn't exist; mv file1 file2 – rename or move file1 to file2; if file2 is an existing directory, moves file1 into directory file2; ln -s file link – create symbolic link link to file; touch file – create or update file; cat > file – places standard input into file; more file – output the contents of file; head file – output the first 10 lines of file; tail file – output the last 10 lines

LS-Split Dispatching Laser Power

LASEA | MODULES LS-Split: Dispatching laser power. High power ultrashort pulse lasers are now commercially available, with powers of more than 100 W and with high energy per pulse. Unfortunately, despite the minimized thermal effects of these ultrashort pulses, most microprocessing applications will still suffer from heat degradation above 20 W, and even more for small parts, where the heat cannot be spread over large surfaces. To reach a high rate of production while maintaining the quality offered by ultrashort pulse lasers, parallel processing is seen as the only efficient solution. While splitting the beam into several spots close to each other is appealing, the flexibility of this technique still needs to improve before it can be applied in industry. The only proven and efficient way to increase productivity is by splitting the beam down to several laser heads, each one processing identical workpieces. Laser scanners such as the LS-Scan being components with MTBFs of more than 30,000 hrs, the MTBF of a complete machine with several juxtaposed LS-Scans is barely decreased, while its productivity can easily be tripled. To obtain 2 beams, one LS-Split is needed. To get a third beam, another LS-Split must be added. LS-Splits can be cascaded indefinitely until reaching the desired number of beams. Then the power balance is easily performed by rotating the polarization with the LS-Splits' entrance waveplates. Two LS-Split versions are available: The LS-Split Access allows a manual setting of the power balance. The LS-Split Flex is the same but motorized to be able to easily adapt the power balance during a process, bringing for example the whole power on one single head.

Dual-Stack Split for SAP Systems Based on SAP NetWeaver 7.5, and Systems Upgraded to SAP Solution Manager 7.2 Java, on UNIX

Operations Guide | PUBLIC. Software Provisioning Manager 1.0 SP32. Document Version: 3.3 – 2021-06-21. Dual-Stack Split for SAP Systems Based on SAP NetWeaver 7.5, and Systems Upgraded to SAP Solution Manager 7.2 Java, on UNIX. © 2021 SAP SE or an SAP affiliate company. All rights reserved. THE BEST RUN. Content: 1 About This Document; 1.1 Use Cases of Dual-Stack Split; 1.2 About Software Provisioning Manager 1.0; 1.3 SAP Products Based on SAP NetWeaver 7.5, and Systems Upgraded to SAP Solution Manager 7.2, Supported for Dual-Stack Split Using Software Provisioning Manager 1.0; 1.4 Naming Conventions; 1.5 New Features; 1.6 Constraints; 1.7 SAP Notes for the Dual-Stack Split; 1.8 Accessing the SAP Library; 1.9 How to Use this Guide; 2 Split Options Covered by this Guide; 2.1 Split Option: Move Java Database; Operating System and Database Migration During Dual-Stack Split

Troubleshooting TCP/IP

CHAPTER 7 Troubleshooting TCP/IP The sections in this chapter describe common features of TCP/IP and provide solutions to some of the most common TCP/IP problems. The following items will be covered: • TCP/IP Introduction • Tools for Troubleshooting IP Problems • General IP Troubleshooting Theory and Suggestions • Troubleshooting Basic IP Connectivity • Troubleshooting Physical Connectivity Problems • Troubleshooting Layer 3 Problems • Troubleshooting Hot Standby Router Protocol (HSRP) TCP/IP Introduction In the mid-1970s, the Defense Advanced Research Projects Agency (DARPA) became interested in establishing a packet-switched network to provide communications between research institutions in the United States. DARPA and other government organizations understood the potential of packet-switched technology and were just beginning to face the problem that virtually all companies with networks now have—communication between dissimilar computer systems. With the goal of heterogeneous connectivity in mind, DARPA funded research by Stanford University and Bolt, Beranek, and Newman (BBN) to create a series of communication protocols. The result of this development effort, completed in the late 1970s, was the Internet Protocol suite, of which the Transmission Control Protocol (TCP) and the Internet Protocol (IP) are the two best-known protocols. The most widespread implementation of TCP/IP is IPv4 (or IP version 4). In 1995, a new standard, RFC 1883—which addressed some of the problems with IPv4, including address space limitations—was proposed. This new version is called IPv6. Although a lot of work has gone into developing IPv6, no wide-scale deployment has occurred; because of this, IPv6 has been excluded from this text. Internet Protocols Internet protocols can be used to communicate across any set of interconnected networks. -

DC Load Application Note

DC Electronic Load Applications and Examples. Application Note V 3032009. 22820 Savi Ranch Parkway, Yorba Linda CA, 92887-4610, www.bkprecision.com. Table of Contents: INTRODUCTION: Overview of software examples. POWER SUPPLY TESTING: Load Transient Response; Load Regulation; Current Limiting. BATTERY TESTING: Battery Discharge Curves; Battery Internal Resistances. PERFORMANCE TESTING OF DC LOADS: Slew Rate.

Some UNIX Commands At

CTEC1863/2007F Operating Systems – UNIX Commands. Some UNIX Commands. at Syntax: at time [day] [file] Description: Provides ability to perform UNIX commands at some time in the future. At time time on day day, the commands in file file will be executed. Comment: Often used to do time-consuming work during off-peak hours, or to remind yourself to do something during the day. Other at-related commands: atq - Dump the contents of the at event queue. atrm - Remove at jobs from the queue. Examples: # at 0300 calendar | mail john ^D # # cat > DOTHIS nroff -ms doc1 >> doc.fmt nroff -ms file2 >> doc.fmt spell doc.fmt > SPELLerrs ^D # at 0000 DOTHIS. cal Syntax: cal [month] year Description: Prints a calendar for the specified year or month. The year starts from year 0 [the calendar switched from Julian to Gregorian in 1752], so you typically must include the century. Comment: Typical syntax: cal 11 1997 cal 1998. calendar Syntax: calendar [-] Description: You must set up a file, typically in your home directory, called calendar. This is a database of events. Comment: Each event must have a date mentioned in it: Sept 10, 12/5, Aug 21 1998, ... Each event scheduled for the day or the next day will be listed on the screen. lpr Syntax: lpr [OPTIONS] [FILE] [FILE] ... Description: The files are queued to be output to the printer. Comment: On some UNIX systems, this command is called lp, with slightly different options. Both lpr and lp first make a copy of the file(s) to be printed in the spool directory.
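As a rough analogue of the `cal 11 1997` usage in this excerpt, Python's standard `calendar` module can render the same kind of month grid. This is only a sketch: the helper name `month_calendar` is hypothetical, and Python's module uses the proleptic Gregorian calendar throughout, so its output for dates around the 1752 switchover will not match what `cal` prints.

```python
import calendar


def month_calendar(year: int, month: int) -> str:
    """Render a month as text with weeks starting on Sunday, like cal."""
    # As with cal, the full year including the century must be given:
    # passing 97 would mean the year 97, not 1997.
    text_cal = calendar.TextCalendar(firstweekday=calendar.SUNDAY)
    return text_cal.formatmonth(year, month)


if __name__ == "__main__":
    print(month_calendar(1997, 11))
```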

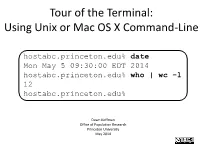

Tour of the Terminal: Using Unix Or Mac OS X Command-Line

Tour of the Terminal: Using Unix or Mac OS X Command-Line. hostabc.princeton.edu% date Mon May 5 09:30:00 EDT 2014 hostabc.princeton.edu% who | wc -l 12 hostabc.princeton.edu% Dawn Koffman, Office of Population Research, Princeton University, May 2014. Contents: Introduction; Files; Directories; Commands; Shell Programs; Stream Editor: sed. Introduction: Operating Systems; Command-Line Interface; Shell; Unix Philosophy; Command Execution Cycle; Command History. Command-Line Interface [diagram: the user (human) reaches the operating system (software) through either a command-line interface or a point-and-click (GUI) interface; the operating system consists of programs (text editors, compilers, commands for working with the file system, many other utilities) and the kernel (manages computing resources: memory, hard-drive, time); the computer (hardware) consists of cpu, memory, hard-drive]. Comparison: the command-line interface may have a steeper learning curve, BUT provides constructs that can make many tasks very easy, and scales up very well when you have lots of data, programs, or tasks to accomplish; the point-and-click interface may be more intuitive, BUT can also be much more human-manual-labor intensive, and often does not scale up well when you have lots of data, programs, or tasks to accomplish. Shell: The command-line interface provided by Unix and Mac OS X is called a shell. A shell: - prompts user for commands - interprets user commands - passes them onto the rest of the operating system, which is hidden from the user. How do you access a shell? - if you have an account on a machine running Unix or Linux, just log in. A default shell will be running. - if you are using a Mac, run the Terminal app.