20 Questions a Journalist Should Ask About Poll Results

Recommended publications

Council Accused of Pro-Roe Push Poll

MELVILLE CITY HERALD, Volume 25 No 19. Melville City’s own INDEPENDENT newspaper. Cliff Street, Fremantle. Saturday May 10, 2014. Leeming to Kardinya Edition - Letterboxed to Leeming, Bateman, Bull Creek, Kardinya, Murdoch, Murdoch University, Willagee and Winthrop. www.fremantleherald.com/melvilles *Fortnightly Email: [email protected] Bell tolls for wetlands, by STEVE GRANT. • Save Beeliar Wetlands members are reeling following speculation the Abbott government is set to fund the construction of Roe 8 by making it WA’s first toll road. They’re promising mass action to stop the project. Photo by Matthew Dwyer. Save Beeliar Wetlands says the Barnett government should stop pushing Roe 8 until appeals against its environmental approval have been heard. Their call follows WA transport minister Dean Nalder confirming he’s negotiating funding with the Abbott government that would be tied to the highway slugging truckies with WA’s first toll road (The Sunday Times, May 4, 2014). SBW member Nandi Chinna told the Herald her group was still trying to digest the information, given premier Colin Barnett had said the long-planned extension through the Ramsar-listed wetlands wouldn’t be built in this term of parliament. She says the announcement makes a mockery of government processes, with 16 appeals against the EPA’s environmental approval of the road still to be heard. Find the Fake Ad & WIN a Chance for a Feast for 2, 42 Mews Rd, Fremantle. For details, please see Competitions. Want good pay? Some easy, gentle exercise? Deliver the Herald to local letterboxes! It’s perfect for all ages, from young teens to seniors looking to keep active. If you’re reliable we may just have a round near you (we deliver -

Telephone Calls and Text Messages in State Senate, District

STATE OF MAINE Commission Meeting 09/30/2020 COMMISSION ON GOVERNMENTAL ETHICS Agenda Item #5 AND ELECTION PRACTICES 135 STATE HOUSE STATION AUGUSTA, MAINE 04333-0135 To: Commission From: Michael Dunn, Esq., Political Committee Registrar Date: September 22, 2020 Re: Complaint against Public Opinion Research On September 15, 2020, the Commission received a complaint from the Lincoln County Democratic Committee (the “LCDC”) alleging that unknown persons acting under the name of Public Opinion Research have engaged in a “push poll” to influence the general election for State Senate, District #13. ETH 1-8. Voters have received phone calls from live callers asking questions that are likely designed to influence their vote and text messages linking to an online survey conducted through the SurveyMonkey.com containing similar questions. According to the LCDC, the organizers of the telephone campaign have violated three disclosure requirements in Maine Election Law: • the callers have not provided any “disclaimer statement” identifying who paid for the calls, • the calls do not meet special disclosure requirements applicable to push polls in Maine, and • no independent expenditure report has been filed relating to the calls, text messages, and online survey. The LCDC requests that the Commission initiate an investigation into these activities. The Commission staff has been unable to make contact with Public Opinion Research as part of our preliminary investigation. We cannot find an online presence for a polling or political consulting firm with that name. A “push poll” is a paid influence campaign conducted in the guise of a telephone survey. The telephone calls are designed to “push” the listener away from one candidate and towards another candidate. -

Ye Olde Publisher Vs. Mark Gerzon - Push Polls! Opinion Questions of the Day

• Letters: • L-Chastain chastizes Bag Ban People! • L-Ruemmler says Water District didn’t answer? • L-Luppens opine Eagle-Vail collapse imminent! • Avon & di Simone’s bag ban destroys freedom! Business Briefs – www.BusinessBriefs.net – Volume 10, Number 9 - July 27 thru Aug 30th, 2017. Distributed free online & to almost 900 locations in Vail, Beaver Creek & Eagle County, Colorado. 970-280-5555. Rep. Diane Mitsch Bush broke a promise to serve! Ye Olde Publisher vs. Mark Gerzon - push polls! Injury Attorneys • Auto/Motorcycle • Ski/Snowboard • Dog Bites • Other Injuries. Free Consult, Percentage Fee. Bloch & Chapleau 970.926.1700 VailJustice.com Edwards/Denver. The Good, The Bad, The Ugly! • The Stupid: Most of the Avon Council - potted plants in street! • The Surprising: The amount of locals who don’t use VVMC! • The Defective Thinkers: Our elected Climate Change nutcases! • The Obfuscators: Dems afraid of illegal voting commission! • The Liars: Republicans who lied to voters about ObamaCare! • They Don’t Care - 90% of Americans don’t care about Russia. • Sad: The loss of Lew Meskiman, Ursula Fricker, Dick Blair! Trace Tyler Agency • 97 Main Street • Suite W-106 • Edwards, CO 81632 • Phone: 970-926-4370 • Email: ttyler@amfam.com “We are proud to serve the Vail Valley providing the community with excellent service and products to meet all your insurance needs. -

Actualities and Electronic Press Kits, 40, 85, 122 Advertising, Radio

Cambridge University Press, 978-0-521-84749-0, New Media Campaigns and the Managed Citizen, Philip N. Howard. Index: actualities and electronic press kits, 40, 85, 122 • advertising, radio, television, and Web site, 88–94 • affinity networks, 85, 139, 144–147, 158 • AFL-CIO, 6, 138 • Agora, GrassrootsActivist.org, 120 • America Coming Together (ACT), 18 • American Association of Retired Persons (AARP), 137, 160 • American Civil Liberties Union (ACLU), 137 • Amnesty International, 84, 85 • astroturf or implanted campaign. See campaigns • Astroturf Compiler Software, Astroturf-Lobby.org, 86 • Astroturf-Lobby.org, 83–100, 175–176 • avatars, 116, 174 • Barber, Benjamin, 102, 149 • Baudrillard, Jean, 67 • blogging, 16, 121, 239 • Bourdieu, Pierre, 69, 70, 212 • campaign managers. See political consultants • campaign spending, 91, 146 • campaigns: candidate type, 145; grassroots or social movement type, 144; implanted or Astroturf type, 98, 145, 177; lobby group type, 144. See also hypermedia campaigns; issue campaigns; mass media campaigns; presidential campaigns • CandidateShopper, Voting.com, 113 • capo managers, 148 • categorization: and identity, 37, 183, 188; as political negotiation, 133–134, 141; managed paradoxes, 86, 154, 157 • chat and listservs, 112, 239 • ChoicePoint Technologies, 15 • citizenship: historical context, 185; privatized, 189–190; shadow, 187–189; thin, 185–187 • coding generals, 148 • Columbia School. See Lazarsfeld, Paul; Merton, Robert • conferences and conventions: Democratic and Republican National Convention, 35, 72, 85, 166; and group identity, 34–36, 50 • cookie, 239–240 • C-SPAN, 65 • cultural frame, 67, 172, 205. See also media effects. © in this web service Cambridge University Press, www.cambridge.org

A Tale of Two Paranoids: a Critical Analysis of the Use of the Paranoid Style and Public Secrecy by Donald Trump and Viktor Orbán

Secrecy and Society ISSN: 2377-6188 Volume 1 Number 2 Secrecy and Authoritarianism Article 3 February 2018 A Tale of Two Paranoids: A Critical Analysis of the Use of the Paranoid Style and Public Secrecy by Donald Trump and Viktor Orbán Andria Timmer Christopher Newport University, [email protected] Joseph Sery Christopher Newport University, [email protected] Sean Thomas Connable Christopher Newport University, [email protected] Jennifer Billinson Christopher Newport University, [email protected] Follow this and additional works at: https://scholarworks.sjsu.edu/secrecyandsociety Part of the Social and Cultural Anthropology Commons, and the Speech and Rhetorical Studies Commons Recommended Citation Timmer, Andria; Joseph Sery; Sean Thomas Connable; and Jennifer Billinson. 2018. "A Tale of Two Paranoids: A Critical Analysis of the Use of the Paranoid Style and Public Secrecy by Donald Trump and Viktor Orbán." Secrecy and Society 1(2). https://doi.org/10.31979/2377-6188.2018.010203 https://scholarworks.sjsu.edu/secrecyandsociety/vol1/iss2/3 This Article is brought to you for free and open access by the School of Information at SJSU ScholarWorks. It has been accepted for inclusion in Secrecy and Society by an authorized administrator of SJSU ScholarWorks. For more information, please contact [email protected]. This work is licensed under a Creative Commons Attribution 4.0 License. A Tale of Two Paranoids: A Critical Analysis of the Use of the Paranoid Style and Public Secrecy by Donald Trump and Viktor Orbán Abstract Within the last decade, a rising tide of right-wing populism across the globe has inspired a renewed push toward nationalism. -

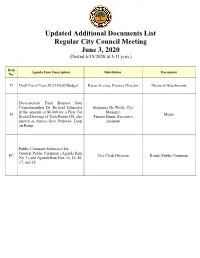

Updated Additional Documents List Regular City Council Meeting June 3, 2020 (Posted 6/15/2020 at 5:11 P.M.)

Updated Additional Documents List, Regular City Council Meeting, June 3, 2020 (Posted 6/15/2020 at 5:11 p.m.)

| Item No. | Agenda Item Description | Distributor | Document |
|---|---|---|---|
| 17 | Draft Fiscal Year 20/21 Draft Budget | Karen Aceves, Finance Director | Memo w/Attachments |
| 18 | Discretionary Fund Request from Councilmember Dr. Richard Schneider in the amount of $6,000 for a First Cut Scaled Drawing of State Route 110, also known as Arroyo Seco Parkway, Loop on Ramp | Stephanie De Wolfe, City Manager; Tamara Binns, Executive Assistant | Memo |
| PC | Public Comment Submitted for: General Public Comment (Agenda Item No. 3); and Agenda Item Nos. 11, 14, 16, 17, and 18 | City Clerk Division | E-mail Public Comment |

City of South Pasadena Finance Department Memo. Date: June 2, 2020. To: The Honorable City Council. Via: Stephanie DeWolfe, City Manager. From: Karen Aceves, Finance Director. Re: June 3, 2020 City Council Meeting Item No. 17 Additional Document – Draft Fiscal Year 20/21 Draft Budget. Attached is an additional document which includes the revenue line items for non-general funds.

Revenue Detail

| Acct | Account Title | Actual 2016/17 | Actual 2017/18 | Actual 2018/19 | Budget 2019/20 | Estimated 2019/20 | Proposed 2020/21 |
|---|---|---|---|---|---|---|---|
| 9911-000 | Transfers from Other Fund | 81,711 | - | 200,000 | 95,000 | 301,163 | 320,000 |
| | Transfers In | 81,711 | - | 200,000 | 95,000 | 301,163 | 320,000 |
| | 103 - INSURANCE FUND TOTAL | 81,711 | - | 200,000 | 95,000 | 301,163 | 320,000 |
| 9911-000 | Transfers from Other Fund | 3,505,451 | - | 1,100,000 | 965,000 | 965,000 | 500,000 |
| | Transfers In | 3,505,451 | - | 1,100,000 | 965,000 | 965,000 | 500,000 |

104 - STREET IMPROVEMENTS -

Political Consulting and the Legislative Process. Douglas A

University of Massachusetts Amherst ScholarWorks@UMass Amherst Doctoral Dissertations 1896 - February 2014 1-1-2001 Post-electoral consulting: political consulting and the legislative process. Douglas A. Lathrop University of Massachusetts Amherst Follow this and additional works at: https://scholarworks.umass.edu/dissertations_1 Recommended Citation Lathrop, Douglas A., "Post-electoral consulting: political consulting and the legislative process." (2001). Doctoral Dissertations 1896 - February 2014. 1990. https://scholarworks.umass.edu/dissertations_1/1990 This Open Access Dissertation is brought to you for free and open access by ScholarWorks@UMass Amherst. It has been accepted for inclusion in Doctoral Dissertations 1896 - February 2014 by an authorized administrator of ScholarWorks@UMass Amherst. For more information, please contact [email protected]. POST-ELECTORAL CONSULTING: POLITICAL CONSULTING AND THE LEGISLATIVE PROCESS A Dissertation Presented by DOUGLAS A. LATHROP Submitted to the Graduate School of the University of Massachusetts-Amherst in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY May 2001 Political Science © Copyright by Douglas A. Lathrop 2001 All rights reserved POST-ELECTORAL CONSULTING: POLITICAL CONSULTING AND THE LEGISLATIVE PROCESS A Dissertation Presented by DOUGLAS A. LATHROP Approved as to style and content by: Jerome Mileur, Department Chair, Political Science DEDICATION To my parents ACKNOWLEDGMENTS I would like to extend my heartfelt appreciation and immeasurable gratitude to Jerry Mileur and Jeff Sedgwick. I doubt that I could have finished graduate school, much less finished a dissertation, without their support and interest in my professional goals. A special thanks to my outside reader, Jarice Hanson, who provided some helpful suggestions from an alternate perspective. -

Council Accused of Pro-Roe Push Poll

FREMANTLE HERALD, Volume 25 No 19. Fremantle’s own INDEPENDENT newspaper. 1 Cliff Street, Fremantle. Saturday May 10, 2014. Letterboxed to Fremantle, Beaconsfield, East Fremantle, Hilton, North Fremantle, O’Connor, Samson, South Fremantle and White Gum Valley. Ph 30 7727 Fax 30 7726 www.fremantleherald.com Email newsfremantleherald.com Bell tolls for wetlands, by STEVE GRANT. • Save Beeliar Wetlands members are reeling following speculation the Abbott government is set to fund the construction of Roe by making it WA’s first toll road. They’re promising mass action to stop the project. Photo by Matthew Dwyer. SAVE Beeliar Wetlands says the Barnett government should stop pushing Roe until appeals against its environmental approval have been heard. Their call follows WA transport minister Dean Nalder confirming he’s negotiating funding with the Abbott government that would be tied to the highway slugging truckies with WA’s first toll road (The Sunday Times, May 4, 2014). SBW member Nandi Chinna told the Herald her group was still trying to digest the information, given premier Colin Barnett had said the long-planned extension through the Ramsar-listed wetlands wouldn’t be built in this term of parliament. She says the announcement makes a mockery of government processes, with 16 appeals against the EPA’s environmental approval of the road still to be heard. Find the Fake Ad & WIN a Chance for a Feast for 2, 42 Mews Rd, Fremantle. For details please see Competitions. Want good pay? Some easy, gentle exercise? Deliver the Herald to local letterboxes! It’s perfect for all ages, from young teens to seniors looking to keep active. If you’re reliable we may just have a round near you (we deliver -

Cybercrime and the Electoral System

Chapter 10 CYBERCRIME AND THE ELECTORAL SYSTEM Oliver Friedrichs This manuscript is a draft of a chapter that will appear in the forthcoming book "Crimeware" edited by Markus Jakobsson and Zulfikar Ramzan (to be published by Symantec Press and Addison-Wesley Professional). While we first saw the Internet used extensively during the 2004 Presidential election, its use in future presidential elections will clearly overshadow it. It is important to understand the associated risks as political candidates increasingly turn to the Internet to more effectively communicate their positions, rally supporters, and seek to sway critics. These risks include, among others, the dissemination of misinformation, fraud, phishing, malicious code, and the invasion of privacy. Some of these attacks, including those involving the diversion of online campaign donations, have the potential to threaten voters' faith in our electoral system. Our analysis in this chapter focuses on the 2008 presidential election in order to demonstrate the risks involved; however, our findings may just as well apply to any future election. We will show that many of the same risks that we have grown accustomed to on the Internet can also manifest themselves when applied to the election process. It is not difficult for one to conceive numerous attacks that may present themselves which may, to varying degrees, impact the election process. One need only examine attack vectors that already affect consumers and enterprises today in order to apply them to this process. In this chapter we have chosen to analyze those attack vectors that would be most likely to have an immediate and material effect on an election, affecting voters, candidates, or campaign officials. -

Mitchell's Musings: 3Rd Quarter 2014 from Employmentpolicy.Org

Mitchell’s Musings: 3rd Quarter 2014 From employmentpolicy.org Employment Policy Research Network Formatting may differ from original. Typo correction included. Mitchell’s Musings 7-7-14: All Incomes Are Not Created Equal Daniel J.B. Mitchell Our prior musing dealt with results from the California Field Poll on how folks felt about the general state of affairs in the U.S. As noted in that musing, polls are suspect when they get into detailed questions about specific public policies. Typically in those cases, pollsters have to explain the policy because most people don’t follow such matters in detail. The ways in which the explanations and questions are presented have a major impact on the responses. However, when the questions are general, attitudes are likely to be reasonably detected. Table 1 on the final page of this musing shows the results concerning attitudes about income inequality among the California adult population. A majority of adults are dissatisfied with income inequality. But beyond that simple observation, there are surprises. Immigrants, who are often at the lower end of the income distribution (especially Latinos), are less worried about income inequality than other adults. Presumably, the still-lower income level alternatives in their native countries influence the responses. Strongly conservative and strongly liberal respondents are more concerned about inequality than others so both (extreme) sides of the political spectrum are more concerned than centrists. Young people (age 18-29) are less worried about it – despite their well-publicized job problems, issues of college debt, etc. – than are other adults. Lower income respondents are less dissatisfied about inequality than others. -

January 21, 2019 Please Support These Pro-Democracy Bills!

January 21, 2019 Please support these pro-democracy bills! Voting Access: SB 6228 / HB 2292 – Allows persons complying with conditions of community custody to register to vote. This restores voting rights to those convicted of a felony as soon as they are released from prison, regardless of the status of any court fines or fees owed. ➢ If passed, this bill would bring 18,000 voters back into our democracy. ➢ The freedom to vote is a fundamental American value. ➢ Our laws should encourage all eligible voters to participate, including those who have served their time and earned back the freedom to vote. ➢ People of color are overrepresented in WA prisons, but underrepresented in democracy. It’s time we help correct this problem by expanding voting access to those most impacted. SB 6313 / HB 2558 – Voting Opportunities Through Education (VOTE) to increase youth participation - Authorizes 17-year-olds to participate in primary elections if they will be 18 by the next election and adds Engagement Centers for voting & other civic engagement activities on college campuses. ➢ Registering and activating young voters before they move out of their childhood homes leads to higher turnout rates. ➢ Encouraging civic engagement and expanding civic education helps build a more enlightened community actively engaged in the democratic process. ➢ Primaries are an important component to our elections, so those who can vote in an upcoming general or special election, should be able to participate in the primary as well. ➢ Participation in the whole election process is good for the democratic process, civic engagement, and education for young voters, helping to build a lifetime habit of regular voting ➢ The turnout rate for young voters is typically lower compared to other demographics; our democracy should encourage, not make it more difficult, for eligible young adults to participate. -

7 Polls and Elections Election Surveys Are the Polls That Are Most Familiar to Americans for a Number of Reasons

7 Polls and Elections Election surveys are the polls that are most familiar to Americans for a number of reasons. First, there are so many of them, and they receive so much media coverage. Second, election polls generate a lot of controversy and hence media coverage under specific circumstances. One such circumstance is when the preelection polls are wrong. Elections are one of the few topics for which there is a benchmark to measure the accuracy of the poll, the benchmark being the actual Election Day result. We can compare how close the poll prediction was to the actual vote outcome. Keep in mind, however, that if the Election Day outcome was 51–49 and the poll predicted 49–51, the poll’s prediction was actually very accurate when one factors in the sampling error of the poll. Another situation in which election polls generate controversy occurs when multiple preelection polls conducted in close proximity to Election Day yield highly disparate results. That is, some polls might predict a close election, and others predict a more lopsided contest. Although the most prominent election polls in the United States focus on the presidential contest, polling is conducted in almost every election battle, ranging from Congress to state and local races to ballot issues at the state and local levels. Indeed, election polling has become an international phenomenon, even in countries where the history of free and open elections is relatively short. In 2014 and 2015, there were numerous instances in the United States and abroad in which the polls did a poor job of predicting the actual election outcomes.
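
The 51–49 versus 49–51 point in the excerpt above can be checked with a little arithmetic. The following is only a rough sketch, not anything taken from the excerpt: it assumes a simple random sample, the usual normal approximation at 95% confidence, and a hypothetical sample size of 1,000 respondents (the excerpt does not give one). The function name `margin_of_error` is likewise illustrative.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Approximate margin of error for a proportion p estimated from a
    simple random sample of size n (normal approximation, z = 1.96 for 95%)."""
    return z * math.sqrt(p * (1.0 - p) / n)


# Assumption: a hypothetical poll of 1,000 respondents.
sample_size = 1000
poll_share = 0.49      # candidate's share in the poll (from the excerpt's example)
actual_share = 0.51    # candidate's share on Election Day (from the excerpt's example)

moe = margin_of_error(poll_share, sample_size)
low, high = poll_share - moe, poll_share + moe
within_error = low <= actual_share <= high

print(f"margin of error: +/- {moe * 100:.1f} points")
print(f"poll interval: {low * 100:.1f}% to {high * 100:.1f}%")
print(f"actual result inside interval: {within_error}")
```

Under these assumptions the margin of error comes to roughly three percentage points, so an actual result of 51% sits inside the interval around a 49% poll estimate even though the poll named the wrong leader. A smaller sample would widen the interval further; real polls with weighting or clustered designs would generally have a larger effective error than this simple formula suggests.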