Analysis and Optimal Targets Setup of a Multihead Weighing Machine

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

10 Head Multihead Weigher

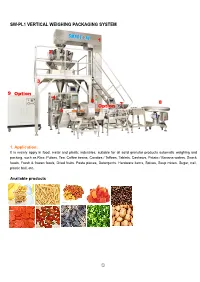

SW-PL1 VERTICAL WEIGHING PACKAGING SYSTEM 1 2 3 9 Option 4 6 8 Option 7 5 1. Application: It is mainly apply in food, metal and plastic industries, suitable for all solid granular products automatic weighing and packing, such as Rice, Pulses, Tea, Coffee beans, Candies / Toffees, Tablets, Cashews, Potato / Banana wafers, Snack foods, Fresh & frozen foods, Dried fruits, Pasta pieces, Detergents, Hardware items, Spices, Soup mixes, Sugar, nail, plastic ball, etc. Available products ① Bag Style Pillow Bag Pillow Gusset Bag 4 Side seal bag 2. Machines List: 1 Z Bucket Conveyor 2 Multihead weigher (Available 10-24 head weigher) 3 Supporting Platform 4 Vertical Form Fill Seal Machine (Bag width 50-400 mm) 5 Output conveyor 6 Metal Detector (OPTION) 7 Check weigher (OPTION) 8 Collect Table (OPTION) 9 Nitrogen Generator (OPTION) 3. Working Principle 1. Filling products on vibrator of Z bucket conveyor on floor; 2. Products will be lifted on the top of multihead weigher for feeding; 3. Multihead weigher will automatic weighing according to preset weight; 4. Preset weight products will be dropped to VFFS machine for bag sealing; 5. Finished package will be outputed to metal detector, if with metal machine will alarm, if not will go to check weigher 6. Product will be passed through check weigher, if over or less weight, it will be rejected, if not, pass to rotary table 7. Products will get to rotary table, and worker put them into paper box 4. Features: 1). Fully-automatically procedures from material feeding, weighing, filling and bag-making, date-printing to finished products output; 2). -

ONE VARIETY, PLEASE Tubes, Bottles and All Lot Sizes from ADA International 4 EDITORIAL PREVIEW

consumer/nonwovens 01 | 2017 The OPTIMA Magazine MORE SERVICE, 10 MORE EFFICIENCY And more freedom: The new HMI TEA DOSING: 22 GENTLE ON THE LEAF The next trend in capsules is here ONE VARIETY, PLEASE Tubes, bottles and all lot sizes from ADA International 4 EDITORIAL PREVIEW IN USE 4 2 years of personalization in real-world applications Technical advantage for ADA International 14 Dosing packed with power 4 Sticking "vitamin bears" through the eye of a needle ADA Cosmetic GmbH: Even most of the niche markets today are dominated by 22 Trend: international competitors and are highly Tea in serving-size packages competitive. Hotel beauty products are Gentle and precise in the process no exception. The European market leader PRACTICAL RESEARCH ADA manages to combine both using packaging technology, quality, cost orienta- FOCUS: TECHNOLOGY SEEING INTO THE FUTURE? tion, customer service and flexibility. 10 The new HMI offers freedom of choice What hardware, Futuristic topics are once more a ma- that are proving their worth in practice at what functions? jor story in this o-com: Cobots, the next customer facilities. For instance, modular generations of HMIs and 3D printing. machine construction, which Optima Con- 19 3D printing at Optima Despite the variety in topics, the focus for sumer takes to its full potential with MOD- For rapid prototyping all projects lies unequivocally on practical ULINE. The system construction kit offers and complex small batches benefit. numerous solutions that are constantly Strategic decisions set the course being enhanced with new additions. 10 26 What are Cobots capable of? even before the beginning of new R&D This is where format flexibility and The helpers in action projects. -

PRODUCE Packaging Solutions EN Keep It Fresh Longer

Global Packaging PRODUCE Packaging Solutions EN Keep it fresh longer Shelf life extending technologies After they are harvested, fruits and vegetables live on and The packing forms a barrier that contains the product and have a limited shelf life. To extend it, ULMA offers you the protects it from the microbial growth, from the phisical- widest range of packaging solutions on the market for the chemical deterioration and from the contamination, keeps Fruit and Vegetables sector helping you in your search for its flavor and odor, and acts like a display for the product the optimal packaging for your product. marketing, information and traceability. After harvesting fruit and vegetables keep breathing, ULMA Packaging collaborates with research, development ripening, and are exposed to the deterioration (ethylene and innovation centers such as ADESVA or AINIA and some synthesis), to the microbial growth and, through the of the most important companies in the sector to develop transpiration, to the loss of moisture (weight) in the product. the perfect solution for each product adjusting the film‘s permeability to match the product‘s respiration rate or The shelf life of your fresh products can be extended by: adding protective gases if they are required. • Storage in cool temperatures (depending on each product). With suitable packaging and different technologies • Processing in optimum hygienic conditions. (macroperforations, microperforations, permeable films • Avoiding physical damage. etc) the permeability and thus the respiration rate of the • Adjusting the film permeability for the appropriate product package can be controlled resulting in an optimal balance in respiration. the concentration of gases inside. -

Intelligent Packaging System

INTELLIGENT PACKAGING SYSTEM 1 INTELLIGENT PACKAGING SYSTEM Introduction Built in 1983, YOUNGSUN is a large manufacturer of packaging As an authorized agent for sales and service of YOUNGSUN in equipment in China and a national high-tech enterprise which Indonesia, Kawan Lama presence will be a peace of mind for our integrates R&D, production, sales and services. The main business customer in Indonesia who use our products. With Kawan Lama includes various liquid filling, powder and granule packaging, presence, 24 hours sales and technical support, warranty and spare universal packaging equipment and external packaging materials. parts availability will be guaranteed for all our customer in Indonesia. YOUNGSUN has factory areas covering about 250.000 m², five Our products are widely used in Food and Beverage industry, production bases, with more than 700 patents, 22 international Pharmaceuticals industry, Chemical and Cosmetics industry, and any patents, 100 patents for invention and 36 years of professional other industries that involve carton packaging and carton palletizing. technologies and experiences, and world wide customer. Background Our Products We provide advanced packaging system, both standardize system or YOUNGSUN products are proven to be a quality product which both a customized solution tailored to your specific needs. Our products reliable and efficient. All of our products has received CE certification cover every part of the packaging process and make this process fit by TÜV NORD such as Case Erectors, Case Sealer, Automatic Strapping together more effectively to increase productivity and performance. Machine, Wrapping Machine, Forming, Filling and Sealing Machine, Shrink Wrap Machine, Vacuum Machine, Carton Packer, Palletizing Machine, Labelling Machine, and many more. -

Internationalional

InternatIonalIonal Vol. 26 No. 6 December 2012 US $ 10 · 10 ISSN 0932-2744 B 31377 Cover: Biscuits at their Best Clean Label Starch Gentle Egg Feeding School Milk Packaging International Publications Please Company affix stamp here Name Function/Department Street/P.O.Box BY AIR MAIL PAR AVION Zip Code/City food Marketing & Technology Country Reader Service Phone Dr. Harnisch Verlags GmbH Blumenstr. 15 Fax 90402 Nürnberg Germany E-mail Perforationslinie > Please Company affix stamp here Name Function/Department Street/P.O.Box BY AIR MAIL PAR AVION Zip Code/City food Marketing & Technology Country Reader Service Phone Dr. Harnisch Verlags GmbH Blumenstr. 15 Fax 90402 Nürnberg Germany E-mail Perforationslinie > Please Company affix stamp here Name Function/Department Street/P.O.Box BY AIR MAIL PAR AVION Zip Code/City food Marketing & Technology Country Reader Service Phone Dr. Harnisch Verlags GmbH Blumenstr. 15 Fax 90402 Nürnberg Germany E-mail INTERNATIONAL Vol. 26 No. 6 December 2012 US $ 10 · 10 ISSN 0932-2744 B 31377 Cover: Biscuits at their Best Clean Label Starch Gentle Egg Feeding +49 (0)911 2018-100 School Milk Packaging or mail us to: [email protected] our service: If you like to have more information on articles and/or advertise- International Publications ments please fax this form and quote the Key Numbers. Issue 6/12 All questions must be answered to process your inquiry! 1. Business Classification: type Product Function Manufacturer Milling Dairy Products Administration/Management Supplier Bakery Fruit + Vegetable Prod. Product Development Distributor Confectionery Beverages, alcoholic Production Import/Export Meat & Fish Beverages, non-alcoholic Packaging Flavors & Spices other (please specify) Research & Development 2. -

Metal D Etection

SAFELINE Metal Detection T Series, ST Series Improved Sensitivity with eDrive™ Enhanced Brand Protection Optimized Process Efficiency Metal Detection Metal Detection Solutions For Vertical Packaging Applications High Performance Metal Detection In Vertical Packing Applications High-speed, vertical packaging processes need to maximize product quality, protect the welfare of customers and meet regulatory demands. When it comes to selecting a metal detection solution for the inspection of food products in vertical form, fill and seal (VFFS) processes, there can be only one choice: a METTLER TOLEDO Safeline Throat detector. For the inspection of snack foods, confectionery and other food products packed using a multihead weigher and VFFS bagmaker, T and ST Series metal detectors are the ideal solution. These throat metal detectors provide the means to deliver significant benefits for your business. Enhanced brand protection Increased productivity Reduced operational costs The combination of increased T and ST Series metal detectors T and ST Series technology lower sensitivity and superior reliability enable productivity to be overall lifetime costs by: T and ST Series Throat Metal Detectors Metal Throat Series ST T and provide protection for brands optimized. This effectiveness is • Eliminating false rejects & and reputations. realized through: product waste • Simple set-up and operation • Optimizing testing processes Choosing the latest Safeline throat • Reliable, consistent to increase operator efficiency metal detectors can help achieve -

Model Box Nr

BOX ı 2019 108 No. Cooperation EDITORIAL GDPR DEAR READER Dear Sir/Madam, The GDPR of 25.05.2018 requires us to ask your permis- sion to continue to send you our informative customer magazine, Model Box. If we do not hear from you, we will assume that we have your consent and will continue to send Model Box. If you no longer wish to receive the magazine, please notify your personal Model customer advisor. Thank you for your cooperation. Wouldn’t we all like to experience more «win- win situations»? Collaborations and alliances Best regards, that stimulate and accelerate joint ventures with The Model Box editorial team optimum results? And wherever possible on both a large and small scale, in both a private and professional sphere. Andreas Rufer Head of Papertrading Between individuals and entire businesses. It all Model Group sounds so simple. But let’s be honest, is it really that easy? Why don’t win-win situations come more natu- Win-win situations come about only when ever- rally to us? I think that although the benefits of yone agrees on the advantages and economic 3 Editorial cooperation are usually identified from the out- benefit of cooperating, and works to remove any 4 CEO Report set, too little thought is given to obstacles or potential obstacles or pitfalls. This is how to cre- 5 Annual Report Switzerland pitfalls. ate synergies, boost efficiency and achieve the 6 Annual Report Czech Republic desired results. 7 Annual Report Germany The foundation for successful joint working is 8 Annual Report Poland where two or more people or parties strive To generate more win-win situations, we then 9 Certification Berka/Werra towards the same, well-defined goal. -

Uttar Pradesh Suppliers by Region Agra (522) Aligarh (269) Allahabad (42) Bareilly (35)

Global Suppliers Catalog - Sell147.com 6403 Uttar Pradesh Suppliers By Region Agra (522) Aligarh (269) Allahabad (42) Bareilly (35) bhadohi (103) FIROZABAD (40) greater noida (81) Kannauj (36) Kanpur (955) Lucknow (322) Mathura (36) MEERUT (279) Moradabad (867) Noida (1291) Saharanpur (99) Varanasi (181) Updated: 2014/11/30 Noida Suppliers Company Name Business Type Total No. Employees Year Established Annual Output Value AARKAYS AIR EQUIPMENT PRIVATE LIMITED Air Washer,Air Handling Unit,Air Distribution Products,Fan Coil Unit,Air Curtain,Doubl Manufacturer 51 - 100 People 1979 - e Skin Air Handling unit,Intake Louvers,Square Diffusers,HVAC ... India Uttar Pradesh NOIDA Associated Lighting Co . Lamp, Shades Manufacturer 501 - 1000 People 1995 - India Uttar Pradesh Noida M/S PROTECH INDIA LTD. Manufacturer Directories Buying Office 51 - 100 People 1987 - India Uttar Pradesh Noida Other NutraTec Baked Blue Corn Tortilla Chips Manufacturer 51 - 100 People 2009 - India Uttar Pradesh NOIDA Tech Mentro IT Training Centre,Java Training in Noida,. Net Training in Noida,Android training in N Other 11 - 50 People 2009 - oida,Summer Training India Uttar Pradesh Noida REYNOLD INDIA PRIVATE LIMITED Chillers ,Scroll Chillers ,Screw Chillers ,Reciprocating Chillers ,Chilling Plants Manufacturer 201 - 300 People 1995 - India Uttar Pradesh Noida UKB ELECTRONICS PVT LTD Wires,Cables,Power Cords,Wiring Harness,Flexible Cord Manufacturer 501 - 1000 People 1996 - India Delhi Noida AB Lighting Technology ( Lighting & Fountains) Manufacturer led lights ,down -

West Coast Microbreweries Step up Their Product Coding Game to Ride the Rising Market Tide for Canned Craft Beer

CODESCODES OFOF THETHE WESTWEST West Coast microbreweries step up their product coding game to ride the rising market tide for canned craft beer See stories on pages 15 & 19 IN THIS ISSUE: PACKAGING FOR FRESHNESS • AUTOMATE NOW NEW MODULAR CELL Our modular case packing cell can be incorporated within any existing or new PICK & PLACE packaging process. Learn more about our flexible end of line AFFORDABLY solutions at www.paxiom.com/pickplace LAS VEGAS • MIAMI • MILWAUKEE • MONTREAL • TORONTO 1.833.4paxiom paxiom.com CPK_Paxiom_June18_CSA.indd 1 2018-05-22 10:51 AM The smarter way to print is with true CIJ innovation 4 3 2 1 It’s here! The new Videojet 1860 Continuous Inkjet Printer 1. True predictability 2. True remote services* 3. True IP rating 4. True scalability Industry-fi rst ink build-up Multiple access options Optional IP66 rating for Workfl ow modules help to sensor provides true (Ethernet™, WiFi) and entire cabinet and hygienic customize the printer to predictability and secure VPN communication design following industry perform the exact need warnings of possibly meet highest standards guidelines; easier integration required. It is easy to adapt the degrading print quality, in the industry as part of with slanted printhead printer with new capabilities even before a fault occurs. VideojetConnect™ Remote design. and functionalities, and to Service, and help to improve meet growing demands. machine uptime and customer experience. * Subject to availability in your country The Videojet 1860 – Discover the smarter way to print videojet.com/1860 ©2017 Videojet Technologies Inc. All rights reserved. Videojet Technologies Inc.’s policy is one of continued product improvement. -

Instmc WFMP1010, a Guide to Dynamic Weighing for Industry, October 2010

A Guide to Dynamic Weighing for Industry The Institute of Measurement and Control 87 Gower Street London WC1E 6AF October 2010 A Guide to Dynamic Weighing for Industry PANEL RESPONSIBLE FOR THIS GUIDE The Weighing & Force Measurement Panel reporting to the Learned Society Board of the Institute of Measurement and Control has prepared this Guide. The persons listed below served as members of the Weighing & Force Measurement Panel in preparing this Guide: Dr T Allgeier Flintec UK Ltd Mr J Anthony UK Weighing Federation Mr M Baker Sherborne Sensors Ltd Mr A Bowen AB Measurement and Control Solutions Mr P Dixon National Measurement Office Professor U Erdem Consultant, UE Consulting Mr P Harrison United Kingdom Accreditation Service Mr M Hopkins Procon Engineering Ltd Mr A Knott National Physical Laboratory Mr S Maclean Sherborne Sensors Ltd Professor J Pugh Glasgow Caledonian University Mr D Smith Avery Weigh-Tronix Mr A Urwin The Quality Scheme for Ready Mixed Concrete Mr C Whittingham M & C Engineers Mr B Yarwood Consultant, Dynamic Weighing Mr P Zecchin Nova Weigh Ltd The Weighing & Force Measurement Panel would like to express its thanks to the specialists who contributed to the preparation of the following sections: Section 3.2.1.3 Mr N Clark of Ishida Europe Ltd Section 5.2.1 Mr R W Stokes of Central Weighing Ltd Section 5.2.2 Mr D McLennan of Railweight Ltd Section 5.2.3 Mr D Pewter and Mr G Dorney of Herbert Industrial Ltd The Institute is grateful to members, specialists, and their organisations for providing relevant illustrations, and also to other providers of figures and photographs, including Eastern Instruments Inc and Gericke GmbH. -

Leading Exhibitors

Accubal Intelligent Machinery AFA China Ltd Co.,Ltd Leading Booth No. 5G38 Booth No. 6A15 CE-GLT Exhibitors New Version standard weigher 19-21 June 2019 AFA Systems Ltd. is a 1.Strength of standard leading provider of combination weigher: wide Engineered Packaging Shanghai, China rage of application, high Automation Systems price competitiveness, reliable to users worldwide. We performace. provide automated 2.10.4 inch color display, lots of packaging systems language can be chosen. that meet the end 3.Speed and amplitude can be adjusted in user's unique specification. Whether it is robotics, operation process. cartoners, case packers, palletizers or case 4.Weigh hopper followed by discharge, effectively erectors, AFA Systems can provide engineered prevents blockages. packaging solutions for all of your automation 5.99 of present programs are preprogrammed in needs. stock. Innovative engineered machinery results in a competitive advantage for your production line. AFA Systems' packaging machinery provides our customers with performance driven packaging automation. From beginning to end, AFA Systems provides the best material handling and packaging solutions. We are dedicated to making every Aetna Group Packaging Equipment project a complete success. (Shanghai)Co .Ltd Booth No. 5k53 ROBOT SERIE 6 Thanks to the experience matured in time, Robopac ALPHA-PACK (HEYUAN) presents a new range of self- propelled packaging machines, CO., LTD Robot S6.The combination between the catchy design Booth No. 6F05 and the sophisticated technology makes -

Product Lineup

Product Lineup ISHIDA CO.,LTD. www.ishida.com 44 Shogoin Sannocho, Sakyo-ku, Kyoto, 606-8392, Japan Tel: +81 (0)75 751 1618 Fax: +81 (0)75 751 1634 Printed in Japan 0918(SK) No.6031A ISHIDA's area of activity Our competency-related packing technology is well-accepted in all industries. Farms and ports Products directly coming from farms and fishing harbors Food Packed delicatessen such as sandwitch, lunch box, cut fruits and all home meal replacements All types of warehouses and factories, transportation, processing Logistics logistics centres, wholesalers Food Mass produced packed foods with a fixed weight, manufacturing such as snacks, confectionery and frozen foods Whole store automation Retail from sales floor to back room Pharmaceuticals Medicine, supplements and cosmetics manufacturing plants, laboratories Non-food products made in auto, electric, Industrial apparel, chemical and many other industries Management Centre Head Ofce 01 02 ISHIDA's area of activity Our competency-related packing technology is well-accepted in all industries. Farms and ports Products directly coming from farms and fishing harbors Food Packed delicatessen such as sandwitch, lunch box, cut fruits and all home meal replacements All types of warehouses and factories, transportation, processing Logistics logistics centres, wholesalers Food Mass produced packed foods with a fixed weight, manufacturing such as snacks, confectionery and frozen foods Whole store automation Retail from sales floor to back room Pharmaceuticals Medicine, supplements and cosmetics manufacturing plants, laboratories Non-food products made in auto, electric, Industrial apparel, chemical and many other industries Management Centre Head Ofce 01 02 Farms and ports Sorting Products directly coming from farms and fishing harbors Conveying Farms and ports Growing Center A bespoke system for packing lines, Weighing including industry leading weighers, achieves maximum efficiencies, Weighing excellent quality and high throughput.