Multi-Core Processors – an Overview

Recommended publications

Understanding Performance Numbers in Integrated Circuit Design Oprecomp Summer School 2019, Perugia Italy 5 September 2019

Understanding performance numbers in Integrated Circuit Design. Oprecomp summer school 2019, Perugia, Italy, 5 September 2019. Frank K. Gürkaynak [email protected] Integrated Systems Laboratory. Who Am I? Born in Istanbul, Turkey. Studied and worked at: Istanbul Technical University, Istanbul, Turkey; EPFL, Lausanne, Switzerland; Worcester Polytechnic Institute, Worcester MA, USA. Since 2008: Integrated Systems Laboratory, ETH Zurich (Director, Microelectronics Design Center; Senior Scientist, group of Prof. Luca Benini). Interests: Digital Integrated Circuits, Cryptographic Hardware Design, Design Flows for Digital Design, Processor Design, Open Source Hardware. What Will We Discuss Today? Introduction: Cost Structure of Integrated Circuits (ICs). Measuring performance of ICs: Why is it difficult? EDA tools should give us a number. Area: How do people report area? Is that fair? Speed: How fast does my circuit actually work? Power: These days much more important, but also much harder to get right. The performance establishes the solution space. Finally the cost sets a limit to what is possible. System Design Requirements: Functionality determines what the system will do. Finally the cost sets a limit -

Multiprocessing Contents

Multiprocessing Contents. 1 Multiprocessing: 1.1 Pre-history; 1.2 Key topics (1.2.1 Processor symmetry; 1.2.2 Instruction and data streams; 1.2.3 Processor coupling; 1.2.4 Multiprocessor Communication Architecture); 1.3 Flynn’s taxonomy (1.3.1 SISD multiprocessing; 1.3.2 SIMD multiprocessing; 1.3.3 MISD multiprocessing; 1.3.4 MIMD multiprocessing); 1.4 See also; 1.5 References. 2 Computer multitasking: 2.1 Multiprogramming; 2.2 Cooperative multitasking; 2.3 Preemptive multitasking; 2.4 Real time; 2.5 Multithreading; 2.6 Memory protection; 2.7 Memory swapping; 2.8 Programming; 2.9 See also; 2.10 References -

Chap01: Computer Abstractions and Technology

CHAPTER 1 Computer Abstractions and Technology. 1.1 Introduction; 1.2 Eight Great Ideas in Computer Architecture; 1.3 Below Your Program; 1.4 Under the Covers; 1.5 Technologies for Building Processors and Memory; 1.6 Performance; 1.7 The Power Wall; 1.8 The Sea Change: The Switch from Uniprocessors to Multiprocessors; 1.9 Real Stuff: Benchmarking the Intel Core i7; 1.10 Fallacies and Pitfalls; 1.11 Concluding Remarks; 1.12 Historical Perspective and Further Reading; 1.13 Exercises. CMPS290 Class Notes (Chap01) by Kuo-pao Yang. 1.1 Introduction: Modern computer technology requires professionals of every computing specialty to understand both hardware and software. Classes of Computing Applications and Their Characteristics. Personal computers: A computer designed for use by an individual, usually incorporating a graphics display, a keyboard, and a mouse. Personal computers emphasize delivery of good performance to single users at low cost and usually execute third-party software. This class of computing drove the evolution of many computing technologies, which is only about 35 years old! Server computers: A computer used for running larger programs for multiple users, often simultaneously, and typically accessed only via a network. Servers are built from the same basic technology as desktop computers, but provide for greater computing, storage, and input/output capacity. Supercomputers: A class of computers with the highest performance and cost. Supercomputers consist of tens of thousands of processors and many terabytes of memory, and cost tens to hundreds of millions of dollars. -

Multiprocessing and Scalability

Multiprocessing and Scalability. A.R. Hurson, Computer Science and Engineering, The Pennsylvania State University. Fall 2004. Large-scale multiprocessor systems have long held the promise of substantially higher performance than traditional uni-processor systems. However, due to a number of difficult problems, the potential of these machines has been difficult to realize. This is because of: advances in technology (the rate of increase in performance of uni-processors); the complexity of multiprocessor system design, which drastically affected the cost and implementation cycle; and the programmability of multiprocessor systems (the design complexity of parallel algorithms and parallel programs). Programming a parallel machine is more difficult than a sequential one. In addition, it takes much more effort to port an existing sequential program to a parallel machine than to a newly developed sequential machine. The lack of good parallel programming environments and standard parallel languages has further aggravated this issue. As a result, the absolute performance of many early concurrent machines was not significantly better than available or soon-to-be available uni-processors. Recently, there has been an increased interest in large-scale or massively parallel processing systems. This interest stems from many factors, including: Advances in integrated technology. Very -

Design of a Message Passing Interface for Multiprocessing with Atmel Microcontrollers

DESIGN OF A MESSAGE PASSING INTERFACE FOR MULTIPROCESSING WITH ATMEL MICROCONTROLLERS. A Design Project Report Presented to the Engineering Division of the Graduate School of Cornell University in Partial Fulfillment of the Requirements of the Degree of Master of Engineering (Electrical) by Kalim Moghul. Project Advisor: Dr. Bruce R. Land. Degree Date: May 2006. Abstract: Microcontrollers are versatile integrated circuits typically incorporating a microprocessor, memory, and I/O ports on a single chip. These self-contained units are central to embedded systems design where low-cost, dedicated processors are often preferred over general-purpose processors. Embedded designs may require several microcontrollers, each with a specific task. Efficient communication is central to such systems. The focus of this project is the design of a communication layer and application programming interface for exchanging messages among microcontrollers. In order to demonstrate the library, a small-scale cluster computer is constructed using Atmel ATmega32 microcontrollers as processing nodes and an ATmega16 microcontroller for message routing. The communication library is integrated into aOS, a preemptive multitasking real-time operating system for Atmel microcontrollers. Executive Summary: Microcontrollers are versatile integrated circuits typically incorporating a microprocessor, memory, and I/O ports on a single chip. These self-contained units are central to embedded systems design where low-cost, dedicated processors are often preferred over general-purpose processors. Some embedded designs may incorporate several microcontrollers, each with a specific task. -

Network on Chip for FPGA Development of a Test System for Network on Chip

Network on Chip for FPGA: Development of a test system for Network on Chip. Magnus Krokum Namork. Master of Science in Electronics. Submission date: June 2011. Supervisor: Kjetil Svarstad, IET. Norwegian University of Science and Technology, Department of Electronics and Telecommunications. Problem Description: This assignment is a continuation of the project assignment of fall 2010, which looked into the development of reactive modules for application testing and profiling of the Network on Chip realization. It will focus especially on the further development of: • The programmability of the system, by developing functionality for more advanced surveillance of the communication between modules and routers • A framework that will be used to test and profile entire applications on the Network on Chip. The work will primarily be directed towards testing and running the system in a way that resembles a real system at run time. The work is to be compared with relevant research within similar work. Assignment given: January 2011. Abstract: Testing and verification of digital systems is an essential part of product development. The Network on Chip (NoC), as a new paradigm within interconnections, has a specific need for testing. This is to determine how the performance and properties of the NoC compare to the requirements of different systems such as processors or media applications. A NoC has been developed within the AHEAD project to form a basis for a reconfigurable platform used in the AHEAD system. This report gives an outline of the project to develop testing and benchmarking systems for a NoC. -

Photovoltaic Couplers for MOSFET Drive for Relays

Photocoupler Application Notes: Basic Electrical Characteristics and Application Circuit Design of Photovoltaic Couplers for MOSFET Drive for Relays. Outline: Photovoltaic-output photocouplers (photovoltaic couplers), which incorporate a photodiode array as an output device, are commonly used in combination with a discrete MOSFET(s) to form a semiconductor relay. This application note discusses the electrical characteristics and application circuits of photovoltaic-output photocouplers. ©2019 Toshiba Electronic Devices & Storage Corporation, Rev. 1.0, 2019-04-25. Table of Contents: 1. What is a photovoltaic-output photocoupler? 1.1 Structure of a photovoltaic-output photocoupler; 1.2 Principle of operation of a photovoltaic-output photocoupler; 1.3 Basic usage of photovoltaic-output photocouplers; 1.4 Advantages of PV+MOSFET combinations; 1.5 Types of photovoltaic-output photocouplers. 2. Major electrical characteristics and behavior of photovoltaic-output photocouplers: 2.1 VOC-IF characteristics; 2.2 VOC-Ta characteristic -

COSC 6385 Computer Architecture - Multi-Processors (IV) Simultaneous Multi-Threading and Multi-Core Processors Edgar Gabriel Spring 2011

COSC 6385 Computer Architecture - Multi-Processors (IV): Simultaneous multi-threading and multi-core processors. Edgar Gabriel, Spring 2011. Moore’s Law • Long-term trend in the number of transistors per integrated circuit • Number of transistors doubles every ~18 months. Source: http://en.wikipedia.org/wki/Images:Moores_law.svg What do we do with that many transistors? • Optimizing the execution of a single instruction stream through – Pipelining: overlap the execution of multiple instructions (example: all RISC architectures; Intel x86 under the hood) – Out-of-order execution: allow instructions to overtake each other in accordance with code dependencies (RAW, WAW, WAR) (example: all commercial processors from Intel, AMD, IBM, SUN) – Branch prediction and speculative execution: reduce the number of stall cycles due to unresolved branches (example: (nearly) all commercial processors). What do we do with that many transistors? (II) – Multi-issue processors: allow multiple instructions to start execution per clock cycle; superscalar (Intel x86, AMD, …) vs. VLIW architectures – VLIW/EPIC architectures: allow compilers to indicate independent instructions per issue packet (example: Intel Itanium series) – Vector units: allow for the efficient expression and execution of vector operations (example: SSE, SSE2, SSE3, SSE4 instructions). Limitations of optimizing a single instruction -

Embedded Networks on Chip for Field-Programmable Gate Arrays

Embedded Networks on Chip for Field-Programmable Gate Arrays by Mohamed Saied Abdelfattah. A thesis submitted in conformity with the requirements for the degree of Doctor of Philosophy, Graduate Department of Electrical and Computer Engineering, University of Toronto. © Copyright 2016 by Mohamed Saied Abdelfattah. Abstract: Modern field-programmable gate arrays (FPGAs) have a large capacity and a myriad of embedded blocks for computation, memory and I/O interfacing. This allows the implementation of ever-larger applications; however, the increase in application size comes with an inevitable increase in complexity, making it a challenge to implement on-chip communication. Today, it is a designer's burden to create a customized communication circuit to interconnect an application, using the fine-grained FPGA fabric that has single-bit control over every wire segment and logic cell. Instead, we propose embedding a network-on-chip (NoC) to implement system-level communication on FPGAs. A prefabricated NoC improves communication efficiency, eases timing closure, and abstracts system-level communication on FPGAs, separating an application's behaviour and communication which makes the design of complex FPGA applications easier and faster. This thesis presents a complete embedded NoC solution, including the NoC architecture and interface, rules to guide its use with FPGA design styles, application case studies to showcase its advantages, and a computer-aided design (CAD) system to automatically interconnect applications using an embedded NoC. We compare NoC components when implemented hard versus soft, then build on this component-level analysis to architect embedded NoCs and integrate them into the FPGA fabric; these NoCs are on average 20–23× smaller and 5–6× faster than soft NoCs. -

An Early Performance Evaluation of Many Integrated Core Architecture Based SGI Rackable Computing System

NAS Technical Report: NAS-2015-04. An Early Performance Evaluation of Many Integrated Core Architecture Based SGI Rackable Computing System. Subhash Saini,1 Haoqiang Jin,1 Dennis Jespersen,1 Huiyu Feng,2 Jahed Djomehri,3 William Arasin,3 Robert Hood,3 Piyush Mehrotra,1 Rupak Biswas1. 1NASA Ames Research Center, Moffett Field, CA 94035-1000, USA, {subhash.saini, haoqiang.jin, dennis.jespersen, piyush.mehrotra, rupak.biswas}@nasa.gov; 2SGI, Fremont, CA 94538, USA, [email protected]; 3Computer Sciences Corporation, Moffett Field, CA 94035-1000, USA, {jahed.djomehri, william.f.arasin, robert.hood}@nasa.gov. ABSTRACT: Intel recently introduced the Xeon Phi coprocessor based on the Many Integrated Core architecture featuring 60 cores with a peak performance of 1.0 Tflop/s. NASA has deployed a 128-node SGI Rackable system where each node has two Intel Xeon E2670 8-core Sandy Bridge processors along with two Xeon Phi 5110P coprocessors. We have conducted an early performance evaluation of the Xeon Phi. We used microbenchmarks to measure the latency and bandwidth of memory and interconnect, I/O rates, and the performance of OpenMP directives and MPI functions. The GPGPU approach relies on streaming multiprocessors and uses a low-level programming model such as CUDA or a high-level programming model like OpenACC to attain high performance [1-3]. Intel’s approach has a Phi serving as a coprocessor to a traditional Intel processor host. The Phi has x86-compatible cores with wide vector processing units and uses standard parallel programming models such as MPI, OpenMP, hybrid (MPI + OpenMP), UPC, etc. [4-5]. Understanding performance of these heterogeneous computing systems has become very important as they have begun to appear -

A Comparison of Four Series of Cisco Network Processors

Advanced Computational Intelligence: An International Journal (ACII), Vol.3, No.4, October 2016. A COMPARISON OF FOUR SERIES OF CISCO NETWORK PROCESSORS. Sadaf Abaei Senejani (1), Hossein Karimi (2) and Javad Rahnama (3). (1) Department of Computer Engineering, Pooyesh University, Qom, Iran; (2) Sama Technical and Vocational Training College, Islamic Azad University, Yasouj Branch, Yasouj, Iran; (3) Department of Computer Science and Engineering, Sharif University of Technology, Tehran, Iran. ABSTRACT: Network processors have created new opportunities by performing more complex calculations. Routers perform the most useful and difficult processing operations. In this paper, the VXR 7200, ISR 4451-X, SBC 7600, and 7606 routers are investigated; their main strengths include scalability, flexibility, integrated services, high security, low-cost support and updates, and support for standard network protocols. In addition, the current study explores these routers separately from the hardware and processor-capacity viewpoints. KEYWORDS: Network processors, Network on Chip, parallel computing. 1. INTRODUCTION: The expansion of science and of application fields that require complex and time-consuming calculations, and the resulting demand for faster and more powerful computers, have made the need for better structures and new methods more significant. This need can be met through solutions called “paralleled massive computers” or another solution called “clusters of computers”. It should be mentioned that both solutions depend highly on intercommunication networks with high efficiency and appropriate operational capacity. Therefore, one solution is to combine numerous cores on a single chip, using Network on Chip technology to simplify communication. -

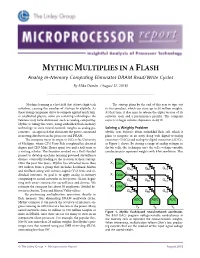

Mythic Multiplies in a Flash: Analog In-Memory Computing Eliminates DRAM Read/Write Cycles

MYTHIC MULTIPLIES IN A FLASH: Analog In-Memory Computing Eliminates DRAM Read/Write Cycles. By Mike Demler (August 27, 2018). Machine learning is a hot field that attracts high-tech investors, causing the number of startups to explode. As these young companies strive to compete against much larger established players, some are revisiting technologies the veterans may have dismissed, such as analog computing. Mythic is riding this wave, using embedded flash-memory technology to store neural-network weights as analog parameters, an approach that eliminates the power consumed in moving data between the processor and DRAM. The company traces its origin to 2012 at the University of Michigan, where CTO Dave Fick completed his doctoral degree and CEO Mike Henry spent two and a half years as a visiting scholar. The founders worked on a DoD-funded project to develop machine-learning-powered surveillance drones, eventually leading to the creation of their startup. The startup plans by the end of this year to tape out its first product, which can store up to 50 million weights. At that time, it also aims to release the alpha version of its software tools and a performance profiler. The company expects to begin volume shipments in 4Q19. Solving a Weighty Problem: Mythic uses Fujitsu’s 40nm embedded-flash cell, which it plans to integrate in an array along with digital-to-analog converters (DACs) and analog-to-digital converters (ADCs), as Figure 1 shows. By storing a range of analog voltages in the bit cells, the technique uses the cell’s voltage-variable conductance to represent weights with 8-bit resolution.