Cliff Notes: Research in Natural Language Processing at the University of Pennsylvania

Recommended publications

New Mexico State University

Financial Report: Financial Statements and Schedules, June 30, 2010 and 2009. Table of Contents: Official Roster; President’s Letter; Independent Auditor’s Report. Financial Statements: Management’s Discussion and Analysis; Exhibit A: Statement of Net Assets; Exhibit B: Statement of Revenues, Expenses, and Changes in Net Assets; Exhibit C: Statement of Cash Flows; Notes to the Financial Statements. Supplemental Schedules: Schedule 1 - Combining Statement of Net Assets for -

Peter Culicover's Curriculum Vitae, 2019-20

CURRICULUM VITAE June 2020 PETER W. CULICOVER Distinguished University Professor Emeritus, The Ohio State University Affiliate Professor, University of Washington Phone (cell): 614-361-4744 Email: [email protected] Primary research interests: Syntactic theory, constructions, typology, syntactic change, language learnability, English syntax, representation of language in the mind/brain, linguistics and cognitive science, computational simulation of language acquisition and language change, jazz. EDUCATION PhD Massachusetts Institute of Technology (1971), Linguistics B.A. City College of New York (1966), Mathematics ACADEMIC POSITIONS HELD 2019- Affiliate Professor, University of Washington 2018- Distinguished University Professor Emeritus, OSU 2016-2018 Distinguished University Professor, The Ohio State University 2008 Professeur invité, Université de Paris VII (Feb-Mar 2008) 2006-7 Visiting Scholar, Seminar für Sprachwissenschaft, Universität Tübingen 2005- Humanities Distinguished Professor in Linguistics, The Ohio State University 2003 Linguistics Institute, Michigan State University (Summer 2003) 2002 Visiting Scientist, University of Groningen (Autumn 2002) 2000 Distinguished Visiting Professor, University of Tübingen (June 2000) 1998-2006 Chair, Department of Linguistics, The Ohio State University 1997 Fulbright Distinguished Chair in Theoretical Linguistics, University of Venice (Spring 1997) 1991-92 Visiting Scientist, Center for Cognitive Science, MIT 1989-2003 Director, Center for Cognitive Science, The Ohio State University -

Kreyòl Ayisyen, Or Haitian Creole (‘Creole French’)1

HA Kreyòl Ayisyen, or Haitian Creole (‘Creole French’)1 Michel DeGraff Introduction Occupying the western third of Hispaniola, the second largest island of the Caribbean, Haiti has a population of more than 7 million. Kreyòl (as we Haitians call it) is the only language that is shared by the entire nation, the vast majority of which is monolingual (Y Dejean 1993). The official name of the language is also Kreyòl (cf Bernard 1980 and Article 5 of the 1987 Haitian Constitution). Haitian Creole (HA), as the communal language of the new Creole community of colonial Haiti (known as Saint-Domingue in the colonial period), emerged in the 17th and 18th centuries out of the contact among regional and colloquial varieties of French and the various Niger-Congo languages spoken by the Africans brought as slaves to work the colony’s land. HA is modern Haiti’s national language and one of two constitutionally-recognized official languages (alongside French). However, most official documents published by the Haitian State in all domains, from education to politics, are still written exclusively in French to the detriment of monolingual Creolophones, even though French is spoken today by at most one-fifth of the population, at various levels of fluency. The Haitian birth certificate, the very first official document that every newborn Haitian citizen is, in principle, assigned by the state, exists in French only. Such French-only policies, at least at the level of the written record, effectively create a situation of “linguistic apartheid” in the world’s most populous Creole-speaking country (P Dejean 1989, 1993: 123-24). -

Vol 41 No 1 Spring-Summer 2018

MASSACHUSETTS EDUCATORS OF ENGLISH LANGUAGE LEARNERS Currents Vol. 41, No. 1 Spring/Summer 2018. INSIDE: MATSOL’s Annual Conference; Volunteer Opportunities with MATSOL; Teaching Between Languages: Five Principles for the Multicultural Classroom. ON THE COVER: Fernanda Kray, DESE’s Coordinator of ELL Professional Development, explains to an attentive MATSOL Conference audience the steps that DESE is taking to implement the LOOK Act. Contents. MATSOL News: President’s Message; MATSOL’s Annual Conference; Photos from the Conference; Elections to the MATSOL Board; An Update from MATSOL’s Director of Professional Learning (ANN FELDMAN); Language Opportunity Coalition Honored for Advocacy; A Report from MATSOL’s English Learner Leadership Council (MELLC) (ANN FELDMAN); What’s Happening in MATSOL’s Special Interest Groups (SIGs). Get Involved: Volunteer Opportunities with MATSOL; Submit to MATSOL Publications. Reports: TESOL’s 52nd Annual Convention in Chicago: The Inside Scoop (KATHY LOBO); An Update on Adult Basic Education in Massachusetts: Preparing to Take a Major Step Forward (JEFF MCLYNCH); News from NNE TESOL (STEPHANIE N.). Bilingualism Through the Public Eye (CHRISTINE LEIDER); Teaching into a Soundproof Booth (HEATHER BOBB); The State of Teacher Preparedness to Teach Emergent Bilingual Learners (MICHAELA COLOMBO, JOHANNA TIGERT, & CHRISTINE LEIDER). Reviews: Understanding English Language Variation in U.S. Schools, by Anne H. Charity Hudley & Christine Mallinson (REVIEWED BY CAROLYN A. PETERSON); Race, Empire and English Language Teaching: Creating Responsible and Ethical Anti-Racist Practice, by Suhanthie Motha (REVIEWED BY KAREN HART); Islandborn, by Junot Díaz (REVIEWED BY JACQUELINE STOKES) -

Bulletin - December 2001 Page 1 of 48

LSA Bulletin - December 2001 Page 1 of 48 Bulletin No. 174, December 2001 Copyright ©2001 by the Linguistic Society of America Linguistic Society of America 1325 18th Street, NW, Suite 211 Washington, DC 20036-6501 [email protected] The LSA Bulletin is issued a minimum of four times per year by the Secretariat of the Linguistic Society of America, 1325 18th Street, NW, Suite 211, Washington, DC 20036-6501 and is sent to all members of the Society. News items should be addressed to the Linguistic Society of America, 1325 18th Street, NW, Suite 211, Washington, DC 20036-6501. All materials must arrive at the LSA Secretariat by the 1st of the month preceding the month of publication. Annual dues for U.S. personal memberships for 2000 are $65.00; U.S. student dues are $25.00 per year, with proof of status; U.S. library memberships are $120.00; add $10.00 postage surcharge for non-U.S. addresses; $13.00 of dues goes to the publication of the LSA Bulletin. New memberships and renewals are entered on a calendar year basis only. Postmaster: Send address changes to: Linguistic Society of America, 1325 18th Street, NW, Suite 211, Washington, DC 20036-6501. CONTENTS: LSA Presidents; Constitution of the Linguistic Society of America; LSA Officers and Executive Committee - 2002; LSA Committees - 2002; LSA Delegates and Liaisons - 2002; Honorary Members; Language Online; Language Style Sheet; Model Abstracts; 2002 Abstract Submittal Form: 15- and 30-Minute Papers and Poster Sessions (Download rtf file); Program Committee 2002 Guidelines and Abstract Specifications; Grants Calendar; Grant Agency Addresses; LSA Guidelines for Nonsexist Usage; Forthcoming Conferences; Job Opportunities; Prizes Awarded by the LSA. LSA PRESIDENTS 1925 Hermann Collitz (1855-1935) 1963 Mary R. -

The Ups and Downs of Head Displacement Karlos Arregi Asia Pietraszko

The Ups and Downs of Head Displacement Karlos Arregi Asia Pietraszko We propose a theory of head displacement that replaces traditional Head Movement and Lowering with a single syntactic operation of Generalized Head Movement. We argue that upward and downward head displacement have the same syntactic properties: cyclicity, Mirror Principle effects, feeding upward head displacement, and being blocked in the same syntactic configurations. We also study the interaction of head displacement and other syntactic operations, arguing that claimed differences between upward and downward displacement are either spurious or follow directly from our account. Finally, we show that our theory correctly predicts the attested crosslinguistic variation in verb and inflection doubling in predicate clefts. Keywords: head displacement, Mirror Principle, VP-ellipsis, do-support, predicate clefts 1 Introduction The status of displacement operations that specifically target heads has been hotly contested. While some authors have highlighted formal similarities with phrasal movement (e.g., Koopman 1984, Travis 1984, M. Baker 1988, Lema and Rivero 1990, Rizzi 1990, Koopman and Szabolcsi 2000, Matushansky 2006, Vicente 2007, Roberts 2010, Harizanov 2019, Harizanov and Gribanova 2019, Preminger 2019), others have argued that at least some cases of head displacement are not the result of the same type of process as phrasal movement (e.g., Chomsky 2001, Hale and Keyser 2002, Harley 2004, Platzack 2013, Barrie 2017, Harizanov and Gribanova 2019). A related -

Degraff 20140729 Cv.Pdf

Curriculum Vitae Michel DeGraff http://mit.edu/degraff http://haiti.mit.edu Department: MIT Linguistics and Philosophy Date: July 29, 2014 Date of Birth: 26 July 1963 Place of Birth: Port-au-Prince, Haïti Education Institution Degree Date University of Pennsylvania Ph.D. 1992 University of Pennsylvania M.Sc. 1988 City College of New York B.Sc. 1986 Title of Doctoral Thesis: Creole Grammars and the Acquisition of Syntax: The Case of Haitian Creole Grants, Fellowships and Honors Grant from National Science Foundation (NSF) 2013–2017 Project: “Kreyòl-based Cyberlearning for a New Perspective on the Teaching of STEM in local Languages” (co-PI: Dr. Vijay Kumar) $999,993 award from three NSF Programs: Linguistics, Cyberlearning and Research on Education & Learning. http://www.nsf.gov/awardsearch/showAward.do?AwardNumber=1248066 [One of this project’s recent outcomes, on April 17, 2013, is a landmark agreement between the Haitian State and our NSF-funded MIT-Haiti Initiative toward a (“thinking out of the box”) paradigm shift in order to improve Haiti’s education system. See section on Media Coverage on page 19.] Grant from Open Society Foundations 2012–2013 Project: “Improving Haiti’s STEM Education through Faculty Training and Curriculum Development” (co-PI: Dr. Vijay Kumar) $55,518 award. Grant from National Science Foundation (NSF) 2010–2013 Project: “Kreyòl-based and technology-enhanced learning of reading, writing, math, and science in Haiti.” $200,000 award from NSF Division of Research on Learning in Formal and Informal Settings (Program: Research and Evaluation on Education in Science and Engineering). -

Bulletin Page 1 of 1

December 2004 Bulletin Page 1 of 1 Bulletin No. 186, December 2004 Copyright ©2004 by the Linguistic Society of America Linguistic Society of America 1325 18th Street, NW, Suite 211 Washington, DC 20036-6501 [email protected] The LSA Bulletin (ISSN 0023-6365) is issued four times per year (March, June, October, and December) by the Secretariat of the Linguistic Society of America, 1325 18th Street, NW, Suite 211, Washington, DC 20036-6501 and is sent to all members of the Society. News items should be addressed to the Linguistic Society of America, 1325 18th Street, NW, Suite 211, Washington, DC 20036-6501. All materials must arrive at the LSA Secretariat by the 1st of the month preceding the month of publication. Periodical postage is paid at Washington, DC, and at additional mailing offices. Annual dues for U.S. personal members for 2004 are $65.00; U.S. student dues are $25.00 per year, with proof of status; U.S. library memberships are $120.00; add $10.00 postage surcharge for non-U.S. addresses; $13.00 of dues goes to the publication of the LSA Bulletin. New memberships and renewals are entered on a calendar year basis only. Postmaster: Send address changes to: LSA Bulletin, 1325 18th Street, NW, Suite 211, Washington, DC 20036-6501. Electronic mail: [email protected]. CONTENTS: LSA Officers and Executive Committee - 2005; LSA Committees - 2005; LSA Delegates and Liaisons - 2005; Abstract Specifications. LSA Homepage http://lsadc.org/info/dec04bulletin/indextext.html 5/21/2008 December 2004 LSA Bulletin - Abstract Specifications Page 1 -

The Case of Creole Studies (Response to Charity Hudley Et Al.) MICHEL DEGRAFF

PERSPECTIVES Toward racial justice in linguistics: The case of Creole studies (Response to Charity Hudley et al.) MICHEL DEGRAFF Massachusetts Institute of Technology In their target article, Anne Charity Hudley, Christine Mallinson, and Mary Bucholtz (2020) have challenged linguists to constructively engage with race and racism. They suggest three core principles for inclusion and equity in linguistics: (i) ‘social impact’ as a core criterion for excellence; (ii) the acknowledgment of our field’s ‘origins as a tool of colonialism and conquest’; and (iii) the elimination of the ‘race gap’ that ‘diminishes the entire field … by excluding scholars and students of color’. Here I amplify Charity Hudley et al.’s challenges through some of my own critiques of Creole studies. These critiques serve to further analyze institutional whiteness as, in their terms, ‘a structuring force in academia, informing the development of theories, methods, and models in ways that reproduce racism and white supremacy’. The latter is exceptionally overt in the prejudicial misrepresentations (a.k.a. ‘Creole exceptionalism’) that we linguists have created and transmitted, since the colonial era, about Creole languages and their speakers. One such misrepresentation, whose popularity trumps its dubious historical and empirical foundations, starts with the postulation that Creole languages as a class contrast with so-called ‘regular’ languages due to allegedly ‘abnormal’ processes of emergence and transmission. In effect, then, Creole studies may well be the most spectacular case of exclusion and marginalization in linguistics. With this in mind, I ask of Charity Hudley et al. a key question that is inspired by legal scholar Derrick Bell’s ‘racial interest-convergence theory’, whereby those in power will actively work in favor of racial justice only when such work also contributes to their self-interest. -

Language Acquisition in Creolization And, Thus, Language Change: Some Cartesian-Uniformitarian Boundary Conditions

Language Acquisition in Creolization and, Thus, Language Change: Some Cartesian-Uniformitarian Boundary Conditions. Michel DeGraff, MIT Linguistics & Philosophy. Article in Language and Linguistics Compass 3/4 (2009): 888–971, July 2009. DOI: 10.1111/j.1749-818X.2009.00135.x. Content: Abstract 1 Background and Objectives 1.1 Terminological and conceptual preliminaries: ‘Creoles’, ‘Creole genesis’, ‘Creolization’, etc. 1.2 Uniformitarian boundary conditions 1.3 Cartesian boundary conditions 1.4 Some landmarks for navigating through the many detours of this long essay 2 Whence ‘Creole Genesis’? 2.1 Making constructive use of the distinction and relation between ‘I-languages’ and ‘E-languages’ 2.1.1 The ontological priority of I-languages (and -

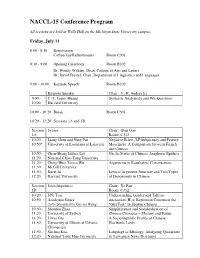

Conference Program

NACCL-15 Conference Program. All sessions are held at Wells Hall on the Michigan State University campus. Friday, July 11. 8:00 - 8:30: Registration, Coffee/Tea/Refreshments (Room C301). 8:30 - 9:00: Opening Ceremony (Room B102): Dr. Wendy Wilkins, Dean, College of Arts and Letters; Dr. David Prestel, Chair, Department of Linguistics and Languages. 9:00 - 10:00: Keynote Speech (Room B102; Chair: Y.-H. Audrey Li): C.-T. James Huang, Syntactic Analyticity and Wh-Questions, Harvard University. 10:00 - 10:20: Break (Room C301). 10:20 - 12:20: Sessions 1A and 1B. Session 1A, Syntax (Room C313; Chair: Qian Gao): 10:20 - 10:50*: Liang Chen and Ning Pan, Negative Effect, LF Subjacency and Feature Movement: A Comparison between French and Chinese, University of Louisiana at Lafayette; 10:50 - 11:20: Chen-Sheng Luther Liu, On the Status of Chinese Anaphoric Epithets, National Chiao Tung University; 11:20 - 11:50: Ching-Huei Teresa Wu, Arguments in Resultative Constructions, McGill University; 11:50 - 12:20: Ruixi Ai, Lexical Argument Structure and Two Types of Denominals in Chinese, Harvard University. Session 1B, Sociolinguistics (Room C312; Chair: Ye Fan): 10:20 - 10:50: I-Ni Tsai (to be presented by Cui-xia Weng), Understanding Gender and Talk-in-interaction: How Repetition Constructs the "Girl Talk" in Spoken Chinese, Academia Sinica; 10:50 - 11:20: Shouhui Zhao, Simplification and Standardization of Chinese Character -- History and Future, University of Sydney; 11:20 - 11:50: Liwei Gao, A Sociolinguistic Profile of Chinese Electronic Lexis, University of Illinois at Urbana- -

Bulletin LSA PRESIDENTS

LSA October 2000 Bulletin Page 1 of 34 Bulletin No. 170, December 2000 Copyright ©2000 by the Linguistic Society of America Linguistic Society of America 1325 18th Street, NW, Suite 211 Washington, DC 20036-6501 [email protected] The LSA Bulletin is issued a minimum of four times per year by the Secretariat of the Linguistic Society of America, 1325 18th Street, NW, Suite 211, Washington, DC 20036-6501 and is sent to all members of the Society. News items should be addressed to the Linguistic Society of America, 1325 18th Street, NW, Suite 211, Washington, DC 20036-6501. All materials must arrive at the LSA Secretariat by the 1st of the month preceding the month of publication. Annual dues for U.S. personal memberships for 2000 are $65.00; U.S. student dues are $25.00 per year, with proof of status; U.S. library memberships are $120.00; add $10.00 postage surcharge for non-U.S. addresses; $13.00 of dues goes to the publication of the LSA Bulletin. New memberships and renewals are entered on a calendar year basis only. Postmaster: Send address changes to: Linguistic Society of America, 1325 18th Street, NW, Suite 211, Washington, DC 20036-6501. CONTENTS: LSA Presidents; Constitution of the Linguistic Society of America; LSA Officers and Executive Committee - 2001; LSA Committees - 2001; LSA Delegates and Liaisons - 2001; Honorary Members; Program Committee 2001 Guidelines and Abstract Specifications; 2001 Abstract Submittal Form: 15- and 30-minute papers and poster sessions; Model Abstracts; Grants Calendar; Grant Agency Addresses; Forthcoming Conferences; LSA Guidelines for Nonsexist Usage; Job Opportunities; LSA Homepage. LSA PRESIDENTS 1925 Hermann Collitz (1855-1935) 1963 Mary R.