Is Tricking a Robot Hacking?

Recommended publications

How to Physically Prepare for Tricking, by Anthony Mychal

HOW TO PHYSICALLY PREPARE FOR TRICKING, BY ANTHONY MYCHAL. Ten years ago, only a handful of people tricked. Since then, however, the sport has seen a rapid climb in popularity. Thousands of people from hundreds of countries are flipping, twisting, and kicking in their backyards, aided by the massive amount of online content, support, and resources. Even though tricking depends largely on skill, better athletes are emerging and raising the difficulty and complexity of the tricks. The downside is that most new tricksters have no formal training. In the sport's infancy, tricksters were martial artists who were already conditioned, which helped protect them from injuries and jumpstart their careers. A lack of physical preparation can devastate an athlete. As tricking advances, just as in any sport, getting bigger, faster, and stronger helps prevent injuries and push the boundaries of human capability. What makes training for tricking difficult, however, is that there aren’t rules or boundaries defining the sport. There are no intervals of work and rest. You trick when you’re ready to trick. This means that following programs, techniques, and regimens from other sports is misguided. A TRICKSTER’S NEEDS. Successful tricksters have the power and explosiveness to execute high-flying moves, along with the strength and stiffness to handle the impact of sticking a landing. But tricking differs from other sports in that grace and flexibility are needed to execute most moves. It’s not about brute strength, but strength in extreme ranges of motion is important to protect against injury.

A Pilgrimage in Europe and America, Leading to the Discovery of the Sources of the Mississippi and Bloody River; with a Descript

Library of Congress. A PILGRIMAGE IN EUROPE AND AMERICA, LEADING TO THE DISCOVERY OF THE SOURCES OF THE MISSISSIPPI AND BLOODY RIVER; WITH A DESCRIPTION OF THE WHOLE COURSE OF THE FORMER, AND OF THE OHIO. BY J. C. BELTRAMI, ESQ., FORMERLY JUDGE OF A ROYAL COURT IN THE EX-KINGDOM OF ITALY. IN TWO VOLUMES. VOL. II. LONDON: PRINTED FOR HUNT AND CLARKE, YORK STREET, COVENT GARDEN. 1828. F597 .B46 http://www.loc.gov/resource/lhbtn.1595b LETTER X. Philadelphia, February 28th, 1823. Where shall I begin, my dear Madam? Where I ought to end, with myself; for you are impatient to hear what is become of me. I know your friendship, and anticipate its wishes. I am now in America. My hand-writing ought to convince you that I am alive; but, since a very reverend father has made the dead write letters, it is become necessary to explain whether one is still in the land of the living, and particularly when one writes from another world, and has been many times near the gates of eternity.

Introduction to Heraldry, with Nearly One Thousand Illustrations

INTRODUCTION TO HERALDRY, WITH NEARLY ONE THOUSAND ILLUSTRATIONS; INCLUDING THE ARMS OF ABOUT FIVE HUNDRED DIFFERENT FAMILIES. BY HUGH CLARK. EIGHTEENTH EDITION. REVISED AND CORRECTED BY J. R. PLANCHÉ, ROUGE CROIX PURSUIVANT OF ARMS. LONDON: BELL & DALDY, 6, YORK STREET, COVENT GARDEN, AND 186, FLEET STREET. 1866. LONDON: PRINTED BY WILLIAM CLOWES AND SONS, STAMFORD STREET AND CHARING CROSS. PREFACE. CLARK’S “Introduction to Heraldry” has now been in existence for upwards of eighty years, and gone through seventeen editions. In presenting the eighteenth to the Public, it is only necessary to say, that in order to secure a continuance of such popularity, the book has undergone complete revision; and by the omission of some exploded theories, and the correction of a few erroneous opinions, been rendered, it is hoped, a still more trustworthy Hand-book to an Art as useful as it is ornamental, to a Science, the real value of which is daily becoming more apparent in this age of progress and critical inquiry.

Civic Genealogy from Brunetto to Dante

University of Pennsylvania ScholarlyCommons, Publicly Accessible Penn Dissertations, 2016. The Root Of All Evil: Civic Genealogy From Brunetto To Dante. Chelsea A. Pomponio, University of Pennsylvania. Follow this and additional works at: https://repository.upenn.edu/edissertations Part of the Medieval Studies Commons. Recommended Citation: Pomponio, Chelsea A., "The Root Of All Evil: Civic Genealogy From Brunetto To Dante" (2016). Publicly Accessible Penn Dissertations. 2534. https://repository.upenn.edu/edissertations/2534 ABSTRACT. Chelsea A. Pomponio, Kevin Brownlee. From the thirteenth century well into the Renaissance, the legend of Florence’s origins, which cast Fiesole as the antithesis of Florentine values, was continuously rewritten to reflect the changing nature of Tuscan society. Modern criticism has tended to dismiss the legend of Florence as a purely literary conceit that bore little relation to contemporary issues. Tracing the origins of the legend in the chronicles of the Duecento to its variants in the works of Brunetto Latini and Dante Alighieri, I contend that the legend was instead a highly adaptive mode of legitimation that proved crucial in the negotiation of medieval Florentine identity. My research reveals that the legend could be continually rewritten to serve the interests of collective and individual authorities. Versions of the legend were crafted to support both republican Guelfs and imperial Ghibellines; to curry favor with the Angevin rulers of Florence and to advance an ethnocentric policy against immigrants; to support the feudal system of privilege and to condemn elite misrule; to denounce the mercantile value of profit and to praise economic freedom.

Is Tricking a Robot Hacking? Ryan Calo University of Washington School of Law

University of Washington School of Law, UW Law Digital Commons, Tech Policy Lab, Centers and Programs, 2018. Is Tricking a Robot Hacking? Ryan Calo (University of Washington School of Law), Ivan Evtimov, Earlence Fernandes, Tadayoshi Kohno, David O'Hair. Follow this and additional works at: https://digitalcommons.law.uw.edu/techlab Part of the Computer Law Commons, and the Science and Technology Law Commons. Recommended Citation: Ryan Calo, Ivan Evtimov, Earlence Fernandes, Tadayoshi Kohno & David O'Hair, Is Tricking a Robot Hacking?, (2018). Available at: https://digitalcommons.law.uw.edu/techlab/5 This Book is brought to you for free and open access by the Centers and Programs at UW Law Digital Commons. It has been accepted for inclusion in Tech Policy Lab by an authorized administrator of UW Law Digital Commons. Authors and affiliations: Ryan Calo, University of Washington School of Law; Ivan Evtimov, Earlence Fernandes, and Tadayoshi Kohno, University of Washington Paul G. Allen School of Computer Science & Engineering; David O’Hair, University of Washington School of Law. Legal Studies Research Paper No. 2018-05. Electronic copy available at: https://ssrn.com/abstract=3150530 Is Tricking A Robot Hacking? Ryan Calo, Ivan Evtimov, Earlence Fernandes, Tadayoshi Kohno, David O’Hair. Tech Policy Lab, University of Washington. The term “hacking” has come to signify breaking into a computer system. Lawmakers crafted penalties for hacking as early as 1986, in supposed response to the movie War Games three years earlier, in which a teenage hacker gained access to a military computer and nearly precipitated a nuclear war.

CARY Family.Pdf

RECORDS of the Town of BRIDGEWATER, MASS., FROM 1656 to 1681. PUBLISHED BY LORING W. PUFFER, Brockton, Mass. BROCKTON: WM. L. PUFFER, STEAM BOOK AND JOB PRINTER. 1889. To the numerous family in AMERICA bearing the honored name of CARY, this record is respectfully dedicated, by LORING W. PUFFER, Brockton, July 1, 1889. Reprinted by the OLD BRIDGEWATER HISTORICAL SOCIETY, 162 Howard Street, West Bridgewater, Mass. 02379, 1986. NOTE. John Cary, or Carew as it was once spelled, the immigrant, came to New England from Somersetshire, England, and settled in Duxbury, Mass., in 1639, being then 25 years old. He married Elizabeth, daughter of Francis Godfrey, in 1644 and by her had in Duxbury, John, 1645; Francis, 1647; Elizabeth, 1649. He then removed to Braintree, and had James, 1652, and finally settled in Bridgewater where his remaining eight children were born. He was one of the fifty-four original proprietors of the town of Bridgewater. He removed from Braintree to Bridgewater in 1654. In 1656 he was chosen Constable, being the first town officer and only one elected that year. He was the first town clerk, serving until 1681. He lived in what is now West Bridgewater, and about one fourth of a mile west of the old church. He died in 1681. His wife died in 1680. TOWN RECORDS OF BRIDGEWATER, MASS.

Heraldry Manual January 2011

Letter of Registration and Return, May 2011. TO ALL SINGULAR as well as Nobles and Gentles and others to whom these presents shall Come, Sir L’Bet’e deAcmd, Fleur-de-Lis Principal Herald and King of Arms of the Realm and Empire of Adria sendeth Greetings. I apologize for the lateness of this LoRR. Submissions just kept trickling in and I wanted to get as many of them as possible through for this LoRR. Eventually I had to stop, so there will be more for next month. I must say that it has been a long time since I have seen such a diverse and creative group of submissions in one month. There was not one violation of marshaling, and the bordure wasn’t used on the majority of submissions (a trend that has shown a lack of creativity). In this LoRR it seems the 50/50 tincture rule is being tested a lot. Out of 26 submissions, we have only two returns. A final note to our heralds out there, mostly the new ones but some of the older ones too. The LoRR is an official document. Not only does it record registered arms; it records rulings, research, and notification of events. The LoRR “is” the official means of communication, not the Yahoo groups or websites. So whether you have a submission or not, I suggest you read them all. They are not only informative and at times educational; they are necessary to be the best herald you can be. In Service To the College of Arms and the Empire of Adria, Sir L’Bet’e deAcmd, Fleur de Lis. REGISTERED. ALBION. Alabara Device Azure, a pair of shears argent. Desert Phoenix Rising, Estate of Estate Or, a phoenix and on a chief gules five bezants Or.

Is Tricking a Robot Hacking?

Is Tricking A Robot Hacking? Ryan Calo, Ivan Evtimov, Earlence Fernandes, Tadayoshi Kohno, David O’Hair. Tech Policy Lab, University of Washington. The term “hacking” has come to signify breaking into a computer system. Lawmakers crafted penalties for hacking as early as 1986, in supposed response to the movie War Games three years earlier, in which a teenage hacker gained access to a military computer and nearly precipitated a nuclear war. Today a number of local, national, and international laws seek to hold hackers accountable for breaking into computer systems to steal information or disrupt their operation; other laws and standards incentivize private firms to use best practices in securing computers against attack. The landscape has shifted considerably from the 1980s and the days of dial-ups and mainframes. Today most people carry around in their pockets the kind of computing power available to the United States military at the time of War Games. People, institutions, and even everyday objects are connected via the Internet. Driverless cars roam highways and city streets. Yet in an age of smartphones and robots, the classic paradigm of hacking, in the sense of unauthorized access to a protected system, has sufficed and persisted. All of this may be changing. A new set of techniques, aimed not at breaking into computers but at manipulating the increasingly intelligent machine learning models that control them, may force law and legal institutions to reevaluate the very nature of hacking. Three of the authors have shown, for example, that it is possible to use one’s knowledge of a system to fool a machine learning classifier (such as the classifiers one might find in a driverless car) into perceiving a stop sign as a speed limit.

Table of Contents

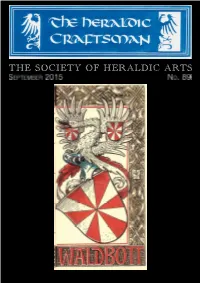

THE SOCIETY OF HERALDIC ARTS. Table of Contents: Familiawappen for the family Waldbott by Otto Hupp (cover); Contents, Membership and Heraldic Craftsman (inside cover); Officers of the Society and Chairman’s Message, 1; Cornish Choughs are drawn with sable claws! David Hopkinson, FSHA, 2-7; What are you doing today? 8; An Appreciation of Otto Hupp, David F Phillips, SHA, 9-14; Living Heraldry, Tim Crawley ARBS, 15-17; Macedonian Heraldry, Melvyn Jeremiah, 18-20; The matriculated arms of Mr Stavre Dzikov (rear cover). Membership of the Society. Associate Membership is open to individuals and organisations interested in heraldic art. Craftsmen new to heraldry or whose work is not preponderantly heraldic should initially join as Associates. The annual fee is £17.50 or equivalent in other currencies. Craft Membership is open to those whose work comprises a substantial element of heraldry and is of sufficiently high standard to pass examination by the Society’s Appointments Board. Successful applicants may use the post-nominal SHA. Fellowship of the Society is in recognition of outstanding work. Annual craft fee is £35 with access to and recognition on the Society’s website. Please join us! Look on www.heraldic-arts.com or contact Gwyn Ellis-Hughes, the Hon Membership Secretary, whose details are on the next page. The Heraldic Craftsman. Any virtual society as we are is dependent upon the passion for the subject to hold it together, and this means encouraging those who practise it as well as creating opportunities for it to flourish. Another way to look at what we do is like a three-legged stool composed of the web, the journal and a committed, growing membership all held together by our common interest.

The Sword of Judith Judith Studies Across the Disciplines Edited by Kevin R

The Sword of Judith: Judith Studies Across the Disciplines. Edited by Kevin R. Brine, Elena Ciletti and Henrike Lähnemann. To access digital resources including blog posts, videos, and online appendices, and to purchase copies of this book in hardback, paperback, or ebook editions, go to: https://www.openbookpublishers.com/product/28 Open Book Publishers is a non-profit independent initiative. We rely on sales and donations to continue publishing high-quality academic works. Abraham Bosse, Judith Femme Forte, 1645. Engraving in Lescalopier, Les predications. Photo credit: Bibliothèque Nationale, Paris. Kevin R. Brine, Elena Ciletti and Henrike Lähnemann (eds.), The Sword of Judith: Judith Studies Across the Disciplines. Cambridge, 2010. 40 Devonshire Road, Cambridge, CB1 2BL, United Kingdom. http://www.openbookpublishers.com © 2010 Kevin R. Brine, Elena Ciletti and Henrike Lähnemann. Some rights are reserved. This book is made available under the Creative Commons Attribution-Non-Commercial-No Derivative Works 2.0 UK: England & Wales License. This license allows for copying any part of the work for personal and non-commercial use, providing author attribution is clearly stated. Details of allowances and restrictions are available at: http://www.openbookpublishers.com As with all Open Book Publishers titles, digital material and resources associated with this volume are available from our website: http://www.openbookpublishers.com ISBN Hardback: 978-1-906924-16-4. ISBN Paperback: 978-1-906924-15-7. ISBN Digital (pdf): 978-1-906924-17-1. All paper used by Open Book Publishers is SFI (Sustainable Forestry Initiative), and PEFC (Programme for the Endorsement of Forest Certification Schemes) Certified. Printed in the United Kingdom and United States by Lightning Source for Open Book Publishers. Contents. Introductions. 1.

The Gore Roll of Arms 11

Rhode Island Historical Society Collections, Vol. XXIX, JANUARY, 1936, No. 1. EARLY LOCAL PAPER CURRENCY (see page 10). Issued Quarterly. 68 Waterman Street, Providence, Rhode Island. CONTENTS: Early Paper Currency, Cover and 1; Advertisement of 1678, communicated by Fulmer Mood, 1; A Rhode Island Imprint of 1 73, communicated by Douglas C. McMurtrie, 3; New Publications of Rhode Island Interest, 9; New Members, 10; Notes, 10; The Gore Roll, by Harold Bowditch, 11; Ships' Protests, 30. The Gore Roll of Arms. By Harold Bowditch. Four early manuscript collections of paintings of coats of arms of New England interest are known to be in existence; these are known as the Promptuarium Armorum, the Chute Pedigree, the Miner Pedigree and the Gore Roll of Arms. The Promptuarium Armorum has been fully described by the late Walter Kendall Watkins in an article which appeared in the Boston Globe of 7 February 1915. The author was an officer of the College of Arms: William Crowne, Rouge Dragon, and the period of production lies between the years 1602 and 1616. Crowne came to America and must have brought the book with him, for Mr. Watkins has traced its probable ownership through a number of Boston painters until it is found in the hands of the Gore family. That it served as a source-book for the Gore Roll is clear and it would have been gratifying to have been able to examine it with this in mind; but the condition of the manuscript is now so fragile, the ink having in many places eaten completely through the paper, that the present owner is unwilling to have it subjected to further handling.

The Tricking Hour

The Tricking Hour, by Irene Silt. Foreword by the Clandestine Whores Network. TRIPWIRE PAMPHLET #7, 2020. TRIPWIRE: a journal of poetics. Editor: David Buuck. Assistant editor, design & layout: Kate Robinson. Editorial assistant: Lara Durback. Minister of information: Jenn McCreary. Co-founding editor: Yedda Morrison. Cover images & illustrations by Harriet “Happy” Burbeck (happyburbeck.com). The Tricking Hour was originally published as a monthly column in ANTIGRAVITY from June 2018 to October 2019 under the name Saint Agatha, with the exception of the last column printed here, written under the onslaught of the Covid-19 pandemic and published in April 2020. All rights revert to contributors upon publication. ISSN: 1099-2170. tripwirejournal.com Oakland : 2020. FOREWORD. ANTIGRAVITY began publishing Irene Silt’s “The Tricking Hour” (under the penname Saint Agatha) in the summer of 2018. At the time, New Orleans city officials were doubling down on an ongoing sterilization of the French Quarter. In the backdrop, Airbnb rentals continued devastating poor residents while service workers were taunted on a sanitized “family-friendly” Bourbon Street. It was a multi-agency, anti-vice project targeting unregulated or “seedy” economies, with an emphasis on strip clubs. Police raided multiple clubs and subjected dancers to violent and humiliating searches. They were told this was done to save them from the threat of human trafficking, but there was never a single case of human trafficking discovered. As the cops continued their brutal crackdown, it was not surprising that their motives were extremely racialized, as most clubs with a majority of black dancers were permanently closed.