Section 9. Basic Digital Modulator Theory

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

Discrete Cosine Transform Based Image Fusion Techniques VPS Naidu MSDF Lab, FMCD, National Aerospace Laboratories, Bangalore, INDIA E.Mail: [email protected]

Journal of Communication, Navigation and Signal Processing (January 2012) Vol. 1, No. 1, pp. 35-45. Discrete Cosine Transform based Image Fusion Techniques. VPS Naidu, MSDF Lab, FMCD, National Aerospace Laboratories, Bangalore, INDIA. E.mail: [email protected] Abstract: Six different types of image fusion algorithms based on discrete cosine transform (DCT) were developed and their performance was evaluated. Fusion performance is not good while using the algorithms with block size less than 8x8 and also the block size equivalent to the image size itself. DCTe and DCTmx based image fusion algorithms performed well. These algorithms are very simple and might be suitable for real time applications. Keywords: DCT, Contrast measure, Image fusion. I. INTRODUCTION Of late, different image fusion algorithms have been developed to merge multiple images into a single image that contains all useful information. Pixel averaging of the source images (the images to be fused) is the simplest image fusion technique, and it often produces undesirable side effects in the fused image, including reduced contrast. To overcome these side effects, many researchers have developed multi resolution [1-3], multi scale [4,5] and statistical signal processing [6,7] based image fusion techniques. [...] where $\alpha(k_1) = 1/\sqrt{N_1}$ for $k_1 = 0$ and $\sqrt{2/N_1}$ for $1 \le k_1 \le N_1 - 1$, and $\alpha(k_2) = 1/\sqrt{N_2}$ for $k_2 = 0$ and $\sqrt{2/N_2}$ for $1 \le k_2 \le N_2 - 1$; $k_1$ and $k_2$ are the discrete frequency variables and $(n_1, n_2)$ is the pixel index. Similarly, the 2D inverse discrete cosine transform is defined as: $x(n_1, n_2) = \ldots\,\alpha(k_1)\,\alpha(k_2)\,\ldots$ -

Digital Signals

Technical Information: Digital Signals. Part 1 Fundamentals. Technical Information series: Part 1: Fundamentals; Part 2: Self-operated Regulators; Part 3: Control Valves; Part 4: Communication; Part 5: Building Automation; Part 6: Process Automation. Should you have any further questions or suggestions, please do not hesitate to contact us: SAMSON AG, V74 / Schulung, Weismüllerstraße 3, D-60314 Frankfurt. Phone (+49 69) 4 00 94 67, Telefax (+49 69) 4 00 97 16, E-Mail: [email protected], Internet: http://www.samson.de. Part 1 ⋅ L150EN. Contents: Digital Signals; Range of values and discretization; Bits and bytes in hexadecimal notation; Digital encoding of information; Advantages of digital signal processing; High interference immunity; Short-time and permanent storage; Flexible processing; Various transmission options; Transmission of digital signals; Bit-parallel transmission; Bit-serial transmission; Appendix A1: Additional Literature. Digital Signals: In electronic signal and information processing and transmission, digital technology is increasingly being used because, in various applications, digital signal transmission has many advantages over analog signal transmission. Numerous and very successful applications of digital technology include the continuously growing number of PCs, the communication network ISDN as well as the increasing use of digital control stations (Direct Digital Control: DDC). Unlike analog technology which uses continuous signals, digital technology encodes the information into discrete signal states (Fig. 1). When only two discrete signal states are assigned per digital signal, these signals are termed binary signals. -

3 Characterization of Communication Signals and Systems

3 Characterization of Communication Signals and Systems. 3.1 Representation of Bandpass Signals and Systems. Narrowband communication signals are often transmitted using some type of carrier modulation. The resulting transmit signal $s(t)$ has passband character, i.e., the bandwidth $B$ of its spectrum $S(f) = \mathcal{F}\{s(t)\}$ is much smaller than the carrier frequency $f_c$. [Figure: spectrum $S(f)$ with bands of width $B$ centered at $-f_c$ and $f_c$.] We are interested in a representation for $s(t)$ that is independent of the carrier frequency $f_c$. This will lead us to the so-called equivalent (complex) baseband representation of signals and systems. (Schober: Signal Detection and Estimation) 3.1.1 Equivalent Complex Baseband Representation of Bandpass Signals. Given: real-valued bandpass signal $s(t)$ with spectrum $S(f) = \mathcal{F}\{s(t)\}$. Analytic Signal $s_+(t)$: In our quest to find the equivalent baseband representation of $s(t)$, we first suppress all negative frequencies in $S(f)$, since $S(f) = S^*(-f)$ is valid. The spectrum $S_+(f)$ of the resulting so-called analytic signal $s_+(t)$ is defined as $S_+(f) = \mathcal{F}\{s_+(t)\} = 2\,u(f)\,S(f)$, where $u(f)$ is the unit step function: $u(f) = 0$ for $f < 0$, $u(f) = 1/2$ for $f = 0$, and $u(f) = 1$ for $f > 0$. The analytic signal can be expressed as $s_+(t) = \mathcal{F}^{-1}\{S_+(f)\} = \mathcal{F}^{-1}\{2\,u(f)\,S(f)\} = \mathcal{F}^{-1}\{2\,u(f)\} * \mathcal{F}^{-1}\{S(f)\}$. The inverse Fourier transform of $2\,u(f)$ is given by $\mathcal{F}^{-1}\{2\,u(f)\} = \delta(t) + \frac{j}{\pi t}$. -
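
As a quick sanity check of the definition $S_+(f) = 2\,u(f)\,S(f)$, consider a single cosine at an assumed frequency $f_0 > 0$. The tone and the symbol $f_0$ are illustrative choices, not taken from the excerpt:

```latex
\begin{align*}
s(t)   &= \cos(2\pi f_0 t), &
S(f)   &= \tfrac{1}{2}\,\delta(f - f_0) + \tfrac{1}{2}\,\delta(f + f_0), \\
S_+(f) &= 2\,u(f)\,S(f) = \delta(f - f_0), &
s_+(t) &= e^{j 2\pi f_0 t} = \cos(2\pi f_0 t) + j \sin(2\pi f_0 t).
\end{align*}
```

Only the positive-frequency line survives, and the real part of $s_+(t)$ recovers $s(t)$.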

How I Came up with the Discrete Cosine Transform Nasir Ahmed Electrical and Computer Engineering Department, University of New Mexico, Albuquerque, New Mexico 87131

DIGITAL SIGNAL PROCESSING 1, 4-5 (1991). How I Came Up with the Discrete Cosine Transform. Nasir Ahmed, Electrical and Computer Engineering Department, University of New Mexico, Albuquerque, New Mexico 87131. During the late sixties and early seventies, there was a great deal of research activity related to digital orthogonal transforms and their use for image data compression. As such, there were a large number of transforms being introduced with claims of better performance relative to others transforms. Such comparisons were typically made on a qualitative basis, by viewing a set of “standard” images that had been subjected to data compression using transform coding techniques. At the same time, a number of researchers were doing some excellent work on making comparisons on a quantitative basis. In particular, researchers at the University of Southern California’s Image Processing Institute (Bill Pratt, Harry Andrews, Ali Habibi, and others) and the University of California at Los Angeles (Judea Pearl) played a key role. [...] to study a “cosine transform” using Chebyshev polynomials of the form $T_0(m) = (1/N)^{1/2}$, $m = 1, 2, \ldots, N$, and $T_k(m) = (2/N)^{1/2} \cos\frac{(2m-1)k\pi}{2N}$, $k = 1, 2, \ldots, N$. The motivation for looking into such “cosine functions” was that they closely resembled KLT basis functions for a range of values of the correlation coefficient $\rho$ (in the covariance matrix). Further, this range of values for $\rho$ was relevant to image data pertaining to a variety of applications. Much to my disappointment, NSF did not fund the -

7.3.7 Video Cassette Recorders (VCR) 7.3.8 Video Disk Recorders

7.3.5 Fiber-Optic Cables (FO) 7.3.6 Telephone Company Lines (TELCO) 7.3.7 Video Cassette Recorders (VCR) 7.3.8 Video Disk Recorders 7.4 Transmission Security 8. Consumer Equipment Issues 8.1 Complexity of Receivers 8.2 Receiver Input/Output Characteristics 8.2.1 RF Interface 8.2.2 Baseband Video Interface 8.2.3 Baseband Audio Interface 8.2.4 Interfacing with Ancillary Signals 8.2.5 Receiver Antenna Systems Requirements 8.3 Compatibility with Existing NTSC Consumer Equipment 8.3.1 RF Compatibility 8.3.2 Baseband Video Compatibility 8.3.3 Baseband Audio Compatibility 8.3.4 IDTV Receiver Compatibility 8.4 Allows Multi-Standard Display Devices 9. Other Considerations 9.1 Practicality of Near-Term Technological Implementation 9.2 Long-Term Viability/Rate of Obsolescence 9.3 Upgradability/Extendability 9.4 Studio/Plant Compatibility Section B: EXPLANATORY NOTES OF ATTRIBUTES/SYSTEMS MATRIX Items on the Attributes/System Matrix for which no explanatory note is provided were deemed to be self-explanatory. I. General Description (Proponent) Section I shall be used by a system proponent to define the features of the system being proposed. The features shall be defined and organized under the headings of the following subsections 1 through 4. Section I. General Description (Proponent) shall consist of a description of the proponent system in narrative form, which covers all of the features and characteristics of the system which the proponent wishes to be included in the public record, and which will be used by various groups to analyze and understand the system proposed, and to compare with other proposed systems. -

Reception Performance Improvement of AM/FM Tuner by Digital Signal Processing Technology

Reception performance improvement of AM/FM tuner by digital signal processing technology. Akira Hatakeyama, Osamu Keishima, Kiyotaka Nakagawa, Yoshiaki Inoue, Takehiro Sakai, Hirokazu Matsunaga. Abstract: With developments in digital technology, CDs, MDs, DVDs, HDDs and digital media have become the mainstream of car AV products. In terms of broadcasting media, various types of digital broadcasting have begun in countries all over the world. Thus, there is a demand for smaller and thinner products, in order to enhance radio performance and to achieve consolidation with the above-mentioned digital media in limited space. Due to these circumstances, we are attaining such performance enhancement through digital signal processing for AM/FM IF and beyond, and both tuner miniaturization and lighter products have been realized. The digital signal processing tuner which we will introduce was developed with Freescale Semiconductor, Inc. for the 2005 line model. In this paper, we explain the function outline, characteristics, and main technology involved. 1. Introduction: In recent years, CDs, MDs, DVDs, and digital media have become the mainstream in the car AV market. In terms of broadcast media, with terrestrial digital TV and audio broadcasting, and satellite broadcasting having begun in Japan, while overseas DAB (digital audio broadcasting) is used mainly in Europe and SDARS (satellite digital audio radio service) and IBOC (in band on channel) are used in the United States, digital broadcasting is expected to increase in the future. [...] from IF signals, interference and noise prevention performance have surpassed those of analog systems. 2.2 Goals of digitalization: The following items were the goals in the development of this digital processing platform for radio: ① Improvements in performance (differentiation with other companies through software algorithms) ・Reduction in noise (improvements in AM/FM noise reduction performance, and FM multi-pass perfor- -

Next-Generation Photonic Transport Network Using Digital Signal Processing

Next-Generation Photonic Transport Network Using Digital Signal Processing. Yasuhiko Aoki, Hisao Nakashima, Shoichiro Oda, Paparao Palacharla. Coherent optical-fiber transmission technology using digital signal processing is being actively researched and developed for use in transmitting high-speed signals of the 100-Gb/s class over long distances. It is expected that network capacity can be further expanded by operating a flexible photonic network having high spectral efficiency achieved by applying an optimal modulation format and signal processing algorithm depending on the transmission distance and required bit rate between transmit and receive nodes. A system that uses such adaptive modulation technology should be able to assign in real time a transmission path between a transmitter and receiver. Furthermore, in the research and development stage, optical transmission characteristics for each modulation format and signal processing algorithm to be used by the system should be evaluated under emulated quasi-field conditions. We first discuss how the use of transmitters and receivers supporting multiple modulation formats can affect network capacity. We then introduce an evaluation platform consisting of a coherent receiver based on a field programmable gate array (FPGA) and polarization mode dispersion/polarization dependent loss (PMD/PDL) emulators with a recirculating-loop experimental system. Finally, we report the results of using this platform to evaluate the transmission characteristics of 112-Gb/s dual-polarization, quadrature phase shift keying (DP-QPSK) signals by emulating the factors that degrade signal transmission in a real environment. 1. Introduction: In the field of optical communications [...] amplifiers (which is becoming a limiting factor in system capacity) can be efficiently used. -

Baseband Harmonic Distortions in Single Sideband Transmitter and Receiver System

Baseband Harmonic Distortions in Single Sideband Transmitter and Receiver System. Kang Hsia. Abstract: The telecommunications industry has widely adopted the single sideband (SSB, or complex quadrature) transmitter and receiver system, and one popular implementation of an SSB system achieves image rejection through quadrature component image cancellation. Typically, during SSB system characterization, the baseband fundamental tone and the harmonic distortion products are important parameters besides image and LO feedthrough leakage. To ensure accurate characterization, the actual frequency locations of the harmonic distortion products are critical. While system designers may be tempted to assume that the harmonic distortion products are simply up-converted in the same fashion as the baseband fundamental frequency component, the actual distortion products may have surprising results and show up on a different side of the spectrum. This paper discusses the theory of the SSB system and the actual location of the baseband harmonic distortion products. Introduction: Communications engineers have utilized the SSB transmitter and receiver system because it offers better bandwidth utilization than the double sideband (DSB) transmitter system. The primary cause of bandwidth overhead in the double sideband system is the image component created during the mixing process. Given a data transmission bandwidth of B, the former requires a minimum bandwidth of B whereas the latter requires a minimum bandwidth of 2B. While filtering the image component is one type of SSB implementation, another type of SSB system creates a quadrature component of the signal and ideally cancels out the image through phase cancellation. [Figure 1: Baseband Message Signal (BB) M(t); Local Oscillator (LO) Signal COS(2πFct); Modulated Signal (RF) M(t) COS(2πFct).] -

Baseband Video Testing with Digital Phosphor Oscilloscopes

Application Note: Baseband Video Testing With Digital Phosphor Oscilloscopes. Video signals are complex waveforms comprised of signals representing a picture as well as the timing information needed to display the picture. To capture and measure these complex signals, you need powerful instruments tailored for this application. But, because of the variety of video standards, you also need a general-purpose instrument that can provide accurate information quickly and easily. Finally, to display all of the video waveform details, a fast acquisition technology teamed with an intensity-graded display gives the confidence and insight needed to detect and diagnose problems with the signal. This application note demonstrates the use of a Tektronix TDS 700D-series Digital Phosphor Oscilloscope to make a variety of common baseband video measurements and examines some of the critical measurement issues. Copyright © 1998 Tektronix, Inc. All rights reserved. Video Basics: Video signals come from a number of sources, including cameras, scanners, and graphics terminals. Typically, the baseband video signal begins as three component analog or digital signals representing the three primary color elements: the Red, Green, and Blue (RGB) component signals. [...] for SMPTE systems, etc. The three derived component signals can then be distributed for processing. Processing: In the processing stage, video component signals may be combined to form a single composite video signal (as in NTSC or PAL systems), [...] active portion of the video signal. Finally, the synchronization information is added. Although complex, this composite signal is a single signal that can be carried on a single coaxial cable. Component Video Signals. Component signals have an advantage of simplicity in -

Digital Signal Processing: Theory and Practice, Hardware and Software

AC 2009-959: DIGITAL SIGNAL PROCESSING: THEORY AND PRACTICE, HARDWARE AND SOFTWARE. Wei Pan, Idaho State University. Wei Pan is Assistant Professor and Director of VLSI Laboratory, Electrical Engineering Department, Idaho State University. She has several years of industrial experience including Siemens (project engineering/management). Dr. Pan is an active member of ASEE and IEEE and serves on the membership committee of the IEEE Education Society. S. Hossein Mousavinezhad, Idaho State University. S. Hossein Mousavinezhad is Professor and Chair, Electrical Engineering Department, Idaho State University. Dr. Mousavinezhad is active in ASEE and IEEE and is an ABET program evaluator. Hossein is the founding general chair of the IEEE International Conferences on Electro Information Technology. Kenyon Hart, Idaho State University. Kenyon Hart is Specialist Engineer and Associate Lecturer, Electrical Engineering Department, Idaho State University, Pocatello, Idaho. © American Society for Engineering Education, 2009. Digital Signal Processing, Theory/Practice, HW/SW. Abstract: Digital Signal Processing (DSP) is a course offered by many Electrical and Computer Engineering (ECE) programs. In our school we offer a senior-level, first-year graduate course with both lecture and laboratory sections. Our experience has shown that some students consider the subject matter to be too theoretical, relying heavily on mathematical concepts and abstraction. There are several visible applications of DSP including: cellular communication systems, digital image processing and biomedical signal processing. 
Authors have incorporated many examples utilizing software packages including MATLAB/MATHCAD in the course and also used classroom demonstrations to help students visualize some difficult (but important) concepts such as digital filters and their design, various signal transformations, convolution, difference equations modeling, signals/systems classifications and power spectral estimation as well as optimal filters. -

Digital Baseband Modulation Outline • Later Baseband & Bandpass Waveforms Baseband & Bandpass Waveforms, Modulation

Digital Baseband Modulation. Outline • Later • Baseband & Bandpass Waveforms, Modulation • A Communication System. Dig. Baseband Modulators (Line Coders) • A sequence of bits is modulated into waveforms before transmission • → A digital transmission system consists of: • The modulator is based on: • The symbol mapper takes bits and converts them into symbols ($a_n$); this is done based on a given table • The pulse shaping filter generates the Gaussian pulse or waveform ready to be transmitted (baseband signal). Pulse Amplitude Modulation (PAM). Example: Binary PAM. Example: Quaternary PAM. PAM Randomness • Since the amplitude level is uniquely determined by the k bits of random data it represents, the pulse amplitude during the nth symbol interval ($a_n$) is a discrete random variable • s(t) is a random process because the pulse amplitudes {$a_n$} are discrete random variables assuming values from the set $A_M$ • The bit period $T_b$ is the time required to send a single data bit • $R_b = 1/T_b$ is the equivalent bit rate of the system. PAM: T = symbol period, D = symbol or pulse rate. Example • Amplitude pulse modulation • If binary signaling and the pulse rate is 9600, find the bit rate: M = 2 → k = 1 → bit rate $R_b = 1/T_b = k \cdot D = 9600$ • If quaternary signaling and the pulse rate is 9600, find the bit rate: M = 4 → k = 2 → bit rate $R_b = 1/T_b = k \cdot D = 19200$. Binary Line Coding Techniques • Line coding: mapping of the binary information sequence into the digital signal that enters the baseband channel • Symbol mapping: Unipolar: binary 1 is represented by a +A volts pulse and binary 0 by no pulse during a bit period. Polar: binary 1 is represented by a +A volts pulse and binary 0 by a −A volts pulse. -
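
The relation $R_b = k \cdot D$ (with $k = \log_2 M$ bits per symbol) and the unipolar and polar mappings described in this excerpt can be sketched in a few lines of Python. This is a minimal illustration, not code from the slides: the function names `bit_rate` and `line_code`, and the default amplitude of 1.0 V, are assumptions made for the example.

```python
def bit_rate(pulse_rate, levels):
    """Bit rate Rb = k * D for M-ary PAM, where k = log2(M) bits per symbol."""
    if levels < 2 or levels & (levels - 1) != 0:
        raise ValueError("levels (M) must be a power of two, at least 2")
    bits_per_symbol = levels.bit_length() - 1  # k = log2(M) for a power of two
    return bits_per_symbol * pulse_rate


def line_code(bits, scheme="polar", amplitude=1.0):
    """Map a bit sequence to one pulse amplitude per bit period.

    unipolar: 1 -> +A, 0 -> no pulse (0 V)
    polar:    1 -> +A, 0 -> -A
    """
    if scheme == "unipolar":
        low = 0.0
    elif scheme == "polar":
        low = -amplitude
    else:
        raise ValueError("scheme must be 'unipolar' or 'polar'")
    return [amplitude if b else low for b in bits]


binary_rate = bit_rate(9600, 2)       # binary signaling, k = 1
quaternary_rate = bit_rate(9600, 4)   # quaternary signaling, k = 2
polar = line_code([1, 0, 1, 1], "polar")
unipolar = line_code([1, 0, 1, 1], "unipolar")
print(binary_rate, quaternary_rate, polar, unipolar)
```

For the same pulse rate, doubling the bits per symbol doubles the bit rate, which is why the quaternary case carries twice the data of the binary case.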

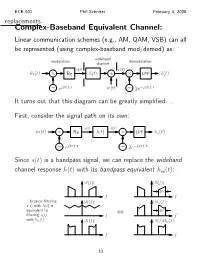

The Complex-Baseband Equivalent Channel Model

ECE-501, Phil Schniter, February 4, 2008. Complex-Baseband Equivalent Channel: Linear communication schemes (e.g., AM, QAM, VSB) can all be represented (using complex-baseband mod/demod) as: [Block diagram: modulation $\tilde{m}(t) \to \times\, e^{j2\pi f_c t} \to \mathrm{Re} \to s(t)$; wideband channel $h(t)$ plus noise $w(t)$ gives $r(t)$; demodulation $\times\, 2e^{-j2\pi f_c t} \to$ LPF $\to \tilde{v}(t)$.] It turns out that this diagram can be greatly simplified. First, consider the signal path on its own: [Block diagram: $\tilde{m}(t) \to \times\, e^{j2\pi f_c t} \to \mathrm{Re} \to s(t) \to h(t) \to x(t) \to \times\, 2e^{-j2\pi f_c t} \to$ LPF $\to \tilde{v}_s(t)$.] Since $s(t)$ is a bandpass signal, we can replace the wideband channel response $h(t)$ with its bandpass equivalent $h_{bp}(t)$, because filtering $s(t)$ with $h(t)$ is equivalent to filtering $s(t)$ with $h_{bp}(t)$: [Figure: $|S(f)|$ with bandwidth $2W$; $|H(f)|$ versus $|H_{bp}(f)|$; $|X(f)| = |S(f)H_{bp}(f)|$.] Then, notice that $[s(t) * h_{bp}(t)]\,2e^{-j2\pi f_c t} = \int s(\tau)\,h_{bp}(t-\tau)\,d\tau \cdot 2e^{-j2\pi f_c t} = \int [s(\tau)\,2e^{-j2\pi f_c \tau}]\,[h_{bp}(t-\tau)\,e^{-j2\pi f_c (t-\tau)}]\,d\tau = [s(t)\,2e^{-j2\pi f_c t}] * [h_{bp}(t)\,e^{-j2\pi f_c t}]$, which means we can rewrite the block diagram as [Block diagram: $\tilde{m}(t) \to \times\, e^{j2\pi f_c t} \to \mathrm{Re} \to s(t) \to \times\, 2e^{-j2\pi f_c t} \to h_{bp}(t)e^{-j2\pi f_c t} \to$ LPF $\to \tilde{v}_s(t)$.] We can now reverse the order of the LPF and $h_{bp}(t)e^{-j2\pi f_c t}$ (since both are LTI systems), giving [Block diagram: $\tilde{m}(t) \to \times\, e^{j2\pi f_c t} \to \mathrm{Re} \to s(t) \to \times\, 2e^{-j2\pi f_c t} \to$ LPF $\to h_{bp}(t)e^{-j2\pi f_c t} \to \tilde{v}_s(t)$.] Since mod/demod is transparent (with synched oscillators), it can be removed, simplifying the block diagram to [Block diagram: $\tilde{m}(t) \to h_{bp}(t)e^{-j2\pi f_c t} \to \tilde{v}_s(t)$.] Now, since $\tilde{m}(t)$ is bandlimited to $W$ Hz, there is no need to model the left component of $H_{bp}(f + f_c) = \mathcal{F}\{h_{bp}(t)e^{-j2\pi f_c t}\}$: [Figure: $|H_{bp}(f)|$ with bands at $\pm f_c$; $|H_{bp}(f + f_c)|$ with components at $-2f_c$ and $0$; $|\tilde{H}(f)|$ supported on $-B$ to $B$.] Replacing $h_{bp}(t)e^{-j2\pi f_c t}$ with the complex-baseband response $\tilde{h}(t)$ gives the “complex-baseband equivalent” signal path: [Block diagram: $\tilde{m}(t) \to \tilde{h}(t) \to \tilde{v}_s(t)$.] The spectrums above show that $h_{bp}(t) = \mathrm{Re}\{\tilde{h}(t)\,2e^{j2\pi f_c t}\}$.
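
The key step in this excerpt, moving the demodulating exponential inside the convolution, can be checked numerically. The sketch below uses a discrete-time analogue (sums instead of integrals) with arbitrary random sequences standing in for $s(t)$ and $h_{bp}(t)$; the helper names `convolve`, `demodulate`, and `shift_down`, the normalized carrier frequency 0.2, and the sequence lengths are all assumptions made for illustration and are not part of the original notes.

```python
import cmath
import random


def convolve(a, b):
    """Full linear convolution of two finite sequences."""
    out = [0j] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for k, bk in enumerate(b):
            out[i + k] += ai * bk
    return out


def demodulate(x, fc):
    """Multiply x[n] by 2*exp(-j*2*pi*fc*n), the demodulator's oscillator."""
    return [2 * xn * cmath.exp(-2j * cmath.pi * fc * n) for n, xn in enumerate(x)]


def shift_down(h, fc):
    """Multiply h[n] by exp(-j*2*pi*fc*n), i.e. form h_bp[n]*exp(-j*2*pi*fc*n)."""
    return [hn * cmath.exp(-2j * cmath.pi * fc * n) for n, hn in enumerate(h)]


random.seed(0)
fc = 0.2  # assumed carrier frequency in cycles per sample
s = [random.uniform(-1, 1) for _ in range(32)]    # stand-in for s(t)
h_bp = [random.uniform(-1, 1) for _ in range(8)]  # stand-in for h_bp(t)

# Left side: filter first, then demodulate.
lhs = demodulate(convolve(s, h_bp), fc)
# Right side: demodulate the signal and frequency-shift the filter, then convolve.
rhs = convolve(demodulate(s, fc), shift_down(h_bp, fc))

max_err = max(abs(a - b) for a, b in zip(lhs, rhs))
print(max_err)
```

The two sides agree up to floating-point rounding because the exponential at output index $n$ factors into a term at index $\tau$ and a term at index $n - \tau$, exactly as in the integral above.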