Intelligent Carpet: Inferring 3D Human Pose from Tactile Signals

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

Pinyin Conversion Project Specifications for Conversion of Name and Series Authority Records October 1, 2000

PINYIN CONVERSION PROJECT SPECIFICATIONS FOR CONVERSION OF NAME AND SERIES AUTHORITY RECORDS OCTOBER 1, 2000 Prepared by the Library of Congress Authorities Conversion Group, February 10, 2000 Annotated with Clarifications from LC March 23, 2000. Contact point: Gail Thornburg Revised, incorporating language suggested by OCLC, as well as adding and changing other sections, April 14, 2000, May 30, 2000, June 20, 2000, June 22, 2000, July 5, 2000, July 27, 2000, August 14, 2000, and September 12, 2000 1 Introduction The conversion, or perhaps better, translation, to PY in our model involves distinct and somewhat arbitrary paths. We employ both specialized dictionaries and strict step-by-step substitution rules for those cases where dictionary substitution proves inadequate. Details of the conversion are given below. This summary seeks to attune the reader to the overall methods and strategy. 1.1 Scope The universe of authority records to be converted (or translated) is determined by scanning headings in the NACO file (i.e., 1xx tags) for character strings matching WG syllables. It is important to understand that this identification plans to, in part, utilize the fixed filed language code in related bibliographic records. The scan is done on a sub-field by sub-field basis for those MARC sub-fields within the heading that could reasonably contain WG strings. Thus, we would scan the heading's sub-field "a" but not sub-field "e" etc. The presence of WG strings in portions of the heading thus acts as a trigger to identify a candidate record for conversion. Once a record has been so identified all other fields that could carry WG data are examined and processed for conversion. -

Chinese Personal Name Disambiguation Based on Vector Space Model

Chinese Personal Name Disambiguation Based on Vector Space Model Qing-hu Fan Hong-ying Zan Yu-mei Chai College of Information Engineering, Yu-xiang Jia Zhengzhou University, Zhengzhou, College of Information Engineering, Henan ,China Zhengzhou University, Zhengzhou, [email protected] Henan ,China {iehyzan, ieymchai, ieyx- jia}@zzu.edu.cn Gui-ling Niu Foreign Languages School, Zhengzhou University, Zhengzhou, Henan ,China [email protected] associate professor at the Department of Me- Abstract chanical and Electrical Engineering, Physics and Electrical and Mechanical Engineering College of Xiamen University, or it may be Peking Uni- versity Founder Group Corp that was established This paper introduces the task of Chinese per- by Peking University. It needs to associate with sonal name disambiguation of the Second context for disambiguating the entity Fang Zheng. CIPS-SIGHAN Joint Conference on Chinese For example, Fang Zheng who is an associate Language Processing (CLP) 2012 that Natural Language Processing Laboratory of professor at Xiamen University can be extracted Zhengzhou University took part in. In this task, with the feature that Xiamen University, Me- we mainly use the Vector Space Model to dis- chanical and Electrical Engineering College or ambiguate Chinese personal name. We extract associate professor, which can eliminate ambigu- different named entity features from diverse ity. names information, and give different weights to various named entity features with the im- 2 Related Research portance. First of all, we classify all the name documents, and then we cluster the documents In the early stages of Named Entity Disambigua- that cannot be mapped to names that have tion, Bagga and Baldwin (1998) use Vector been defined. -

The Later Han Empire (25-220CE) & Its Northwestern Frontier

University of Pennsylvania ScholarlyCommons Publicly Accessible Penn Dissertations 2012 Dynamics of Disintegration: The Later Han Empire (25-220CE) & Its Northwestern Frontier Wai Kit Wicky Tse University of Pennsylvania, [email protected] Follow this and additional works at: https://repository.upenn.edu/edissertations Part of the Asian History Commons, Asian Studies Commons, and the Military History Commons Recommended Citation Tse, Wai Kit Wicky, "Dynamics of Disintegration: The Later Han Empire (25-220CE) & Its Northwestern Frontier" (2012). Publicly Accessible Penn Dissertations. 589. https://repository.upenn.edu/edissertations/589 This paper is posted at ScholarlyCommons. https://repository.upenn.edu/edissertations/589 For more information, please contact [email protected]. Dynamics of Disintegration: The Later Han Empire (25-220CE) & Its Northwestern Frontier Abstract As a frontier region of the Qin-Han (221BCE-220CE) empire, the northwest was a new territory to the Chinese realm. Until the Later Han (25-220CE) times, some portions of the northwestern region had only been part of imperial soil for one hundred years. Its coalescence into the Chinese empire was a product of long-term expansion and conquest, which arguably defined the egionr 's military nature. Furthermore, in the harsh natural environment of the region, only tough people could survive, and unsurprisingly, the region fostered vigorous warriors. Mixed culture and multi-ethnicity featured prominently in this highly militarized frontier society, which contrasted sharply with the imperial center that promoted unified cultural values and stood in the way of a greater degree of transregional integration. As this project shows, it was the northwesterners who went through a process of political peripheralization during the Later Han times played a harbinger role of the disintegration of the empire and eventually led to the breakdown of the early imperial system in Chinese history. -

2017 36Th Chinese Control Conference (CCC 2017)

2017 36th Chinese Control Conference (CCC 2017) Dalian, China 26-28 July 2017 Pages 1-776 IEEE Catalog Number: CFP1740A-POD ISBN: 978-1-5386-2918-5 1/15 Copyright © 2017, Technical Committee on Control Theory, Chinese Association of Automation All Rights Reserved *** This is a print representation of what appears in the IEEE Digital Library. Some format issues inherent in the e-media version may also appear in this print version. IEEE Catalog Number: CFP1740A-POD ISBN (Print-On-Demand): 978-1-5386-2918-5 ISBN (Online): 978-9-8815-6393-4 ISSN: 1934-1768 Additional Copies of This Publication Are Available From: Curran Associates, Inc 57 Morehouse Lane Red Hook, NY 12571 USA Phone: (845) 758-0400 Fax: (845) 758-2633 E-mail: [email protected] Web: www.proceedings.com Proceedings of the 36th Chinese Control Conference, July 26-28, 2017, Dalian, China Contents Systems Theory and Control Theory Robust H∞filter design for continuous-time nonhomogeneous markov jump systems . BIAN Cunkang, HUA Mingang, ZHENG Dandan 28 Continuity of the Polytope Generated by a Set of Matrices . MENG Lingxin, LIN Cong, CAI Xiushan 34 The Unmanned Surface Vehicle Course Tracking Control with Input Saturation . BAI Yiming, ZHAO Yongsheng, FAN Yunsheng 40 Necessary and Sufficient D-stability Condition of Fractional-order Linear Systems . SHAO Ke-yong, ZHOU Lipeng, QIAN Kun, YU Yeqiang, CHEN Feng, ZHENG Shuang 44 A NNDP-TBD Algorithm for Passive Coherent Location . ZHANG Peinan, ZHENG Jian, PAN Jinxing, FENG Songtao, GUO Yunfei 49 A Superimposed Intensity Multi-sensor GM-PHD Filter for Passive Multi-target Tracking . -

Names of Chinese People in Singapore

101 Lodz Papers in Pragmatics 7.1 (2011): 101-133 DOI: 10.2478/v10016-011-0005-6 Lee Cher Leng Department of Chinese Studies, National University of Singapore ETHNOGRAPHY OF SINGAPORE CHINESE NAMES: RACE, RELIGION, AND REPRESENTATION Abstract Singapore Chinese is part of the Chinese Diaspora.This research shows how Singapore Chinese names reflect the Chinese naming tradition of surnames and generation names, as well as Straits Chinese influence. The names also reflect the beliefs and religion of Singapore Chinese. More significantly, a change of identity and representation is reflected in the names of earlier settlers and Singapore Chinese today. This paper aims to show the general naming traditions of Chinese in Singapore as well as a change in ideology and trends due to globalization. Keywords Singapore, Chinese, names, identity, beliefs, globalization. 1. Introduction When parents choose a name for a child, the name necessarily reflects their thoughts and aspirations with regards to the child. These thoughts and aspirations are shaped by the historical, social, cultural or spiritual setting of the time and place they are living in whether or not they are aware of them. Thus, the study of names is an important window through which one could view how these parents prefer their children to be perceived by society at large, according to the identities, roles, values, hierarchies or expectations constructed within a social space. Goodenough explains this culturally driven context of names and naming practices: Department of Chinese Studies, National University of Singapore The Shaw Foundation Building, Block AS7, Level 5 5 Arts Link, Singapore 117570 e-mail: [email protected] 102 Lee Cher Leng Ethnography of Singapore Chinese Names: Race, Religion, and Representation Different naming and address customs necessarily select different things about the self for communication and consequent emphasis. -

Mating-Induced Male Death and Pheromone Toxin-Regulated Androstasis

bioRxiv preprint first posted online Dec. 15, 2015; doi: http://dx.doi.org/10.1101/034181. The copyright holder for this preprint (which was not peer-reviewed) is the author/funder. All rights reserved. No reuse allowed without permission. Shi, Runnels & Murphy – preprint version –www.biorxiv.org Mating-induced Male Death and Pheromone Toxin-regulated Androstasis Cheng Shi, Alexi M. Runnels, and Coleen T. Murphy* Lewis-Sigler Institute for Integrative Genomics and Dept. of Molecular Biology, Princeton University, Princeton, NJ 08544, USA *Correspondence to: [email protected] Abstract How mating affects male lifespan is poorly understood. Using single worm lifespan assays, we discovered that males live significantly shorter after mating in both androdioecious (male and hermaphroditic) and gonochoristic (male and female) Caenorhabditis. Germline-dependent shrinking, glycogen loss, and ectopic expression of vitellogenins contribute to male post-mating lifespan reduction, which is conserved between the sexes. In addition to mating-induced lifespan decrease, worms are subject to killing by male pheromone-dependent toxicity. C. elegans males are the most sensitive, whereas C. remanei are immune, suggesting that males in androdioecious and gonochoristic species utilize male pheromone differently as a toxin or a chemical messenger. Our study reveals two mechanisms involved in male lifespan regulation: germline-dependent shrinking and death is the result of an unavoidable cost of reproduction and is evolutionarily conserved, whereas male pheromone-mediated killing provides a novel mechanism to cull the male population and ensure a return to the self-reproduction mode in androdioecious species. Our work highlights the importance of understanding the shared vs. sex- and species- specific mechanisms that regulate lifespan. -

Rethinking Chinese Kinship in the Han and the Six Dynasties: a Preliminary Observation

part 1 volume xxiii • academia sinica • taiwan • 2010 INSTITUTE OF HISTORY AND PHILOLOGY third series asia major • third series • volume xxiii • part 1 • 2010 rethinking chinese kinship hou xudong 侯旭東 translated and edited by howard l. goodman Rethinking Chinese Kinship in the Han and the Six Dynasties: A Preliminary Observation n the eyes of most sinologists and Chinese scholars generally, even I most everyday Chinese, the dominant social organization during imperial China was patrilineal descent groups (often called PDG; and in Chinese usually “zongzu 宗族”),1 whatever the regional differences between south and north China. Particularly after the systematization of Maurice Freedman in the 1950s and 1960s, this view, as a stereo- type concerning China, has greatly affected the West’s understanding of the Chinese past. Meanwhile, most Chinese also wear the same PDG- focused glasses, even if the background from which they arrive at this view differs from the West’s. Recently like Patricia B. Ebrey, P. Steven Sangren, and James L. Watson have tried to challenge the prevailing idea from diverse perspectives.2 Some have proven that PDG proper did not appear until the Song era (in other words, about the eleventh century). Although they have confirmed that PDG was a somewhat later institution, the actual underlying view remains the same as before. Ebrey and Watson, for example, indicate: “Many basic kinship prin- ciples and practices continued with only minor changes from the Han through the Ch’ing dynasties.”3 In other words, they assume a certain continuity of paternally linked descent before and after the Song, and insist that the Chinese possessed such a tradition at least from the Han 1 This article will use both “PDG” and “zongzu” rather than try to formalize one term or one English translation. -

Download: Brill.Com/Brill-Typeface

From Accelerated Accumulation to Socialist Market Economy in China China Studies Published for the Institute for Chinese Studies, University of Oxford Edited by Micah Muscolino (University of Oxford) VOLUME 38 The titles published in this series are listed at brill.com/chs From Accelerated Accumulation to Socialist Market Economy in China Economic Discourse and Development from 1953 to the Present By Kjeld Erik Brødsgaard and Koen Rutten LEIDEN | BOSTON This is an open access title distributed under the terms of the CC-BY-NC License, which permits any non-commercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited. An electronic version of this book is freely available, thanks to the support of libraries working with Knowledge Unlatched. More information about the initiative can be found at www.knowledgeunlatched.org. Library of Congress Control Number: 2017931917 Typeface for the Latin, Greek, and Cyrillic scripts: “Brill”. See and download: brill.com/brill-typeface. issn 1570-1344 isbn 978-90-04-33008-5 (hardback) isbn 978-90-04-33009-2 (e-book) Copyright 2017 by Kjeld Erik Brødsgaard and Koen Rutten. This work is published by Koninklijke Brill NV. Koninklijke Brill NV incorporates the imprints Brill, Brill Hes & De Graaf, Brill Nijhoff, Brill Rodopi and Hotei Publishing. Koninklijke Brill NV reserves the right to protect the publication against unauthorized use and to authorize dissemination by means of offprints, legitimate photocopies, microform editions, reprints, translations, and secondary information sources, such as abstracting and indexing services including databases. Requests for commercial re-use, use of parts of the publication, and/or translations must be addressed to Koninklijke Brill NV. -

Following the Troops, Carrying Along Our Brushes: Jian'an (196–220 Ad

following the troops: fu and shi Asia Major (2020) 3d ser. Vol. 33.2: 61-91 qiulei hu Following the Troops, Carrying Along Our Brushes: Jian’an (196–220 ad) Fu and Shi Written for Military Campaigns abstract: It is widely accepted that Jian’an-period writing is best understood in tandem with society and political events. Past studies have explored the nature and function of writing, especially under the Cao-Wei regime, and future ones will do so as well. A strong focus should be cast on writing’s relationship with the military. This pa- per argues that Jian’an writings made while following military campaigns created a discourse of cultural conquest that claimed political legitimacy and provided moral justification for military actions. More importantly, they directly participated in the struggle for and the building of a new political power; thus they were effective acts of conquest themselves. Through a close examination of Jian’an views on the signifi- cance of writing vis-à-vis political and moral accomplishments, this paper sees a new identity that literati created for themselves, one that showed their commitment to po- litical and military goals. Literary talent — the writing brush — became a weapon that marked these writers’ place in a world primarily defined by politics and war. keywords: Jian’an literati, discourse of cultural conquest, power of writing, fu about military campaigns, poems on accompanying the army he historical period “Jian’an 建安” (196–220 ad) refers to the last T reign of the last Han 漢 emperor — emperor Xian 献帝 (r. 189– 220). -

A Prosopographical Study of Princesses During the Western and Eastern Han Dynasties

Born into Privilege: A Prosopographical Study of Princesses during the Western and Eastern Han Dynasties A thesis submitted to McGill University in partial fulfillment of the requirements of the degree of Master in Arts McGill University The Department of History and Classical Studies Fei Su 2016 November© CONTENTS Abstract . 1 Acknowledgements. 3 Introduction. .4 Chapter 1. 12 Chapter 2. 35 Chapter 3. 69 Chapter 4. 105 Afterword. 128 Bibliography. 129 Abstract My thesis is a prosopographical study of the princesses of Han times (206 BCE – 220 CE) as a group. A “princess” was a rank conferred on the daughter of an emperor, and, despite numerous studies on Han women, Han princesses as a group have received little scholarly attention. The thesis has four chapters. The first chapter discusses the specific titles awarded to princesses and their ranking system. Drawing both on transmitted documents and the available archaeological evidence, the chapter discusses the sumptuary codes as they applied to the princesses and the extravagant lifestyles of the princesses. Chapter Two discusses the economic underpinnings of the princesses’ existence, and discusses their sources of income. Chapter Three analyzes how marriage practices were re-arranged to fit the exalted status of the Han princesses, and looks at some aspects of the family life of princesses. Lastly, the fourth chapter investigates how historians portray the princesses as a group and probes for the reasons why they were cast in a particular light. Ma thèse se concentre sur une étude des princesses de l'ère de Han (206 AEC (Avant l'Ère Commune) à 220 EC) en tant qu'un groupe. -

Back Matter (PDF)

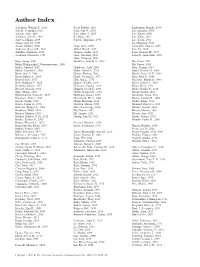

Author Index Ackerman, William E., 2966 Fazal, Fabeha, 3010 Lindemann, Monika, 2839 Adachi, Yoshitaka, 2862 Feng, Carl G., 2875 Liu, Xiangtao, 2909 Aderem, Alan, 2949 Fike, Adam J., 2803 Liu, Xiaoju, 2900 Aitchison, John D., 2949 Fu, Ming, 2852 Liu, Yalan, 2852 Aliyeva, Minara, 2989 Fujieda, Shigeharu, 2791 Liu, Yusen, 2966 Amon, Lynn M., 2949 Lu, Mingfang, 3021 Anand, Gautam, 3000 Geng, Shuo, 2980 Luckenbill, Sara A., 2803 Andersen, Gregers R., 3032 Giebel, Bernd, 2839 Lun, Yu, 2819 Andrikos, Dimitrios, 2839 Gorgens,€ Andre, 2839 Lund, Jennifer M., 2937 Asashima, Hiromitsu, 2785 Goto, Tatsunori, 2862 Lundell, Anna-Carin, 2839 Guan, Xinmeng, 2852 Bajic, Goran, 3032 Guethlein, Lisbeth A., 3064 Ma, Caina, 2909 Balaji, Kithiganahalli Narayanaswamy, 2888 Ma, Junwu, 2909 Barker, Edward, 3043 Habibovic, Aida, 2989 Mair, Florian, 2937 Barley, Timothy J., 2966 Hafler, David A., 2785 Marin, Wesley M., 3064 Batty, Abel J., 2966 Hafner, Markus, 2966 Marsh, Steven G. E., 3064 Bauer, Robert A., 2989 Hagel, Joachim P., 3073 Mast, Fred D., 2949 Bennett, Kyle, 3073 Han, Jing L., 2775 McCarter, Martin D., 3043 Berk, Bradford C., 3010 Hansen, Wiebke, 2839 Miller, Jeffrey S., 3064 Bertolino, Patrick, 2875 Heilmann, Carsten, 2828 Miura, Kenji, 2791 Beyzaie, Niassan, 3064 Heppner, David E., 2989 Miura, Noriko N., 2819 Bhatt, Bharat, 2888 Hoehn, Kenneth B., 2785 Miyao, Kotaro, 2862 Bhattacharyya, Nayan D., 2875 Hoffmann, Daniel, 2839 Morikawa, Taiyo, 2791 Bluestone, Jeffrey, 2769 Hollenbach, Jill A., 3064 Morris, Carolyn R., 2989 Borjini, Nozha, 2819 Homp, Ekaterina, 2839 Muller,€ Klaus, 2828 Bowen, David G., 2875 Hristova, Milena, 2989 Munford, Robert S., 3021 Bowman, Bridget A., 2966 Hsu, Chia George, 3010 Murase, Atsushi, 2862 Bradshaw, Elliot, 2924 Hu, Huimin, 2852 Murata, Makoto, 2862 Brauser, Martina, 2839 Hu, Qinxue, 2852 Murata, Sara, 3053 Bredius, Robbert G. -

Universtiy of California, San Diego

UNIVERSTIY OF CALIFORNIA, SAN DIEGO From Colonial Jewel to Socialist Metropolis: Dalian 1895-1955 A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in History by Christian A. Hess Committee in Charge: Professor Joseph W. Esherick, Co-Chair Professor Paul G. Pickowicz, Co-Chair Professor Weijing Lu Professor Richard Madsen Professor Christena Turner 2006 Copyright Christian A. Hess, 2006 All rights reserved. The Dissertation of Christian A. Hess is approved, and it is acceptable in quality and fonn for publication on microfilm: Co-Chair Co-Chair University of California, San Diego 2006 111 TABLE OF CONTENTS Signature Page……………...…………………………………………………………..iii Table of Contents……………………………………………………………………….iv List of Maps…………………………………………………………………………….vi List of Tables…………………………………………………………………………...vii Abbreviations………………………………………………………………………….viii Acknowledgments………………………………………………………………………ix Vita, Publications, and Fields of Study………………………………………………...xii Abstract………………………………………………………………………………..xiii Introduction ....................................................................................................................1 1. The Rise of Colonial Dalian 1895-1934……………………………………………16 Section One Dalian and Japan’s Wartime Empire, 1932-1945 2. From Colonial Port to Wartime Production City: Dalian, Manchukuo, and Japan’s Wartime Empire……………………………………………………………………75 3. Urban Society in Wartime Dalian………………………………………………...116 Section Two Big Brother is Watching: Rebuilding Dalian Under Soviet