Identification of Raga by Machine Learning with Chromagram

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Towards Automatic Audio Segmentation of Indian Carnatic Music

Friedrich-Alexander-Universität Erlangen-Nürnberg Master Thesis Towards Automatic Audio Segmentation of Indian Carnatic Music submitted by Venkatesh Kulkarni submitted July 29, 2014 Supervisor / Advisor Dr. Balaji Thoshkahna Prof. Dr. Meinard Müller Reviewers Prof. Dr. Meinard Müller International Audio Laboratories Erlangen A Joint Institution of the Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU) and Fraunhofer Institute for Integrated Circuits IIS Declaration I hereby declare in lieu of an oath that I have written this thesis independently and without the use of any aids other than those indicated. Data and concepts taken directly or indirectly from other sources are marked with a citation of the source. This thesis has not previously been submitted, in the same or a similar form, in any procedure for obtaining an academic degree, either in Germany or abroad. Erlangen, July 29, 2014 Venkatesh Kulkarni Acknowledgements I would like to express my gratitude to my supervisor, Dr. Balaji Thoshkahna, whose expertise, understanding and patience added considerably to my learning experience. I appreciate his vast knowledge and skill in many areas (e.g., signal processing, Carnatic music, ethics and interaction with participants). He provided me with direction and technical support, and became more of a friend than a supervisor. A very special thanks goes out to Prof. Dr. Meinard Müller, without whose motivation and encouragement I would not have considered a graduate career in music signal analysis research. Prof. Dr. Meinard Müller is the one professor/teacher who truly made a difference in my life. He was always there to give his valuable and inspiring ideas during my thesis, which motivated me to think like a researcher. -

Fusion Without Confusion Raga Basics Indian

Fusion Without Confusion Raga Basics Indian Rhythm Basics Solkattu, also known as konnakol is the art of performing percussion syllables vocally. It comes from the Carnatic music tradition of South India and is mostly used in conjunction with instrumental music and dance instruction, although it has been widely adopted throughout the world as a modern composition and performance tool. Similarly, the music of North India has its own system of rhythm vocalization that is based on Bols, which are the vocalization of specific sounds that correspond to specific sounds that are made on the drums of North India, most notably the Tabla drums. Like in the south, the bols are used in musical training, as well as composition and performance. In addition, solkattu sounds are often referred to as bols, and the practice of reciting bols in the north is sometimes referred to as solkattu, so the distinction between the two practices is blurred a bit. The exercises and compositions we will discuss contain bols that are found in both North and South India, however they come from the tradition of the North Indian tabla drums. Furthermore, the theoretical aspect of the compositions is distinctly from the Hindustani, (north Indian) tradition. Hence, for the purpose of this presentation, the use of the term Solkattu refers to the broader, more general practice of Indian rhythmic language. South Indian Percussion Mridangam Dolak Kanjira Gattam North Indian Percussion Tabla Baya (a.k.a. Tabla) Pakhawaj Indian Rhythm Terms Tal (also tala, taal, or taala) – The Indian system of rhythm. Tal literally means "clap". -

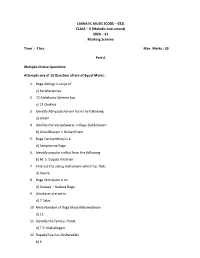

CARNATIC MUSIC (CODE – 032) CLASS – X (Melodic Instrument) 2020 – 21 Marking Scheme

CARNATIC MUSIC (CODE – 032) CLASS – X (Melodic Instrument) 2020 – 21 Marking Scheme Time - 2 hrs. Max. Marks : 30 Part A Multiple Choice Questions: Attempt any 15 questions; all carry equal marks. 1. Raga Abhogi is Janya of a) Kharaharapriya 2. 72 Melakarta Scheme has c) 12 Chakras 3. Identify Abhyasa Ganam from the following d) Gitam 4. Identify the Varjya Swaras in Raga Suddha Saveri b) Gandharam – Nishadam 5. Raga Harikambhoji is a d) Sampoorna Raga 6. Identify the popular violinist from the following b) M. S. Gopalakrishnan 7. Find out the string instrument which has frets d) Veena 8. Raga Mohanam is an d) Audava – Audava Raga 9. Alankaras are set to d) 7 Talas 10. Mela Number of Raga Mayamalavagoula d) 15 11. Identify the famous flutist d) T. R. Mahalingam 12. Rupaka Tala has Akshara Kalas b) 6 13. Identify the composer of Navagraha kritis c) Muthuswami Dikshitar 14. Essential angas of kriti are a) Pallavi – Anupallavi – Charanam b) Pallavi – multiple charanams c) Pallavi – Muktayi Swaram d) Pallavi – Charanam 15. Raga SuddaDeven is Janya of a) Sankarabharanam 16. Composer of the famous Ghana Pancharatna Kritis – identify a) Thyagaraja 17. Find out most important accompanying instrument for a vocal concert b) Mridangam 18. A musical form set to different ragas c) Ragamalika 19. Identify dance form of music b) Tillana 20. Raga Sri Ranjani is Janya of a) Kharaharapriya 21. Find out the popular Veena artist d) S. Balachander Part B Answer any five questions. All questions carry equal marks 5X3 = 15 1. Gitam : Gitams are the simplest musical form. The term “Gita” means song; it is a melodic extension of the raga in which it is composed. -

Tillana Raaga: Bageshri; Taala: Aadi; Composer

Tillana Raaga: Bageshri; Taala: Aadi; Composer: Lalgudi G. Jayaraman Aarohana: Sa Ga2 Ma1 Dha2 Ni2 Sa Avarohana: Sa Ni2 Dha2 Ma1 Pa Dha2 Ga2 Ma1 Ga2 Ri2 Sa SaNiDhaMa .MaPaDha | Ga. .Ma | RiRiSa . || DhaNiSaGa .SaGaMa | Dha. MaDha| NiRi Sa . || DhaNiSaMa .GaRiSa |Ri. NiDha | NiRi Sa . || SaRiNiDha .MaPaDha |Ga . Ma . | RiNiSa . || Sa ..Ni .Dha Ma . |Sa..Ma .Ga | RiNiSa . || Sa ..Ni .Dha Ma~~ . |Sa..Ma .Ga | RiNiSa . || Pallavi tom dhru dhru dheem tadara | tadheem dheem ta na || dhim . dhira | na dhira na Dhridhru| (dhirana: DhaMaNi .. dhirana.: DhaMaGa .) tom dhru dhru dheem tadara | tadheem dheem ta na || dhim . dhira | na dhira na Dhridhru|| (dhirana: MaDha NiSa.. dhirana:DhaMa Ga..) tom dhru dhru dheem tadara | tadheem dheem ta na || (ta:DhaNi na:NiGaRi) dhim . dhira | na dhira na Dhridhru|| (dhirana:NiGaSaSaNi. Dhirana:DhaSaNiNiDha .) tom dhru dhru dheem tadara | tadheem dheem ta na || dhim . dhira | na dhira na Dhridhru|| (dhira:GaMaDhaNi na:GaGaRiSa dhira:NiDha na:Ga..) tom dhru dhru dheem tadana | tadheem dheem ta na || dhim.... Anupallavi SaMa .Ga MaNi . Dha| NiGa .Ri | NiDhaSa . || GaRi .Sa NiMa .Pa | Dha Ga..Ma | RiNi Sa . || naadhru daani tomdhru dhim | ^ta- ka-jha | Nuta dhim || … naadhru daani tomdhru dhim | (Naadru:MaGa, daani:DhaMa, tomdhru:NiDha, dhim: Sa) ^ta- ka-jha | Nuta dhim || (NiDha SaNi RiSa) taJha-Nu~ta dhim jhaNu | (tajha:SaSa Nu~ta: NiSaRiSa dhim:Ni; jha~Nu:MaDhaNi. tadhim . na | ta dhim ta || (tadhim:Dha Ga..;nata dhimta: MNiDha Sa.Sa) tanadheem .tatana dheemta tanadheem |(tanadheemta: DhaNi Ri ..Sa tanadheem: NiRiSa. .Sa tanadheem: NiDhaNi . ) .dheem dheemta | tom dhru dheem (dheem: Sa deemta:Ga.Ma tomdhrudeem:Ri..Ri Sa) .dheem dheem dheemta ton-| (dheem:Dha. -

108 Melodies

SRI SRI HARINAM SANKIRTAN - 108 MELODIES - INDEX CONTENTS PAGE CONTENTS PAGE Preface 1-2 Samant Sarang 37 Indian Classical Music Theory 3-13 Kurubh 38 Harinam Philosophy & Development 14-15 Devagiri 39 FIRST PRAHAR RAGAS (6 A.M. to 9 A.M.) THIRD PRAHAR RAGAS (12 P.M. to 3 P.M.) Vairav 16 Gor Sarang 40 Bengal Vairav 17 Bhimpalasi 41 Ramkali 18 Piloo 42 Bibhas 19 Multani 43 Jogia 20 Dhani 44 Tori 21 Triveni 45 Jaidev 22 Palasi 46 Morning Keertan 23 Hanskinkini 47 Prabhat Bhairav 24 FOURTH PRAHAR RAGAS (3 P.M. to 6 P.M.) Gunkali 25 Kalingara 26 Traditional Keertan of Bengal 48-49 Dhanasari 50 SECOND PRAHAR RAGAS (9 A.M. to 12 P.M.) Manohar 51 Deva Gandhar 27 Ragasri 52 Bhairavi 28 Puravi 53 Mishra Bhairavi 29 Malsri 54 Asavari 30 Malvi 55 Jonpuri 31 Sritank 56 Durga (Bilawal That) 32 Hans Narayani 57 Gandhari 33 FIFTH PRAHAR RAGAS (6 P.M. to 9 P.M.) Mwa Bilawal 34 Bilawal 35 Yaman 58 Brindawani Sarang 36 Yaman Kalyan 59 Hem Kalyan 60 Purw Kalyan 61 Hindol Bahar 94 Bhupah 62 Arana Bahar 95 Puria 63 Kedar 64 SEVENTH PRAHAR RAGAS (12 A.M. to 3 A.M.) Jaldhar Kedar 65 Malgunji 96 Marwa 66 Darbari Kanra 97 Chhaya 67 Basant Bahar 98 Khamaj 68 Deepak 99 Narayani 69 Basant 100 Durga (Khamaj Thhat) 70 Gauri 101 Tilak Kamod 71 Chitra Gauri 102 Hindol 72 Shivaranjini 103 Misra Khamaj 73 Jaitsri 104 Nata 74 Dhawalsri 105 Hamir 75 Paraj 106 Mall Gaura 107 SIXTH PRAHAR RAGAS (9 P.M. -

Rāga Recognition Based on Pitch Distribution Methods

Rāga Recognition based on Pitch Distribution Methods Gopala K. Koduri (a), Sankalp Gulati (a), Preeti Rao (b), Xavier Serra (a). (a) Music Technology Group, Universitat Pompeu Fabra, Barcelona, Spain. (b) Department of Electrical Engg., Indian Institute of Technology Bombay, Mumbai, India. Abstract Rāga forms the melodic framework for most of the music of the Indian subcontinent. Thus automatic rāga recognition is a fundamental step in the computational modeling of the Indian art-music traditions. In this work, we investigate the properties of rāga and the natural processes by which people identify it. We bring together and discuss the previous computational approaches to rāga recognition correlating them with human techniques, in both Karṇāṭak (south Indian) and Hindustānī (north Indian) music traditions. The approaches which are based on first-order pitch distributions are further evaluated on a large comprehensive dataset to understand their merits and limitations. We outline the possible short and mid-term future directions in this line of work. Keywords: Indian art music, Rāga recognition, Pitch-distribution analysis 1. Introduction There are two prominent art-music traditions in the Indian subcontinent: Karṇāṭak in the Indian peninsular and Hindustānī in north India, Pakistan and Bangladesh. Both are orally transmitted from one generation to another, and are heterophonic in nature (Viswanathan & Allen (2004)). Rāga and tāla are their fundamental melodic and rhythmic frameworks respectively. However, there are ample differences between the two traditions in their conceptualization (see Narmada (2001) for a comparative study of rāgas). There is an abundance of musicological resources that thoroughly discuss the melodic and rhythmic concepts (Viswanathan & Allen (2004); Clayton (2000); Sambamoorthy (1998); Bagchee (1998)). -
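
As a rough illustration of what a first-order pitch distribution is, the sketch below folds a sequence of pitch estimates into one octave relative to the tonic and counts how often each pitch class occurs. It is not the method of the excerpted paper, whose details are not shown here. The function name `pitch_class_distribution`, the 12-bin resolution, and the convention that unvoiced frames are marked with 0 Hz are all assumptions made for this example.

```python
import math


def pitch_class_distribution(f0_hz, tonic_hz, bins_per_octave=12):
    """Return a normalized octave-folded histogram of pitch values.

    f0_hz: iterable of per-frame pitch estimates in Hz (assumed: 0 = unvoiced).
    tonic_hz: tonic frequency in Hz, used as the reference for bin 0.
    """
    bin_width = 1200.0 / bins_per_octave  # bin width in cents
    counts = [0] * bins_per_octave
    for f in f0_hz:
        if f <= 0:
            continue  # skip unvoiced or invalid frames
        # Distance from the tonic in cents (1200 cents = one octave).
        cents = 1200.0 * math.log2(f / tonic_hz)
        # Quantize to the nearest bin and fold all octaves together.
        counts[round(cents / bin_width) % bins_per_octave] += 1
    total = sum(counts)
    if total == 0:
        return [0.0] * bins_per_octave
    return [c / total for c in counts]


# Example: frames on the tonic, its octave, and roughly a fifth above it.
dist = pitch_class_distribution([220.0, 440.0, 330.0, 0.0], tonic_hz=220.0)
```

Two recordings could then be compared by a distance between their histograms. A finer `bins_per_octave` would keep more intonation detail, at the cost of needing more pitch frames per bin.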

The Thaat-Ragas of North Indian Classical Music: the Basic Attempt to Perform Dr

The Thaat-Ragas of North Indian Classical Music: The Basic Attempt to Perform Dr. Sujata Roy Manna ABSTRACT Indian classical music is divided into two streams, Hindustani music and Carnatic music. Though the rules and regulations of the Indian Shastras provide both bindings and liberties for the musicians, one can use one’s innovations while performing. As the Indian music requires to be learnt under the guidance of Master or Guru, scriptural guidelines are never sufficient for a learner. Keywords: Raga, Thaat, Music, Performing, Alapa. There are two streams of Classical music of India – the North Indian i.e., Hindustani music and the South Indian i.e., Carnatic music. The vast area of Indian Classical music consists upon the foremost criterion – the origin of the Ragas, named the Thaats. In the Carnatic system, there are 10 Thaats. Let us look upon the origin of the 10 Thaats as well as their Thaat-ragas (i.e., the Ragas named according to their origin). The Indian Shastras throw light on the rules and regulations, the nature of Ragas, process of performing these, and the liberty and bindings of the Ragas while [...] the Ragas are to be performed with the basic help of their Thaats. Hence, we may compare the Thaats with the skeleton of creature, whereas the body can be compared with the Raga. The names of the 10 (ten) Thaats of North Indian Classical Music system i.e., Hindustani music are as follows: Sl. / Thaat / Ragas: 01. Vilabal: Vilabal, Alhaiya–Vilaval, Bihag, Durga, Deshkar, Shankara etc. 02. Kalyan: Yaman, Bhupali, Hameer, Kedar, Kamod etc. 03. Khamaj: Khamaj, Desh, Tilakkamod, Tilang, Jayjayanti / Jayjayvanti etc. -

CARNATIC MUSIC (VOCAL) (Code No

CARNATIC MUSIC (VOCAL) (code no. 031) Class IX (2020-21) Theory Marks: 30 Time: 2 Hours No. of periods I. Brief history of Carnatic Music with special reference to Saint 10 Purandara dasa, Annamacharya, Bhadrachala Ramadasa, Saint Tyagaraja, Muthuswamy Dikshitar, Syama Sastry and Swati Tirunal. II. Definition of the following terms: 12 Sangeetam, Nada, raga, laya, Tala, Dhatu, Mathu, Sruti, Alankara, Arohana, Avarohana, Graha (Sama, Atita, Anagata), Svara – Prakruti & Vikriti Svaras, Poorvanga & Uttaranga, Sthayi, vadi, Samvadi, Anuvadi & Vivadi Svara – Amsa, Nyasa and Jeeva. III. Brief raga lakshanas of Mohanam, Hamsadhvani, Malahari, 12 Sankarabharanam, Mayamalavagoula, Bilahari, khamas, Kharaharapriya, Kalyani, Abhogi & Hindolam. IV. Brief knowledge about the musical forms. 8 Geetam, Svarajati, Svara Exercises, Alankaras, Varnam, Jatisvaram, Kirtana & Kriti. V. Description of following Talas: 8 Adi – Single & Double Kalai, Roopakam, Chapu – Tisra, Misra & Khanda and Sooladi Sapta Talas. VI. Notation of Gitams in Roopaka and Triputa Tala 10 Total Periods 60 CARNATIC MUSIC (VOCAL) Format of written examination for class IX Theory Marks: 30 Time: 2 Hours 1 Section I Six MCQ based on all the above mentioned topic 6 Marks 2 Section II Notation of Gitams in above mentioned Tala 6 Marks Writing of minimum Two Raga-lakshana from prescribed list in 6 Marks the syllabus. 3 Section III 6 marks Biography Musical Forms 4 Section IV 6 marks Description of talas, illustrating with examples Short notes of minimum 05 technical terms from the topic II. Definition of any two from the following terms (Sangeetam, Nada, Raga,Sruti, Alankara, Svara) Total Marks 30 marks Note: - Examiners should set atleast seven questions in total and the students should answer five questions from them, including two Essays, two short answer and short notes questions based on technical terms will be compulsory. -

Ragamala World Music 2017 Program Book

RAGAMALA 2017: WELCOME SCHEDULE Ragamala 2017 A CELEBRATION OF INDIAN CLASSICAL MUSIC + DANCE For the 5th year, spanning 15 hours and featuring dozens of performers, Ragamala offers a FRIDAY, SEPTEMBER 8 - SATURDAY, SEPTEMBER 9 Presented in collaboration with Kalapriya Foundation, Center for Indian Performing Arts jaw-dropping assortment of Indian classical music from some of its greatest and emerging Ragamala: A Celebration of Indian Classical Music + Dance A CELEBRATION OF INDIAN CLASSICAL MUSIC + DANCE practitioners. Ragamala functions as the perfect, immersive introduction to the classical music Chicago Cultural Center, Preston Bradley Hall FRIDAY, SEPTEMBER 8 - SATURDAY, SEPTEMBER 9 of India. Not only are both the music of the north (Hindustani) and the south (Carnatic) + Millennium Park, Great Lawn FRIDAY, SEPTEMBER 8 - SATURDAY, SEPTEMBER 9 - 6:30PM-10AM Chicago Cultural Center represented, but listeners will also get the rare chance to hear ragas performed at the time of 78 E. Washington Street, 3rd Floor Preston Bradley Hall, 3rd Floor day they were originally composed for—a facet of the tradition lost in the west. 6:30pm-10am 78 E Washington Street Presented in collaboration with Kalapriya Foundation, Center for Indian Performing Arts 6:30pm-6:30am The word “raga” has a Sanskrit origin, meaning "coloring or dyeing". The term also connotes an Evolution of Songs from Indian Films: 1930-2017 emotional state referring to a "feeling, affection, desire, interest, joy or delight", particularly 6:30-7:30pm SATURDAY, SEPTEMBER 9 related to passion, love, or sympathy for a subject or something. In the context of ancient Anjali Ray, vocals with Rishi Thakkar, tabla and Anis Chandnani, harmonium Yoga + Gong Meditation Indian music it is often devotional and used as a prayer. -

CET Syllabus of Record

CET Syllabus of Record Program: UW in India Course Title: Tutorial: Studio and Performing Arts: Sitar (North Indian Classical Music Instrument) Course Equivalencies: TBD Total Hours: 45 contact hours Recommended Credits: 3 Suggested Cross Listings: TBD Language of Instruction: Hindi/English Prerequisites/Requirements: None Description The Tutorial course seeks to bring a hands-on, applied dimension to students’ academic interests and requires substantial, sustained contact with and immersion into the host community. Though there are associated readings and writing assignments that are required for the completion of the course and the granting of academic credit, the tutorial focuses on the benefits of structured experiential learning. The Sitar Tutorial focuses on one of India’s major classical musical instruments, most notably made famous on the international scene by Pandit Ravi Shankar. Although the exact origin of the instrument remains debated among scholars, the tradition can be traced back several centuries, perhaps as early as the thirteenth century. The sitar combines elements of Indian and Persian stringed instruments, and flourished in the courts of the Mughals. Over the years, the sitar has gone through several transformations, which have resulted in variations in both sound and style. Today, the distinct sound of the sitar—characterized by its drone strings and resonator—has become emblematic of Hindustani (North Indian) classical music. Students of the Sitar Tutorial in Varanasi will have the unique opportunity to participate in the guru- shishya (student) tradition of pedagogy, while at the same time working with program faculty to review contemporary literature related to the field and to discuss themes related to experiential learning more broadly. -

A Non Linear Approach Towards Automated Emotion Analysis in Hindustani Music

A NON LINEAR APPROACH TOWARDS AUTOMATED EMOTION ANALYSIS IN HINDUSTANI MUSIC Shankha Sanyal* (1,2), Archi Banerjee (1,2), Tarit Guhathakurata (1), Ranjan Sengupta (1) and Dipak Ghosh (1). (1) Sir C.V. Raman Centre for Physics and Music, (2) Department of Physics, Jadavpur University, Kolkata: 700032 ABSTRACT: In North Indian Classical Music, raga forms the basic structure over which individual improvisation is performed by an artist based on his/her creativity. The Alap is the opening section of a typical Hindustani Music (HM) performance, where the raga is introduced and the paths of its development are revealed using all the notes used in that particular raga and allowed transitions between them with proper distribution over time. In India, corresponding to each raga, several emotional flavors are listed, namely erotic love, pathetic, devotional, comic, horrific, repugnant, heroic, fantastic, furious, peaceful. The detection of emotional cues from Hindustani Classical music is a demanding task due to the inherent ambiguity present in the different ragas, which makes it difficult to identify any particular emotion from a certain raga. In this study we took the help of a high resolution mathematical microscope (MFDFA or Multifractal Detrended Fluctuation Analysis) to procure information about the inherent complexities and time series fluctuations that constitute an acoustic signal. With the help of this technique, the 3 min alap portions of six conventional ragas of Hindustani classical music, namely Darbari Kanada, Yaman, Mian ki Malhar, Durga, Jay Jayanti and Hamsadhwani, played on three different musical instruments, were analyzed. The results are discussed in detail. Keywords: Emotion Categorization, Hindustani Classical Music, Multifractal Analysis; Complexity INTRODUCTION: Musical instruments are often thought of as linear harmonic systems, and a first-order description of their operation can indeed be given on this basis. -

(Rajashekar Shastry , Dr.R.Manivannan, Dr. A.Kanaka

I J C T A, 9(28) 2016, pp. 197-204 © International Science Press A SURVEY ON TECHNIQUES OF EXTRACTING CHARACTERISTICS, COMPONENTS OF A RAGA AND AUTOMATIC RAGA IDENTIFICATION SYSTEM Rajashekar Shastry*, R. Manivannan** and A. Kanaka Durga*** Abstract: This paper gives a brief survey of several techniques and approaches which are applied for Raga Identification of Indian classical music, in particular to the problem of analyzing an existing music signal using signal processing and machine learning techniques to extract and classify a wide variety of information like tonic frequency, arohana and avaroha patterns, vaadi and samvaadi, pakad and chalan of a raga, etc. A Raga identification system may be important for different kinds of application such as automatic annotation of swaras in the raga, a correctness detection system, and a raga training system, to mention a few. In this paper we presented various properties of raga, the way a trained person identifies the raga, and the past raga identification techniques. Keywords : Indian classical music; raga; signal processing; machine learning. 1. INTRODUCTION Music can be a social activity, but it can also be a very spiritual experience. Ancient Indians were deeply impressed by the spiritual power of music, and it is out of this that Indian classical music was born. So, for those who take it seriously, classical music involves single-minded devotion and lifelong commitment. But the thing about music is that you can take it as seriously or as casually as you like. It is a rewarding experience, no matter how deep or shallow your involvement.