Towards Automatic Audio Segmentation of Indian Carnatic Music

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Famous Indian Classical Musicians and Vocalists Free Static GK E-Book

oliveboard FREE eBooks FAMOUS INDIAN CLASSICAL MUSICIANS & VOCALISTS For All Banking and Government Exams Famous Indian Classical Musicians and Vocalists Free static GK e-book Current Affairs and General Awareness section is one of the most important and high scoring sections of any competitive exam like SBI PO, SSC-CGL, IBPS Clerk, IBPS SO, etc. Therefore, we regularly provide you with Free Static GK and Current Affairs related E-books for your preparation. In this section, questions related to Famous Indian Classical Musicians and Vocalists have been asked. Hence it becomes very important for all the candidates to be aware about all the Famous Indian Classical Musicians and Vocalists. In all the Bank and Government exams, every mark counts and even 1 mark can be the difference between success and failure. Therefore, to help you get these important marks we have created a Free E-book on Famous Indian Classical Musicians and Vocalists. The list of all the Famous Indian Classical Musicians and Vocalists is given in the following pages of this Free E-book on Famous Indian Classical Musicians and Vocalists. Sample Questions - Q. Ustad Allah Rakha played which of the following Musical Instrument? (a) Sitar (b) Sarod (c) Surbahar (d) Tabla Answer: Option D – Tabla Q. L. Subramaniam is famous for playing _________. (a) Saxophone (b) Violin (c) Mridangam (d) Flute Answer: Option B – Violin Famous Indian Classical Musicians and Vocalists Free static GK e-book Famous Indian Classical Musicians and Vocalists. Name Instrument Music Style Hindustani -

Note Staff Symbol Carnatic Name Hindustani Name Chakra Sa C

The Indian Scale & Comparison with Western Staff Notations: The vowel 'a' is pronounced as 'a' in 'father', the vowel 'i' as 'ee' in 'feet', in the Sa-Ri-Ga Scale. In this scale, a high note (swara) will be indicated by a dot over it and a note in the lower octave will be indicated by a dot under it.

| Note | Staff Symbol | Carnatic Name | Hindustani Name | Chakra |
|---|---|---|---|---|
| Sa | C - Natural | Shadaj | Shadaj | MulAadhar (Base of spine) |
| ri | D - Flat | Shuddha Rishabh | Komal ri | Swadhishthan (Genitals) |
| Ri | D - Natural | Chatushruti Rishabh | Shudhh Ri | |
| ga | E - Flat | Sadharana Gandhara | Komal ga | Manipur (Navel & Solar Plexus) |
| Ga | E - Natural | Antara Gandhara | Shudhh Ga | |
| Ma | F - Natural | Shudhh Madhyam | Shudhh Madhyam | Anahat (Heart) |
| ma | F - Sharp | Prati Madhyam | Tivra Madhyam | |
| Pa | G - Natural | Panchama | Panchama | Vishudhh (Throat) |
| dha | A - Flat | Shuddha Dhaivata | Komal Dhaivat | Ajna (Third eye) |
| Dha | A - Natural | Chatushruti Dhaivata | Shudhh Dhaivat | |
| ni | B - Flat | Kaisiki Nishada | Komal Nishad | |
| Ni | B - Natural | Kakali Nishada | Shudhh Nishad | Sahsaar (Crown of head) |
| Så | C - Natural | Shadaja | Shadaj | |

Property of www.SarodSitar.com Copyright © 2010 Not to be copied or shared without permission.

Short description of Few Popular Raags (Alphabetical) :: Sanskrut (Sanskrit) pronunciation is Raag and NOT Raga

| Name of Raag (Karnataki Resemblance) | Aroha / Avroha | Timing | Mood | Details |
|---|---|---|---|---|
| Ahir Bhairav (Chakravaka) | Sa ri Ga Ma Pa Ga Ma Dha ni Så / Så ni Dha Pa Ma Ga ri Sa | Morning | serious, devotional | It is an old raag obtained by the combination of two raags, Ahiri & Bhairav. It belongs to the Bhairav Thaat. Its first part (poorvang) has the Bhairav ang and the second part has kafi or harpriya ang. |
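The swara-to-staff comparison in this snippet can be read as a lookup table. The sketch below is only an illustration: it assumes Sa is fixed at C, as the snippet's table does, and the names `SWARA_TO_WESTERN` and `to_western` are hypothetical, not taken from the source.

```python
# Lookup from swara symbol to (Western note, semitones above Sa).
# Assumption: Sa = C, as in the comparison table; lower-case symbols
# are the flat (komal) forms, and "ma" is the sharp (tivra/prati) Ma.
SWARA_TO_WESTERN = {
    "Sa": ("C", 0),
    "ri": ("Db", 1),
    "Ri": ("D", 2),
    "ga": ("Eb", 3),
    "Ga": ("E", 4),
    "Ma": ("F", 5),
    "ma": ("F#", 6),
    "Pa": ("G", 7),
    "dha": ("Ab", 8),
    "Dha": ("A", 9),
    "ni": ("Bb", 10),
    "Ni": ("B", 11),
    "Så": ("C", 12),  # Sa of the upper octave
}


def to_western(swaras):
    """Translate a list of swara symbols into Western note names."""
    return [SWARA_TO_WESTERN[s][0] for s in swaras]


def to_semitones(swaras):
    """Translate a list of swara symbols into semitone offsets above Sa."""
    return [SWARA_TO_WESTERN[s][1] for s in swaras]


# The avroha quoted for Ahir Bhairav in the snippet.
avroha = "Så ni Dha Pa Ma Ga ri Sa".split()
print(to_western(avroha))
print(to_semitones(avroha))
```

The keys are case-sensitive on purpose, since the snippet distinguishes `ri` from `Ri` and `ma` from `Ma` by case alone.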

Syllabus for Post Graduate Programme in Music

Appendix to U.O.No.Acad/C1/13058/2020, dated 10.12.2020 KANNUR UNIVERSITY SYLLABUS FOR POST GRADUATE PROGRAMME IN MUSIC UNDER CHOICE BASED CREDIT SEMESTER SYSTEM FROM 2020 ADMISSION NAME OF THE DEPARTMENT: DEPARTMENT OF MUSIC NAME OF THE PROGRAMME: MA MUSIC DEPARTMENT OF MUSIC KANNUR UNIVERSITY SWAMI ANANDA THEERTHA CAMPUS EDAT PO, PAYYANUR PIN: 670327 ABOUT THE DEPARTMENT. The Department of Music, Kannur University, was established in 2002. The Department offers the MA Music programme and a PhD. So far 17 batches of students have passed out from this Department. This Department is the only institution offering a PG programme in Music in the Malabar area of Kerala. The Department functions at Swami Ananda Theertha Campus, Kannur University, Edat, Payyanur. The Department has a well-equipped library with more than 1800 books and subscriptions to over 10 journals on music. We have a good digital collection of recordings of well-known musicians. The Department also possesses a variety of musical instruments such as Tambura, Veena, Violin, Mridangam, Keyboard, Harmonium etc. The Department is active in research on various facets of music. So far 7 scholars have been awarded a PhD and two PhD theses are under evaluation. The Department of Music conducts seminars, lecture programmes and music concerts. It has conducted seminars and workshops in collaboration with Indira Gandhi National Centre for the Arts-New Delhi, All India Radio, Zonal Cultural Centre under the Ministry of Culture, Government of India, and Folklore Academy, Kannur.

Fusion Without Confusion Raga Basics Indian

Fusion Without Confusion Raga Basics Indian Rhythm Basics Solkattu, also known as konnakol is the art of performing percussion syllables vocally. It comes from the Carnatic music tradition of South India and is mostly used in conjunction with instrumental music and dance instruction, although it has been widely adopted throughout the world as a modern composition and performance tool. Similarly, the music of North India has its own system of rhythm vocalization that is based on Bols, which are the vocalization of specific sounds that correspond to specific sounds that are made on the drums of North India, most notably the Tabla drums. Like in the south, the bols are used in musical training, as well as composition and performance. In addition, solkattu sounds are often referred to as bols, and the practice of reciting bols in the north is sometimes referred to as solkattu, so the distinction between the two practices is blurred a bit. The exercises and compositions we will discuss contain bols that are found in both North and South India, however they come from the tradition of the North Indian tabla drums. Furthermore, the theoretical aspect of the compositions is distinctly from the Hindustani, (north Indian) tradition. Hence, for the purpose of this presentation, the use of the term Solkattu refers to the broader, more general practice of Indian rhythmic language. South Indian Percussion Mridangam Dolak Kanjira Gattam North Indian Percussion Tabla Baya (a.k.a. Tabla) Pakhawaj Indian Rhythm Terms Tal (also tala, taal, or taala) – The Indian system of rhythm. Tal literally means "clap". -

M.A-Music-Vocal-Syllabus.Pdf

BANGALORE UNIVERSITY NAAC ACCREDITED WITH ‘A’ GRADE P.G. DEPARTMENT OF PERFORMING ARTS JNANABHARATHI, BANGALORE-560056 MUSIC SYLLABUS – M.A KARNATAKA MUSIC VOCAL AND INSTRUMENTAL (VEENA, VIOLIN AND FLUTE) CBCS SYSTEM- 2014 Dr. B.M. Jayashree. Professor of Music Chairperson, BOS (PG) M.A. KARNATAKA MUSIC VOCAL AND INSTRUMENTAL (VEENA, VIOLIN AND FLUTE) Semester scheme syllabus CBCS Scheme of Examination, continuous Evaluation and other Requirements: 1. ELIGIBILITY: A Degree with music vocal/instrumental as one of the optional subject with at least 50% in the concerned optional subject an merit internal among these applicant Of A Graduate with minimum of 50% marks secured in the senior grade examination in music (vocal/instrumental) conducted by secondary education board of Karnataka OR a graduate with a minimum of 50% marks secured in PG Diploma or 2 years diploma or 4 year certificate course in vocal/instrumental music conducted either by any recognized Universities of any state out side Karnataka or central institution/Universities Any degree with: a) Any certificate course in music b) All India Radio/Doordarshan gradation c) Any diploma in music or five years of learning certificate by any veteran musician d) Entrance test (practical) is compulsory for admission. 2. M.A. MUSIC course consists of four semesters. 3. First semester will have three theory paper (core), three practical papers (core) and one practical paper (soft core). 4. Second semester will have three theory papers (core), two practical papers (core), one is project work/Dissertation practical paper and one is practical paper (soft core) 5. Third semester will have two theory papers (core), three practical papers (core) and one is open Elective Practical paper 6. -

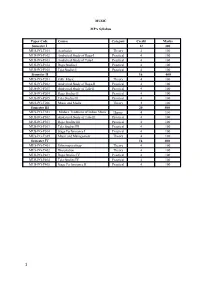

MUSIC MPA Syllabus Paper Code Course Category Credit Marks

MUSIC MPA Syllabus

| Paper Code | Course | Category | Credit | Marks |
|---|---|---|---|---|
| Semester I | | | 12 | 300 |
| MUS-PG-T101 | Aesthetics | Theory | 4 | 100 |
| MUS-PG-P102 | Analytical Study of Raga-I | Practical | 4 | 100 |
| MUS-PG-P103 | Analytical Study of Tala-I | Practical | 4 | 100 |
| MUS-PG-P104 | Raga Studies I | Practical | 4 | 100 |
| MUS-PG-P105 | Tala Studies I | Practical | 4 | 100 |
| Semester II | | | 16 | 400 |
| MUS-PG-T201 | Folk Music | Theory | 4 | 100 |
| MUS-PG-P202 | Analytical Study of Raga-II | Practical | 4 | 100 |
| MUS-PG-P203 | Analytical Study of Tala-II | Practical | 4 | 100 |
| MUS-PG-P204 | Raga Studies II | Practical | 4 | 100 |
| MUS-PG-P205 | Tala Studies II | Practical | 4 | 100 |
| MUS-PG-T206 | Music and Media | Theory | 4 | 100 |
| Semester III | | | 20 | 500 |
| MUS-PG-T301 | Modern Traditions of Indian Music | Theory | 4 | 100 |
| MUS-PG-P302 | Analytical Study of Tala-III | Practical | 4 | 100 |
| MUS-PG-P303 | Raga Studies III | Practical | 4 | 100 |
| MUS-PG-P303 | Tala Studies III | Practical | 4 | 100 |
| MUS-PG-P304 | Stage Performance I | Practical | 4 | 100 |
| MUS-PG-T305 | Music and Management | Theory | 4 | 100 |
| Semester IV | | | 16 | 400 |
| MUS-PG-T401 | Ethnomusicology | Theory | 4 | 100 |
| MUS-PG-T402 | Dissertation | Theory | 4 | 100 |
| MUS-PG-P403 | Raga Studies IV | Practical | 4 | 100 |
| MUS-PG-P404 | Tala Studies IV | Practical | 4 | 100 |
| MUS-PG-P405 | Stage Performance II | Practical | 4 | 100 |

Semester I MUS-PG-CT101: Aesthetic. Course Detail: The course will primarily provide an overview of music and allied issues like Aesthetics. The discussions will range from Rasa and its varieties [According to Bharat, Abhinavagupta, and others], thoughts of Rabindranath Tagore and Abanindranath Tagore on music to aesthetics and general comparative.

UCLA Electronic Theses and Dissertations

UCLA UCLA Electronic Theses and Dissertations Title Performative Geographies: Trans-Local Mobilities and Spatial Politics of Dance Across & Beyond the Early Modern Coromandel Permalink https://escholarship.org/uc/item/90b9h1rs Author Sriram, Pallavi Publication Date 2017 Peer reviewed|Thesis/dissertation eScholarship.org Powered by the California Digital Library University of California UNIVERSITY OF CALIFORNIA Los Angeles Performative Geographies: Trans-Local Mobilities and Spatial Politics of Dance Across & Beyond the Early Modern Coromandel A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Culture and Performance by Pallavi Sriram 2017 Copyright by Pallavi Sriram 2017 ABSTRACT OF DISSERTATION Performative Geographies: Trans-Local Mobilities and Spatial Politics of Dance Across & Beyond the Early Modern Coromandel by Pallavi Sriram Doctor of Philosophy in Culture and Performance University of California, Los Angeles, 2017 Professor Janet M. O’Shea, Chair This dissertation presents a critical examination of dance and multiple movements across the Coromandel in a pivotal period: the long eighteenth century. On the eve of British colonialism, this period was one of profound political and economic shifts; new princely states and ruling elite defined themselves in the wake of Mughal expansion and decline, weakening Nayak states in the south, the emergence of several European trading companies as political stakeholders and a series of fiscal crises. In the midst of this rapidly changing landscape, new performance paradigms emerged defined by hybrid repertoires, focus on structure and contingent relationships to space and place – giving rise to what we understand today as classical south Indian dance. 
Far from stable or isolated tradition fixed in space and place, I argue that dance as choreographic practice, theorization and representation were central to the negotiation of changing geopolitics, urban milieus and individual mobility.

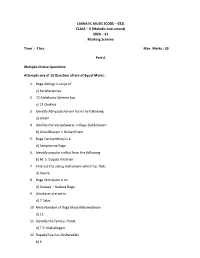

CARNATIC MUSIC (CODE – 032) CLASS – X (Melodic Instrument) 2020 – 21 Marking Scheme

CARNATIC MUSIC (CODE – 032) CLASS – X (Melodic Instrument) 2020 – 21 Marking Scheme

Time: 2 hrs. Max. Marks: 30

Part A. Multiple Choice Questions: attempt any 15 questions; all carry equal marks.

1. Raga Abhogi is a Janya of: a) Karaharapriya
2. The 72 Melakarta Scheme has: c) 12 Chakras
3. Identify the Abhyasa Ghanam from the following: d) Gitam
4. Identify the Varjya Swaras in Raga Suddha Saveri: b) Gandharam – Nishadam
5. Raga Harikambhoji is a: d) Sampoorna Raga
6. Identify the popular violinist from the following: b) M. S. Gopala Krishnan
7. Find out the string instrument which has frets: d) Veena
8. Raga Mohanam is an: d) Audava – Audava Raga
9. Alankaras are set to: d) 7 Talas
10. Mela number of Raga Mayamalavagoula: d) 15
11. Identify the famous flutist: d) T. R. Mahalingam
12. Rupaka Tala has Akshara Kalas: b) 6
13. Identify the composer of the Navagraha kritis: c) Muthuswami Dikshitar
14. Essential angas of a kriti are: a) Pallavi – Anupallavi – Charanam b) Pallavi – multiple charanams c) Pallavi – Mukkyi Swaram d) Pallavi – Charanam
15. Raga SuddaDeven is a Janya of: a) Sankarabharanam
16. Identify the composer of the famous Ghana Pancharatna Kritis: a) Thyagaraja
17. Find out the most important accompanying instrument for a vocal concert: b) Mridangam
18. A musical form set to different ragas: c) Ragamalika
19. Identify the dance form of music: b) Tillana
20. Raga Sri Ranjani is a Janya of: a) Karaharapriya
21. Find out the popular Veena artist: d) S. Bala Chander

Part B. Answer any five questions. All questions carry equal marks. 5 × 3 = 15

1. Gitam: Gitams are the simplest musical form. The term “Gita” means song; it is a melodic extension of the raga in which it is composed.
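Two answers in this marking scheme (72 melakartas in 12 chakras, and Mayamalavagoula as mela number 15) imply six melakartas per chakra. As a hedged illustration of that arithmetic, the sketch below locates a mela number within the scheme; the function name `melakarta_position` is hypothetical and the code is not part of the marking scheme.

```python
MELAKARTA_COUNT = 72   # total melakartas, from question 2
CHAKRA_COUNT = 12      # chakras in the scheme, from question 2
PER_CHAKRA = MELAKARTA_COUNT // CHAKRA_COUNT  # 6 melakartas in each chakra


def melakarta_position(mela_number):
    """Return (chakra, position within chakra), both counted from 1."""
    if not 1 <= mela_number <= MELAKARTA_COUNT:
        raise ValueError("mela number must be between 1 and 72")
    chakra, index = divmod(mela_number - 1, PER_CHAKRA)
    return chakra + 1, index + 1


# Mela number 15 (question 10) falls in the third chakra, third position.
print(melakarta_position(15))
```

Counting from 1 rather than 0 matches how mela numbers are quoted in the questions.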

Sanjay Subrahmanyan……………………………Revathi Subramony & Sanjana Narayanan

Table of Contents

- From the Publications & Outreach Committee (Lakshmi Radhakrishnan)
- From the President’s Desk (Balaji Raghothaman)
- Connect with SRUTI
- SRUTI at 30 – Some reflections (Mani, Dinakar, Uma & Balaji)
- A Mellifluous Ode to Devi by Sikkil Gurucharan & Anil Srinivasan (Kamakshi Mallikarjun)
- Concert – Sanjay Subrahmanyan (Revathi Subramony & Sanjana Narayanan)
- A Grand Violin Trio Concert (Sneha Ramesh Mani)
- What is in a raga’s identity – label or the notes?? (P. Swaminathan)
- Saayujya by T.M.Krishna & Priyadarsini Govind (Toni Shapiro-Phim)
- And the Oscar goes to …… Kaapi – Bombay Jayashree Concert (P. Sivakumar)
- Saarangi – Harsh Narayan (Allyn Miner)
- Lec-Dem on Bharat Ratna MS Subbulakshmi by RK Shriramkumar (Prabhakar Chitrapu)
- Bala Bhavam – Bharatanatyam by Rumya Venkateshwaran (Roopa Nayak)
- Dr. M. Balamurali

Tillana Raaga: Bageshri; Taala: Aadi; Composer

Tillana Raaga: Bageshri; Taala: Aadi; Composer: Lalgudi G. Jayaraman Aarohana: Sa Ga2 Ma1 Dha2 Ni2 Sa Avarohana: Sa Ni2 Dha2 Ma1 Pa Dha2 Ga2 Ma1 Ga2 Ri2 Sa SaNiDhaMa .MaPaDha | Ga. .Ma | RiRiSa . || DhaNiSaGa .SaGaMa | Dha. MaDha| NiRi Sa . || DhaNiSaMa .GaRiSa |Ri. NiDha | NiRi Sa . || SaRiNiDha .MaPaDha |Ga . Ma . | RiNiSa . || Sa ..Ni .Dha Ma . |Sa..Ma .Ga | RiNiSa . || Sa ..Ni .Dha Ma~~ . |Sa..Ma .Ga | RiNiSa . || Pallavi tom dhru dhru dheem tadara | tadheem dheem ta na || dhim . dhira | na dhira na Dhridhru| (dhirana: DhaMaNi .. dhirana.: DhaMaGa .) tom dhru dhru dheem tadara | tadheem dheem ta na || dhim . dhira | na dhira na Dhridhru|| (dhirana: MaDha NiSa.. dhirana:DhaMa Ga..) tom dhru dhru dheem tadara | tadheem dheem ta na || (ta:DhaNi na:NiGaRi) dhim . dhira | na dhira na Dhridhru|| (dhirana:NiGaSaSaNi. Dhirana:DhaSaNiNiDha .) tom dhru dhru dheem tadara | tadheem dheem ta na || dhim . dhira | na dhira na Dhridhru|| (dhira:GaMaDhaNi na:GaGaRiSa dhira:NiDha na:Ga..) tom dhru dhru dheem tadana | tadheem dheem ta na || dhim.... Anupallavi SaMa .Ga MaNi . Dha| NiGa .Ri | NiDhaSa . || GaRi .Sa NiMa .Pa | Dha Ga..Ma | RiNi Sa . || naadhru daani tomdhru dhim | ^ta- ka-jha | Nuta dhim || … naadhru daani tomdhru dhim | (Naadru:MaGa, daani:DhaMa, tomdhru:NiDha, dhim: Sa) ^ta- ka-jha | Nuta dhim || (NiDha SaNi RiSa) taJha-Nu~ta dhim jhaNu | (tajha:SaSa Nu~ta: NiSaRiSa dhim:Ni; jha~Nu:MaDhaNi. tadhim . na | ta dhim ta || (tadhim:Dha Ga..;nata dhimta: MNiDha Sa.Sa) tanadheem .tatana dheemta tanadheem |(tanadheemta: DhaNi Ri ..Sa tanadheem: NiRiSa. .Sa tanadheem: NiDhaNi . ) .dheem dheemta | tom dhru dheem (dheem: Sa deemta:Ga.Ma tomdhrudeem:Ri..Ri Sa) .dheem dheem dheemta ton-| (dheem:Dha. -

List of Empanelled Artist

INDIAN COUNCIL FOR CULTURAL RELATIONS EMPANELMENT ARTISTS S.No. Name of Artist/Group State Date of Genre Contact Details Year of Current Last Cooling off Social Media Presence Birth Empanelment Category/ Sponsorsred Over Level by ICCR Yes/No 1 Ananda Shankar Jayant Telangana 27-09-1961 Bharatanatyam Tel: +91-40-23548384 2007 Outstanding Yes https://www.youtube.com/watch?v=vwH8YJH4iVY Cell: +91-9848016039 September 2004- https://www.youtube.com/watch?v=Vrts4yX0NOQ [email protected] San Jose, Panama, https://www.youtube.com/watch?v=YDwKHb4F4tk [email protected] Tegucigalpa, https://www.youtube.com/watch?v=SIh4lOqFa7o Guatemala City, https://www.youtube.com/watch?v=MiOhl5brqYc Quito & Argentina https://www.youtube.com/watch?v=COv7medCkW8 2 Bali Vyjayantimala Tamilnadu 13-08-1936 Bharatanatyam Tel: +91-44-24993433 Outstanding No Yes https://www.youtube.com/watch?v=wbT7vkbpkx4 +91-44-24992667 https://www.youtube.com/watch?v=zKvILzX5mX4 [email protected] https://www.youtube.com/watch?v=kyQAisJKlVs https://www.youtube.com/watch?v=q6S7GLiZtYQ https://www.youtube.com/watch?v=WBPKiWdEtHI 3 Sucheta Bhide Maharashtra 06-12-1948 Bharatanatyam Cell: +91-8605953615 Outstanding 24 June – 18 July, Yes https://www.youtube.com/watch?v=WTj_D-q-oGM suchetachapekar@hotmail 2015 Brazil (TG) https://www.youtube.com/watch?v=UOhzx_npilY .com https://www.youtube.com/watch?v=SgXsRIOFIQ0 https://www.youtube.com/watch?v=lSepFLNVelI 4 C.V.Chandershekar Tamilnadu 12-05-1935 Bharatanatyam Tel: +91-44- 24522797 1998 Outstanding 13 – 17 July 2017- No https://www.youtube.com/watch?v=Ec4OrzIwnWQ -

The Journal the Music Academy

THE JOURNAL OF THE MUSIC ACADEMY MADRAS. A QUARTERLY DEVOTED TO THE ADVANCEMENT OF THE SCIENCE AND ART OF MUSIC. Vol. XXXI, 1960, Parts I-IV.

“I dwell not in Vaikuntha, nor in the hearts of Yogins, nor in the Sun; where my Bhaktas sing, there be I, Narada!”

EDITED BY V. RAGHAVAN, M.A., PH.D. 1960. PUBLISHED BY THE MUSIC ACADEMY, MADRAS, 115-E, MOWBRAY’S ROAD, MADRAS-14. Annual Subscription: Inland Rs. 4. Foreign 8 sh. Post paid.

ADVERTISEMENT CHARGES

| Position | Full Page | Half Page |
|---|---|---|
| Cover pages: Back (outside) | Rs. 25 | |
| Cover pages: Front (inside) | Rs. 20 | Rs. 11 |
| Cover pages: Back (inside) | Rs. 20 | Rs. 11 |
| Inside pages: 1st page (after cover) | Rs. 18 | Rs. 10 |
| Inside pages: Other pages (each) | Rs. 15 | Rs. 9 |

Preference will be given to advertisers of musical instruments and books and other artistic wares. Special position and special rates on application.

CONTENTS

- The XXXIIIrd Madras Music Conference, 1959, Official Report
- Gramas and Musical Intervals, by S. Ramanathan
- The Historical development of Prabandha Giti, by Swami Prajnanananda
- Significant use of Srutis in North Indian Ragas, by Robindralal Roy
- Gamakas in Hindusthani Music, by Pt. Ratanjankar
- New Trends in American Dancing, by Clifford Jones
- Untempered Intonation in the West, by H. Boatwright
- Three Dance Styles of Assam, by Maheshwar Neog
- History of Indian Music as gleaned through Technical terms, Idioms and Usages, by G.