Reducing Faulty Executions of Distributed Systems

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Arguments for an End-Middle-End Internet

CHASING EME: ARGUMENTS FOR AN END-MIDDLE-END INTERNET A Dissertation Presented to the Faculty of the Graduate School of Cornell University in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy by Saikat Guha August 2009 © 2009 Saikat Guha ALL RIGHTS RESERVED CHASING EME: ARGUMENTS FOR AN END-MIDDLE-END INTERNET Saikat Guha, Ph.D. Cornell University 2009 Connection establishment in the Internet has remained unchanged from its original design in the 1970s: first, the path between the communicating endpoints is assumed to always be open. It is assumed that an endpoint can reach any other endpoint by simply sending a packet addressed to the destination. This assumption is no longer borne out in practice: Network Address Translators (NATs) prevent all hosts from being addressed, firewalls prevent all packets from being delivered, and middleboxes transparently intercept packets without endpoint knowledge. Second, the Internet strives to deliver all packets addressed to a destination regardless of whether the packet is ultimately desired by the destination or not. Denial of Service (DoS) attacks are therefore commonplace, and the Internet remains vulnerable to flash worms. This thesis presents the End-Middle-End (EME) requirements for connection establishment that the modern Internet should satisfy, and explores the design space of a signaling-based architecture that meets these requirements with minimal changes to the existing Internet. In so doing, this thesis proposes solutions to three real-world problems. First, it focuses on the problem of TCP NAT Traversal, where endpoints behind their respective NATs today cannot establish a direct TCP connection with each other due to default NAT behavior.

Democratizing Content Distribution

Democratizing content distribution Michael J. Freedman New York University Primary work in collaboration with: Martin Casado, Eric Freudenthal, Karthik Lakshminarayanan, David Mazières Additional work in collaboration with: Siddhartha Annapureddy, Hari Balakrishnan, Dan Boneh, Nick Feamster, Scott Garriss, Yuval Ishai, Michael Kaminsky, Brad Karp, Max Krohn, Nick McKeown, Kobbi Nissim, Benny Pinkas, Omer Reingold, Kevin Shanahan, Scott Shenker, Ion Stoica, and Mythili Vutukuru Overloading content publishers Feb 3, 2004: Google linked banner to “julia fractals” Users clicked onto University of Western Australia web site …University’s network link overloaded, web server taken down temporarily… Adding insult to injury… Next day: Slashdot story about Google overloading site …UWA site goes down again Insufficient server resources [Figure: many browsers contacting one origin server] Many clients want content Server has insufficient resources Solving the problem requires more resources Serving large audiences possible… Where do their resources come from? Must consider two types of content separately • Static • Dynamic Static content uses most bandwidth Dynamic HTML: 19.6 KB Static content: 6.2 MB 1 flash movie 5 style sheets 18 images 3 scripts Serving large audiences possible… How do they serve static content? Content distribution networks (CDNs) Centralized CDNs Static, manual deployment Centrally managed Implications: Trusted infrastructure Costs scale linearly Not solved for little guy [Figure: many browsers contacting one origin server] Problem: Didn’t anticipate sudden load spike (flash crowd) Wouldn’t want to pay / couldn’t afford costs Leveraging cooperative resources Many people want content Many willing to mirror content e.g., software mirrors, file sharing, open proxies, etc.

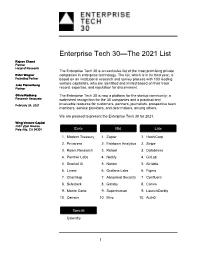

Enterprise Tech 30—The 2021 List

Rajeev Chand, Partner, Head of Research; Peter Wagner, Founding Partner; Jake Flomenberg, Partner; Olivia Rodberg, Research Associate. February 24, 2021. Wing Venture Capital, 480 Lytton Avenue, Palo Alto, CA 94301.

The Enterprise Tech 30 is an exclusive list of the most promising private companies in enterprise technology. The list, which is in its third year, is based on an institutional research and survey process with 103 leading venture capitalists, who are identified and invited based on their track record, expertise, and reputation for discernment. The Enterprise Tech 30 is now a platform for the startup community: a watershed recognition for the 30 companies and a practical and invaluable resource for customers, partners, journalists, prospective team members, service providers, and deal makers, among others. We are pleased to present the Enterprise Tech 30 for 2021.

Early: 1. Modern Treasury, 2. Privacera, 3. Roam Research, 4. Panther Labs, 5. Snorkel AI, 6. Linear, 7. ChartHop, 8. Substack, 9. Monte Carlo, 10. Census

Mid: 1. Zapier, 2. Fishtown Analytics, 3. Retool, 4. Netlify, 5. Notion, 6. Grafana Labs, 7. Abnormal Security, 8. Gatsby, 9. Superhuman, 10. Miro

Late: 1. HashiCorp, 2. Stripe, 3. Databricks, 4. GitLab, 5. Airtable, 6. Figma, 7. Confluent, 8. Canva, 9. LaunchDarkly, 10. Auth0

Special: Calendly

The Curious Case of Calendly This year’s Enterprise Tech 30 has 31 companies rather than 30 due to the “curious case” of Calendly. Calendly, a meeting scheduling company, was categorized as Early-Stage when the ET30 voting process started on January 11 as the company had raised $550,000.

Managing Large-Scale, Distributed Systems Research Experiments with Control-Flows Tomasz Buchert

Managing large-scale, distributed systems research experiments with control-flows Tomasz Buchert To cite this version: Tomasz Buchert. Managing large-scale, distributed systems research experiments with control-flows. Distributed, Parallel, and Cluster Computing [cs.DC]. Université de Lorraine, 2016. English. tel-01273964 HAL Id: tel-01273964 https://tel.archives-ouvertes.fr/tel-01273964 Submitted on 15 Feb 2016 HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. École doctorale IAEM Lorraine MANAGING LARGE-SCALE, DISTRIBUTED SYSTEMS RESEARCH EXPERIMENTS WITH CONTROL-FLOWS Thesis presented and publicly defended on 6 January 2016 for the degree of Doctorat de l’Université de Lorraine (computer science) by Tomasz Buchert. Jury: Reviewers: Christian Pérez, Research Director, Inria, LIP, Lyon; Eric Eide, Research assistant professor, Univ. of Utah. Examiners: François Charoy, Professor, Université de Lorraine; Olivier Richard, Associate Professor, Univ. Joseph Fourier, Grenoble. Thesis advisors: Lucas Nussbaum, Associate Professor, Université de Lorraine; Jens Gustedt, Research Director, Inria, ICUBE, Strasbourg. Laboratoire Lorrain de Recherche en Informatique et ses Applications – UMR 7503 ABSTRACT (ENGLISH) Keywords: distributed systems, large-scale experiments, experiment control, business processes, experiment provenance Running experiments on modern systems such as supercomputers, cloud infrastructures or P2P networks has become very complex, both technically and methodologically.

Software-Defined Networking: Improving Security for Enterprise

Software-defined Networking: Improving Security for Enterprise and Home Networks by Curtis R. Taylor A Dissertation Submitted to the Faculty of the WORCESTER POLYTECHNIC INSTITUTE In partial fulfillment of the requirements for the Degree of Doctor of Philosophy in Computer Science by May 2017 APPROVED: Professor Craig A. Shue, Dissertation Advisor Professor Craig E. Wills, Head of Department Professor Mark Claypool, Committee Member Professor Thomas Eisenbarth, Committee Member Doctor Nathanael Paul, External Committee Member Abstract In enterprise networks, all aspects of the network, such as placement of security devices and performance, must be carefully considered. Even with forethought, network operators are ultimately unaware of intra-subnet traffic. The inability to monitor intra-subnet traffic leads to blind spots in the network where compromised hosts have unfettered access to the network for spreading and reconnaissance. While network security middleboxes help to address compromises, they are limited in only seeing a subset of all network traffic that traverses routed infrastructure, which is where middleboxes are frequently deployed. Furthermore, traditional middleboxes are inherently limited to network-level information when making security decisions. Software-defined networking (SDN) is a networking paradigm that allows logically centralized control of network switches and routers. SDN can help address visibility concerns while providing the benefits of a centralized network control platform, but traditional switch-based SDN leads to concerns of scalability and is ultimately limited in that only network-level information is available to the controller. This dissertation addresses these SDN limitations in the enterprise by pushing the SDN functionality to the end-hosts. In doing so, we address scalability concerns and provide network operators with better situational awareness by incorporating system-level and graphical user interface (GUI) context into network information handled by the controller.

A Defense Framework Against Denial-Of-Service in Computer Networks

A DEFENSE FRAMEWORK AGAINST DENIAL-OF-SERVICE IN COMPUTER NETWORKS by Sherif Khattab M.Sc. Computer Science, University of Pittsburgh, USA, 2004 B.Sc. Computer Engineering, Cairo University, Egypt, 1998 Submitted to the Graduate Faculty of the Department of Computer Science in partial fulfillment of the requirements for the degree of Doctor of Philosophy University of Pittsburgh 2008 UNIVERSITY OF PITTSBURGH DEPARTMENT OF COMPUTER SCIENCE This dissertation was presented by Sherif Khattab It was defended on June 25th, 2008 and approved by Prof. Rami Melhem, Department of Computer Science Prof. Daniel Mossé, Department of Computer Science Prof. Taieb Znati, Department of Computer Science Prof. Prashant Krishnamurthy, School of Information Sciences Dissertation Advisors: Prof. Rami Melhem, Department of Computer Science, Prof. Daniel Mossé, Department of Computer Science A DEFENSE FRAMEWORK AGAINST DENIAL-OF-SERVICE IN COMPUTER NETWORKS Sherif Khattab, PhD University of Pittsburgh, 2008 Denial-of-Service (DoS) is a computer security problem that poses a serious challenge to trustworthiness of services deployed over computer networks. The aim of DoS attacks is to make services unavailable to legitimate users, and current network architectures allow easy-to-launch, hard-to-stop DoS attacks. Particularly challenging are the service-level DoS attacks, whereby the victim service is flooded with legitimate-like requests, and the jamming attack, in which wireless communication is blocked by malicious radio interference. These attacks are overwhelming even for massively-resourced services, and effective and efficient defenses are highly needed. This work contributes a novel defense framework, which I call dodging, against service-level DoS and wireless jamming.

DISSERTATION DOCTEUR DE L’UNIVERSITÉ DU LUXEMBOURG EN INFORMATIQUE Diego KREUTZ Dissertation Defence Committee

PhD-FSTM-2020-52 The Faculty of Sciences, Technology and Medicine DISSERTATION Defence held on 15/09/2020 in Luxembourg to obtain the degree of DOCTEUR DE L’UNIVERSITÉ DU LUXEMBOURG EN INFORMATIQUE by Diego KREUTZ Born on 29 November 1979 in Santa Rosa (Brazil) LOGICALLY CENTRALIZED SECURITY FOR SOFTWARE-DEFINED NETWORKING Dissertation defence committee Dr Paulo Esteves-Veríssimo, dissertation supervisor Professor, Université du Luxembourg Dr Björn Ottersten, Chairman Professor, Université du Luxembourg Dr Radu State, Vice Chairman Associate Professor, Université du Luxembourg Dr Jennifer Rexford Professor, Princeton University Dr Sandra Scott-Hayward, Lecturer, Queen’s University Belfast Acknowledgements I am very proud of and grateful to my supervisors Paulo Esteves-Veríssimo and Fernando M. V. Ramos because I have learned so much with them. One of the few things I am sure of is my eternal debt with them. I am (arguably) sure I am a better researcher now because of them. In fact, I am still learning everyday something new with them. Paulo was also an awesome sort of coach in my personal life as well. He is an impressively smart and knowledgeable person. I have to say, in some ways, he is indeed better than a regular doctor. I know that (probably) just me and him will truly understand the meaning of this statement. I had one of the most interesting and productive times after I met Jiangshan Yu. He is a very smart, easy going and inspiring researcher and person. Because of him, I started to better understand and to have joy in designing security protocols. JY inspired me in different ways. I consider myself lucky to have met him.

From Ethane to SDN and Beyond

From Ethane to SDN and Beyond Martín Casado (Andreessen Horowitz), Nick McKeown (Stanford University), Scott Shenker (University of California, Berkeley) This article is an editorial note submitted to CCR. It has NOT been peer reviewed. The authors take full responsibility for this article’s technical content. Comments can be posted through CCR Online. ABSTRACT We briefly describe the history behind the Ethane paper and its ultimate evolution into SDN and beyond. CCS CONCEPTS • Networks → Network architectures; Network types; KEYWORDS Software Defined Networks (SDN) 1 THE ETHANE STORY SDN is often described as a revolutionary architectural approach to building networks; after all, it was named and first discussed in the context of research. ... and expensive, and internally they were based on old engineering practices and poorly defined APIs. Yet, most customers only used a handful of these features. One approach would have been to completely redesign the router hardware and software around cleaner APIs, better abstractions, and modern software practices. But time-to-market pressures meant they couldn’t start over with a simpler design. And while in other industries startups can enter the market with more efficient approaches, the barrier to entry in the router business had been made so tall that there was little chance for significant innovation. Instead, the router vendors continued to fight problems of reliability and security brought on by overwhelming complexity. The research community, sensing the frustration and struggling to get new ideas adopted, labeled the Internet as “ossified” and unable to change. In response, they started research programs like NewArch [7], GENI

SV Angel - RC Current Investments As of 11/22/2011

SV Angel - RC Current Investments as of 11/22/2011

| Sector | Company | Description | Founder(s) | City | Investment Date | Other Investors |
|---|---|---|---|---|---|---|
| LBS/Mobile | Ntro (nProgress) | Service for meeting new people based on location and interests | Josh Resnick | LA | Mar 03 2011 | Initial Capital, Baroda Ventures, WGI, Jarl Mohn, Angels |
| LBS/Mobile | foursquare | Location-based mobile app with gaming elements | Dennis Crowley, Naveen Selvadurai | NY | Sep 29 2009 | Andreessen Horowitz, Union Square Ventures, OATV |
| LBS/Mobile | App.net | Mobile photo sharing platform | Dalton Caldwell | SF | Feb 03 2010 | Accel Partners, Morgenthaler, Gideon Yu, Alex Zubillaga |
| LBS/Mobile | Jawbone *via Advisor | High-end bluetooth audio accessories | Alexander Asseily, Hosain Rahman | SF | | Andreessen Horowitz, Khosla Ventures, Sequoia Capital |
| LBS/Mobile | Lovely | Marketplace/search for real estate renters | Blake Pierson | SF | Apr 29 2010 | Founder Collective, Keith Rabois, Ben Ling, Alex Zubillaga |
| LBS/Mobile | One | Mobile LBS app to connect with people around you based on common interests | Cory Levy, Michael Callahan | SF | Mar 17 2011 | CRV, True Ventures, General Catalyst, Keith Rabois |
| LBS/Mobile | PhoneTell | Intuitive word search dialing, engaging mobile users in dynamic phone call interactions | Wendell Brown, Steve Larson | SF | Nov 19 2009 | Angels |
| LBS/Mobile | RollCall | Short form party planning allowing users to share plans with friends | Chuck Lam, Ibrahim Okuyucu | SF | Jun 10 2010 | Deep Nishar, Paul Buchheit, XG Ventures |
| LBS/Mobile | SimpleGeo | Geo and alternate reality platform for apps | Joe Stump, Matt Galligan | SF | Nov 25 2009 | First Round Capital, Redpoint |

Contents

Contents: ACM Awards Reception and Banquet, June 2018; Introduction; A.M. Turing Award; ACM Prize in Computing; ACM Charles P. “Chuck” Thacker Breakthrough in Computing Award; ACM – AAAI Allen Newell Award; Software System Award; Grace Murray Hopper Award; Paris Kanellakis Theory and Practice Award; Karl V. Karlstrom Outstanding Educator Award; Eugene L. Lawler Award for Humanitarian Contributions within Computer Science and Informatics; Distinguished Service Award; ACM Athena Lecturer Award; Outstanding Contribution

Using All Networks Around Us

USING ALL NETWORKS AROUND US A DISSERTATION SUBMITTED TO THE DEPARTMENT OF ELECTRICAL ENGINEERING AND THE COMMITTEE ON GRADUATE STUDIES OF STANFORD UNIVERSITY IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY Kok-Kiong Yap March 2013 © Copyright by Kok-Kiong Yap 2013 All Rights Reserved The very tool of fairness is sufficient for systematic unfairness. Abstract Our smartphones are increasingly becoming loaded with more applications and equipped with more network interfaces: 3G, 4G, WiFi. The exponential increase in applications for mobile devices has powered unprecedented growth for mobile networks. Mobile operating system and mobile network infrastructure are struggling to cope with this growth. In this thesis, I advocate that we use all of the networks around us. Our smartphones are already equipped with multiple radios that can connect us to multiple networks at the same time. By using those connections, we can open up tremendous capacity and coverage to better serve users, applications, and network operators alike. If done right, this will provide mobile users with seamless connectivity, faster connections, even lower charges and smaller energy footprints. To do so, we must first overcome several technical challenges that I will be describing in this dissertation, along with their proposed solutions. 1. I design a client network stack that allows the applications to migrate flows from one network to another, aggregates bandwidth across multiple networks to achieve faster connections and provides applications with flexible control over interface choices. I also present a prototype of this client network stack, called Hercules, to demonstrate its viability and usefulness.