Complete Paper

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


A DATA-ORIENTED NETWORK ARCHITECTURE Doctoral Dissertation

TKK Dissertations 140 Espoo 2008 A DATA-ORIENTED NETWORK ARCHITECTURE Doctoral Dissertation Teemu Koponen Helsinki University of Technology Faculty of Information and Natural Sciences Department of Computer Science and Engineering Dissertation for the degree of Doctor of Science in Technology to be presented with due permission of the Faculty of Information and Natural Sciences for public examination and debate in Auditorium T1 at Helsinki University of Technology (Espoo, Finland) on the 2nd of October, 2008, at 12 noon. Distribution: Helsinki University of Technology Faculty of Information and Natural Sciences Department of Computer Science and Engineering P.O. Box 5400 FI - 02015 TKK FINLAND URL: http://cse.tkk.fi/ Tel. +358-9-4511 © 2008 Teemu Koponen ISBN 978-951-22-9559-3 ISBN 978-951-22-9560-9 (PDF) ISSN 1795-2239 ISSN 1795-4584 (PDF) URL: http://lib.tkk.fi/Diss/2008/isbn9789512295609/ TKK-DISS-2510 Picaset Oy Helsinki 2008 ABSTRACT OF DOCTORAL DISSERTATION HELSINKI UNIVERSITY OF TECHNOLOGY P. O. BOX 1000, FI-02015 TKK http://www.tkk.fi Author Teemu Koponen Name of the dissertation A Data-Oriented Network Architecture Manuscript submitted 09.06.2008 Manuscript revised 12.09.2008 Date of the defence 02.10.2008 Monograph X Article dissertation (summary + original articles) Faculty Information and Natural Sciences Department Computer Science and Engineering Field of research Networking Opponent(s) Professor Jon Crowcroft Supervisor Professor Antti Ylä-Jääski Instructor(s) Dr. -

NSDI '11: 8th USENIX Symposium on Networked Systems Design and

NSDI ’11: 8th USENIX Symposium on Networked Systems Design and Implementation Boston, MA March 30–April 1, 2011 Opening Remarks and Awards Presentation Summarized by Rik Farrow David Andersen (CMU), co-chair with Sylvia Ratnasamy (Intel Labs, Berkeley), presided over the opening of NSDI. David told us that there were 251 attendees by the time of the opening, three short of a record for NSDI; 157 papers were submitted and 27 accepted. David joked that they used a Bayesian ranking process based on keywords, and that using the word “A” in your title seemed to increase your chances of having your paper accepted. In reality, format checking and Geoff Voelker’s Banal paper checker were used in a first pass, and all surviving papers received three initial reviews. By the time of the day-long program committee meeting, there were 56 papers left. In the end, there were no invited talks at NSDI ’11, just paper presentation sessions. David announced the winners of the Best Paper awards: “ServerSwitch: A Programmable and High Performance Platform for Datacenter Networks,” Guohan Lu et al. (Microsoft Research Asia), and “Design, Implementation and Evaluation of Congestion Control for Multipath TCP,” Damon Wischik et al. (University College London). Patrick Wendell (Princeton University) was awarded a CRA Undergraduate Research Award for outstanding research potential in CS for his work on DONAR, a system for selecting the best online replica, a service he deployed and tested. Patrick also worked at Cloudera in the summer of 2010 and became a committer for the Apache Avro system, the RPC networking layer to be used in Hadoop. -

Arguments for an End-Middle-End Internet

CHASING EME: ARGUMENTS FOR AN END-MIDDLE-END INTERNET A Dissertation Presented to the Faculty of the Graduate School of Cornell University in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy by Saikat Guha August 2009 © 2009 Saikat Guha ALL RIGHTS RESERVED CHASING EME: ARGUMENTS FOR AN END-MIDDLE-END INTERNET Saikat Guha, Ph.D. Cornell University 2009 Connection establishment in the Internet has remained unchanged from its original design in the 1970s: first, the path between the communicating endpoints is assumed to always be open. It is assumed that an endpoint can reach any other endpoint by simply sending a packet addressed to the destination. This assumption is no longer borne out in practice: Network Address Translators (NATs) prevent all hosts from being addressed, firewalls prevent all packets from being delivered, and middleboxes transparently intercept packets without endpoint knowledge. Second, the Internet strives to deliver all packets addressed to a destination regardless of whether the packet is ultimately desired by the destination or not. Denial of Service (DoS) attacks are therefore commonplace, and the Internet remains vulnerable to flash worms. This thesis presents the End-Middle-End (EME) requirements for connection establishment that the modern Internet should satisfy, and explores the design space of a signaling-based architecture that meets these requirements with minimal changes to the existing Internet. In so doing, this thesis proposes solutions to three real-world problems. First, it focuses on the problem of TCP NAT Traversal, where endpoints behind their respective NATs today cannot establish a direct TCP connection with each other due to default NAT behavior. -

Democratizing Content Distribution

Democratizing content distribution Michael J. Freedman New York University Primary work in collaboration with: Martin Casado, Eric Freudenthal, Karthik Lakshminarayanan, David Mazières Additional work in collaboration with: Siddhartha Annapureddy, Hari Balakrishnan, Dan Boneh, Nick Feamster, Scott Garriss, Yuval Ishai, Michael Kaminsky, Brad Karp, Max Krohn, Nick McKeown, Kobbi Nissim, Benny Pinkas, Omer Reingold, Kevin Shanahan, Scott Shenker, Ion Stoica, and Mythili Vutukuru Overloading content publishers Feb 3, 2004: Google linked banner to “julia fractals” Users clicked onto University of Western Australia web site …University’s network link overloaded, web server taken down temporarily… Adding insult to injury… Next day: Slashdot story about Google overloading site …UWA site goes down again Insufficient server resources [Diagram: browsers and an origin server] Many clients want content Server has insufficient resources Solving the problem requires more resources Serving large audiences possible… Where do their resources come from? Must consider two types of content separately • Static • Dynamic Static content uses most bandwidth Dynamic HTML: 19.6 KB Static content: 6.2 MB 1 flash movie 5 style sheets 18 images 3 scripts Serving large audiences possible… How do they serve static content? Content distribution networks (CDNs) Centralized CDNs Static, manual deployment Centrally managed Implications: Trusted infrastructure Costs scale linearly Not solved for little guy [Diagram: browsers and an origin server] Problem: Didn’t anticipate sudden load spike (flash crowd) Wouldn’t want to pay / couldn’t afford costs Leveraging cooperative resources Many people want content Many willing to mirror content e.g., software mirrors, file sharing, open proxies, etc. -

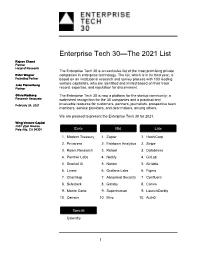

Enterprise Tech 30—The 2021 List

Rajeev Chand, Partner, Head of Research; Peter Wagner, Founding Partner; Jake Flomenberg, Partner; Olivia Rodberg, Research Associate. February 24, 2021. Wing Venture Capital, 480 Lytton Avenue, Palo Alto, CA 94301.

The Enterprise Tech 30 is an exclusive list of the most promising private companies in enterprise technology. The list, which is in its third year, is based on an institutional research and survey process with 103 leading venture capitalists, who are identified and invited based on their track record, expertise, and reputation for discernment. The Enterprise Tech 30 is now a platform for the startup community: a watershed recognition for the 30 companies and a practical and invaluable resource for customers, partners, journalists, prospective team members, service providers, and deal makers, among others. We are pleased to present the Enterprise Tech 30 for 2021.

| Rank | Early | Mid | Late |
|------|-------|-----|------|
| 1 | Modern Treasury | Zapier | HashiCorp |
| 2 | Privacera | Fishtown Analytics | Stripe |
| 3 | Roam Research | Retool | Databricks |
| 4 | Panther Labs | Netlify | GitLab |
| 5 | Snorkel AI | Notion | Airtable |
| 6 | Linear | Grafana Labs | Figma |
| 7 | ChartHop | Abnormal Security | Confluent |
| 8 | Substack | Gatsby | Canva |
| 9 | Monte Carlo | Superhuman | LaunchDarkly |
| 10 | Census | Miro | Auth0 |

Special: Calendly

The Curious Case of Calendly This year’s Enterprise Tech 30 has 31 companies rather than 30 due to the “curious case” of Calendly. Calendly, a meeting scheduling company, was categorized as Early-Stage when the ET30 voting process started on January 11 as the company had raised $550,000. -

Abstract Sensor Event Processing to Achieve Dynamic Composition of Cyber-Physical Systems

Abstract Sensor Event Processing to Achieve Dynamic Composition of Cyber-Physical Systems DISSERTATION for the academic degree of Doktoringenieur (Dr.-Ing.), accepted by the Faculty of Computer Science of the Otto-von-Guericke-Universität Magdeburg, by Dipl.-Inform. Christoph Steup, born on 29.01.1985 in Halle (Saale) Reviewers: Prof. Dr. rer. nat. Jörg Kaiser, Prof. Dr. s.c. ETH Kay Uwe Römer, Prof. Dr.-Ing. Christian Diedrich Magdeburg, 19.02.2018 Abstract (Zusammenfassung) This thesis is devoted to the overarching goal of dynamically composing cyber-physical systems (CPS) from components. CPS are a key technology for Industry 4.0, modern robotics, and autonomous vehicles. However, real systems are becoming ever more complex and are composed of more and more individual components, which are connected via a communication network. The challenge in developing these systems is the specification and exchange of the information present in the system, so that the available components can be used in the best possible way. The necessary information is highly scenario-specific. This work attempts to simplify the construction and composition of these systems by introducing a new form of information abstraction for CPS: Abstract Sensor Events (ASE). These encapsulate sensor information in machine-readable form. The encapsulation combines the inherent sensor data with the necessary context data. The context of the information consists of at least time, position, producer, and semantic description. To represent the unavoidable uncertainty of sensor information, ASE contain an interval-based uncertainty description of the data and of the context. The semantic description is based on a dictionary of attributes that may be contained in the events. -
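The snippet above describes an Abstract Sensor Event as sensor data bundled with context (time, position, producer, semantic description), with interval-based uncertainty on both data and context. As a rough illustration only, that idea might be sketched in Python as follows. Every name here (`Interval`, `AbstractSensorEvent`, `ATTRIBUTE_DICTIONARY`, `may_coincide`) is hypothetical and is not taken from the dissertation, and the two-dimensional position and the units are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

# Hypothetical attribute dictionary (attribute name -> unit). It stands in for
# the "dictionary of attributes" behind the semantic description.
ATTRIBUTE_DICTIONARY: Dict[str, str] = {"temperature": "degC", "distance": "m"}


@dataclass(frozen=True)
class Interval:
    """Closed interval [low, high] bounding an uncertain value."""
    low: float
    high: float

    def __post_init__(self) -> None:
        if self.low > self.high:
            raise ValueError("low must not exceed high")

    def overlaps(self, other: "Interval") -> bool:
        # Two closed intervals overlap when neither lies entirely before the other.
        return self.low <= other.high and other.low <= self.high


@dataclass(frozen=True)
class AbstractSensorEvent:
    """Sensor data plus context, each carrying interval-based uncertainty."""
    producer: str                          # who created the event
    time: Interval                         # assumed unit: seconds
    position: Tuple[Interval, Interval]    # assumed 2-D position (x, y) in metres
    attributes: Dict[str, Interval]        # sensor data keyed by attribute name

    def __post_init__(self) -> None:
        # Only attributes known to the dictionary are allowed.
        unknown = set(self.attributes) - set(ATTRIBUTE_DICTIONARY)
        if unknown:
            raise ValueError(f"attributes not in dictionary: {sorted(unknown)}")


def may_coincide(a: AbstractSensorEvent, b: AbstractSensorEvent) -> bool:
    """True if the uncertain times and positions of both events can overlap."""
    same_time = a.time.overlaps(b.time)
    same_place = all(p.overlaps(q) for p, q in zip(a.position, b.position))
    return same_time and same_place


if __name__ == "__main__":
    e1 = AbstractSensorEvent("sensor-1", Interval(10.0, 10.2),
                             (Interval(0.0, 1.0), Interval(0.0, 1.0)),
                             {"temperature": Interval(20.5, 21.5)})
    e2 = AbstractSensorEvent("sensor-2", Interval(10.1, 10.3),
                             (Interval(0.5, 1.5), Interval(0.5, 1.5)),
                             {"temperature": Interval(21.0, 22.0)})
    print(may_coincide(e1, e2))
```

Keeping the uncertainty as explicit intervals means a consumer can test whether two events are compatible without knowing anything about the sensors that produced them, which is the kind of decoupling the abstract argues for.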

Managing Large-Scale, Distributed Systems Research Experiments with Control-Flows Tomasz Buchert

Managing large-scale, distributed systems research experiments with control-flows Tomasz Buchert To cite this version: Tomasz Buchert. Managing large-scale, distributed systems research experiments with control-flows. Distributed, Parallel, and Cluster Computing [cs.DC]. Université de Lorraine, 2016. English. tel-01273964 HAL Id: tel-01273964 https://tel.archives-ouvertes.fr/tel-01273964 Submitted on 15 Feb 2016 HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. École doctorale IAEM Lorraine MANAGING LARGE-SCALE, DISTRIBUTED SYSTEMS RESEARCH EXPERIMENTS WITH CONTROL-FLOWS THESIS presented and publicly defended on 6 January 2016 for the degree of Doctorat de l’Université de Lorraine (computer science) by TOMASZ BUCHERT Jury: Reviewers: Christian Pérez, Research Director, Inria, LIP, Lyon; Eric Eide, Research assistant professor, Univ. of Utah Examiners: François Charoy, Professor, Université de Lorraine; Olivier Richard, Associate Professor, Univ. Joseph Fourier, Grenoble Thesis supervisors: Lucas Nussbaum, Associate Professor, Université de Lorraine; Jens Gustedt, Research Director, Inria, ICUBE, Strasbourg Laboratoire Lorrain de Recherche en Informatique et ses Applications – UMR 7503 ABSTRACT (ENGLISH) Keywords: distributed systems, large-scale experiments, experiment control, business processes, experiment provenance Running experiments on modern systems such as supercomputers, cloud infrastructures or P2P networks has become very complex, both technically and methodologically. -

A New Approach to Network Function Virtualization

A New Approach to Network Function Virtualization By Aurojit Panda A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science in the Graduate Division of the University of California, Berkeley Committee in charge: Professor Scott J. Shenker, Chair Professor Sylvia Ratnasamy Professor Ion Stoica Professor Deirdre Mulligan Summer 2017 A New Approach to Network Function Virtualization Copyright 2017 by Aurojit Panda Abstract A New Approach to Network Function Virtualization by Aurojit Panda Doctor of Philosophy in Computer Science University of California, Berkeley Professor Scott J. Shenker, Chair Networks provide functionality beyond just packet routing and delivery. Network functions such as firewalls, caches, WAN optimizers, etc. are crucial for scaling networks and in supporting new applications. While traditionally network functions were implemented using dedicated hardware middleboxes, recent efforts have resulted in them being implemented as software and deployed in virtualized environments. This move towards virtualized network functions is commonly referred to as network function virtualization (NFV). While the NFV proposal has been enthusiastically accepted by carriers and enterprises, actual efforts to deploy NFV have not been as successful. In this thesis we argue that this is because the current deployment strategy, which relies on operators to ensure that network functions are configured to correctly implement policies, and then deploys these network functions as virtual machines (or containers) connected by virtual switches, is ill-suited to NFV workloads. In this dissertation we propose an alternative NFV framework based on the use of static techniques such as type checking and formal verification. -

Software-Defined Networking: Improving Security for Enterprise

Software-defined Networking: Improving Security for Enterprise and Home Networks by Curtis R. Taylor A Dissertation Submitted to the Faculty of the WORCESTER POLYTECHNIC INSTITUTE In partial fulfillment of the requirements for the Degree of Doctor of Philosophy in Computer Science by May 2017 APPROVED: Professor Craig A. Shue, Dissertation Advisor Professor Craig E. Wills, Head of Department Professor Mark Claypool, Committee Member Professor Thomas Eisenbarth, Committee Member Doctor Nathanael Paul, External Committee Member Abstract In enterprise networks, all aspects of the network, such as placement of security devices and performance, must be carefully considered. Even with forethought, network operators are ultimately unaware of intra-subnet traffic. The inability to monitor intra-subnet traffic leads to blind spots in the network where compromised hosts have unfettered access to the network for spreading and reconnaissance. While network security middleboxes help to address compromises, they are limited in only seeing a subset of all network traffic that traverses routed infrastructure, which is where middleboxes are frequently deployed. Furthermore, traditional middleboxes are inherently limited to network-level information when making security decisions. Software-defined networking (SDN) is a networking paradigm that allows logically centralized control of network switches and routers. SDN can help address visibility concerns while providing the benefits of a centralized network control platform, but traditional switch-based SDN leads to concerns of scalability and is ultimately limited in that only network-level information is available to the controller. This dissertation addresses these SDN limitations in the enterprise by pushing the SDN functionality to the end-hosts. 
In doing so, we address scalability concerns and provide network operators with better situational awareness by incorporating system-level and graphical user interface (GUI) context into network information handled by the controller. -

Two Decades of DDoS Attacks and Defenses

Two Decades of DDoS Attacks and Defenses LUMIN SHI, University of Oregon Two decades after the first distributed denial-of-service (DDoS) attack, the Internet continues to face DDoS attacks. To understand why DDoS defense is a difficult problem, we must study how the attacks are carried out and whether the existing defense solutions are sufficient. In this work, we review the latest DDoS attacks and DDoS defense solutions. In particular, we focus on the key advancements and missing pieces in DDoS research. CCS Concepts: • Networks → Network security; Denial-of-service attacks. Additional Key Words and Phrases: DDoS attack, DDoS defense, CDN, SDN 1 INTRODUCTION Distributed denial-of-service (DDoS) attacks are easy to launch but difficult to defend against. Despite two decades of DDoS research, DDoS attacks continue to impose threats to today’s networks. Recent attacks such as [1, 2, 3] have shown that resourceful attackers can launch DDoS attacks to flood network links and leave them paralyzed for hours or days. In the meantime, the proliferation of Internet-of-Things (IoT) devices amplifies the threat of DDoS. Many of these devices come with little to no updates for security vulnerabilities, and as such, they are exploitable by the attackers. Compromised IoT devices remain vulnerable even after major incidents such as the Mirai attack [1]. The sheer and growing number of vulnerable IoT devices challenges the capacity/scalability of existing defense solutions. On the other hand, the frequent DDoS attacks led the network community to seek better defense solutions. From remotely triggered black hole (RTBH) [4] to BGP FlowSpec [5], we see the progress made by the network community to move away from high-collateral-damage solutions [4]. -

A Defense Framework Against Denial-Of-Service in Computer Networks

A DEFENSE FRAMEWORK AGAINST DENIAL-OF-SERVICE IN COMPUTER NETWORKS by Sherif Khattab M.Sc. Computer Science, University of Pittsburgh, USA, 2004 B.Sc. Computer Engineering, Cairo University, Egypt, 1998 Submitted to the Graduate Faculty of the Department of Computer Science in partial fulfillment of the requirements for the degree of Doctor of Philosophy University of Pittsburgh 2008 UNIVERSITY OF PITTSBURGH DEPARTMENT OF COMPUTER SCIENCE This dissertation was presented by Sherif Khattab It was defended on June 25th, 2008 and approved by Prof. Rami Melhem, Department of Computer Science Prof. Daniel Mossé, Department of Computer Science Prof. Taieb Znati, Department of Computer Science Prof. Prashant Krishnamurthy, School of Information Sciences Dissertation Advisors: Prof. Rami Melhem, Department of Computer Science, Prof. Daniel Mossé, Department of Computer Science A DEFENSE FRAMEWORK AGAINST DENIAL-OF-SERVICE IN COMPUTER NETWORKS Sherif Khattab, PhD University of Pittsburgh, 2008 Denial-of-Service (DoS) is a computer security problem that poses a serious challenge to trustworthiness of services deployed over computer networks. The aim of DoS attacks is to make services unavailable to legitimate users, and current network architectures allow easy-to-launch, hard-to-stop DoS attacks. Particularly challenging are the service-level DoS attacks, whereby the victim service is flooded with legitimate-like requests, and the jamming attack, in which wireless communication is blocked by malicious radio interference. These attacks are overwhelming even for massively-resourced services, and effective and efficient defenses are highly needed. This work contributes a novel defense framework, which I call dodging, against service-level DoS and wireless jamming. -

DISSERTATION DOCTEUR DE L'UNIVERSITÉ DU LUXEMBOURG EN INFORMATIQUE Diego KREUTZ Dissertation Defence Committee

PhD-FSTM-2020-52 The Faculty of Sciences, Technology and Medicine DISSERTATION Defence held on 15/09/2020 in Luxembourg to obtain the degree of DOCTEUR DE L’UNIVERSITÉ DU LUXEMBOURG EN INFORMATIQUE by Diego KREUTZ Born on 29 November 1979 in Santa Rosa (Brazil) LOGICALLY CENTRALIZED SECURITY FOR SOFTWARE-DEFINED NETWORKING Dissertation defence committee Dr Paulo Esteves-Veríssimo, dissertation supervisor Professor, Université du Luxembourg Dr Björn Ottersten, Chairman Professor, Université du Luxembourg Dr Radu State, Vice Chairman Associate Professor, Université du Luxembourg Dr Jennifer Rexford Professor, Princeton University Dr Sandra Scott-Hayward, Lecturer, Queen’s University Belfast Acknowledgements I am very proud of and grateful to my supervisors Paulo Esteves-Veríssimo and Fernando M. V. Ramos because I have learned so much with them. One of the few things I am sure of is my eternal debt with them. I am (arguably) sure I am a better researcher now because of them. In fact, I am still learning everyday something new with them. Paulo was also an awesome sort of coach in my personal life as well. He is an impressively smart and knowledgeable person. I have to say, in some ways, he is indeed better than a regular doctor. I know that (probably) just me and him will truly understand the meaning of this statement. I had one of the most interesting and productive times after I met Jiangshan Yu. He is a very smart, easy going and inspiring researcher and person. Because of him, I started to better understand and to have joy in designing security protocols. JY inspired me in different ways. I consider myself lucky to have met him.