A Defense Framework Against Denial-Of-Service in Computer Networks

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The FCO's Human Rights Work in 2012

House of Commons Foreign Affairs Committee The FCO’s human rights work in 2012 Fourth Report of Session 2013–14 Volume I: Report, together with formal minutes, oral and written evidence Additional written evidence is contained in Volume II, available on the Committee website at www.parliament.uk/facom Ordered by the House of Commons to be printed 8 October 2013 HC 267 Published on 17 October 2013 by authority of the House of Commons London: The Stationery Office Limited £15.50 The Foreign Affairs Committee The Foreign Affairs Committee is appointed by the House of Commons to examine the expenditure, administration, and policy of the Foreign and Commonwealth Office and its associated agencies. Current membership Richard Ottaway (Conservative, Croydon South) (Chair) Mr John Baron (Conservative, Basildon and Billericay) Rt Hon Sir Menzies Campbell (Liberal Democrat, North East Fife) Rt Hon Ann Clwyd (Labour, Cynon Valley) Mike Gapes (Labour/Co-op, Ilford South) Mark Hendrick (Labour/Co-op, Preston) Sandra Osborne (Labour, Ayr, Carrick and Cumnock) Andrew Rosindell (Conservative, Romford) Mr Frank Roy (Labour, Motherwell and Wishaw) Rt Hon Sir John Stanley (Conservative, Tonbridge and Malling) Rory Stewart (Conservative, Penrith and The Border) The following Members were also members of the Committee during the parliament: Rt Hon Bob Ainsworth (Labour, Coventry North East) Emma Reynolds (Labour, Wolverhampton North East) Mr Dave Watts (Labour, St Helens North) Powers The Committee is one of the departmental select committees, the powers of which are set out in House of Commons Standing Orders, principally in SO No 152. These are available on the internet via www.parliament.uk. -

Citizens' Band (CB) Radio

Citizens’ Band (CB) radio – Authorising Amplitude Modulation (AM) modes of operation. Permitting AM double and single side band CB radio in the UK. Statement. Publication date: 10 December 2013. Contents: Section 1 Executive Summary; 2 Introduction and background; 3 Consultation Responses; 4 Conclusions and next steps; Annex 1 List of non-confidential respondents. Section 1: Executive Summary. 1.1 This Statement sets out Ofcom’s decision to proceed with proposals made in our Consultation “Citizens’ Band (CB) radio – Authorising Amplitude Modulation (AM) modes of operation” (the ‘Consultation’) which was published on 7 October 2013 and closed on 8 November 2013. 1.2 The Consultation proposed to amend current arrangements for Citizens’ Band (CB) Radio in the UK to allow the use of Amplitude Modulation (AM) Double-sideband (DSB) and Single-sideband (SSB) transmission on CB radio. 1.3 Ofcom specifically proposed to: • Authorise the use of AM emissions on European Conference of Postal and Telecommunications Administrations (CEPT) harmonised channels in line with European Communication Committee (ECC) Decision (11)03; and • Authorise such use on a licence exempt basis (in line with our authorisation approach for other modes of operation for CB). 1.4 These proposals followed on from work carried out in Europe. In June 2011 the ECC, part of CEPT, published a Decision, ECC/DEC/(11)03 (the ‘Decision’) on the harmonised use of frequencies for CB radio equipment. The Decision sought to harmonise the technical standards and usage conditions relating to the use of frequencies for CB radio equipment in CEPT administrations.

Jamming Attacks and Anti-Jamming Strategies in Wireless Networks

Jamming Attacks and Anti-Jamming Strategies in Wireless Networks: A Comprehensive Survey. Hossein Pirayesh and Huacheng Zeng, Department of Computer Science and Engineering, Michigan State University, East Lansing, MI USA. Abstract: Wireless networks are a key component of the telecommunications infrastructure in our society, and wireless services become increasingly important as the applications of wireless devices have penetrated every aspect of our lives. Although wireless technologies have significantly advanced in the past decades, most wireless networks are still vulnerable to radio jamming attacks due to the openness nature of wireless channels, and the progress in the design of jamming-resistant wireless networking systems remains limited. This stagnation can be attributed to the lack of practical physical-layer wireless technologies that can efficiently decode data packets in the presence of jamming attacks. This article surveys existing jamming attacks and anti-jamming strategies in wireless local area networks (WLANs), cellular networks, cognitive radio networks (CRNs), ZigBee networks, Bluetooth networks, vehicular networks, LoRa [...] will become ubiquitously available for massive devices to realize the vision of Internet of Everything (IoE) in the near future [18]. As we are increasingly reliant on wireless services, security threats have become a big concern about the confidentiality, integrity, and availability of wireless communications. Compared to other security threats such as eavesdropping and data fabrication, wireless networks are particularly vulnerable to radio jamming attacks for the following reasons. First, jamming attacks are easy to launch. With the advances in software-defined radio, one can easily program a small $10 USB dongle device to a jammer that covers 20 MHz bandwidth below 6 GHz and up to 100 mW transmission power [34].

Jamming the Stations: Is There an International Free Flow of Info

Schenone: Jamming The Stations: Is There an International Free Flow of Info JAMMING THE STATIONS: IS THERE AN INTERNATIONAL FREE FLOW OF INFORMATION? In September 1983, Korean Airlines flight 7 was shot down by a Soviet interceptor.1 All 269 passengers perished.2 Within an hour, news of the incident was broadcast to citizens of the Eastern European countries and the Soviet Union3 over Radio Free Europe (RFE)4 and Radio Liberty (RL).5 The Soviet news services, however, failed to mention the incident.6 The Soviet government deliberately attempted to block reception of the RFE and RL broadcast through radio jamming.7 Radio jamming is a significant international problem because radio broadcasting is an essential and powerful means of disseminating information among nations.8 Radio jamming is generally defined as "deliberate radio interference to prevent reception of a foreign broadcast."9 A more technical definition is "intentional harmful interference"10 which results in intentional non-conformity 1. THE BOARD FOR INTERNATIONAL BROADCASTING, TENTH ANNUAL REPORT 11 (1984) [hereinafter cited as TENTH ANNUAL REPORT]. 2. Id. 3. Id. 4. See infra note 35 and accompanying text. 5. See infra note 36 and accompanying text. 6. TENTH ANNUAL REPORT, supra note 1, at 11. The Soviet Union at first denied that the plane was shot down. When the Soviet news services later admitted it had in fact been shot down by one of their pilots, they claimed the Korean plane had no lights identifying it as non-aggressive. However, RFE and RL broadcasts of the tapes of the interceptor's pilot revealed that he claimed he could see the Korean jet's strobe lights.

Journalism Is Not a Crime” Violations of Media Freedom in Ethiopia WATCH

HUMAN RIGHTS WATCH “Journalism Is Not a Crime” Violations of Media Freedom in Ethiopia Copyright © 2015 Human Rights Watch All rights reserved. Printed in the United States of America ISBN: 978-1-6231-32279 Cover design by Rafael Jimenez Human Rights Watch defends the rights of people worldwide. We scrupulously investigate abuses, expose the facts widely, and pressure those with power to respect rights and secure justice. Human Rights Watch is an independent, international organization that works as part of a vibrant movement to uphold human dignity and advance the cause of human rights for all. Human Rights Watch is an international organization with staff in more than 40 countries, and offices in Amsterdam, Beirut, Berlin, Brussels, Chicago, Geneva, Goma, Johannesburg, London, Los Angeles, Moscow, Nairobi, New York, Paris, San Francisco, Sydney, Tokyo, Toronto, Tunis, Washington DC, and Zurich. For more information, please visit our website: http://www.hrw.org JANUARY 2015 Glossary of Abbreviations; Map of Ethiopia; Summary

A Double Horizon Defense Design for Robust Regulation of Malicious Traffic

University of Pennsylvania ScholarlyCommons Departmental Papers (ESE) Department of Electrical & Systems Engineering August 2006 A Double Horizon Defense Design for Robust Regulation of Malicious Traffic Ying Xu University of Pennsylvania, [email protected] Roch A. Guérin University of Pennsylvania, [email protected] Follow this and additional works at: https://repository.upenn.edu/ese_papers Recommended Citation Ying Xu and Roch A. Guérin, "A Double Horizon Defense Design for Robust Regulation of Malicious Traffic", August 2006. Copyright 2006 IEEE. In Proceedings of the Second IEEE Communications Society/CreateNet International Conference on Security and Privacy in Communication Networks (SecureComm 2006). This material is posted here with permission of the IEEE. Such permission of the IEEE does not in any way imply IEEE endorsement of any of the University of Pennsylvania's products or services. Internal or personal use of this material is permitted. However, permission to reprint/republish this material for advertising or promotional purposes or for creating new collective works for resale or redistribution must be obtained from the IEEE by writing to [email protected]. By choosing to view this document, you agree to all provisions of the copyright laws protecting it. This paper is posted at ScholarlyCommons. https://repository.upenn.edu/ese_papers/190 For more information, please contact [email protected]. Abstract Deploying defense mechanisms in routers holds promises for protecting infrastructure resources such as link bandwidth or router buffers against network Denial-of-Service (DoS) attacks. However, in spite of their efficacy against brute-force flooding attacks, existing router-based defenses often perform poorly when confronted to more sophisticated attack strategies.

Arguments for an End-Middle-End Internet

CHASING EME: ARGUMENTS FOR AN END-MIDDLE-END INTERNET A Dissertation Presented to the Faculty of the Graduate School of Cornell University in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy by Saikat Guha August 2009 © 2009 Saikat Guha ALL RIGHTS RESERVED Saikat Guha, Ph.D. Cornell University 2009 Connection establishment in the Internet has remained unchanged from its original design in the 1970s: first, the path between the communicating endpoints is assumed to always be open. It is assumed that an endpoint can reach any other endpoint by simply sending a packet addressed to the destination. This assumption is no longer borne out in practice: Network Address Translators (NATs) prevent all hosts from being addressed, firewalls prevent all packets from being delivered, and middleboxes transparently intercept packets without endpoint knowledge. Second, the Internet strives to deliver all packets addressed to a destination regardless of whether the packet is ultimately desired by the destination or not. Denial of Service (DoS) attacks are therefore commonplace, and the Internet remains vulnerable to flash worms. This thesis presents the End-Middle-End (EME) requirements for connection establishment that the modern Internet should satisfy, and explores the design space of a signaling-based architecture that meets these requirements with minimal changes to the existing Internet. In so doing, this thesis proposes solutions to three real-world problems. First, it focuses on the problem of TCP NAT Traversal, where endpoints behind their respective NATs today cannot establish a direct TCP connection with each other due to default NAT behavior.

Speeding up Correlation Search for Binary Data

Speeding up Correlation Search for Binary Data Lian Duan and W. Nick Street [email protected] Management Sciences Department The University of Iowa Abstract Finding the most interesting correlations in a collection of items is essential for problems in many commercial, medical, and scientific domains. Much previous research focuses on finding correlated pairs instead of correlated itemsets in which all items are correlated with each other. Though some existing methods find correlated itemsets of any size, they suffer from both efficiency and effectiveness problems in large datasets. In our previous paper [10], we propose a fully-correlated itemset (FCI) framework to decouple the correlation measure from the need for efficient search. By wrapping the desired measure in our FCI framework, we take advantage of the desired measure’s superiority in evaluating itemsets, eliminate itemsets with irrelevant items, and achieve good computational performance. However, FCIs must start pruning from 2-itemsets unlike frequent itemsets which can start the pruning from 1-itemsets. When the number of items in a given dataset is large and the support of all the pairs cannot be loaded into the memory, the IO cost O(n^2) for calculating correlation of all the pairs is very high. In addition, users usually need to try different correlation thresholds and the cost of processing the Apriori procedure each time for a different threshold is very high. Consequently, we propose two techniques to solve the efficiency problem in this paper. With respect to correlated pair search, we identify a 1-dimensional monotone property of the upper bound of any good correlation measure, and different 2-dimensional monotone properties for different types of correlation measures.

Democratizing Content Distribution

Democratizing content distribution Michael J. Freedman New York University Primary work in collaboration with: Martin Casado, Eric Freudenthal, Karthik Lakshminarayanan, David Mazières Additional work in collaboration with: Siddhartha Annapureddy, Hari Balakrishnan, Dan Boneh, Nick Feamster, Scott Garriss, Yuval Ishai, Michael Kaminsky, Brad Karp, Max Krohn, Nick McKeown, Kobbi Nissim, Benny Pinkas, Omer Reingold, Kevin Shanahan, Scott Shenker, Ion Stoica, and Mythili Vutukuru Overloading content publishers Feb 3, 2004: Google linked banner to “julia fractals” Users clicked onto University of Western Australia web site …University’s network link overloaded, web server taken down temporarily… Adding insult to injury… Next day: Slashdot story about Google overloading site …UWA site goes down again Insufficient server resources Browser Browser Origin Server Browser Browser Browser Browser Browser Browser Many clients want content Server has insufficient resources Solving the problem requires more resources Serving large audiences possible… Where do their resources come from? Must consider two types of content separately • Static • Dynamic Static content uses most bandwidth Dynamic HTML: 19.6 KB Static content: 6.2 MB 1 flash movie 5 style sheets 18 images 3 scripts Serving large audiences possible… How do they serve static content? Content distribution networks (CDNs) Centralized CDNs Static, manual deployment Centrally managed Implications: Trusted infrastructure Costs scale linearly Not solved for little guy Browser Browser Origin Server Browser Browser Browser Browser Browser Browser Problem: Didn’t anticipate sudden load spike (flash crowd) Wouldn’t want to pay / couldn’t afford costs Leveraging cooperative resources Many people want content Many willing to mirror content e.g., software mirrors, file sharing, open proxies, etc. -

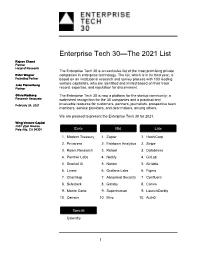

Enterprise Tech 30—The 2021 List

Enterprise Tech 30—The 2021 List. Rajeev Chand, Partner, Head of Research; Peter Wagner, Founding Partner; Jake Flomenberg, Partner; Olivia Rodberg, Research Associate. February 24, 2021. Wing Venture Capital, 480 Lytton Avenue, Palo Alto, CA 94301. The Enterprise Tech 30 is an exclusive list of the most promising private companies in enterprise technology. The list, which is in its third year, is based on an institutional research and survey process with 103 leading venture capitalists, who are identified and invited based on their track record, expertise, and reputation for discernment. The Enterprise Tech 30 is now a platform for the startup community: a watershed recognition for the 30 companies and a practical and invaluable resource for customers, partners, journalists, prospective team members, service providers, and deal makers, among others. We are pleased to present the Enterprise Tech 30 for 2021. Early: 1. Modern Treasury, 2. Privacera, 3. Roam Research, 4. Panther Labs, 5. Snorkel AI, 6. Linear, 7. ChartHop, 8. Substack, 9. Monte Carlo, 10. Census. Mid: 1. Zapier, 2. Fishtown Analytics, 3. Retool, 4. Netlify, 5. Notion, 6. Grafana Labs, 7. Abnormal Security, 8. Gatsby, 9. Superhuman, 10. Miro. Late: 1. HashiCorp, 2. Stripe, 3. Databricks, 4. GitLab, 5. Airtable, 6. Figma, 7. Confluent, 8. Canva, 9. LaunchDarkly, 10. Auth0. Special: Calendly. The Curious Case of Calendly: This year’s Enterprise Tech 30 has 31 companies rather than 30 due to the “curious case” of Calendly. Calendly, a meeting scheduling company, was categorized as Early-Stage when the ET30 voting process started on January 11 as the company had raised $550,000.

Managing Large-Scale, Distributed Systems Research Experiments with Control-Flows Tomasz Buchert

Managing large-scale, distributed systems research experiments with control-flows Tomasz Buchert To cite this version: Tomasz Buchert. Managing large-scale, distributed systems research experiments with control-flows. Distributed, Parallel, and Cluster Computing [cs.DC]. Université de Lorraine, 2016. English. tel-01273964 HAL Id: tel-01273964 https://tel.archives-ouvertes.fr/tel-01273964 Submitted on 15 Feb 2016 HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. École doctorale IAEM Lorraine MANAGING LARGE-SCALE, DISTRIBUTED SYSTEMS RESEARCH EXPERIMENTS WITH CONTROL-FLOWS Thesis presented and publicly defended on 6 January 2016 for the degree of Doctorate of the Université de Lorraine (computer science) by TOMASZ BUCHERT Jury: Reviewers: Christian Pérez, Directeur de recherche Inria, LIP, Lyon; Eric Eide, Research assistant professor, Univ. of Utah. Examiners: François Charoy, Professeur, Université de Lorraine; Olivier Richard, Maître de conférences, Univ. Joseph Fourier, Grenoble. Thesis advisors: Lucas Nussbaum, Maître de conférences, Université de Lorraine; Jens Gustedt, Directeur de recherche Inria, ICUBE, Strasbourg. Laboratoire Lorrain de Recherche en Informatique et ses Applications – UMR 7503 ABSTRACT (ENGLISH) Keywords: distributed systems, large-scale experiments, experiment control, business processes, experiment provenance Running experiments on modern systems such as supercomputers, cloud infrastructures or P2P networks became very complex, both technically and methodologically.

Mining Web User Behaviors to Detect Application Layer Ddos Attacks

JOURNAL OF SOFTWARE, VOL. 9, NO. 4, APRIL 2014, p. 985. Mining Web User Behaviors to Detect Application Layer DDoS Attacks. Chuibi Huang1, Jinlin Wang1, 2, Gang Wu1, Jun Chen2. 1Department of Automation, USTC, Key laboratory of network communication system and control, Hefei, China. Email: [email protected]. 2Institute of Acoustics, Chinese Academy of Sciences, National Network New Media Engineering Research Center, Beijing, China. Email: {wangjl, chenj}@dsp.ac.cn. Abstract: Distributed Denial of Service (DDoS) attacks have caused continuous critical threats to the Internet services. DDoS attacks are generally conducted at the network layer. Many DDoS attack detection methods are focused on the IP and TCP layers. However, they are not suitable for detecting the application layer DDoS attacks. In this paper, we propose a scheme based on web user browsing behaviors to detect the application layer DDoS attacks (app-DDoS). A clustering method is applied to extract the access features of the web objects. Based on the access features, an extended hidden semi-Markov model is proposed to describe the browsing behaviors of web user. The deviation from the entropy of the training data set fitting to the hidden semi-Markov model can be considered as the abnormality of the observed data set. [...] than TCP or UDP-based attacks, requiring fewer network connections to achieve their malicious purposes. The MyDoom worm and the CyberSlam are all instances of this type attack [2, 3]. The challenges of detecting the app-DDoS attacks can be summarized as the following aspects: • The app-DDoS attacks make use of higher layer protocols such as HTTP to pass through most of the current anomaly detection systems designed for low layers. • Along with flooding, App-DDoS traces the average request rate of the legitimate user and uses