From Ethane to SDN and Beyond



Recommended publications


OpenFlow: Enabling Innovation in Campus Networks

OpenFlow: Enabling Innovation in Campus Networks

Nick McKeown (Stanford University), Tom Anderson (University of Washington), Hari Balakrishnan (MIT), Guru Parulkar (Stanford University), Larry Peterson (Princeton University), Jennifer Rexford (Princeton University), Scott Shenker (University of California, Berkeley), Jonathan Turner (Washington University in St. Louis)

This article is an editorial note submitted to CCR. It has NOT been peer reviewed. Authors take full responsibility for this article’s technical content. Comments can be posted through CCR Online.

ABSTRACT

This whitepaper proposes OpenFlow: a way for researchers to run experimental protocols in the networks they use every day. OpenFlow is based on an Ethernet switch, with an internal flow-table, and a standardized interface to add and remove flow entries. Our goal is to encourage networking vendors to add OpenFlow to their switch products for deployment in college campus backbones and wiring closets. We believe that OpenFlow is a pragmatic compromise: on one hand, it allows researchers to run experiments on heterogeneous switches in a uniform way at line-rate and with high port-density; while on the other hand, vendors do not need to expose the internal workings of their switches.

... to experiment with production traffic, which have created an exceedingly high barrier to entry for new ideas. Today, there is almost no practical way to experiment with new network protocols (e.g., new routing protocols, or alternatives to IP) in sufficiently realistic settings (e.g., at scale carrying real traffic) to gain the confidence needed for their widespread deployment. The result is that most new ideas from the networking research community go untried and untested; hence the commonly held belief that the network infrastructure has “ossified”.

Having recognized the problem, the networking community is hard at work developing programmable networks, ...
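The abstract above describes a switch with an internal flow table and an interface to add and remove flow entries. As a rough illustration of that idea only, the following Python sketch models such a table in memory. The names `FlowEntry`, `FlowTable`, `add_flow`, `remove_flow` and `lookup`, the header field names, the action strings, the priority field, and the choice to hand unmatched packets to a controller are all assumptions made for this sketch; none of them is the OpenFlow wire protocol or the API of any real switch or controller library.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# A match maps header-field names to required values; fields that are
# absent from the match act as wildcards (hypothetical convention).
Match = Dict[str, str]


@dataclass
class FlowEntry:
    match: Match
    action: str        # e.g. "output:2", "drop" (illustrative strings)
    priority: int = 0
    packets: int = 0   # per-entry packet counter


@dataclass
class FlowTable:
    entries: List[FlowEntry] = field(default_factory=list)

    def add_flow(self, match: Match, action: str, priority: int = 0) -> FlowEntry:
        """Install an entry; higher-priority entries are consulted first."""
        entry = FlowEntry(dict(match), action, priority)
        self.entries.append(entry)
        # Stable sort: entries of equal priority keep insertion order.
        self.entries.sort(key=lambda e: -e.priority)
        return entry

    def remove_flow(self, match: Match) -> int:
        """Remove every entry whose match equals `match`; return the count."""
        before = len(self.entries)
        self.entries = [e for e in self.entries if e.match != match]
        return before - len(self.entries)

    def lookup(self, packet: Dict[str, str]) -> str:
        """Return the action of the first entry whose fields all match."""
        for e in self.entries:
            if all(packet.get(k) == v for k, v in e.match.items()):
                e.packets += 1
                return e.action
        # Assumption for this sketch: a table miss goes to the controller.
        return "controller"


# Example use: forward by destination MAC, but drop telnet at higher priority.
table = FlowTable()
table.add_flow({"eth_dst": "00:00:00:00:00:02"}, "output:2")
table.add_flow({"ip_proto": "tcp", "tcp_dst": "23"}, "drop", priority=10)

telnet = {"eth_dst": "00:00:00:00:00:02", "ip_proto": "tcp", "tcp_dst": "23"}
web = {"eth_dst": "00:00:00:00:00:02", "ip_proto": "tcp", "tcp_dst": "80"}
```

In this sketch the experimental logic lives entirely in whoever calls `add_flow` and `remove_flow`; the table itself only matches and counts, which is one way to read the abstract's point that vendors need not expose the internal workings of their switches.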

July 18, 2012 Chairman Julius Genachowski Federal Communications Commission 445 12th Street SW Washington, DC 20554 Re

July 18, 2012

Chairman Julius Genachowski
Federal Communications Commission
445 12th Street SW
Washington, DC 20554

Re: Letter, CG Docket No. 09-158, CC Docket No. 98-170, WC Docket No. 04-36

Dear Chairman Genachowski,

Open data and an independent, transparent measurement framework must be the cornerstones of any scientifically credible broadband Internet access measurement program. The undersigned members of the academic and research communities therefore respectfully ask the Commission to remain committed to the principles of openness and transparency and to allow the scientific process to serve as the foundation of the broadband measurement program.

Measuring network performance is complex. Even among those of us who focus on this topic as our life’s work, there are disagreements. The scientific process happens best in the sunlight and that can only happen when as many eyes as possible are able to look at a shared set of data, work to replicate results, and assess its meaning and impact. This ensures the conclusions from the broadband measurement allow for meaningful, data-driven policy making.

Since the inception of the broadband measurement program, those of us who work on Internet research have lauded its precedent-setting commitment to open-data and transparency. Many of us have engaged with this program, advising on network transparency and measurement methodology and using the openly-released raw data as a part of our research.

However, we understand that some participants in the program have proposed significant changes that would transform an open measurement process into a closed one. Specifically, that the Federal Communications Commission (FCC) is considering a proposal to replace the Measurement Lab server infrastructure with closed infrastructure, run by the participating Internet service providers (ISPs) whose own speeds are being measured.

Arguments for an End-Middle-End Internet

CHASING EME: ARGUMENTS FOR AN END-MIDDLE-END INTERNET

A Dissertation Presented to the Faculty of the Graduate School of Cornell University in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy by Saikat Guha, August 2009

© 2009 Saikat Guha ALL RIGHTS RESERVED

Saikat Guha, Ph.D. Cornell University 2009

Connection establishment in the Internet has remained unchanged from its original design in the 1970s: first, the path between the communicating endpoints is assumed to always be open. It is assumed that an endpoint can reach any other endpoint by simply sending a packet addressed to the destination. This assumption is no longer borne out in practice: Network Address Translators (NATs) prevent all hosts from being addressed, firewalls prevent all packets from being delivered, and middleboxes transparently intercept packets without endpoint knowledge. Second, the Internet strives to deliver all packets addressed to a destination regardless of whether the packet is ultimately desired by the destination or not. Denial of Service (DoS) attacks are therefore commonplace, and the Internet remains vulnerable to flash worms.

This thesis presents the End-Middle-End (EME) requirements for connection establishment that the modern Internet should satisfy, and explores the design space of a signaling-based architecture that meets these requirements with minimal changes to the existing Internet. In so doing, this thesis proposes solutions to three real-world problems. First, it focuses on the problem of TCP NAT Traversal, where endpoints behind their respective NATs today cannot establish a direct TCP connection with each other due to default NAT behavior.

Democratizing Content Distribution

Democratizing content distribution

Michael J. Freedman, New York University

Primary work in collaboration with: Martin Casado, Eric Freudenthal, Karthik Lakshminarayanan, David Mazières

Additional work in collaboration with: Siddhartha Annapureddy, Hari Balakrishnan, Dan Boneh, Nick Feamster, Scott Garriss, Yuval Ishai, Michael Kaminsky, Brad Karp, Max Krohn, Nick McKeown, Kobbi Nissim, Benny Pinkas, Omer Reingold, Kevin Shanahan, Scott Shenker, Ion Stoica, and Mythili Vutukuru

Overloading content publishers
Feb 3, 2004: Google linked banner to “julia fractals”
Users clicked onto University of Western Australia web site
…University’s network link overloaded, web server taken down temporarily…

Adding insult to injury…
Next day: Slashdot story about Google overloading site
…UWA site goes down again

Insufficient server resources
[Diagram: many browsers connected to one origin server]
Many clients want content
Server has insufficient resources
Solving the problem requires more resources

Serving large audiences possible…
Where do their resources come from?
Must consider two types of content separately
• Static
• Dynamic

Static content uses most bandwidth
Dynamic HTML: 19.6 KB
Static content: 6.2 MB (1 flash movie, 5 style sheets, 18 images, 3 scripts)

Serving large audiences possible…
How do they serve static content?

Content distribution networks (CDNs)
Centralized CDNs
Static, manual deployment
Centrally managed
Implications: Trusted infrastructure; Costs scale linearly

Not solved for little guy
[Diagram: many browsers connected to one origin server]
Problem: Didn’t anticipate sudden load spike (flash crowd)
Wouldn’t want to pay / couldn’t afford costs

Leveraging cooperative resources
Many people want content
Many willing to mirror content, e.g., software mirrors, file sharing, open proxies, etc.

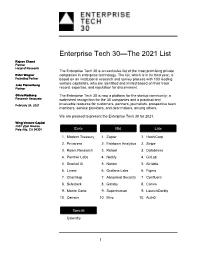

Enterprise Tech 30—The 2021 List

Enterprise Tech 30—The 2021 List

Rajeev Chand, Partner, Head of Research
Peter Wagner, Founding Partner
Jake Flomenberg, Partner
Olivia Rodberg, Research Associate
February 24, 2021

Wing Venture Capital, 480 Lytton Avenue, Palo Alto, CA 94301

The Enterprise Tech 30 is an exclusive list of the most promising private companies in enterprise technology. The list, which is in its third year, is based on an institutional research and survey process with 103 leading venture capitalists, who are identified and invited based on their track record, expertise, and reputation for discernment.

The Enterprise Tech 30 is now a platform for the startup community: a watershed recognition for the 30 companies and a practical and invaluable resource for customers, partners, journalists, prospective team members, service providers, and deal makers, among others. We are pleased to present the Enterprise Tech 30 for 2021.

Early: 1. Modern Treasury, 2. Privacera, 3. Roam Research, 4. Panther Labs, 5. Snorkel AI, 6. Linear, 7. ChartHop, 8. Substack, 9. Monte Carlo, 10. Census
Mid: 1. Zapier, 2. Fishtown Analytics, 3. Retool, 4. Netlify, 5. Notion, 6. Grafana Labs, 7. Abnormal Security, 8. Gatsby, 9. Superhuman, 10. Miro
Late: 1. HashiCorp, 2. Stripe, 3. Databricks, 4. GitLab, 5. Airtable, 6. Figma, 7. Confluent, 8. Canva, 9. LaunchDarkly, 10. Auth0
Special: Calendly

The Curious Case of Calendly

This year’s Enterprise Tech 30 has 31 companies rather than 30 due to the “curious case” of Calendly. Calendly, a meeting scheduling company, was categorized as Early-Stage when the ET30 voting process started on January 11 as the company had raised $550,000.

NetFPGA—An Open Platform for Teaching How to Build Gigabit-Rate Network Switches and Routers Glen Gibb, Member, IEEE, John W

364 IEEE TRANSACTIONS ON EDUCATION, VOL. 51, NO. 3, AUGUST 2008

NetFPGA—An Open Platform for Teaching How to Build Gigabit-Rate Network Switches and Routers

Glen Gibb, Member, IEEE, John W. Lockwood, Member, IEEE, Jad Naous, Paul Hartke, and Nick McKeown, Fellow, IEEE

Abstract—The NetFPGA platform enables students and researchers to build high-performance networking systems using field-programmable gate array (FPGA) hardware. A new version of the NetFPGA platform has been developed and is available for use by the academic community. The NetFPGA platform has modular interfaces that enable development of complex hardware designs by integration of simple building blocks. FPGA logic is used to implement the core data processing functions while software running on an attached host computer or embedded cores within the device implement control functions. Reference designs and component libraries have been developed for the CS344 course at Stanford University, Stanford, CA, and taught at a series of tutorials held in the United States, United Kingdom, India, China, Australia, and Europe. The open-source Verilog, C, Perl, and Java reference design is available for download from the project website.

Index Terms—Field-programmable gate arrays (FPGAs), Internet, networks, protocols, routing, switches.

Fig. 1. Photograph of a NetFPGA installed in a Desktop PC.

Commercial vendors of high-speed networking equipment use application specific integrated circuits (ASICs) and/or field-programmable gate arrays (FPGAs) to accelerate the switching, routing, and processing of packet data. To be competitive, students need to understand how these hardware-accelerated systems operate. Using the NetFPGA platform, students can build and prototype their own hardware-accelerated net-

Nick McKeown Academic Employment Current Research Interests

Last updated: October 10, 2017

Nick McKeown
Departments of Computer Science & Electrical Engineering
Gates 344, Stanford University, Stanford, CA 94305-9030
Tel: (650) 725-3641
Email: [email protected]
http://www.stanford.edu/~nickm

Academic Employment

Stanford University
● Kleiner Perkins, Mayfield, Sequoia Professor of Engineering (2012-)
● Professor of Electrical Engineering and Computer Science (2010-)
● Faculty Director, Open Networking Research Center (2012-2016)
● Faculty Director, Clean Slate Design for the Internet (2006-2012)
● Associate Professor of Electrical Engineering and Computer Science (2002-2010)
● Assistant Professor of Electrical Engineering and Computer Science (1995-2002)

Current research interests

Software-defined networks (SDN), programmable networks, languages for expressing forwarding behavior, net-neutrality and personalized networks.

Academic Background

Place of Study, Degree, Dates
University of California, Berkeley, PhD, May 1995, Electrical Engineering and Computer Science
University of California, Berkeley, MS, May 1992, Electrical Engineering and Computer Science
University of Leeds, England, BEng, May 1986, Electrical and Electronic Engineering

PhD Thesis: Scheduling Cells in an Input-Queued Cell Switch. Adviser: Professor Jean Walrand, University of California, Berkeley.

Other Organizations

P4 Language Consortium (P4.org), Board Member (2014)
Barefoot Networks Inc, Co-Founder, Chairman and Chief Scientist (2013)
Open Networking Lab (ON.Lab), Board Member (2011)
Open Networking Foundation (ONF), Co-Founder and Board Member (2010)
Nicira Networks Inc, Co-Founder and Board Member (2007-2012; Acquired by VMware). Nicira was one of the first “software-defined networking” (SDN) companies and invented the concept of “network virtualization”.
Nemo Systems Inc, CEO and Co-Founder (2003-2005; Acquired by Cisco). “Network Memory” saves networking companies hundreds of millions of dollars per year on high price SRAMs for packet buffering and event counters.

Managing Large-Scale, Distributed Systems Research Experiments with Control-Flows Tomasz Buchert

Managing large-scale, distributed systems research experiments with control-flows

Tomasz Buchert

To cite this version: Tomasz Buchert. Managing large-scale, distributed systems research experiments with control-flows. Distributed, Parallel, and Cluster Computing [cs.DC]. Université de Lorraine, 2016. English. tel-01273964

HAL Id: tel-01273964
https://tel.archives-ouvertes.fr/tel-01273964
Submitted on 15 Feb 2016

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

École doctorale IAEM Lorraine

Thesis presented and publicly defended on 6 January 2016 for the degree of Doctorat de l’Université de Lorraine (computer science) by Tomasz Buchert

Jury
Reviewers: Christian Pérez, Research Director, Inria, LIP, Lyon; Eric Eide, Research assistant professor, Univ. of Utah
Examiners: François Charoy, Professor, Université de Lorraine; Olivier Richard, Associate Professor (Maître de conférences), Univ. Joseph Fourier, Grenoble
Thesis advisors: Lucas Nussbaum, Associate Professor (Maître de conférences), Université de Lorraine; Jens Gustedt, Research Director, Inria, ICUBE, Strasbourg

Laboratoire Lorrain de Recherche en Informatique et ses Applications, UMR 7503

ABSTRACT (ENGLISH)

Keywords: distributed systems, large-scale experiments, experiment control, business processes, experiment provenance

Running experiments on modern systems such as supercomputers, cloud infrastructures or P2P networks became very complex, both technically and methodologically.

Software-Defined Networking: Improving Security for Enterprise

Software-defined Networking: Improving Security for Enterprise and Home Networks

by Curtis R. Taylor

A Dissertation Submitted to the Faculty of the WORCESTER POLYTECHNIC INSTITUTE in partial fulfillment of the requirements for the Degree of Doctor of Philosophy in Computer Science, May 2017

APPROVED: Professor Craig A. Shue, Dissertation Advisor; Professor Craig E. Wills, Head of Department; Professor Mark Claypool, Committee Member; Professor Thomas Eisenbarth, Committee Member; Doctor Nathanael Paul, External Committee Member

Abstract

In enterprise networks, all aspects of the network, such as placement of security devices and performance, must be carefully considered. Even with forethought, networks operators are ultimately unaware of intra-subnet traffic. The inability to monitor intra-subnet traffic leads to blind spots in the network where compromised hosts have unfettered access to the network for spreading and reconnaissance. While network security middleboxes help to address compromises, they are limited in only seeing a subset of all network traffic that traverses routed infrastructure, which is where middleboxes are frequently deployed. Furthermore, traditional middleboxes are inherently limited to network-level information when making security decisions.

Software-defined networking (SDN) is a networking paradigm that allows logically centralized control of network switches and routers. SDN can help address visibility concerns while providing the benefits of a centralized network control platform, but traditional switch-based SDN leads to concerns of scalability and is ultimately limited in that only network-level information is available to the controller. This dissertation addresses these SDN limitations in the enterprise by pushing the SDN functionality to the end-hosts. In doing so, we address scalability concerns and provide network operators with better situational awareness by incorporating system-level and graphical user interface (GUI) context into network information handled by the controller.

A Network in a Laptop: Rapid Prototyping for Software-Defined

A Network in a Laptop: Rapid Prototyping for Software-Defined Networks

Bob Lantz, Network Innovations Lab, DOCOMO USA Labs, Palo Alto, CA, USA, [email protected]
Brandon Heller, Dept. of Computer Science, Stanford University, Stanford, CA, USA, [email protected]
Nick McKeown, Dept. of Electrical Engineering and Computer Science, Stanford University, Stanford, CA, USA, [email protected]

ABSTRACT

Mininet is a system for rapidly prototyping large networks on the constrained resources of a single laptop. The lightweight approach of using OS-level virtualization features, including processes and network namespaces, allows it to scale to hundreds of nodes. Experiences with our initial implementation suggest that the ability to run, poke, and debug in real time represents a qualitative change in workflow. We share supporting case studies culled from over 100 users, at 18 institutions, who have developed Software-Defined Networks (SDN). Ultimately, we think the greatest value of Mininet will be supporting collaborative network research, by enabling self-contained SDN prototypes which anyone with a PC can download, run, evaluate, explore, tweak, and build upon.

1. INTRODUCTION

Inspiration hits late one night and you arrive at a world-changing idea: a new network architecture, address scheme, mobility protocol, or a feature to add to a router. With a paper deadline approaching, you have a laptop and three months. What prototyping environment should you use to evaluate your idea? With this question in mind, we set out to create a prototyping workflow with the following attributes:

Flexible: new topologies and new functionality should be defined in software, using familiar languages and operating systems.

Deployable: deploying a functionally correct pro-

A Defense Framework Against Denial-Of-Service in Computer Networks

A DEFENSE FRAMEWORK AGAINST DENIAL-OF-SERVICE IN COMPUTER NETWORKS

by Sherif Khattab
M.Sc. Computer Science, University of Pittsburgh, USA, 2004
B.Sc. Computer Engineering, Cairo University, Egypt, 1998

Submitted to the Graduate Faculty of the Department of Computer Science in partial fulfillment of the requirements for the degree of Doctor of Philosophy, University of Pittsburgh, 2008

UNIVERSITY OF PITTSBURGH, DEPARTMENT OF COMPUTER SCIENCE

This dissertation was presented by Sherif Khattab. It was defended on June 25th, 2008 and approved by Prof. Rami Melhem, Department of Computer Science; Prof. Daniel Mossé, Department of Computer Science; Prof. Taieb Znati, Department of Computer Science; Prof. Prashant Krishnamurthy, School of Information Sciences. Dissertation Advisors: Prof. Rami Melhem, Department of Computer Science; Prof. Daniel Mossé, Department of Computer Science.

Sherif Khattab, PhD, University of Pittsburgh, 2008

Denial-of-Service (DoS) is a computer security problem that poses a serious challenge to trustworthiness of services deployed over computer networks. The aim of DoS attacks is to make services unavailable to legitimate users, and current network architectures allow easy-to-launch, hard-to-stop DoS attacks. Particularly challenging are the service-level DoS attacks, whereby the victim service is flooded with legitimate-like requests, and the jamming attack, in which wireless communication is blocked by malicious radio interference. These attacks are overwhelming even for massively-resourced services, and effective and efficient defenses are highly needed.

This work contributes a novel defense framework, which I call dodging, against service-level DoS and wireless jamming.

DISSERTATION DOCTEUR DE L’UNIVERSITÉ DU LUXEMBOURG EN INFORMATIQUE Diego KREUTZ Dissertation Defence Committee

PhD-FSTM-2020-52
The Faculty of Sciences, Technology and Medicine

DISSERTATION

Defence held on 15/09/2020 in Luxembourg to obtain the degree of DOCTEUR DE L’UNIVERSITÉ DU LUXEMBOURG EN INFORMATIQUE by Diego KREUTZ, born on 29 November 1979 in Santa Rosa (Brazil)

LOGICALLY CENTRALIZED SECURITY FOR SOFTWARE-DEFINED NETWORKING

Dissertation defence committee
Dr Paulo Esteves-Veríssimo, dissertation supervisor, Professor, Université du Luxembourg
Dr Björn Ottersten, Chairman, Professor, Université du Luxembourg
Dr Radu State, Vice Chairman, Associate Professor, Université du Luxembourg
Dr Jennifer Rexford, Professor, Princeton University
Dr Sandra Scott-Hayward, Lecturer, Queen’s University Belfast

Acknowledgements

I am very proud of and grateful to my supervisors Paulo Esteves-Veríssimo and Fernando M. V. Ramos because I have learned so much with them. One of the few things I am sure of is my eternal debt with them. I am (arguably) sure I am a better researcher now because of them. In fact, I am still learning everyday something new with them. Paulo was also an awesome sort of coach in my personal life as well. He is an impressively smart and knowledgeable person. I have to say, in some ways, he is indeed better than a regular doctor. I know that (probably) just me and him will truly understand the meaning of this statement.

I had one of the most interesting and productive times after I met Jiangshan Yu. He is a very smart, easy going and inspiring researcher and person. Because of him, I started to better understand and to have joy in designing security protocols. JY inspired me in different ways. I consider myself lucky to have met him.