Recognizing Handwritten Japanese Characters Using Deep Convolutional Neural Networks

Recommended publications

Man'yogana.Pdf (574.0Kb)

John R. Bentley (2001). The origin of man'yōgana. Bulletin of the School of Oriental and African Studies, 64(1), pp. 59-73. DOI: 10.1017/S0041977X01000040. Published online: 18 April 2001. Link to this article: http://journals.cambridge.org/abstract_S0041977X01000040

The origin of man'yōgana. John R. Bentley, Northern Illinois University.

1. Introduction

The origin of man'yōgana, the phonetic writing system used by the Japanese, who originally had no script, is shrouded in mystery and myth. There is even a tradition that prior to the importation of Chinese script, the Japanese had a native script of their own, known as jindai moji ("age of the gods script"). Christopher Seeley (1991: 3) suggests that by the late thirteenth century, Shaku nihongi, a compilation of various earlier commentaries on Nihon shoki (Japan's first official historical record, 720), circulated the idea that Yamato had written script from the age of the gods, a mythical period when the deity Susanoo was believed by the Japanese court to have composed Japan's first poem, and the Sun goddess declared her son would rule the land below.

Supporting the Chinese, Japanese, and Korean Languages in the OpenVMS Operating System

SUPPORTING THE CHINESE, JAPANESE, AND KOREAN LANGUAGES IN THE OPENVMS OPERATING SYSTEM

By Michael M. T. Yau

ABSTRACT

The Asian language versions of the OpenVMS operating system allow Asian-speaking users to interact with the OpenVMS system in their native languages and provide a platform for developing Asian applications. Since the OpenVMS variants must be able to handle multibyte character sets, the requirements for the internal representation, input, and output differ considerably from those for the standard English version. A review of the Japanese, Chinese, and Korean writing systems and character set standards provides the context for a discussion of the features of the Asian OpenVMS variants. The localization approach adopted in developing these Asian variants was shaped by business and engineering constraints; issues related to this approach are presented.

INTRODUCTION

The OpenVMS operating system was designed in an era when English was the only language supported in computer systems. The Digital Command Language (DCL) commands and utilities, system help and message texts, run-time libraries and system services, and names of system objects such as file names and user names all assume English text encoded in the 7-bit American Standard Code for Information Interchange (ASCII) character set. As Digital's business began to expand into markets where common end users are non-English speaking, the requirement for the OpenVMS system to support languages other than English became inevitable. In contrast to the migration to support single-byte, 8-bit European characters, OpenVMS localization efforts to support the Asian languages, namely Japanese, Chinese, and Korean, must deal with a more complex issue, i.e., the handling of multibyte character sets.

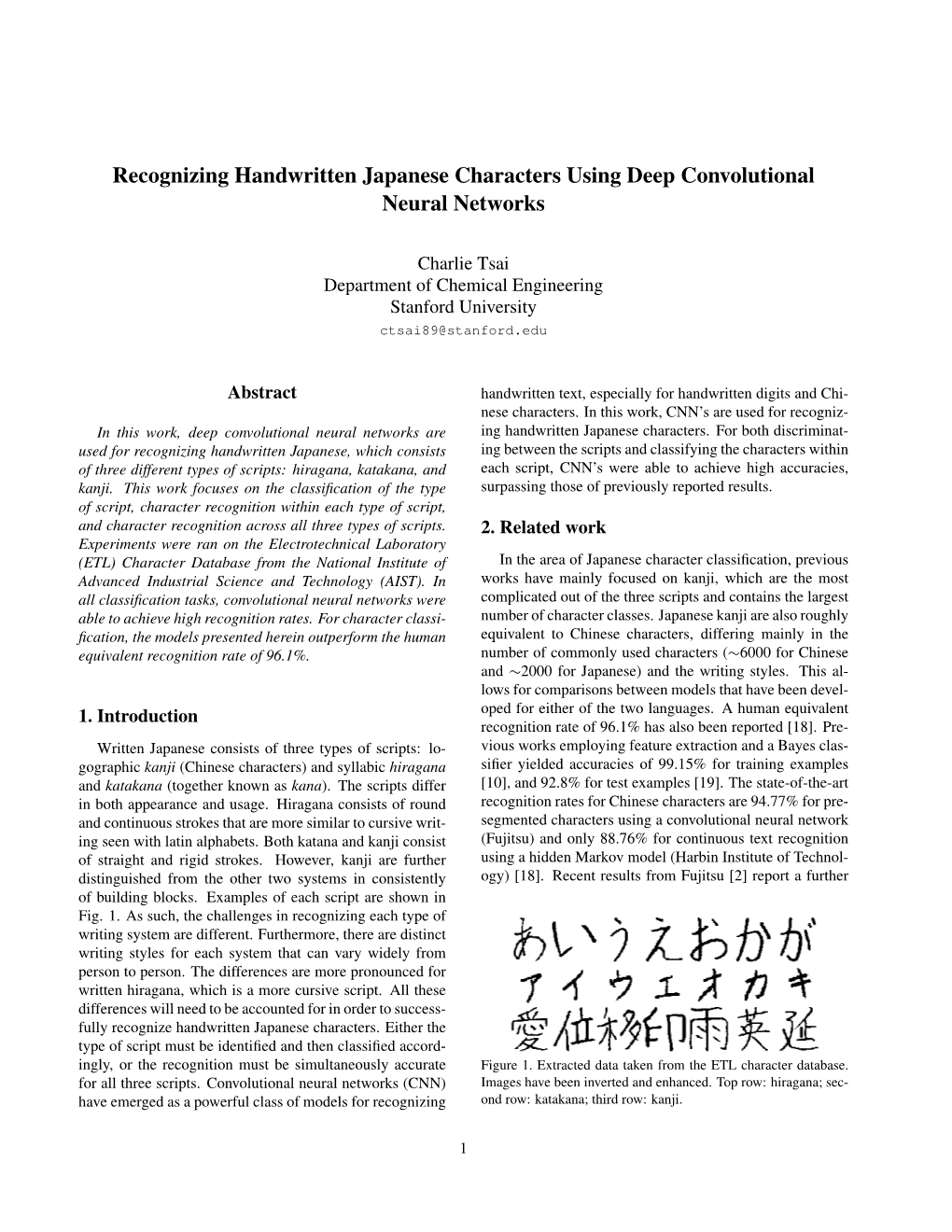

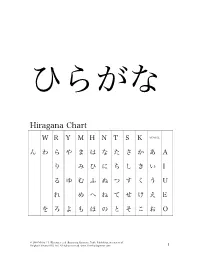

Hiragana Chart

ひらがな Hiragana Chart

|   | W | R | Y | M | H | N | T | S | K | VOWEL |   |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ん | わ | ら | や | ま | は | な | た | さ | か | あ | A |
|   |   | り |   | み | ひ | に | ち | し | き | い | I |
|   |   | る | ゆ | む | ふ | ぬ | つ | す | く | う | U |
|   |   | れ |   | め | へ | ね | て | せ | け | え | E |
|   | を | ろ | よ | も | ほ | の | と | そ | こ | お | O |

© 2010 Michael L. Kluemper et al. Beginning Japanese, Tuttle Publishing, an imprint of Periplus Editions (HK) Ltd. All rights reserved. www.TimeForJapanese.com.

Beginning Japanese, 1-1 Hiragana Activity Book

名前: ________________________ 日付: ___月 ___日

一、 Practice: あいうえお かきくけこ がぎぐげご

O E U I A
お え う い あ
あ お え う い あ お う あ え い あ お え う い お う い あ お え あ

KO KE KU KI KA
こ け く き か
か こ け く き か こ け く く き か か こ き き か こ こ け か け く く き き こ け か

GO GE GU GI GA
ご げ ぐ ぎ が
が ご げ ぐ ぎ が ご ご げ ぐ ぐ ぎ ぎ が が ご げ ぎ が ご ご げ が げ ぐ ぐ ぎ ぎ ご げ が

二、 Fill in each blank with the correct HIRAGANA. SE N SE I KI A RA NA MA E 1.

Handy Katakana Workbook.Pdf

First Edition
HANDY KATAKANA WORKBOOK
An Introduction to Japanese Writing: KANA
THIS IS A SUPPLEMENT FOR BEGINNING LEVEL JAPANESE LANGUAGE INSTRUCTION.
Y. M. Shimazu, Ed.D.

TABLE OF CONTENTS
Introduction
ACKNOWLEDGEMENTS
STUDYSHEET #1: A, I, U, E, O, KA, KI, KU, KE, KO, GA, GI, GU, GE, GO, N
WORKSHEET #1: PRACTICE: A, I, U, E, O, KA, KI, KU, KE, KO, GA, GI, GU, GE, GO, N
WORKSHEET #2: MORE PRACTICE: A, I, U, E, O, KA, KI, KU, KE, KO, GA, GI, GU, GE, GO, N
WORKSHEET #3: ADDITIONAL PRACTICE: A, I, U, E, O, KA, KI, KU, KE, KO, GA, GI, GU, GE, GO, N
STUDYSHEET #2: SA, SHI, SU, SE, SO, ZA, JI, ZU, ZE, ZO, TA, CHI, TSU, TE, TO, DA, DE, DO
WORKSHEET #4: PRACTICE: SA, SHI, SU, SE, SO, ZA, JI, ZU, ZE, ZO, TA, CHI, TSU, TE, TO, DA, DE, DO
WORKSHEET #5: MORE PRACTICE: SA, SHI, SU, SE, SO, ZA, JI, ZU, ZE, ZO, TA, CHI, TSU, TE, TO, DA, DE, DO
WORKSHEET #6: ADDITIONAL PRACTICE: SA, SHI, SU, SE, SO, ZA, JI, ZU, ZE, ZO, TA, CHI, TSU, TE, TO, DA, DE, DO
STUDYSHEET #3: NA, NI, NU, NE, NO, HA, HI, FU, HE, HO, BA, BI, BU, BE, BO, PA, PI, PU, PE, PO
WORKSHEET #7: PRACTICE: NA, NI, NU, NE, NO, HA, HI, FU, HE, HO, BA, BI, BU, BE, BO, PA, PI, PU, PE, PO
WORKSHEET #8: MORE PRACTICE: NA, NI, NU, NE, NO, HA, HI, FU, HE, HO, BA, BI, BU, BE, BO, PA, PI, PU, PE, PO
WORKSHEET #9: ADDITIONAL PRACTICE: NA, NI, NU, NE, NO, HA, HI, FU, HE, HO, BA, BI, BU, BE, BO, PA, PI, PU, PE, PO
STUDYSHEET #4: MA, MI, MU, ME, MO, YA, YU, YO
WORKSHEET #10: PRACTICE: MA, MI, MU, ME, MO, YA, YU, YO
WORKSHEET #11: MORE PRACTICE: MA, MI, MU, ME, MO, YA, YU, YO
WORKSHEET #12: ADDITIONAL PRACTICE: MA, MI, MU, ME, MO, YA, YU, YO
STUDYSHEET #5

Writing As Aesthetic in Modern and Contemporary Japanese-Language Literature

At the Intersection of Script and Literature: Writing as Aesthetic in Modern and Contemporary Japanese-language Literature

Christopher J Lowy

A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy, University of Washington, 2021. Reading Committee: Edward Mack (Chair), Davinder Bhowmik, Zev Handel, Jeffrey Todd Knight. Program Authorized to Offer Degree: Asian Languages and Literature. © Copyright 2021 Christopher J Lowy.

Abstract

This dissertation examines the dynamic relationship between written language and literary fiction in modern and contemporary Japanese-language literature. I analyze how script and narration come together to function as a site of expression, and how they connect to questions of visuality, textuality, and materiality. Informed by work from the field of textual humanities, my project brings together new philological approaches to visual aspects of text in literature written in the Japanese script. Because research in English on the visual textuality of Japanese-language literature is scant, my work serves as a fundamental first step in creating a new area of critical interest by establishing key terms and a general theoretical framework from which to approach the topic. Chapter One establishes the scope of my project and the vocabulary necessary for an analysis of script relative to narrative content; Chapter Two looks at one author’s relationship with written language; and Chapters Three and Four apply the concepts explored in Chapter One to a variety of modern and contemporary literary texts where script plays a central role.

Implementing Cross-Locale CJKV Code Conversion

Implementing Cross-Locale CJKV Code Conversion

Ken Lunde, CJKV Type Development, Adobe Systems Incorporated

ftp://ftp.oreilly.com/pub/examples/nutshell/ujip/unicode/iuc13-c2-paper.pdf
ftp://ftp.oreilly.com/pub/examples/nutshell/ujip/unicode/iuc13-c2-slides.pdf

September 10, 1998. Copyright © 1998 Adobe Systems Incorporated

Code Conversion Basics

• Algorithmic code conversion
  - Within a single locale: Shift-JIS, EUC-JP, and ISO-2022-JP
  - A purely mathematical process
• Table-driven code conversion
  - Required across locales: Chinese ↔ Japanese
  - Required when dealing with Unicode
  - Mapping tables are required
  - Can sometimes be faster than algorithmic code conversion (depends on the implementation)

Code Conversion Basics (Cont’d)

• CJKV character set differences
  - Different number of characters
  - Different ordering of characters
  - Different characters

Character Sets Versus Encodings

• Common CJKV character set standards
  - China: GB 1988-89, GB 2312-80; GB 1988-89, GBK
  - Taiwan: ASCII, Big Five; CNS 5205-1989, CNS 11643-1992
  - Hong Kong: ASCII, Big Five with Hong Kong extension
  - Japan: JIS X 0201-1997, JIS X 0208:1997, JIS X 0212-1990
  - South Korea: KS X 1003:1993, KS X 1001:1992, KS X 1002:1991
  - North Korea: ASCII (?), KPS 9566-97
  - Vietnam: TCVN 5712:1993, TCVN 5773:1993, TCVN 6056:1995
• Common CJKV encodings
  - Locale-independent: EUC-*, ISO-2022-*
  - Locale-specific: GBK, Big Five, Big Five Plus, Shift-JIS, Johab, Unified Hangul Code
  - Other: UCS-2, UCS-4, UTF-7, UTF-8,
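The distinction drawn in these slides between algorithmic and table-driven conversion can be illustrated with a small Python sketch. It is not taken from the slides: the function names `jis_to_sjis` and `eucjp_to_sjis` are hypothetical, and the sketch assumes input limited to ASCII and two-byte JIS X 0208 characters (half-width katakana and JIS X 0212 are rejected). The arithmetic is the commonly documented JIS-to-Shift-JIS byte mapping; the final check compares it with Python's built-in `euc_jp` and `shift_jis` codecs, which go through Unicode mapping tables.

```python
from typing import Tuple


def jis_to_sjis(j1: int, j2: int) -> Tuple[int, int]:
    """Map one JIS X 0208 code (two 7-bit bytes, 0x21-0x7E) to Shift-JIS bytes."""
    # Two JIS rows share one Shift-JIS lead byte; the lead-byte range is split in two.
    s1 = ((j1 + 1) >> 1) + (0x70 if j1 < 0x5F else 0xB0)
    if j1 % 2:
        # Odd row: first half of the trail-byte range, skipping 0x7F.
        s2 = j2 + (0x1F if j2 < 0x60 else 0x20)
    else:
        # Even row: second half of the trail-byte range.
        s2 = j2 + 0x7E
    return s1, s2


def eucjp_to_sjis(data: bytes) -> bytes:
    """Convert EUC-JP bytes to Shift-JIS using arithmetic only (no mapping table)."""
    out = bytearray()
    i = 0
    while i < len(data):
        b = data[i]
        if b < 0x80:
            # ASCII passes through unchanged.
            out.append(b)
            i += 1
        elif 0xA1 <= b <= 0xFE and i + 1 < len(data):
            # EUC-JP stores each JIS X 0208 byte with the high bit set.
            out.extend(jis_to_sjis(b - 0x80, data[i + 1] - 0x80))
            i += 2
        else:
            # Assumption of this sketch: 0x8E/0x8F sequences are out of scope.
            raise ValueError(f"unsupported EUC-JP byte 0x{b:02X} at offset {i}")
    return bytes(out)


if __name__ == "__main__":
    sample = "日本語 カタカナ abc"
    algorithmic = eucjp_to_sjis(sample.encode("euc_jp"))
    table_driven = sample.encode("shift_jis")  # Python's codec maps via Unicode
    print(algorithmic == table_driven)
```

Within a single locale the two routes should agree byte for byte on this input; across locales (for example Chinese to Japanese) no such arithmetic exists, which is why the slides call for mapping tables there.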

Digital Text

Digital text: The joys of character sets

Contents

Storing text
• General problems
• Legacy character encodings
• Unicode
• Markup languages

Using text
• Processing and display
• Programming languages

A little bit about writing systems

Overview

[Figure: family tree of scripts. Labelled nodes: Phoenician script, Sogdian script, Brahmi script, Sinitic script; Latin, Cyrillic, Greek, Armenian, Georgian, Hebrew, Arabic, Ethiopic, Thaana, Mongolian, Devanagari, Tibetan, Gujarati, Bengali, Gurumukhi, Oriya, Telugu, Kannada, Malayalam, Tamil, Sinhala, Burmese, Khmer, Thai, Lao, Chinese, Japanese, Korean.]

The easy ones

Latin is the alphabet and writing system used in the West and some other places. Greek and Cyrillic (Russian) are very similar; they just use different characters. Armenian and Georgian are also relatively similar.

More difficult

Hebrew is written from right-to-left, but numbers go left-to-right... Arabic has the same rules, but also requires variant selection depending on context and ligature forming.

The far east

Chinese uses two ’alphabets’: hanzi ideographs and zhuyin syllables. Japanese mixes four alphabets: kanji ideographs, katakana and hiragana syllables, and romaji (Latin) letters and numbers. Korean uses hangul ideographs, combined from jamo components. Vietnamese uses Latin letters...

The Indic languages

Based on syllabic alphabets. Require complex ligature forming. Letters are not written in logical order, but require a strange ’circular’ ordering. In addition, a single line consists of separate

Characteristics of Developmental Dyslexia in Japanese Kana: From

Ogawa et al., J Psychol Abnorm Child 2014, 3:3. Journal of Psychological Abnormalities in Children. DOI: 10.4172/2329-9525.1000126. ISSN: 2329-9525. Research Article, Open Access.

Characteristics of Developmental Dyslexia in Japanese Kana: from the Viewpoint of the Japanese Feature

Shino Ogawa1*, Miwa Fukushima-Murata2, Namiko Kubo-Kawai3, Tomoko Asai4, Hiroko Taniai5 and Nobuo Masataka6

1 Graduate School of Medicine, Kyoto University, Kyoto, Japan
2 Research Center for Advanced Science and Technology, the University of Tokyo, Tokyo, Japan
3 Faculty of Psychology, Aichi Shukutoku University, Aichi, Japan
4 Nagoya City Child Welfare Center, Aichi, Japan
5 Department of Pediatrics, Nagoya Central Care Center for Disabled Children, Aichi, Japan
6 Section of Cognition and Learning, Primate Research Institute, Kyoto University, Aichi, Japan

Abstract

This study identified the individual differences in the effects of Japanese Dyslexia. The participants consisted of 12 Japanese children who had difficulties in reading and writing Japanese and were suspected of having developmental disorders. A test battery was created on the basis of the characteristics of the Japanese language to examine Kana’s orthography-to-phonology mapping and target four cognitive skills: analysis of phonological structure, letter-to-sound conversion, visual information processing, and eye–hand coordination. An examination of the individual ability levels for these four elements revealed that reading and writing difficulties are not caused by a single disability, but by a combination of factors.

Developmental Reading Disorders in Japan— Prevalence, Profiles, and Possible Mechanisms

Top Lang Disorders, Vol. 34, No. 2, pp. 121–132. Copyright © 2014 Wolters Kluwer Health | Lippincott Williams & Wilkins

Developmental Reading Disorders in Japan: Prevalence, Profiles, and Possible Mechanisms

Yumiko Tanaka Welty, Lise Menn, and Noriko Oishi

Japan has been considered dyslexia-free because of the nature of the orthography, which consists of the visually simple kana syllabary and some thousands of visually complex, logographic kanji characters. It is true that few children struggle with learning kana, which provide consistent mappings between symbols and their pronunciation. Indeed, most children can read most of the kana by age 6. However, many Japanese children struggle with reading the kanji, which represent most of the content words in a text; in addition to their visual complexity and impoverished or nonexistent phonological information, kanji are difficult because they typically have several pronunciations and multiple meanings, depending on the context. Because kanji must be learned semantically rather than phonologically, many people believe that Japanese dyslexia is due to visuospatial rather than phonological processing impairments. We sketch the complex psycholinguistic demands of retrieving the correct pronunciations for kanji, especially in kanji compound words. Some individuals have extreme difficulty in learning the correspondences between these symbols and their sounds; whether these difficulties are visual, phonological, or both is an urgent topic for further research. After introducing Japanese orthography, we present 2 case studies. The first is a profile of a boy we observed from ages 7 to 20 years with difficulties in learning both kana and kanji. The second is a case study of using an interactive reading intervention for a fifth-grade boy with dyslexia.

AIX Globalization

AIX Version 7.1: AIX globalization. IBM.

Note: Before using this information and the product it supports, read the information in “Notices” on page 233.

This edition applies to AIX Version 7.1 and to all subsequent releases and modifications until otherwise indicated in new editions. © Copyright International Business Machines Corporation 2010, 2018. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Contents

About this document
Highlighting
Case-sensitivity in AIX
ISO 9000
AIX globalization
What's new
Separation of messages from programs
Conversion between code sets

Frank's Do-It-Yourself Kana Cards V

Frank's do-it-yourself kana cards, v. 1.0, 2000-08-07

Frank Stajano, University of Cambridge and AT&T Laboratories Cambridge
http://www.cl.cam.ac.uk/~fms27/ and http://www.uk.research.att.com/~fms/

This set of flash cards is meant to help you become fluent in the use of the Japanese hiragana and katakana syllabaries. I made it because I needed one myself and could not find it in the local bookshops (kanji cards were available, and I bought those; but kana cards weren't); if it helps you too, so much the better.

The romanisation system chosen for these cards is the Hepburn, which is the most [...]

[...] familiar cards and insist on the difficult ones.

The complete set consists of 10 double-sided sheets (20 printable pages) of 50 cards each, but you may choose to print smaller subsets as detailed below. Actually there are some blanks, so the total number of cards is only 428 instead of 500. It would have been possible to fit them on 9 sheets instead of 10, but only by [...]

[...] part of き and さ with a separate stroke, unlike what happens in the fonts used in this document. I have followed the stroke counts of Henshall-Takagaki, even when they seem weird for the shape of the character as drawn on the card.

The easiest way to turn this document into a set of cards is simply to print it (double sided of course!) and then cut each page into cards with a ruler and a sharp blade.

Katakana Back

1. “a” as in father (ア): “AAAaaa!” cried the critter as he fell off the edge of the cliff.
2. “i” as in easy (イ): An easel holds your picture while you work on it or display it.
3. “u” as in you (ウ): “Oooo!” The water balloon was cold as it splashed on his back!
4. “e” as in red: elevator doors
5. “o” as in oak (オ): an Olympic figure skater
6. “ka” as in car (カ): Katakana “ka” カ and hiragana “ka” か look a bit alike.
7. “ki” as in key (キ): Katakana “ki” キ and hiragana “ki” き look a bit alike.
8. “ku” as in cuckoo (ク): a cool way to write seven (7)
9. “ke” as in Kevin (ケ): a kangaroo
10. “ko” as in cocoa (コ): a cup of hot cocoa
11. “sa” as in saw (サ): A sawhorse holds wood while you cut it.
12. “shi” as in she (シ): She tilted her head and smiled.
13. “su” as in super (ス): It’s Superman... er, super-critter.
14. “se” as in set (セ): Katakana “se” and hiragana “se” (せ) look a little alike.
15. “so” as in so: When other kids said, “You only have one eye,” he said, “So!”
16. “ta” as in tall (タ): the leaning tower of Pisa (In Japanese “tower” is pronounced with a “ta” as in tall).
17. “chi” as in cheer (チ): a cheerleader
18. “tsu” as in cats: Two children are sliding down a slide. (“ts” like cats and “u” like you)
19. “te” as in telephone (テ): a telephone pole and wires
20. “to” as in totem (ト): a totem pole
21. “na” as in not: a knife
22. “ni” as in need: The Japanese word for “two” is ni.
23. “nu” as in new: a new way to write seven (7)
24. “ne” as in nest (ネ): a nest on top of a tree