A Framework for RDF Data Exploration and Conversion

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

12 AUGUST 2017 “For There Is Nothing Either Good Or Bad, but Thinking Makes It So.“ Hamlet, Act II, Scene 2

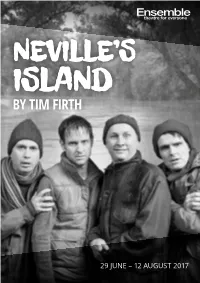

29 JUNE – 12 AUGUST 2017 “For there is nothing either good or bad, but thinking makes it so.“ Hamlet, Act II, Scene 2. Ramsey Island, Dargonwater. Friday. June. DIRECTOR ASSISTANT DIRECTOR MARK KILMURRY SHAUN RENNIE CAST CREW ROY DESIGNER ANDREW HANSEN HUGH O’CONNOR NEVILLE LIGHTING DESIGNER DAVID LYNCH BENJAMIN BROCKMAN ANGUS SOUND DESIGNER DARYL WALLIS CRAIG REUCASSEL DRAMATURGY GORDON JANE FITZGERALD CHRIS TAYLOR STAGE MANAGER STEPHANIE LINDWALL WITH SPECIAL THANKS TO: ASSISTANT STAGE MANAGER SLADE BLANCH / DANI IRONSIDE Jacqui Dark as Denise, WARDROBE COORDINATOR Shaun Rennie as DJ Kirk, ALANA CANCERI Vocal Coach Natasha McNamara, MAKEUP Kanen Breen & Kyle Rowling PEGGY CARTER First performed by Stephen Joseph Theatre, Scarborough in May 1992. NEVILLE’S ISLAND @Tim Firth Copyright agent: Alan Brodie Representation Ltd. www.alanbrodie.com RUNNING TIME APPROX 2 HOURS 10 MINUTES INCLUDING INTERVAL 02 9929 0644 • ensemble.com.au TIM FIRTH – PLAYWRIGHT Tim’s recent theatre credits include the musicals: THE GIRLS (West End, Olivier Nomination), THIS IS MY FAMILY (UK Theatre Award Best Musical), OUR HOUSE (West End, Olivier Award Best Musical) and THE FLINT STREET NATIVITY. His plays include NEVILLE’S ISLAND (West End, Olivier Nomination), CALENDAR GIRLS (West End, Olivier Nomination) SIGN OF THE TIMES (West End) and THE SAFARI PARTY. Tim’s film credits include CALENDAR GIRLS, BLACKBALL, KINKY BOOTS and THE WEDDING VIDEO. His work for television includes MONEY FOR NOTHING (Writer’s Guild Award), THE ROTTENTROLLS (BAFTA Award), CRUISE OF THE GODS, THE FLINT STREET NATIVITY and PRESTON FRONT (Writer’s Guild “For there is nothing either good or bad, but thinking makes it so.“ Award; British Comedy Award, RTS Award, BAFTA nomination). -

Stephen Harrington Thesis

PUBLIC KNOWLEDGE BEYOND JOURNALISM: INFOTAINMENT, SATIRE AND AUSTRALIAN TELEVISION STEPHEN HARRINGTON BCI(Media&Comm), BCI(Hons)(MediaSt) Submitted April, 2009 For the degree of Doctor of Philosophy Creative Industries Faculty Queensland University of Technology, Australia 1 2 STATEMENT OF ORIGINAL AUTHORSHIP The work contained in this thesis has not been previously submitted to meet requirements for an award at this or any other higher education institution. To the best of my knowledge and belief, the thesis contains no material previously published or written by another person, except where due reference is made. _____________________________________________ Stephen Matthew Harrington Date: 3 4 ABSTRACT This thesis examines the changing relationships between television, politics, audiences and the public sphere. Premised on the notion that mediated politics is now understood “in new ways by new voices” (Jones, 2005: 4), and appropriating what McNair (2003) calls a “chaos theory” of journalism sociology, this thesis explores how two different contemporary Australian political television programs (Sunrise and The Chaser’s War on Everything) are viewed, understood, and used by audiences. In analysing these programs from textual, industry and audience perspectives, this thesis argues that journalism has been largely thought about in overly simplistic binary terms which have failed to reflect the reality of audiences’ news consumption patterns. The findings of this thesis suggest that both ‘soft’ infotainment (Sunrise) and ‘frivolous’ satire (The Chaser’s War on Everything) are used by audiences in intricate ways as sources of political information, and thus these TV programs (and those like them) should be seen as legitimate and valuable forms of public knowledge production. -

Julian Morrow

Julian Morrow Co-founder of The Chaser; Entertaining MC and speaker Julian Morrow is a comedian and television producer and is best known for being a member of the satirical team The Chaser. Julian has made a career of public nuisance in various forms, co-founding satirical media empire The Chaser and joke company Giant Dwarf, as well as making TV shows including The Election Chaser, CNNNN, The Chaser’s War on Everything, The Hamster Wheel and The Checkout. His work has been nominated, unsuccessfully, for many awards, and prosecuted successfully in many courts. More about Julian Morrow: In recent years, he was taken to claiming credit for the work of others as Executive Producer of Lawrence Leung’s series Choose Your Own Adventure and Unbelievable, Eliza and Hannah Reilly’s Growing Up Gracefully and Sarah Scheller & Alison Bell’s The Letdown (which on the 2018 AACTA Award for Best TV Comedy). In 2012 and 2013 he lowered standards at ABC Radio National as host of Friday Drive, a program which led to huge career opportunities for its Monday-Thursday host, Waleed Aly. In 2015, Julian founded Giant Dwarf theatre at 199 Cleveland Street Redfern, a venue which has been described as “absolutely hilarious” by his accountant. Over the years, Julian has interviewed countless people whose cache he tries to cash in on in his CV including Edward Snowden, Christopher Hitchens, Sting, Nobel Peace Prize winner Leymah Gbowee, Marc Newsome and the curator of TED Chris Anderson. Due to the unavailability of several respected media commentators, in 2009 Julian was invited to give the Andrew Olle Media Lecture, an honour he later demeaned by creating The Annual Inaugural Chaser Lecture. -

Feduni Researchonline Copyright Notice

FedUni ResearchOnline https://researchonline.federation.edu.au Copyright Notice This is an original manuscript/preprint of an article published by Taylor & Francis in Continuum in September 2020, available online: https://doi.org/10.1080/10304312.2020.1782838 CRICOS 00103D RTO 4909 Page 1 of 1 Comic investigation and genre-mixing: the television docucomedies of Lawrence Leung, Judith Lucy and Luke McGregor Lesley Speed In the twenty-first century, comedians have come to serve as public commentators. This article examines the relationship between genre-mixing and cultural commentary in four documentary series produced for the Australian Broadcasting Corporation (ABC) that centre on established comedians. These series are Lawrence Leung’s Choose Your Own Adventure (2009), Judith Lucy’s Spiritual Journey (2011), Judith Lucy is All Woman (2015) and Luke Warm Sex (2016). Each series combines the documentary and comedy genres by centring on a comedian’s investigation of a theme such as spirituality, gender or sex. While appearing alongside news satire, these docucomedies depart from the latter by eschewing politics in favour of existential themes. Embracing conventions of personalized documentaries, these series use performance reflexively to draw attention to therapeutic discourses and awkward situations. Giving expression to uncertain and questioning views of contemporary spirituality, gender and mediated intimacies, the docucomedies of Lawrence Leung, Judith Lucy and Luke McGregor stage interventions in contemporary debates that constitute forms of public pedagogy. Documentary comedy These series can be identified as documentary comedies, or docucomedies, which combine humour and non-fiction representation without adhering primarily to either genre. Although the relationship between comedy and factual screen works has received little sustained attention, notes Paul Ward, a program can combine both genres (2005, 67, 78–9). -

Commercial Radio Australia

MEDIA RELEASE 22 September 2016 Chris Taylor and Andrew Hansen to host the 28th annual Australian Commercial Radio Awards Chris Taylor and Andrew Hansen, best known as members of the comedy group The Chaser, will host the 28th annual Australian Commercial Radio Awards (ACRAs) in Melbourne. Chris and Andrew were part of the full Chaser team that made their radio debut on Triple M (2003) with The Friday Chaser. They have worked on radio throughout their careers and in 2015 toured their live show, a parody of celebrity Q&As entitled In Conversation with Lionel Corn. Chris is currently a regular co-host of the Merrick & Australia national drive show on Triple M and presenter of the We Was Robbed, alongside HG Nelson on Kinderling Kids Radio on DAB+ digital radio. Writers, producers and stars of the TV shows Media Circus (2014-15), The Hamster Wheel (2011- 2013), Yes We Canberra! (2010), The Chaser’s War On Everything (2006-9), The Chaser Decides (2004, 2007), CNNNN (2002-3) and The Election Chaser (2001). Their shows won two AFI awards and two Logies. The ACRAs recognise excellence in radio broadcasting across news, talk, sport, music and entertainment. Commercial Radio Australia chief executive officer, Joan Warner said: “The ACRAs are the highlight of the year for the radio industry and we look forward to Chris and Andrew hosting the awards this year in Melbourne.” Radio announcers joining Chris and Andrew on stage to present awards will include: Hamish & Andy – Hit Network (Hamish Blake & Andy Lee) Jonesy & Amanda – WSFM (Brendan Jones & Amanda Keller) Kate, Tim & Marty – Nova Network (Kate Ritchie, Tim Blackwell & Marty Sheargold) Neil Mitchell – 3AW Rove & Sam – 2Day FM (Rove McManus & Sam Frost) Merrick Watts – Triple M, Southern Cross Austereo Jo & Lehmo – Gold 104.3 (Jo Stanley & Anthony Lehmann) Mike E & Emma – The Edge (Michael Etheridge & Emma Chow) The list of ACRA finalists is available here. -

Andrew Hansen

Andrew Hansen Comedian, musician, MC and The Chaser star Andrew Hansen is a comedian, pianist, guitarist, composer and vocalist as well as a writer and performer who is best known as a member of the Australian satirical team on ABC TV’s The Chaser. Andrew is very versatile on the corporate stage too. He can MC an event, give a custom-written comedy presentation or even compose and perform a song if inspired to do so. Andrew’s radio work includes shows on Triple M as well as composing and starring in the musical comedy series and ARIA Award winning album The Blow Parade (triple j, 2010). The Chaser’s TV shows include Media Circus (2014-15), The Hamster Wheel (2011-2013), Yes We Canberra! (2010), The Chaser’s War On Everything (2006-9), The Chaser Decides (2004, 2007) and CNNNN (2002-3). On the Seven network, Andrew produced The Unbelievable Truth (2012). He has also appeared as a guest on most Australian TV shows that have guests. In print he wrote for the humorous fortnightly newspaper The Chaser (1999-2005), eleven Chaser Annuals (Text Publishing, 2000-10), and for the recently launched Chaser Quarterly (2015). On stage Andrew composed and starred in the musical Dead Caesar (Sydney Theatre Company), did two national tours with The Chaser, and two live collaborations with Chris Taylor (One Man Show, 2014 and In Conversation with Lionel Corn, 2015). The Chaser team also runs a cool theatre in Sydney called Giant Dwarf. Andrew composed all The Chaser’s songs as well as the theme music for Media Circus and The Chaser’s War On Everything. -

The Chaser Team

The Chaser Team Satirical Entertainment The Chaser is a satirical media empire which rivals Rupert Murdoch’s News Corp in all fields except power, influence, popularity and profitability. The Chaser is the group behind ABC TV series The Election Chaser (2001), CNNNN (2002) and CNNNN Live (2003) which together have lost a host of Australian and international TV comedy awards. However, their credibility-building run of losses ended in 2004 when CNNNN tied with Kath & Kim to win the Logie for Most Outstanding TV Comedy. In 2005 they won the Logie for Most Outstanding TV Comedy with the hugely popular, The Chaser Decides (ABC). In 2006, the team returned to ABC TV with The Chaser’s War On Everything, which won them two AFI Awards (Best Comedy and Best Comedy Performer for Andrew Hansen), as well as several court appearances. The show returned in 2009. Extremely popular in the corporate arena, The Chaser Team offer a range of speaking and entertainment options tailored to client’s needs. As hosts and presenters, they are beautifully irrelevant with just the right amount of professionalism. As after dinner and keynote speakers, they can present custom-written satirical songs and parodies of corporate presentations including powerpoint and trivia quizzes. More about The Chaser: The Chaser was founded in 1999 as a fortnightly satirical newspaper produced out of a spare bedroom by The Chaser Team: Charles Firth, Craig Reucassel, Julian Morrow, Dominic Knight, Andrew Hansen, Chas Licciardello and Chris Taylor. Since those humble beginnings, the Chaser team has lost its humility and has produced ClassicTM comedy in all media, including print, online, radio, television and Christmas crackers. -

School & Library Catalogue

2018 TERM 3 & 4 SCHOOL & LIBRARY CATALOGUE Walker Books Australia 1 WALKER BOOKS AUSTRALIA Walker Books Australia has been bringing the best of children’s publishing to Australian children for 25 years and is recognised as a market leader in quality children’s publishing. For more information visit WWW.WALKERBOOKS.COM.AU Black Dog Books, an imprint of Walker Books Australia, publishes some of Australia's finest writers including Carole Wilkinson, Sue Lawson and Suzy Zail. Black Dog Books are Australian stories for Australian readers. WALKER BOOKS CLASSROOM Walker Books Classroom is the perfect destination for teachers and librarians to find the right book to use in the classroom and find free teacher resources. For more information visit CLASSROOM.WALKERBOOKS.COM.AU Keep up to date with the latest news and releases with the Walker Books Classroom Newsletter classroom.walkerbooks.com.au/home/newsletter Twitter @walker_class Facebook @walkerbooksaus Instagram @walkerbooksaus [email protected] 2 NARRATIVE NONFICTION PICTURE BOOK THE HAPPINESS BOX: A WARTIME BOOK OF HOPE Mark Greenwood Andrew McLean 9781925081381 Hardback August 2018 Recommended age: 7-10 Walker Books Australia The inspirational true story of a book that became a National Treasure. In 1942, Sergeant “Griff” Griffin was a prisoner of war. With Christmas approaching, he decided to make a book for the children cooped up in nearby Changi Prison. The book was said to contain the secrets to happiness. But the enemy was suspicious … THEMES TEACHER NOTES WWII, hope, compassion, courage. classroom.walkerbooks.com.au/thehappinessbox 3 PICTURE BOOK BACKYARD Ananda Braxton-Smith Lizzy Newcomb 9781925381177 Hardback August 2018 Recommended age: 5-8 Black Dog Books A lush, lyrical picture book all about the life that children can find in a suburban backyard. -

NEWMEDIA Greig ‘Boldy’ Bolderrow, 103.5 Mix FM (103.5 Triple Postal Address: M)/ 101.9 Sea FM (Now Hit 101.9) GM, Has Retired from Brisbane Radio

Volume 29. No 9 Jocks’ Journal May 1-16,2017 “Australia’s longest running radio industry publication” ‘Boldy’ Bows Out Of Radio NEWMEDIA Greig ‘Boldy’ Bolderrow, 103.5 Mix FM (103.5 Triple Postal Address: M)/ 101.9 Sea FM (now Hit 101.9) GM, has retired from Brisbane radio. His final day was on March 31. Greig began PO Box 2363 his career as a teenage announcer but he will be best Mansfield BC Qld 4122 remembered for his 33 years as General Manager for Web Address: Southern Cross Austereo in Wide Bay. The day after www.newmedia.com.au he finished his final exam he started his job at the Email: radio station. He had worked a lot of jobs throughout [email protected] the station before becoming the general manager. He started out as an announcer at night. After that he Phone Contacts: worked on breakfast shows and sales, all before he Office: (07) 3422 1374 became the general manager.” He managed Mix and Mobile: 0407 750 694 Sea in Maryborough and 93.1 Sea FM in Bundaberg, as well as several television channels. He says that supporting community organisations was the best part of the job. Radio News The brand new Bundy breakfast Karen-Louise Allen has left show has kicked off on Hitz939. ARN Sydney. She is moving Tim Aquilina, Assistant Matthew Ambrose made the to Macquarie Media in the Content Director of EON move north from Magic FM, role of Direct Sales Manager, Broadcasters, is leaving the Port Augusta teaming up with Sydney. -

Ruby Hutchison Memorial Lecture Co-Hosted by CHOICE and Presented by Julian Morrow

2021 National Consumer Congress virtual lecture series How can we rebuild a safer, fairer and more sustainable Australia post pandemic? Program Session 1: Monday 15 March—World Consumer Rights Day Ruby Hutchison memorial lecture Co-hosted by CHOICE and presented by Julian Morrow 4:30–4:35 pm Welcome Delia Rickard, Deputy Chair, ACCC 4:35–4:40 pm Acknowledgement of Country 4:40–4:50 pm Introduction to Ruby Hutchison and CHOICE Alan Kirkland, CEO, CHOICE 4:50–4:55 pm Ruby Hutchison biography Video presentation 4:55–5.25 pm Ruby Hutchison memorial lecture Julian Morrow, Co-creator and Executive Producer, The Checkout 5:25–5:55 pm Q&A session Moderated by ACCC/CHOICE staff via Teams Live chat 5:55–6:00 pm Closing comments Delia Rickard Australian Eastern Daylight Time (AEDT) #ConsumerCongress2021 @acccgovau Speaker Biographies (in order of appearance) Delia Rickard Deputy Chair, Australian Competition and Consumer Commission Delia Rickard is the Deputy Chair of the Australian Competition and Consumer Commission (ACCC). She has been working in the area of consumer protection for around 30 years. Delia is passionate about ensuring consumers can operate in a well-regulated, fair and ethical marketplace and have access to the information and tools they need to make good choices and exercise their rights. Prior to her current role, Delia spent time in senior roles at the Australian Securities and Investments Commission and the ACCC. She is also trustee of the Jan Pentland Foundation and chairs the Good Shepherd Microfinance—Financial Inclusion Action Plan Advisory Group. On Australia Day 2011 Delia was awarded the Public Service Medal for her contribution to consumer protection and financial services. -

Playful Politicians and Serious Satirists

This article was downloaded by: [115.187.238.16] On: 26 April 2015, At: 08:19 Publisher: Routledge Informa Ltd Registered in England and Wales Registered Number: 1072954 Registered office: Mortimer House, 37-41 Mortimer Street, London W1T 3JH, UK Comedy Studies Publication details, including instructions for authors and subscription information: http://www.tandfonline.com/loi/rcos20 Playful politicians and serious satirists: comedic and earnest interplay in Australian political discourse Rebecca Higgiea a Department of Communication and Cultural Studies, Curtin University, Western Australia Published online: 09 Apr 2015. Click for updates To cite this article: Rebecca Higgie (2015): Playful politicians and serious satirists: comedic and earnest interplay in Australian political discourse, Comedy Studies, DOI: 10.1080/2040610X.2015.1026077 To link to this article: http://dx.doi.org/10.1080/2040610X.2015.1026077 PLEASE SCROLL DOWN FOR ARTICLE Taylor & Francis makes every effort to ensure the accuracy of all the information (the “Content”) contained in the publications on our platform. However, Taylor & Francis, our agents, and our licensors make no representations or warranties whatsoever as to the accuracy, completeness, or suitability for any purpose of the Content. Any opinions and views expressed in this publication are the opinions and views of the authors, and are not the views of or endorsed by Taylor & Francis. The accuracy of the Content should not be relied upon and should be independently verified with primary sources of information. Taylor and Francis shall not be liable for any losses, actions, claims, proceedings, demands, costs, expenses, damages, and other liabilities whatsoever or howsoever caused arising directly or indirectly in connection with, in relation to or arising out of the use of the Content. -

From The'little Aussie Bleeder'to Newstopia:(Really) Fake News In

This may be the author’s version of a work that was submitted/accepted for publication in the following source: Harrington, Stephen (2012) From the ’little Aussie bleeder’ to newstopia: (Really) fake news in Aus- tralia. Popular Communication, 10(1 - 2), pp. 27-39. This file was downloaded from: https://eprints.qut.edu.au/48573/ c Consult author(s) regarding copyright matters This work is covered by copyright. Unless the document is being made available under a Creative Commons Licence, you must assume that re-use is limited to personal use and that permission from the copyright owner must be obtained for all other uses. If the docu- ment is available under a Creative Commons License (or other specified license) then refer to the Licence for details of permitted re-use. It is a condition of access that users recog- nise and abide by the legal requirements associated with these rights. If you believe that this work infringes copyright please provide details by email to [email protected] Notice: Please note that this document may not be the Version of Record (i.e. published version) of the work. Author manuscript versions (as Sub- mitted for peer review or as Accepted for publication after peer review) can be identified by an absence of publisher branding and/or typeset appear- ance. If there is any doubt, please refer to the published source. https://doi.org/10.1080/15405702.2012.638571 From The “Little Aussie Bleeder” to Newstopia: (Really) Fake News in Australia Stephen Harrington Media & Communication Queensland University of Technology GPO Box 2434 Brisbane, QLD 4001 Australia Ph: (07) 3138 8177 Fx: (07) 3138 8195 [email protected] Abstract This paper offers an overview of the key characteristics of “fake” news in the Australian national context.