Daniel Cintra Cugler

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Herpetofauna of Serra Do Timbó, an Atlantic Forest Remnant in Bahia State, Northeastern Brazil

Herpetology Notes, volume 12: 245-260 (2019) (published online on 03 February 2019) Herpetofauna of Serra do Timbó, an Atlantic Forest remnant in Bahia State, northeastern Brazil Marco Antonio de Freitas1, Thais Figueiredo Santos Silva2, Patrícia Mendes Fonseca3, Breno Hamdan4,5, Thiago Filadelfo6, and Arthur Diesel Abegg7,8,* Originally, the Atlantic Forest Phytogeographical The implications of such scarce knowledge on the Domain (AF) covered an estimated total area of conservation of AF biodiversity are unknown, but they 1,480,000 km2, comprising 17% of Brazil’s land area. are of great concern (Lima et al., 2015). However, only 160,000 km2 of AF still remains, the Historical data on deforestation show that 11% of equivalent to 12.5% of the original forest (SOS Mata AF was destroyed in only ten years, leading to a tragic Atlântica and INPE, 2014). Given the high degree of estimate that, if this rhythm is maintained, in fifty years threat towards this biome, concomitantly with its high deforestation will completely eliminate what is left of species richness and significant endemism, AF has AF outside parks and other categories of conservation been classified as one of twenty-five global biodiversity units (SOS Mata Atlântica, 2017). The future of the AF hotspots (e.g., Myers et al., 2000; Mittermeier et al., will depend on well-planned, large-scale conservation 2004). Our current knowledge of the AF’s ecological strategies that must be founded on quality information structure is based on only 0.01% of remaining forest. about its remnants to support informed decision- making processes (Kim and Byrne, 2006), including the investigations of faunal and floral richness and composition, creation of new protected areas, the planning of restoration projects and the management of natural resources. -

Amphibia: Anura) Da Reserva Catuaba E Seu Entorno, Acre, Amazônia Sul-Ocidental

Efeitos da sucessão florestal sobre a anurofauna (Amphibia: Anura) da Reserva Catuaba e seu entorno, Acre, Amazônia sul-ocidental Vanessa M. de Souza 1; Moisés B. de Souza 2 & Elder F. Morato 2 1 Avenida Assis Chateaubriand 1170, ap. 304, Edifício Azul, Setor Oeste, 74130-011 Goiânia, Goiás, Brasil. E-mail: [email protected] 2 Departamento de Ciências da Natureza, Universidade Federal do Acre. Rodovia BR-364, km 04, Distrito Industrial, 69915-900 Rio Branco, Acre, Brasil. E-mail: [email protected]; [email protected] ABSTRACT. Effect of the forest succession on the anurans (Amphibia(Amphibia: Anura) of the Reserve Catuaba and its peripheryy, Acree, southwestern Amazonia. The objective of this work it was verify the abundance, richness, and the anuran composition in plots of vegetation of different succession stages in a forest and the matrix that surrounds it, of Acre (10º04’S, 67º37’W). The sampling was carried out between August 2005 and April 2006 in twelve plots located in three different sites in the forest. In each site four kinds of environments were chosen: primary forest (wood), secondary forest (capoeira), periphery (matrix) and secondary forest (succession). A total of twenty-seven species distributed in seven families was found. Greater abundance was registered in the plots of matrix two and capoeira three and the least in succession one. The richness was greater in matrix two, with the greater number of exclusive species. The abundance of anurans correlated significantly, with the average circum- ference at the breast height of the trees of the plots. The richness however correlated only marginally, with this structural feature. -

Download Download

Phyllomedusa 17(2):285–288, 2018 © 2018 Universidade de São Paulo - ESALQ ISSN 1519-1397 (print) / ISSN 2316-9079 (online) doi: http://dx.doi.org/10.11606/issn.2316-9079.v17i2p285-288 Short CommuniCation A case of bilateral anophthalmy in an adult Boana faber (Anura: Hylidae) from southeastern Brazil Ricardo Augusto Brassaloti and Jaime Bertoluci Escola Superior de Agricultura Luiz de Queiroz, Universidade de São Paulo. Av. Pádua Dias 11, 13418-900, Piracicaba, SP, Brazil. E-mails: [email protected], [email protected]. Keywords: absence of eyes, deformity, malformation, Smith Frog. Palavras-chave: ausência de olhos, deformidade, malformação, sapo-ferreiro. Morphological deformities, commonly collected and adult female Boana faber with osteological malformations of several types, bilateral anophthalmy in the Estação Ecológica occur in natural populations of amphibians dos Caetetus, Gália Municipality, state of São around the world (e.g., Peloso 2016, Silva- Paulo, Brazil (22°24'11'' S, 49°42'05'' W); the Soares and Mônico 2017). Ouellet (2000) and station encompasses 2,178.84 ha (Tabanez et al. Henle et al. (2017) provided comprehensive 2005). The animal was collected at about 660 m reviews on amphibian deformities and their a.s.l. in an undisturbed area (Site 9 of Brassaloti possible causes. Anophthalmy, the absence of et al. 2010; 22°23'27'' S, 49°41'31'' W; see this one or both eyes, has been documented in some reference for a map). The female is a subadult anuran species (Henle et al. 2017 and references (SVL 70 mm) and was collected on 13 May therein, Holer and Koleska 2018). -

Sexual Maturity and Sexual Dimorphism in a Population of the Rocket-Frog Colostethus Aff

Tolosa et al. Actual Biol Volumen 37 / Número 102, 2014 Sexual maturity and sexual dimorphism in a population of the rocket-frog Colostethus aff. fraterdanieli (Anura: Dendrobatidae) on the northeastern Cordillera Central of Colombia Madurez y dimorfismo sexual de la ranita cohete Colostethus aff. fraterdanieli (Anura: Dendrobatidae) en una población al este de la Cordillera Central de Colombia Yeison Tolosa1, 2, *, Claudia Molina-Zuluaga1, 4,*, Adriana Restrepo1, 5, Juan M. Daza1, ** Abstract The minimum size of sexual maturity and sexual dimorphism are important life history traits useful to study and understand the population dynamics of any species. In this study, we determined the minimum size at sexual maturity and the existence of sexual dimorphism in a population of the rocket-frog, Colostethus aff. fraterdanieli, by means of morphological and morphometric data and macro and microscopic observation of the gonads. Females attained sexual maturity at 17.90 ± 0.1 mm snout-vent length (SVL), while males attained sexual maturity at 16.13 ± 0.06 mm SVL. Females differed from males in size, shape and throat coloration. Males were smaller than females and had a marked and dark throat coloration that sometimes extended to the chest, while females lacked this characteristic, with a throat either immaculate or weakly pigmented. In this study, we describe some important aspects of the reproductive ecology of a population of C. aff. fraterdanieli useful as a baseline for other more specialized studies. Key words: Amphibian, Andes, gonads, histology, morphometry, reproduction Resumen El tamaño mínimo de madurez sexual y el dimorfismo sexual son importantes características de historia de vida, útiles para estudiar y comprender la dinámica poblacional de cualquier especie. -

New Species of the Rhinella Crucifer Group (Anura, Bufonidae) from the Brazilian Cerrado

Zootaxa 3265: 57–65 (2012) ISSN 1175-5326 (print edition) www.mapress.com/zootaxa/ Article ZOOTAXA Copyright © 2012 · Magnolia Press ISSN 1175-5334 (online edition) New species of the Rhinella crucifer group (Anura, Bufonidae) from the Brazilian Cerrado WILIAN VAZ-SILVA1,2,5, PAULA HANNA VALDUJO3 & JOSÉ P. POMBAL JR.4 1 Departamento de Ciências Biológicas, Centro Universitário de Goiás – Uni-Anhanguera, Rua Professor Lázaro Costa, 456, CEP: 74.415-450 Goiânia, GO, Brazil. 2 Laboratório de Genética e Biodiversidade, Departamento de Biologia, Instituto de Ciências Biológicas, Universidade Federal de Goiás, Campus Samambaia, Cx. Postal 131 CEP: 74.001-970, Goiânia, GO, Brazil. 3 Departamento de Ecologia. Universidade de São Paulo. Rua do Matão, travessa 14. CEP: 05508-900, São Paulo, SP, Brazil. 4 Universidade Federal do Rio de Janeiro, Departamento de Vertebrados, Museu Nacional, Quinta da Boa Vista, CEP: 20940-040, Rio de Janeiro, RJ, Brazil. 5 Corresponding author: E-mail: [email protected] Abstract A new species of Rhinella of Central Brazil from the Rhinella crucifer group is described. Rhinella inopina sp. nov. is restricted to the disjunct Seasonal Tropical Dry Forests enclaves in the western Cerrado biome. The new species is characterized mainly by head wider than long, shape of parotoid gland, and oblique arrangement of the parotoid gland. Data on natural history and distribution are also presented. Key words: Rhinella crucifer group, Seasonally Dry Forest, Cerrado, Central Brazil Introduction The cosmopolitan Bufonidae family (true toads) presented currently 528 species. The second most diverse genus of Bufonidae, Rhinella Fitzinger 1826, comprises 77 species, distributed in the Neotropics and some species were introduced in several world locations (Frost 2011). -

For Review Only

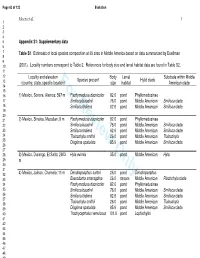

Page 63 of 123 Evolution Moen et al. 1 1 2 3 4 5 Appendix S1: Supplementary data 6 7 Table S1 . Estimates of local species composition at 39 sites in Middle America based on data summarized by Duellman 8 9 10 (2001). Locality numbers correspond to Table 2. References for body size and larval habitat data are found in Table S2. 11 12 Locality and elevation Body Larval Subclade within Middle Species present Hylid clade 13 (country, state, specific location)For Reviewsize Only habitat American clade 14 15 16 1) Mexico, Sonora, Alamos; 597 m Pachymedusa dacnicolor 82.6 pond Phyllomedusinae 17 Smilisca baudinii 76.0 pond Middle American Smilisca clade 18 Smilisca fodiens 62.6 pond Middle American Smilisca clade 19 20 21 2) Mexico, Sinaloa, Mazatlan; 9 m Pachymedusa dacnicolor 82.6 pond Phyllomedusinae 22 Smilisca baudinii 76.0 pond Middle American Smilisca clade 23 Smilisca fodiens 62.6 pond Middle American Smilisca clade 24 Tlalocohyla smithii 26.0 pond Middle American Tlalocohyla 25 Diaglena spatulata 85.9 pond Middle American Smilisca clade 26 27 28 3) Mexico, Durango, El Salto; 2603 Hyla eximia 35.0 pond Middle American Hyla 29 m 30 31 32 4) Mexico, Jalisco, Chamela; 11 m Dendropsophus sartori 26.0 pond Dendropsophus 33 Exerodonta smaragdina 26.0 stream Middle American Plectrohyla clade 34 Pachymedusa dacnicolor 82.6 pond Phyllomedusinae 35 Smilisca baudinii 76.0 pond Middle American Smilisca clade 36 Smilisca fodiens 62.6 pond Middle American Smilisca clade 37 38 Tlalocohyla smithii 26.0 pond Middle American Tlalocohyla 39 Diaglena spatulata 85.9 pond Middle American Smilisca clade 40 Trachycephalus venulosus 101.0 pond Lophiohylini 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 Evolution Page 64 of 123 Moen et al. -

Linking Environmental Drivers with Amphibian Species Diversity in Ponds from Subtropical Grasslands

Anais da Academia Brasileira de Ciências (2015) 87(3): 1751-1762 (Annals of the Brazilian Academy of Sciences) Printed version ISSN 0001-3765 / Online version ISSN 1678-2690 http://dx.doi.org/10.1590/0001-3765201520140471 www.scielo.br/aabc Linking environmental drivers with amphibian species diversity in ponds from subtropical grasslands DARLENE S. GONÇALVES1, LUCAS B. CRIVELLARI2 and CARLOS EDUARDO CONTE3*,4 1Programa de Pós-Graduação em Zoologia, Universidade Federal do Paraná, Caixa Postal 19020, 81531-980 Curitiba, PR, Brasil 2Programa de Pós-Graduação em Biologia Animal, Universidade Estadual Paulista, Rua Cristovão Colombo, 2265, Jardim Nazareth, 15054-000 São José do Rio Preto, SP, Brasil 3Universidade Federal do Paraná. Departamento de Zoologia, Caixa Postal 19020, 81531-980 Curitiba, PR, Brasil 4Instituto Neotropical: Pesquisa e Conservação. Rua Purus, 33, 82520-750 Curitiba, PR, Brasil Manuscript received on September 17, 2014; accepted for publication on March 2, 2015 ABSTRACT Amphibian distribution patterns are known to be influenced by habitat diversity at breeding sites. Thus, breeding sites variability and how such variability influences anuran diversity is important. Here, we examine which characteristics at breeding sites are most influential on anuran diversity in grasslands associated with Araucaria forest, southern Brazil, especially in places at risk due to anthropic activities. We evaluate the associations between habitat heterogeneity and anuran species diversity in nine body of water from September 2008 to March 2010, in 12 field campaigns in which 16 species of anurans were found. Of the seven habitat descriptors we examined, water depth, pond surface area and distance to the nearest forest fragment explained 81% of total species diversity. -

Identification of the Taxonomic Status of Scinax Nebulosus and Scinax Constrictus (Scinaxinae, Anura) Based on Molecular Markers T

Brazilian Journal of Biology https://doi.org/10.1590/1519-6984.225646 ISSN 1519-6984 (Print) Original Article ISSN 1678-4375 (Online) Identification of the taxonomic status of Scinax nebulosus and Scinax constrictus (Scinaxinae, Anura) based on molecular markers T. M. B. Freitasa* , J. B. L. Salesb , I. Sampaioc , N. M. Piorskia and L. N. Weberd aUniversidade Federal do Maranhão – UFMA, Departamento de Biologia, Laboratório de Ecologia e Sistemática de Peixes, Programa de Pós-graduação Bionorte, Grupo de Taxonomia, Biogeografia, Ecologia e Conservação de Peixes do Maranhão, São Luís, MA, Brasil bUniversidade Federal do Pará – UFPA, Centro de Estudos Avançados da Biodiversidade – CEABIO, Programa de Pós-graduação em Ecologia Aquática e Pesca – PPGEAP, Grupo de Investigação Biológica Integrada – GIBI, Belém, PA, Brasil cUniversidade Federal do Pará – UFPA, Instituto de Estudos Costeiros – IECOS, Laboratório e Filogenomica e Bioinformatica, Programa de Pós-graduação Biologia Ambiental – PPBA, Grupo de Estudos em Genética e Filogenômica, Bragança, PA, Brasil dUniversidade Federal do Sul da Bahia – UFSB, Centro de Formação em Ciências Ambientais, Instituto Sosígenes Costa de Humanidades, Artes e Ciências, Departamento de Ciências Biológicas, Laboratório de Zoologia, Programa de Pós-graduação Bionorte, Grupo Biodiversidade da Fauna do Sul da Bahia, Porto Seguro, BA, Brasil *e-mail: [email protected] Received: June 26, 2019 – Accepted: May 4, 2020 – Distributed: November 30, 2021 (With 4 figures) Abstract The validation of many anuran species is based on a strictly descriptive, morphological analysis of a small number of specimens with a limited geographic distribution. The Scinax Wagler, 1830 genus is a controversial group with many doubtful taxa and taxonomic uncertainties, due a high number of cryptic species. -

Etar a Área De Distribuição Geográfica De Anfíbios Na Amazônia

Universidade Federal do Amapá Pró-Reitoria de Pesquisa e Pós-Graduação Programa de Pós-Graduação em Biodiversidade Tropical Mestrado e Doutorado UNIFAP / EMBRAPA-AP / IEPA / CI-Brasil YURI BRENO DA SILVA E SILVA COMO A EXPANSÃO DE HIDRELÉTRICAS, PERDA FLORESTAL E MUDANÇAS CLIMÁTICAS AMEAÇAM A ÁREA DE DISTRIBUIÇÃO DE ANFÍBIOS NA AMAZÔNIA BRASILEIRA MACAPÁ, AP 2017 YURI BRENO DA SILVA E SILVA COMO A EXPANSÃO DE HIDRE LÉTRICAS, PERDA FLORESTAL E MUDANÇAS CLIMÁTICAS AMEAÇAM A ÁREA DE DISTRIBUIÇÃO DE ANFÍBIOS NA AMAZÔNIA BRASILEIRA Dissertação apresentada ao Programa de Pós-Graduação em Biodiversidade Tropical (PPGBIO) da Universidade Federal do Amapá, como requisito parcial à obtenção do título de Mestre em Biodiversidade Tropical. Orientador: Dra. Fernanda Michalski Co-Orientador: Dr. Rafael Loyola MACAPÁ, AP 2017 YURI BRENO DA SILVA E SILVA COMO A EXPANSÃO DE HIDRELÉTRICAS, PERDA FLORESTAL E MUDANÇAS CLIMÁTICAS AMEAÇAM A ÁREA DE DISTRIBUIÇÃO DE ANFÍBIOS NA AMAZÔNIA BRASILEIRA _________________________________________ Dra. Fernanda Michalski Universidade Federal do Amapá (UNIFAP) _________________________________________ Dr. Rafael Loyola Universidade Federal de Goiás (UFG) ____________________________________________ Alexandro Cezar Florentino Universidade Federal do Amapá (UNIFAP) ____________________________________________ Admilson Moreira Torres Instituto de Pesquisas Científicas e Tecnológicas do Estado do Amapá (IEPA) Aprovada em de de , Macapá, AP, Brasil À minha família, meus amigos, meu amor e ao meu pequeno Sebastião. AGRADECIMENTOS Agradeço a CAPES pela conceção de uma bolsa durante os dois anos de mestrado, ao Programa de Pós-Graduação em Biodiversidade Tropical (PPGBio) pelo apoio logístico durante a pesquisa realizada. Obrigado aos professores do PPGBio por todo o conhecimento compartilhado. Agradeço aos Doutores, membros da banca avaliadora, pelas críticas e contribuições construtivas ao trabalho. -

Revista 6-1 Jan-Jun 2007 RGB NOVA.P65

Phyllomedusa 6(1):61-68, 2007 © 2007 Departamento de Ciências Biológicas - ESALQ - USP ISSN 1519-1397 Visual and acoustic signaling in three species of Brazilian nocturnal tree frogs (Anura, Hylidae) Luís Felipe Toledo1, Olívia G. S. Araújo1, Lorena D. Guimarães2, Rodrigo Lingnau3, and Célio F. B. Haddad1 1 Departamento de Zoologia, Instituto de Biociências, Universidade Estadual Paulista. Caixa Postal 199, 13506-970, Rio Claro, SP, Brazil. E-mail: [email protected]. 2 Departamento de Biologia, Instituto de Biociências, Universidade Federal de Goiás. Caixa Postal 131, 74001-970, Goiânia, GO, Brazil. 3 Laboratório de Herpetologia, Museu de Ciências e Tecnologia & Faculdade de Biociências, Pontifícia Universidade Católica do Rio Grande do Sul. Av. Ipiranga, 6681, 90619-900, Porto Alegre, RS, Brazil. Abstract Visual and acoustic signaling in three species of Brazilian nocturnal tree frogs (Anura, Hylidae). Visual communication seems to be widespread among nocturnal anurans, however, reports of these behaviors in many Neotropical species are lacking. Therefore, we gathered information collected during several sporadic field expeditions in central and southern Brazil with three nocturnal tree frogs: Aplastodiscus perviridis, Hypsiboas albopunctatus and H. bischoffi. These species displayed various aggressive behaviors, both visual and acoustic, towards other males. For A. perviridis we described arm lifting and leg kicking; for H. albopunctatus we described the advertisement and territorial calls, visual signalizations, including a previously unreported behavior (short leg kicking), and male-male combat; and for H. bischoffi we described the advertisement and fighting calls, toes and fingers trembling, leg lifting, and leg kicking. We speculate about the evolution of some behaviors and concluded that the use of visual signals among Neotropical anurans may be much more common than suggested by the current knowledge. -

Diet Composition of Lysapsus Bolivianus Gallardo, 1961 (Anura, Hylidae) of the Curiaú Environmental Protection Area in the Amazonas River Estuary

Herpetology Notes, volume 13: 113-123 (2020) (published online on 11 February 2020) Diet composition of Lysapsus bolivianus Gallardo, 1961 (Anura, Hylidae) of the Curiaú Environmental Protection Area in the Amazonas river estuary Mayara F. M. Furtado1 and Carlos E. Costa-Campos1,* Abstract. Information on a species’ diet is important to determine habitat conditions and resources and to assess the effect of preys on the species distribution. The present study aimed describing the diet of Lysapsus bolivianus in the floodplain of the Curiaú Environmental Protection Area. Individuals of L. bolivianus were collected by visual search in the floodplain of the Curiaú River. A total of 60 specimens of L. bolivianus were euthanized with 2% lidocaine, weighed, and their stomachs were removed for diet analysis. A total of 3.020 prey items were recorded in the diet. The most representative preys were: Diptera (36.21%), Collembola (16.61%), and Hemiptera (8.31%), representing 73.20% of the total consumed prey. Based on the Index of Relative Importance for males, females, and juveniles, the most important items in the diet were Diptera and Collembola. The richness of preys recorded in the diet of L. bolivianus in the dry season was lower than that of the rainy season. Regarding prey abundance and richness, L. bolivianus can be considered a generalist species and a passive forager, with a diet dependent on the availability of preys in the environment. Keywords. Amphibia; Eastern Amazon, Niche overlap, Predation, Prey diversity, Pseudinae Introduction (Toft 1980; 1981; Donnelly, 1991; López et al., 2009; López et al., 2015). The diet of most anuran species is composed mainly of The genus Lysapsus Cope, 1862 is restricted to South arthropods and because of the opportunistic behaviour of America and comprises aquatic and semi-aquatic anurans many species, anurans are usually regarded as generalist inhabiting temporary or permanent ponds with large predators (Duellman and Trueb, 1994). -

Dietary Resource Use by an Assemblage of Terrestrial Frogs from the Brazilian Cerrado

NORTH-WESTERN JOURNAL OF ZOOLOGY 15 (2): 135-146 ©NWJZ, Oradea, Romania, 2019 Article No.: e181502 http://biozoojournals.ro/nwjz/index.html Dietary resource use by an assemblage of terrestrial frogs from the Brazilian Cerrado Thiago MARQUES-PINTO1,*, André Felipe BARRETO-LIMA1,2,3 and Reuber Albuquerque BRANDÃO1 1. Laboratório de Fauna e Unidades de Conservação, Departamento de Engenharia Florestal, Universidade de Brasília, Brasília – DF, Brazil. 70.910-900, [email protected] 2. Departamento de Ciências Fisiológicas, Instituto de Ciências Biológicas, Universidade de Brasília, Campus Darcy Ribeiro, Brasília – DF, Brazil. 70.910-900, [email protected] 3. Programa de Pós-Graduação em Ecologia, Instituto de Ciências Biológicas, Universidade de Brasília, Campus Darcy Ribeiro, Brasília – DF, Brazil. 70.910-900. *Corresponding author, T. Marques-Pinto, E-mail: [email protected] Received: 08. June 2016 / Accepted: 07. April 2018 / Available online: 19. April 2018 / Printed: December 2019 Abstract. Diet is an important aspect of the ecological niche, and assemblages are often structured based on the ways food resources are partitioned among coexisting species. However, few works investigated the use of food resources in anuran communities in the Brazilian Cerrado biome. Thereby, we studied the feeding ecology of an anuran assembly composed of six terrestrial frog species in a Cerrado protected area. Our main purpose was to detect if there was a structure in the assemblage based on the species’ diet, in terms of the feeding niche overlap and the species’ size. All specimens were collected by pitfall traps placed along a lagoon margin, during the rainy season. We collected six frog species: Elachistocleis cesarii (172 individuals), Leptodactylus fuscus (10), L.