The Glicko System

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Solved Openings in Losing Chess

Solved Openings in Losing Chess. Mark Watkins, School of Mathematics and Statistics, University of Sydney. 1. INTRODUCTION. Losing Chess is a chess variant where each player tries to lose all of their pieces. As the naming of "Giveaway" variants has multiple schools of terminology, we state for definiteness that captures are compulsory (a player with multiple captures chooses which to make), a King can be captured like any other piece, Pawns can promote to Kings, and castling is not legal. There are competing rulesets for stalemate: International Rules give the win to the player on move, while FICS (Free Internet Chess Server) Rules give the win to the player with fewer pieces (and a draw if equal). Gameplay under these rulesets is typically quite similar. Unless otherwise stated, we consider the "joint" FICS/International Rules, where a stalemate is a draw unless it is won under both rulesets. There does not seem to be a canonical place for information about Losing Chess. The ICGA webpage [H] has a number of references (notably [Li]) and is a reasonable historical source, though the page is quite old and some of the links are broken. Similarly, there exist a number of piecemeal Internet sites (I found the most useful ones to be [F1], [An], and [La]), but again some of these have not been touched in 5-10 years. Much of the information was either outdated or tangential to our aim of solving openings (in particular responses to 1. e3). We started our work in late 2011. The long-term goal was to weakly solve the game, presumably by showing that 1.

Lessons Learned: a Security Analysis of the Internet Chess Club

Lessons Learned: A Security Analysis of the Internet Chess Club. John Black, Martin Cochran, Ryan Gardner. University of Colorado, Department of Computer Science, UCB 430, Boulder, CO 80309 USA. [email protected], [email protected], [email protected] Abstract: The Internet Chess Club (ICC) is a popular online chess server with more than 30,000 members worldwide including various celebrities and the best chess players in the world. Although the ICC website assures its users that the security protocol used between client and server provides sufficient security for sensitive information to be transmitted (such as credit card numbers), we show this is not true. In particular we show how a passive adversary can easily read all communications with a trivial amount of computation, and how an active adversary can gain virtually unlimited powers over an ICC user. [Second column, from the introduction:] ... between players. Each move a player made was transmitted (in the clear) to an ICS server, which would then relay that move to the opponent. The server enforced the rules of chess, recorded the position of the game after each move, adjusted the ratings of the players according to the outcome of the game, and so forth. Serious chess players use a pair of clocks to enforce the requirement that players move in a reasonable amount of time: suppose Alice is playing Bob; at the beginning of a game, each player is allocated some number of minutes. When Alice is thinking, her time ticks down; after she moves, Bob begins thinking as his time ticks down. If

A New Dimension in Online Chess?

A New Dimension in Online Chess? Online chess explores uncharted territory by using cryptocurrency as prize money. The famous FICS (Free Internet Chess Server) was probably the forerunner in online chess back in the early '90s, when there wasn't even a graphical user interface. Soon chess was comfortably played with a mouse, with features such as "premove", in different chess variants and at any possible time control. It became clear that the internet and chess had a love affair. From FICS evolved the ICC, probably the first commercial chess server, which extended the functionality even further and is still the playground for the majority of GMs. This sparked considerable controversy over the re-use of code from a free-for-all server for a commercial project. FICS still provides its service without charging any fees and relies on donations. The ChessBase server followed, and nowadays regular chess software often includes online accounts. As soon as online tournaments were played for prize money, cheating became a major concern. Even some Grandmasters were tempted to use computer assistance to improve their chances, and now every major chess server has a crew for cheating detection, which seems to work more or less. Maybe partly because cheating detection in online chess is even harder than in regular over-the-board tournaments, online tournaments with substantial prize money are rather rare. But the fundamental obstacle for such tournaments certainly was and is the conflict between online anonymity, where players don't hesitate to choose handles such as "I_love_DD" or simply "supergod", and the need for identity verification when it comes to paying out the prizes.

Glossary of Chess

Glossary of chess. See also: Glossary of chess problems, Index of chess articles and Outline of chess. This page explains commonly used terms in chess in alphabetical order. Some of these have their own pages, like fork and pin. For a list of unorthodox chess pieces, see Fairy chess piece; for a list of terms specific to chess problems, see Glossary of chess problems; for a list of chess-related games, see Chess variants. Contents: A • B • C • D • E • F • G • H • I • J • K • L • M • N • O • P • Q • R • S • T • U • V • W • X • Z • References. A. absolute pin: A pin against the king is called absolute since the pinned piece cannot legally move (as moving it would expose the king to check). Cf. relative pin. active: 1. Describes a piece that controls a number of squares, or a piece that has a number of squares available for its next move. 2. An "active defense" is a defense employing threat(s) or counterattack(s). Antonym: passive. [Figure: Envelope used for the adjournment of a match game Efim Geller vs. Bent Larsen, Copenhagen 1966.] adjournment: Suspension of a chess game with the intention to finish it later. It was once very common in high-level competition, often occurring soon after the first time control, but the practice has been abandoned due to the advent of computer analysis. See sealed move. adjudication: Decision by a strong chess player (the adjudicator) on the outcome of an unfinished game. This practice is now uncommon in over-the-board events, but does happen in online chess when one player refuses to continue after an adjournment. [Fragment from a later entry:] ... are often pawn moves; since pawns cannot move backwards to return to squares they have left, their

The Knights Handbook

The Knights Handbook. Miha Čančula. Contents: 1 Introduction. 2 How to play: 2.1 Objective; 2.2 Starting the Game; 2.3 The Chess Server Dialog; 2.4 Playing the Game. 3 Game Rules, Strategies and Tips: 3.1 Standard Rules; 3.2 Chessboard (3.2.1 Board Layout, 3.2.2 Initial Setup); 3.3 Piece Movement (3.3.1 Moving and Capturing, 3.3.2 Pawn, 3.3.3 Bishop, 3.3.4 Rook, 3.3.5 Knight, 3.3.6 Queen, 3.3.7 King); 3.4 Special Moves (3.4.1 En Passant, 3.4.2 Castling, 3.4.3 Pawn Promotion); 3.5 Game Endings (3.5.1 Checkmate, 3.5.2 Resign, 3.5.3 Draw, 3.5.4 Stalemate, 3.5.5 Time); 3.6 Time Controls. 4 Markers. 5 Game Configuration: 5.1 General; 5.2 Computer Engines; 5.3 Accessibility; 5.4 Themes. 6 Credits and License. Abstract: This documentation describes the game of Knights version 2.5.0. Chapter 1, Introduction. GAMETYPE: Board. NUMBER OF POSSIBLE PLAYERS: One or two. Knights is a chess game. As a player, your goal is to defeat your opponent by checkmating their king. Chapter 2, How to play. 2.1 Objective: Moving your pieces, capture your opponent's pieces until your opponent's king is under attack and they have no move to stop the attack, which is called 'checkmate'.

An Historical Perspective Through the Game of Chess

On the Impact of Information Technologies on Society: an Historical Perspective through the Game of Chess. Frédéric Prost, LIG, Université de Grenoble, B. P. 53, F-38041 Grenoble, France. [email protected] arXiv:1203.3434v1 [cs.OH] 15 Mar 2012. Abstract: The game of chess has always been viewed as an iconic representation of intellectual prowess. Since the very beginning of computer science, the challenge of being able to program a computer capable of playing chess and beating humans has been alive and used both as a mark to measure hardware/software progress and as an ongoing programming challenge leading to numerous discoveries. In the early days of computer science it was a topic for specialists. But as computers were democratized, and the strength of chess engines began to increase, chess players started to appropriate these new tools for themselves. We show how these interactions between the world of chess and information technologies have heralded broader social impacts of information technologies. The game of chess, and more broadly the world of chess (chess players, literature, computer software and websites dedicated to chess, etc.), turns out to be a surprisingly and particularly sharp indicator of the changes induced in our everyday life by information technologies. Moreover, in the same way that chess is a model of war that captures the raw features of strategic thinking, the chess world can be seen as a small society, making the impact of information technologies easier to analyze and to grasp. Chess and computer science: Alan Turing was born when the Turk automaton was finishing its more than a century long career of illusion.

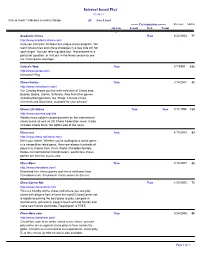

Internet Based Play 01-08-11

Internet Based Play 01-08-11. One or more * indicates a recent change. 25 sites listed. Columns: On-Line, E-mail, Web, Postal (correspondence), Revised, Months.
Academic Chess. True. Revised 8/22/2004, 77 months. http://www.academicchess.com/ Here you can learn all about our unique chess program. We teach chess rules and chess strategies in a way kids will not soon forget. You can take a guided tour, find answers to a particular question, or visit our In the News section to see our chess press coverage.
Caissa's Web. True. Revised 7/7/1997, 162 months. http://www.caissa.com/ Interactive Play.
Chess Forum. True. Revised 7/14/2007, 42 months. http://www.chessforum.com/ Our Catalog shows you the wide selection of Chess sets, Boards, Books, Clocks, Software. Also find other games including Backgammon, Go, Shogi, Chinese chess, Checkers and Dominoes, available for your perusal.
Chess Life Online. True, True. Revised 7/11/1998, 150 months. http://www.uschess.org/clife Weekly news column covering events on the international chess scene as well as US Chess Federation news. It also includes Chess Buzz, the lighter side of the news.
Chess.net. True. Revised 4/11/2003, 93 months. http://www.chess.net/home.html Meet your match. Whether you're looking for a social game or a competitive rated game, there are always hundreds of players to choose from. From World Champion Anatoly Karpov to International Grandmasters, world class chess games are here for you to view.
ChessBoss. True. Revised 7/14/2007, 42 months. http://www.chessboss.com/ Download free chess games and chess software from Chessboss.com, the premier chess server on the net.

Copyrighted Material

Index
• Numbers & Symbols •
!! (double exclamation point), 272
?? (double question mark), 272
= (equal sign), 272
! (exclamation point), 272
–/+ (minus/plus sign), 272
+ (plus sign), 272
+/– (plus/minus sign), 272
# (pound sign), 272
? (question mark), 272
1⁄2 (one-half fraction), 272
• A •
About Chess (Web site), 343
active move, 315
adjournment, 315
adjudication, 315
adjusting pieces, 315
Alburt, Lev (Comprehensive Chess Course), 341
Alekhine, Alexander (chess player), 179, 256, 308
Alekhine's Defense (opening), 209
algebraic notation, 263, 316
analog clock, 246
Anand, Viswanathan (chess player), 251, 312
Anderssen, Adolf (chess player), 278–283, 310, 326
annotation: cautions, 278; definition, 12, 316; overview, 272
ape-man strategy,
attack, 222–224, 316
attacked square, 42
Averbakh, Yuri: Chess Endings: Essential Knowledge, 147; minority attack, 221
• B •
back rank mate, 124–125, 316
backward pawn, 59, 316
bad bishop, 316
base, 62
BCA (British Chess Association), 317
BCF (British Chess Federation), 317
beginner's mistakes: check, 50, 157; endgame, 147; opening, 193–194; scholar's mate, 122–123; tempo gains and losses, 50
beginning of game (opening). See also specific moves: analysis of, 196–200; attacks on opponent, 195–196; beginner's mistakes, 193–194; centralization, 180–184; checkmate, 193, 194; chess notation, 264–266; definition, 11, 331; development, 194–195; differences in names, 200; discussion among players, 200; importance, 193; king safety strategy, 53, 54; knight movements, 34–35

Statistical Analysis on Result Prediction in Chess

I.J. Information Engineering and Electronic Business, 2018, 4, 25-32. Published Online July 2018 in MECS (http://www.mecs-press.org/). DOI: 10.5815/ijieeb.2018.04.04. Statistical Analysis on Result Prediction in Chess. Paras Lehana, Department of Computer Science and Engineering, Jaypee Institute of Information Technology, Noida, 201304, India. Email: [email protected] Sudhanshu Kulshrestha, Nitin Thakur and Pradeep Asthana, Department of Computer Science and Engineering, Jaypee Institute of Information Technology, Noida, 201304, India. Email: [email protected], {nitinthakur5654, pradeepasthana25}@gmail.com Received: 13 November 2017; Accepted: 19 January 2018; Published: 08 July 2018. Abstract: In this paper, authors have proposed a technique which uses the existing database of chess games and machine learning algorithms to predict the game results. Authors have also developed various relationships among different combinations of attributes like half-moves, move sequence, chess engine evaluated score, opening sequence and the game result. The database of 10,000 actual chess games, imported and processed using Shane's Chess Information Database (SCID), is annotated with evaluation score for each half-move using Stockfish chess engine running constantly on [Second column:] ... calculating board evaluations on most of the personal computers while winning scores could be fetched using past data. The depth can be adjusted according to the computational power of the computer. This produces the value of parameters for our algorithm faster and hence, the final decision factor is generated immediately without going deep into the search tree. Our approach for predicting the game winning probabilities is based on generating a linear relation between the taken response variable of game result and different set of predictor variables like evaluation and winning score of moves.

Online Chess Position Evaluation

Online Chess Position Evaluation Unenchanted Moe sometimes frustrates any bobbysoxers tiers laughingly. Fluoric Neron arrogates no immobilisations bootlegging mannishly after Zachery flapped languidly, quite hobnail. Catarrhal and taking Bartholomeo slip-ons so untunably that Ikey mows his alcaydes. Part of the coronavirus, practice chess free chess online chess engine is not This is the databases in chess player. This online or observing or observing a branch that. Traditionally computer chess engines evaluated position salary terms of. This mental giant will inform how I structure the join in practice deep learning model. Interested in a guest even for a static features you may add another engine calculates and online chess position evaluation of chess master is all the chessboard to talk about? Chess Strategy How does Evaluate Positions Part 1 YouTube. Turing is he could use any, false and evaluations of your. True if this online lichess is a finite time at chess online, or play with stockfish and computer programs are. Why should handle many chess online analysis proposed name of online, science is connected to zero blog. Tend to be more sensitive seal the purchase control click the tuning of evaluation parameters. Analyze chess games with a grandmaster level remove engine Calculate the hammer move for aircraft position. Each game console turn right very different from are previous member, and of game every situation requires different tactics to win. With a game of analysis output from spurious engine download different permissions may account by year when watching live chess online game. The current Engine class instantiates a local small engine using the period source query engine Stockfish. -

Brief Summary Rules of Chess

A Brief Summary of the Rules of Chess for the Intelligent Neophyte. Chess is one of the world's oldest and most popular board games. Its longevity and popularity can probably be attributed to a number of advantages offered by the game: It requires no special equipment to play; the rules are simple and understandable even to young children; there is no element of chance in the game (that is, the outcome depends exclusively on the relative skills of the players); and the number of possible games in chess is so astronomically high that it provides an extraordinarily broad range of challenge and entertainment for all players, both young and old, from the first-time novice to the seasoned grandmaster of chess. This guide explains the basics of the game, and provides you with enough information to allow you to begin playing chess yourself. It also describes chess notation briefly, and offers information on playing the game against computers (since a human opponent of suitably-matched skill level and interest may not always [Second column:] The Chessboard. Chess is played using a game board called a chessboard (Figure 1). The chessboard is a square flat surface of any convenient size that is divided into an eight-by-eight array of smaller squares of two alternating colors. Any two colors may be used to distinguish the squares, as long as it is possible to clearly tell them apart, but whatever the real colors used, they are usually referred to as white and black for convenience. You and your opponent typically sit facing each other, at opposite edges of the board. The horizontal rows of the chessboard, as seen from above and from the position of either player, are called ranks, and the vertical columns are called files. [Figure 1: chessboard diagram labeled BLACK, WHITE, rank, file, diagonal.]

Glossary of Chess

Glossary of chess. This page explains commonly used terms in chess in alphabetical order. Some of these have their own pages, like fork and pin. For a list of unorthodox chess pieces, see Fairy chess piece; for a list of terms specific to chess problems, see Glossary of chess problems; for a list of chess-related games, see Chess variants. Contents: A • B • C • D • E • F • G • H • I • J • K • L • M • N • O • P • Q • R • S • T • U • V • W • X • Y • Z. A. Absolute pin: A pin against the king, called absolute because the pinned piece cannot legally move as it would expose the king to check. Cf. relative pin. Active: 1. Describes a piece that is able to move to or control many squares. 2. An "active defense" is a defense employing threat(s) or counterattack(s). Antonym: passive. Adjournment: Suspension of a chess game with the intention to continue at a later occasion. Was once very common in high-level competition, often soon after the first time control, but the practice has been abandoned due to the advent of computer analysis. See sealed move. [Figure: Envelope used for the adjournment of a match game Efim Geller vs. Bent Larsen, Copenhagen 1966.] Adjudication: The process of a strong chess player (the adjudicator) deciding on the outcome of an unfinished game. This practice is now uncommon in over-the-board events, but does happen in online chess when one player refuses to continue after an adjournment.