Processing-In-Memory: a Workload-Driven Perspective

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

Performance Impact of Memory Channels on Sparse and Irregular Algorithms

Performance Impact of Memory Channels on Sparse and Irregular Algorithms. Oded Green (1,2), James Fox (2), Jeffrey Young (2), Jun Shirako (2), and David Bader (3). (1) NVIDIA Corporation, (2) Georgia Institute of Technology, (3) New Jersey Institute of Technology

Abstract: Graph processing is typically considered to be a memory-bound rather than compute-bound problem. One common line of thought is that more available memory bandwidth corresponds to better graph processing performance. However, in this work we demonstrate that the key factor in the utilization of the memory system for graph algorithms is not necessarily the raw bandwidth or even the latency of memory requests. Instead, we show that performance is proportional to the number of memory channels available to handle small data transfers with limited spatial locality. Using several widely used graph frameworks, including Gunrock (on the GPU) and GAPBS & Ligra (for CPUs), we evaluate key graph analytics kernels using two unique memory hierarchies, DDR-based and HBM/MCDRAM. Our results show that the differences in the peak bandwidths …

Graph algorithms are typically latency-bound if there is not enough parallelism to saturate the memory subsystem. However, the growth in parallelism for shared-memory systems brings into question whether graph algorithms are still primarily latency-bound. Getting peak or near-peak bandwidth of current memory subsystems requires highly parallel applications as well as good spatial and temporal memory reuse. The lack of spatial locality in sparse and irregular algorithms means that prefetched cache lines have poor data reuse and that the effective bandwidth is fairly low. The introduction of high-bandwidth memories like HBM and HMC have not yet closed this inefficiency gap for latency-sensitive accesses [19]. -

For Immediate Release

FOR IMMEDIATE RELEASE. ACM (Association for Computing Machinery) and IEEE Computer Society. Contacts: Virginia Gold (ACM), 212-626-0505, [email protected]; Margo McCall (IEEE Computer Society), 714-816-2182, [email protected]

ACM, IEEE COMPUTER SOCIETY HONOR INNOVATOR OF HIGH-PERFORMANCE COMPUTER SYSTEMS DESIGNS. University of Wisconsin’s Sohi Developed Advances Widely Adopted by Microprocessor Manufacturers to Increase the Power of Computing

NEW YORK, MAY 4, 2011 -- ACM (the Association for Computing Machinery) and the IEEE Computer Society (IEEE-CS) will jointly present the Eckert-Mauchly Award to Gurindar S. (Guri) Sohi of the University of Wisconsin-Madison for pioneering widely used micro-architectural techniques in the design of high-performance microprocessors. These innovations increase the instruction-level parallelism, a measure of how many operations in a computer program can be performed simultaneously. They can be found in almost every commercial microprocessor used today in personal computers and high-end servers.

The Eckert-Mauchly Award (http://awards.acm.org/eckert_mauchly, www.computer.org/portal/web/awards/eckert) is known as the computer architecture community’s most prestigious award. Sohi will receive the 2011 Eckert-Mauchly Award at the International Symposium on Computer Architecture (ISCA), held as part of the Federated Computing Research Conference (FCRC, http://www.acm.org/fcrc), June 7, 2011, in San Jose, CA.

Early in his career, Sohi articulated a model for a dynamically scheduled processor supporting precise exceptions. This model has served as the basis for many commercial superscalar microprocessors that have been designed and built since the early 1990s. His group also proposed the idea of memory dependence prediction to further improve instruction-level parallelism, a technology that has been considered a key innovation in some recent microprocessors. -

Department of Computer Science

click! MAGAZINE, 2014, VOLUME II. FIVE DECADES AS A DEPARTMENT. THOUSANDS OF REMARKABLE GRADUATES. COUNTLESS INNOVATIONS. Department of Computer Science

click! Magazine is produced twice yearly for the friends of CS @ ILLINOIS to showcase the innovations of our faculty and students, the accomplishments of our alumni, and to inspire our partners and peers in the field of computer science.

Got your CS swag? Commemorative 50-10 Anniversary t-shirts are available! Shop now! my.cs.illinois.edu/buy

Department Head: Rob A. Rutenbar

Associate Department Heads: Gerald DeJong, Jeff Erickson, David Forsyth

Editorial Board: Tom Moone, Colin Robertson, Rob A. Rutenbar, Michelle Wellens

Writers: David Cunningham, Elizabeth Innes, Mike Koon, Rick Kubetz, Leanne Lucas, Tom Moone, Michelle Rice, Colin Robertson, Laura Schmitt, Michelle Wellens

CS Alumni Advisory Board: Alex R. Bratton (BS CE ’93), Ira R. Cohen (BS CS ’81), Vilas S. Dhar (BS CS ’04, BS LAS BioE ’04), William M. Dunn (BS CS ’86, MS ’87), Mary Jane Irwin (MS CS ’75, PhD ’77), Jennifer A. Mozen (MS CS ’97), Daniel L. Peterson (BS CS ’05), Peter L. Tannenwald (BS LAS Math & CS ’85), Jill C. Zmaczinsky (BS CS ’00)

Design: SURFACE 51

Contact us: [email protected], 217-333-3426

"Machines take me by surprise with great frequency." Alan Turing

CS @ ILLINOIS, Department of Computer Science, College of Engineering, College of Liberal Arts & Sciences, University of Illinois at Urbana-Champaign

Letter from the Head. ALUMNI NEWS. Alumni -

Benchmarking the Intel FPGA SDK for OpenCL Memory Interface

The Memory Controller Wall: Benchmarking the Intel FPGA SDK for OpenCL Memory Interface. Hamid Reza Zohouri*†1, Satoshi Matsuoka*‡. *Tokyo Institute of Technology, †Edgecortix Inc. Japan, ‡RIKEN Center for Computational Science (R-CCS). {zohour.h.aa@m, matsu@is}.titech.ac.jp

Abstract: Supported by their high power efficiency and recent advancements in High Level Synthesis (HLS), FPGAs are quickly finding their way into HPC and cloud systems. Large amounts of work have been done so far on loop and area optimizations for different applications on FPGAs using HLS. However, a comprehensive analysis of the behavior and efficiency of the memory controller of FPGAs is missing in literature, which becomes even more crucial when the limited memory bandwidth of modern FPGAs compared to their GPU counterparts is taken into account. In this work, we will analyze the memory interface generated by Intel FPGA SDK for OpenCL with different configurations for input/output arrays, vector size, interleaving, kernel programming model, on-chip channels, operating frequency, padding, and multiple types of overlapped blocking. Our results point to multiple shortcomings in the memory controller of Intel FPGAs, especially with respect to memory access alignment, that can hinder the programmer’s …

… efficiency on Intel FPGAs with different configurations for input/output arrays, vector size, interleaving, kernel programming model, on-chip channels, operating frequency, padding, and multiple types of blocking.

• We outline one performance bug in Intel’s compiler, and multiple deficiencies in the memory controller, leading to significant loss of memory performance for typical applications. In some of these cases, we provide workarounds to improve the memory performance.

II. METHODOLOGY

A. Memory Benchmark Suite

For our evaluation, we develop an open-source benchmark suite called FPGAMemBench, available at https://github.com/zohourih/FPGAMemBench. -

A Modern Primer on Processing in Memory

A Modern Primer on Processing in Memory. Onur Mutlu (a,b), Saugata Ghose (b,c), Juan Gómez-Luna (a), Rachata Ausavarungnirun (d). SAFARI Research Group. (a) ETH Zürich, (b) Carnegie Mellon University, (c) University of Illinois at Urbana-Champaign, (d) King Mongkut’s University of Technology North Bangkok

Abstract: Modern computing systems are overwhelmingly designed to move data to computation. This design choice goes directly against at least three key trends in computing that cause performance, scalability and energy bottlenecks: (1) data access is a key bottleneck as many important applications are increasingly data-intensive, and memory bandwidth and energy do not scale well, (2) energy consumption is a key limiter in almost all computing platforms, especially server and mobile systems, (3) data movement, especially off-chip to on-chip, is very expensive in terms of bandwidth, energy and latency, much more so than computation. These trends are especially severely-felt in the data-intensive server and energy-constrained mobile systems of today. At the same time, conventional memory technology is facing many technology scaling challenges in terms of reliability, energy, and performance. As a result, memory system architects are open to organizing memory in different ways and making it more intelligent, at the expense of higher cost. The emergence of 3D-stacked memory plus logic, the adoption of error correcting codes inside the latest DRAM chips, proliferation of different main memory standards and chips, specialized for different purposes (e.g., graphics, low-power, high bandwidth, low latency), and the necessity of designing new solutions to serious reliability and security issues, such as the RowHammer phenomenon, are an evidence of this trend. -

Advanced x86

Advanced x86: BIOS and System Management Mode Internals. Input/Output. Xeno Kovah && Corey Kallenberg, LegbaCore, LLC

All materials are licensed under a Creative Commons “Share Alike” license. http://creativecommons.org/licenses/by-sa/3.0/

Attribution condition: You must indicate that derivative work "Is derived from John Butterworth & Xeno Kovah’s ’Advanced Intel x86: BIOS and SMM’ class posted at http://opensecuritytraining.info/IntroBIOS.html”

Input/Output (I/O)

I/O, I/O, it’s off to work we go…

2 Types of I/O

1. Memory-Mapped I/O (MMIO)
2. Port I/O (PIO)
   – Also called Isolated I/O or port-mapped IO (PMIO)

• x86 systems employ both types of I/O
• Both methods map peripheral devices
• Address space of each is accessed using instructions
   – typically requires Ring 0 privileges
   – Real-Addressing mode has no implementation of rings, so no privilege escalation needed
• I/O ports can be mapped so that they appear in the I/O address space or the physical-memory address space (memory mapped I/O) or both
   – Example: PCI configuration space in a PCIe system – both memory-mapped and accessible via port I/O. We’ll learn about that in the next section
• The I/O Controller Hub contains the registers that are located in both the I/O Address Space and the Memory-Mapped address space

Memory-Mapped I/O

• Devices can also be mapped to the physical address space instead of (or in addition to) the I/O address space
• Even though it is a hardware device on the other end of that access request, you can operate on it like it's memory:
   – Any of the processor’s instructions -

Case Study on Integrated Architecture for In-Memory and In-Storage Computing

electronics Article Case Study on Integrated Architecture for In-Memory and In-Storage Computing Manho Kim 1, Sung-Ho Kim 1, Hyuk-Jae Lee 1 and Chae-Eun Rhee 2,* 1 Department of Electrical Engineering, Seoul National University, Seoul 08826, Korea; [email protected] (M.K.); [email protected] (S.-H.K.); [email protected] (H.-J.L.) 2 Department of Information and Communication Engineering, Inha University, Incheon 22212, Korea * Correspondence: [email protected]; Tel.: +82-32-860-7429 Abstract: Since the advent of computers, computing performance has been steadily increasing. Moreover, recent technologies are mostly based on massive data, and the development of artificial intelligence is accelerating it. Accordingly, various studies are being conducted to increase the performance and computing and data access, together reducing energy consumption. In-memory computing (IMC) and in-storage computing (ISC) are currently the most actively studied architectures to deal with the challenges of recent technologies. Since IMC performs operations in memory, there is a chance to overcome the memory bandwidth limit. ISC can reduce energy by using a low power processor inside storage without an expensive IO interface. To integrate the host CPU, IMC and ISC harmoniously, appropriate workload allocation that reflects the characteristics of the target application is required. In this paper, the energy and processing speed are evaluated according to the workload allocation and system conditions. The proof-of-concept prototyping system is implemented for the integrated architecture. The simulation results show that IMC improves the performance by 4.4 times and reduces total energy by 4.6 times over the baseline host CPU. -

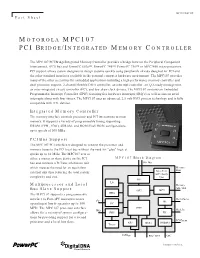

Motorola MPC107 PCI Bridge/Integrated Memory Controller

MPC107FACT/D Fact Sheet. MOTOROLA MPC107 PCI BRIDGE/INTEGRATED MEMORY CONTROLLER

The MPC107 PCI Bridge/Integrated Memory Controller provides a bridge between the Peripheral Component Interconnect (PCI) bus and PowerPC 603e™, PowerPC 740™, PowerPC 750™ or MPC7400 microprocessors. PCI support allows system designers to design systems quickly using peripherals already designed for PCI and the other standard interfaces available in the personal computer hardware environment. The MPC107 provides many of the other necessities for embedded applications including a high-performance memory controller and dual processor support, 2-channel flexible DMA controller, an interrupt controller, an I2O-ready message unit, an inter-integrated circuit controller (I2C), and low skew clock drivers. The MPC107 contains an Embedded Programmable Interrupt Controller (EPIC) featuring five hardware interrupts (IRQs) as well as sixteen serial interrupts along with four timers. The MPC107 uses an advanced, 2.5-volt HiP3 process technology and is fully compatible with TTL devices.

Integrated Memory Controller

The memory interface controls processor and PCI interactions to main memory. It supports a variety of programmable timing supporting DRAM (FPM, EDO), SDRAM, and ROM/Flash ROM configurations, up to speeds of 100 MHz.

PCI Bus Support

The MPC107 PCI interface is designed to connect the processor and memory buses to the PCI local bus without the need for "glue" logic at speeds up to 66 MHz. The MPC107 acts as either a master or slave device on the PCI bus and contains a PCI bus arbitration unit which reduces the need for an equivalent external unit thus reducing the total system complexity and cost.

[Figure: MPC107 Block Diagram; labels include 60x Bus, Memory Data, Data Path, ECC / Parity] -

The Impulse Memory Controller

IEEE TRANSACTIONS ON COMPUTERS, VOL. 50, NO. 11, NOVEMBER 2001. The Impulse Memory Controller. John B. Carter, Member, IEEE, Zhen Fang, Student Member, IEEE, Wilson C. Hsieh, Sally A. McKee, Member, IEEE, and Lixin Zhang, Student Member, IEEE

Abstract: Impulse is a memory system architecture that adds an optional level of address indirection at the memory controller. Applications can use this level of indirection to remap their data structures in memory. As a result, they can control how their data is accessed and cached, which can improve cache and bus utilization. The Impulse design does not require any modification to processor, cache, or bus designs since all the functionality resides at the memory controller. As a result, Impulse can be adopted in conventional systems without major system changes. We describe the design of the Impulse architecture and how an Impulse memory system can be used in a variety of ways to improve the performance of memory-bound applications. Impulse can be used to dynamically create superpages cheaply, to dynamically recolor physical pages, to perform strided fetches, and to perform gathers and scatters through indirection vectors. Our performance results demonstrate the effectiveness of these optimizations in a variety of scenarios. Using Impulse can speed up a range of applications from 20 percent to over a factor of 5. Alternatively, Impulse can be used by the OS for dynamic superpage creation; the best policy for creating superpages using Impulse outperforms previously known superpage creation policies.

Index Terms: Computer architecture, memory systems.

1 INTRODUCTION

Since 1987, microprocessor performance has improved at a rate of 55 percent per year; in contrast, DRAM latencies have improved by only 7 percent per year and DRAM …

… memory. By giving applications control (mediated by the OS) over the use of shadow addresses, Impulse supports application-specific optimizations that restructure data. -
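The abstract above mentions strided fetches and gathers through indirection vectors. As a rough software analogy only (Impulse performs the remapping at the memory controller, which this does not model), the sketch below shows what those two access patterns compute. The function names `gather` and `strided_fetch` are hypothetical and do not come from the paper.

```python
# Software analogy of two remapping patterns named in the abstract.
# Hypothetical helper names; this is not the Impulse hardware mechanism.

def gather(data, indirection):
    """Pack data[i] for each index i in the indirection vector into a dense list."""
    return [data[i] for i in indirection]


def strided_fetch(data, start, stride, count):
    """Pack `count` elements taken every `stride` positions, beginning at `start`."""
    return [data[start + k * stride] for k in range(count)]


if __name__ == "__main__":
    values = [10, 20, 30, 40, 50, 60]
    print(gather(values, [5, 0, 3]))        # sparse picks packed densely
    print(strided_fetch(values, 1, 2, 3))   # every second element from index 1
```

The point of the analogy is that the consumer sees a dense sequence, so each cache line it touches would hold only useful elements.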

SIGARCH FY'06 Annual Report July 2005- June 2006 Submitted By

SIGARCH FY’06 Annual Report July 2005- June 2006 Submitted by: Norm Jouppi, SIGARCH Chair Overview The primary mission of SIGARCH continues to be the forum where researchers and practitioners of computer architecture can exchange ideas. SIGARCH sponsors or cosponsors the premier conferences in the field as well as a number of workshops. It publishes a quarterly newsletter and the proceedings of several conferences. It is financially strong with a fund balance of over one million dollars. The SIGARCH bylaws are available online at http://www.acm.org/sigs/bylaws/arch_bylaws.html Officers and Directors The most recent SIGARCH election was held in the spring of 2003. Norm Jouppi of HP currently serves as SIGARCH Chair, with Margaret Martonosi of Princeton as Vice Chair and Matt Farrens of UC Davis as Secretary/Treasurer. SIGARCH has a four member Board of Directors, which currently consist of Alan Berenbaum, Joel Emer, Bill Dally, and Mark Hill. Alan Berenbaum also serves as Past Chair. In addition to these elected positions, Doug DeGroot serves as the Editor of the SIGARCH newsletter Computer Architecture News, and Doug Burger serves as Information Director, providing SIGARCH information online. The current slate of officers and directors has unanimously agreed to accept the optional 2-year term extension, as approved by the ACM SIG Governing Board Executive Committee. This means the term of the current officers and directors will expire in June 2007. Awards The Eckert-Mauchly Award, cosponsored by the IEEE Computer Society, is the most prestigious award in computer architecture. SIGARCH endows its half of the award, which is presented annually at the Awards Banquet of ISCA. -

Optimizing Thread Throughput for Multithreaded Workloads on Memory Constrained CMPs

Optimizing Thread Throughput for Multithreaded Workloads on Memory Constrained CMPs. Major Bhadauria and Sally A. McKee, Computer Systems Lab, Cornell University, Ithaca, NY, USA. [email protected], [email protected]

ABSTRACT

Multi-core designs have become the industry imperative, replacing our reliance on increasingly complicated micro-architectural designs and VLSI improvements to deliver increased performance at lower power budgets. Performance of these multi-core chips will be limited by the DRAM memory system: we demonstrate this by modeling a cycle-accurate DDR2 memory controller with SPLASH-2 workloads. Surprisingly, benchmarks that appear to scale well with the number of processors fail to do so when memory is accurately modeled. We frequently find that the most efficient configuration is not the one with the most threads. By choosing the most efficient number of threads for each benchmark, average energy delay efficiency improves by a factor of 3.39, and performance improves by 19.7%, on average.

1. INTRODUCTION

Power and thermal constraints have begun to limit the maximum operating frequency of high performance processors. The cubic increase in power from increases in frequency and higher voltages required to attain those frequencies has reached a plateau. By leveraging increasing die space for more processing cores (creating chip multiprocessors, or CMPs) and larger caches, designers hope that multi-threaded programs can exploit shrinking transistor sizes to deliver equal or higher throughput as single-threaded, single-core predecessors. The current software paradigm is based on the assumption that multi-threaded programs with little contention for shared data scale (nearly) linearly with the number of processors, yielding power-efficient data through- … -

ECE 571 – Advanced Microprocessor-Based Design Lecture 17

ECE 571 – Advanced Microprocessor-Based Design, Lecture 17. Vince Weaver, http://web.eece.maine.edu/~vweaver, [email protected], 3 April 2018

Announcements

• HW8 is readings

More DRAM

ECC Memory

• There's debate about how many errors can happen, anywhere from 10^-10 error/bit*h (roughly one bit error per hour per gigabyte of memory) to 10^-17 error/bit*h (roughly one bit error per millennium per gigabyte of memory)
• Google did a study and they found more toward the high end
• Would you notice if you had a bit flipped?
• Scrubbing – only notice a flip once you read out a value

Registered Memory

• Registered vs Unregistered
• Registered has a buffer on board. More expensive but can have more DIMMs on a channel
• Registered may be slower (if it buffers for a cycle)
• RDIMM/UDIMM

Bandwidth/Latency Issues

• Truly random access? No, burst speed fast, random speed not.
• Is that a problem? Mostly filling cache lines?

Memory Controller

• Can we have full random access to memory? Why not just pass on CPU mem requests unchanged?
• What might have higher priority?
• Why might re-ordering the accesses help performance (back and forth between two pages)

Reducing Refresh

• DRAM Refresh Mechanisms, Penalties, and Trade-Offs by Bhati et al.
• Refresh hurts performance:
   ◦ Memory controller stalls access to memory being refreshed
   ◦ Refresh takes energy (read/write)

On 32Gb device, up to 20% of energy consumption and 30% of performance

Async vs Sync Refresh

• Traditional refresh rates
   ◦ Async Standard (15.6us)
   ◦ Async Extended (125us)
   ◦ SDRAM -
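The ECC Memory slide above quotes per-bit hourly error rates alongside rough "per gigabyte" intervals. The arithmetic behind that conversion can be sketched as below. One assumption that is not in the slides: a gigabyte is taken as 2**30 bytes, and a year as 365.25 days. The function name is hypothetical.

```python
# Convert a per-bit hourly error rate into an expected interval between
# errors for one gigabyte of memory.
# Assumptions (not from the slides): 1 gigabyte = 2**30 bytes, 1 year = 365.25 days.

BITS_PER_GIGABYTE = 8 * 2**30
HOURS_PER_YEAR = 24 * 365.25


def hours_between_errors(rate_per_bit_hour, bits=BITS_PER_GIGABYTE):
    """Expected hours between single-bit errors across `bits` bits of memory."""
    errors_per_hour = rate_per_bit_hour * bits
    return 1.0 / errors_per_hour


high_rate_hours = hours_between_errors(1e-10)
low_rate_years = hours_between_errors(1e-17) / HOURS_PER_YEAR

if __name__ == "__main__":
    print(f"1e-10 error/bit*h: one error about every {high_rate_hours:.2f} hours")
    print(f"1e-17 error/bit*h: one error about every {low_rate_years:.0f} years")
```

Under these assumptions the two rates work out to a little over one hour and a little over a thousand years respectively, which matches the "per hour" and "per millennium" figures on the slide.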