Fact Sheet: Intel Unveils Biggest Architectural Shifts in a Generation

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Intel® Architecture Instruction Set Extensions and Future Features Programming Reference

Intel® Architecture Instruction Set Extensions and Future Features Programming Reference 319433-037 MAY 2019 Intel technologies features and benefits depend on system configuration and may require enabled hardware, software, or service activation. Learn more at intel.com, or from the OEM or retailer. No computer system can be absolutely secure. Intel does not assume any liability for lost or stolen data or systems or any damages resulting from such losses. You may not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. This document contains information on products, services and/or processes in development. All information provided here is subject to change without notice. Intel does not guarantee the availability of these interfaces in any future product. Contact your Intel representative to obtain the latest Intel product specifications and roadmaps. Copies of documents which have an order number and are referenced in this document, or other Intel literature, may be obtained by calling 1-800-548-4725, or by visiting http://www.intel.com/design/literature.htm. Intel, the Intel logo, Intel Deep Learning Boost, Intel DL Boost, Intel Atom, Intel Core, Intel SpeedStep, MMX, Pentium, VTune, and Xeon are trademarks of Intel Corporation in the U.S.

GPU Developments 2018

GPU Developments 2018 © Copyright Jon Peddie Research 2019. All rights reserved. Reproduction in whole or in part is prohibited without written permission from Jon Peddie Research. This report is the property of Jon Peddie Research (JPR) and made available to a restricted number of clients only upon these terms and conditions. Agreement not to copy or disclose. This report and all future reports or other materials provided by JPR pursuant to this subscription (collectively, “Reports”) are protected by: (i) federal copyright, pursuant to the Copyright Act of 1976; and (ii) the nondisclosure provisions set forth immediately following. License, exclusive use, and agreement not to disclose. Reports are the trade secret property exclusively of JPR and are made available to a restricted number of clients, for their exclusive use and only upon the following terms and conditions. JPR grants site-wide license to read and utilize the information in the Reports, exclusively to the initial subscriber to the Reports, its subsidiaries, divisions, and employees (collectively, “Subscriber”). The Reports shall, at all times, be treated by Subscriber as proprietary and confidential documents, for internal use only. Subscriber agrees that it will not reproduce for or share any of the material in the Reports (“Material”) with any entity or individual other than Subscriber (“Shared Third Party”) (collectively, “Share” or “Sharing”), without the advance written permission of JPR. Subscriber shall be liable for any breach of this agreement and shall be subject to cancellation of its subscription to Reports. Without limiting this liability, Subscriber shall be liable for any damages suffered by JPR as a result of any Sharing of any Material, without advance written permission of JPR.

CTL RFP Proposal

State of Maine Department of Education in coordination with the National Association of State Procurement Officials PROPOSAL COVER PAGE RFP # 201210412 MULTI-STATE LEARNING TECHNOLOGY INITIATIVE Bidder’s Organization Name: CTL Chief Executive - Name/Title: Erik Stromquist / COO Tel: 800.642.3087 x 212 Fax: 503.641.5586 E-mail: [email protected] Headquarters Street Address: 3460 NW Industrial St. Headquarters City/State/Zip: Portland, OR 97210 (provide information requested below if different from above) Lead Point of Contact for Proposal - Name/Title: Michael Mahanay / GM, Sales & Marketing Tel: 800.642.3087 x 205 Fax: 503.641.5586 E-mail: [email protected] Street Address: 3460 NW Industrial St. City/State/Zip: Portland, OR 97219 Proposed Cost: $294/yr. The proposed cost listed above is for reference purposes only, not evaluation purposes. In the event that the cost noted above does not match the Bidder’s detailed cost proposal documents, then the information on the cost proposal documents will take precedence. This proposal and the pricing structure contained herein will remain firm for a period of 180 days from the date and time of the bid opening. No personnel on the multi-state Sourcing Team or any other involved state agency participated, either directly or indirectly, in any activities relating to the preparation of the Bidder’s proposal. No attempt has been made or will be made by the Bidder to induce any other person or firm to submit or not to submit a proposal. The undersigned is authorized to enter into contractual obligations on behalf of the above-named organization.

Intel CEO Remarks Pat Gelsinger Q2'21 Earnings Webcast July 22

Intel CEO Remarks Pat Gelsinger Q2’21 Earnings Webcast July 22, 2021 Good afternoon, everyone. Thanks for joining our second-quarter earnings call. It’s a thrilling time for both the semiconductor industry and for Intel. We're seeing unprecedented demand as the digitization of everything is accelerated by the superpowers of AI, pervasive connectivity, cloud-to-edge infrastructure and increasingly ubiquitous compute. Our depth and breadth of software, silicon and platforms, and packaging and process, combined with our at-scale manufacturing, uniquely positions Intel to capitalize on this vast growth opportunity. Our Q2 results, which exceeded our top and bottom line expectations, reflect the strength of the industry, the demand for our products, as well as the superb execution of our factory network. As I’ve said before, we are only in the early innings of what is likely to be a decade of sustained growth across the industry. Our momentum is building as once again we beat expectations and raise our full-year revenue and EPS guidance. Since laying out our IDM 2.0 strategy in March, we feel increasingly confident that we're moving the company forward toward our goal of delivering leadership products in every category in which we compete. While we have work to do, we are making strides to renew our execution machine: 7nm is progressing very well. We’ve launched new innovative products, established Intel Foundry Services, and made operational and organizational changes to lay the foundation needed to win in the next phase of our company’s great history. Here at Intel, we’re proud of our past, pragmatic about the work ahead, but, most importantly, confident in our future.

Mass-Producing Your Certified Cluster Solutions

Mass-Producing Your Certified Cluster Solutions White Paper Intel® Cluster Ready High-Performance Computing The Intel® Cluster Ready program is designed to help you make the most of your engineering resources. The program enables you to sell several different types of clusters from each solution you design, by varying the hardware while maintaining the same software stack. You can gain a broader range of cluster products to sell without engineering each one “from scratch.” As long as the software stack remains the same and works on each one of the hardware configurations, you can be confident that your clusters will interoperate with registered Intel Cluster Ready applications. Your customers can purchase your clusters with that same confidence, knowing they’ll be able to get their applications up and running quickly on your systems. Intel® Cluster Ready: Mass-Producing Your Certified Cluster Solutions Table of Contents: Overview of Production-Related Activities (p. 3); Creating and certifying recipes (p. 3); Maintaining recipes (p. 3); Mass-producing recipes

NVM Express and the PCI Express* SSD Revolution SSDS003

NVM Express and the PCI Express* SSD Revolution Danny Cobb, CTO Flash Memory Business Unit, EMC Amber Huffman, Sr. Principal Engineer, Intel SSDS003 Agenda • NVM Express (NVMe) Overview • New NVMe Features in Enterprise & Client • Driver Ecosystem for NVMe • NVMe Interoperability and Plugfest Plans • EMC’s Perspective: NVMe Use Cases and Proof Points The PDF for this Session presentation is available from our Technical Session Catalog at the end of the day at: intel.com/go/idfsessions URL is on top of Session Agenda Pages in Pocket Guide NVM Express (NVMe) Overview • NVM Express is a scalable host controller interface designed for Enterprise and client systems that use PCI Express* SSDs • NVMe was developed by industry consortium of 80+ members and is directed by a 13-company Promoter Group • NVMe 1.0 was published March 1, 2011 • Product introductions later this year, first in Enterprise Technical Basics • The focus of the effort is efficiency, scalability and performance – All parameters for 4KB command in single 64B DMA fetch – Supports deep queues (64K commands per Q, up to 64K queues) – Supports MSI-X and interrupt steering – Streamlined command set optimized for NVM (6 I/O commands) – Enterprise: Support for end-to-end data protection (i.e., DIF/DIX) – NVM technology agnostic NVMe = NVM Express NVMe Command Execution

Motherboards, Processors, and Memory

220-1001 COPYRIGHTED MATERIAL c01.indd 03/23/2019 Page 1 Chapter Motherboards, Processors, and Memory THE FOLLOWING COMPTIA A+ 220-1001 OBJECTIVES ARE COVERED IN THIS CHAPTER: ✓ 3.3 Given a scenario, install RAM types. ■ RAM types ■ SODIMM ■ DDR2 ■ DDR3 ■ DDR4 ■ Single channel ■ Dual channel ■ Triple channel ■ Error correcting ■ Parity vs. non-parity ✓ 3.5 Given a scenario, install and configure motherboards, CPUs, and add-on cards. ■ Motherboard form factor ■ ATX ■ mATX ■ ITX ■ mITX ■ Motherboard connectors types ■ PCI ■ PCIe ■ Riser card ■ Socket types ■ SATA ■ IDE ■ Front panel connector ■ Internal USB connector ■ BIOS/UEFI settings ■ Boot options ■ Firmware upgrades ■ Security settings ■ Interface configurations ■ Security ■ Passwords ■ Drive encryption ■ TPM ■ LoJack ■ Secure boot ■ CMOS battery ■ CPU features ■ Single-core ■ Multicore ■ Virtual technology ■ Hyperthreading ■ Speeds ■ Overclocking ■ Integrated GPU ■ Compatibility ■ AMD ■ Intel ■ Cooling mechanism ■ Fans ■ Heat sink ■ Liquid ■ Thermal paste A personal computer (PC) is a computing device made up of many distinct electronic components that all function together in order to accomplish some useful task, such as adding up the numbers in a spreadsheet or helping you to write a letter. Note that this definition describes a computer as having many distinct parts that work together. Most PCs today are modular. That is, they have components that can be removed and replaced with another component of the same function but with different specifications in order to improve performance. Each component has a specific function. Much of the computing industry today is focused on smaller devices, such as laptops, tablets, and smartphones.

Intel 2019 Year Book

YEARBOOK 2019 POWERING THE FUTURE Our 2019 yearbook invites you to look back and reflect on a memorable year for Intel. 2019 kicked off with the announcement of our new chief executive, Bob Swan. It was followed by a stream of notable news: product announcements, technology breakthroughs, new customers and partnerships, and important moves to evolve Intel’s culture as the company entered its sixth decade. It’s a privilege to tell the Intel story in all its complexity and humanity. Looking through these pages, the breadth and depth of what we’ve achieved in 12 months is substantial, as is the strong foundation we’ve built for even greater impact in the future. I hope you enjoy this colorful look at what’s possible when more than 100,000 individuals from every corner of the globe unite to change the world – through technologies that make a positive difference to our customers, to society, and to people’s lives. – Claire Dixon, Chief Communications Officer TABLE OF CONTENTS p4 New CEO. Evolving culture. Expanded ambitions. p6 More data. More storage. More processing. p10 Innovation for the PC user experience p12 Self-driving cars hit the road p16 AI unlocks the power of data p18 Helping customers push boundaries p22 More supply to meet strong demand p26 Next-gen hardware and software to unlock it p28 Tech’s future: Inventing and investing p32 Reinforcing the nature of Moore’s Law p34 Building for the smarter future NEW CEO. EVOLVING CULTURE. EXPANDED AMBITIONS. 2019 was an important year in Intel’s transformation, with a new chief executive officer, ambitious business priorities, an aspirational culture evolution, and a farewell to Focal.

Intel® Architecture Instruction Set Extensions and Future Features Programming Reference

Intel® Architecture Instruction Set Extensions and Future Features Programming Reference 319433-041 OCTOBER 2020 Intel technologies may require enabled hardware, software or service activation. No product or component can be absolutely secure. Your costs and results may vary. You may not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. All product plans and roadmaps are subject to change without notice. The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Intel disclaims all express and implied warranties, including without limitation, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement, as well as any warranty arising from course of performance, course of dealing, or usage in trade. Code names are used by Intel to identify products, technologies, or services that are in development and not publicly available. These are not “commercial” names and not intended to function as trademarks. Copies of documents which have an order number and are referenced in this document, or other Intel literature, may be obtained by calling 1-800-548-4725, or by visiting http://www.intel.com/design/literature.htm. Copyright © 2020, Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries.

System Design for Telecommunication Gateways

P1: OTE/OTE/SPH P2: OTE FM BLBK307-Bachmutsky August 30, 2010 15:13 Printer Name: Yet to Come SYSTEM DESIGN FOR TELECOMMUNICATION GATEWAYS Alexander Bachmutsky Nokia Siemens Networks, USA A John Wiley and Sons, Ltd., Publication This edition first published 2011 © 2011 John Wiley & Sons, Ltd Registered office John Wiley & Sons Ltd, The Atrium, Southern Gate, Chichester, West Sussex, PO19 8SQ, United Kingdom For details of our global editorial offices, for customer services and for information about how to apply for permission to reuse the copyright material in this book please see our website at www.wiley.com. The right of the author to be identified as the author of this work has been asserted in accordance with the Copyright, Designs and Patents Act 1988. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, except as permitted by the UK Copyright, Designs and Patents Act 1988, without the prior permission of the publisher.

EARNINGS Presentation Disclosures

Q2 2020 EARNINGS Presentation Disclosures This presentation contains non-GAAP financial measures. Earnings per share (EPS), gross margin, and operating margin are presented on a non-GAAP basis unless otherwise indicated, and this presentation also includes a non-GAAP free cash flow (FCF) measure. The Appendix provides a reconciliation of these measures to the most directly comparable GAAP financial measure. The non-GAAP financial measures disclosed by Intel should not be considered a substitute for, or superior to, the financial measures prepared in accordance with GAAP. Please refer to “Explanation of Non-GAAP Measures” in Intel's quarterly earnings release for a detailed explanation of the adjustments made to the comparable GAAP measures, the ways management uses the non-GAAP measures, and the reasons why management believes the non-GAAP measures provide investors with useful supplemental information. Statements in this presentation that refer to business outlook, future plans, and expectations are forward-looking statements that involve a number of risks and uncertainties. Words such as "anticipate," "expect," "intend," "goals," "plans," "believe," "seek," "estimate," "continue," "committed," "on-track," "positioned," "launching," "may," "will," "would," "should," "could," and variations of such words and similar expressions are intended to identify such forward-looking statements. Statements that refer to or are based on estimates, forecasts, projections, uncertain events or assumptions, including statements relating to total addressable market (TAM) or market opportunity, future products and technology and the expected availability and benefits of such products and technology, including our 10nm and 7nm process technologies, products, and product designs, and anticipated trends in our businesses or the markets relevant to them, also identify forward-looking statements.

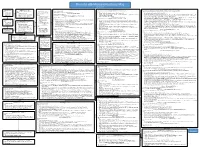

The Intel X86 Microarchitectures Map Version 3.4

The Intel x86 Microarchitectures Map Version 3.4

8086 (1978, 3 µm). Series: 16-bit data bus: 8086 (iAPX 86); 8-bit data bus: 8088 (iAPX 88).

80386 (1985, 1.5 to 1 µm). Alternative Names: iAPX 386, 386, i386. Series: Desktop/Server: i386DX; Desktop lower-performance: i386SX; Mobile: i386SL, 80376, i386EX,

P5 (1993, 0.80 to 0.35 μm). Alternative Names: Pentium, 80586, 586, i586. Series: Desktop/Server: P5, P54C

P6 (1995, 0.50 to 0.35 μm). Alternative Names: i686. Series: Pentium Pro (used in desktops and servers). Variant: Klamath (1997, 0.35 μm). Alternative Names: Pentium II, PII. Series: Pentium II 233/266/300 steppings C0 and C1 (Klamath, used in desktops). Deschutes (1998, 0.25 to 0.18 μm)

Willamette (2000, 180 nm). Alternative Names: NetBurst, Pentium 4, Pentium IV, P4, Pentium 4 180 nm. Series: Desktop: Pentium 4 1.x (except those with a suffix), Pentium 4 2.0; Desktop lower-performance: Celeron 1.x (where x is a single digit between 5-9, except Celeron 1.6 SL7EZ, Willamette-128), Celeron 2.0 SL68F; Server: Foster (Xeon), Foster-MP (Xeon)

Skylake (2015, 14 nm to 14 nm++). Alternative Names: SKL (Desktop and Mobile), SKX (Server) (Note: all U and Y processors are MCPs). Series: Desktop: Desktop 6th Generation Core i5 (i5-6xxx, S-H, 14 nm); Desktop higher-performance: Desktop 6th Generation Core i7 (i7-6xxx, S-H, 14 nm), Desktop 7th Generation Core i7 X (i7-7xxxX, X, 14 nm+), Desktop 7th Generation Core i9 X (i9-7xxxXE, i9-7xxxX, X, 14 nm+), Desktop 9th Generation Core i7 X (i7-9xxxX, X, 14 nm++), Desktop 9th