Motherboards, Processors, and Memory

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Titan X Amd 1.2 V4 Ig 20210319

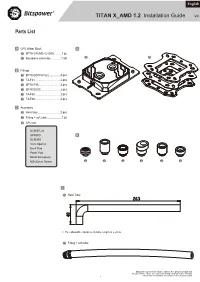

English TITAN X_AMD 1.2 Installation Guide V4. Parts List. A CPU Water Block: A-1 BPTA-CPUMS-V2-SKA, 1 pc; A-2 Backplane assembly, 1 set. B Fittings: B-1 BPTA-DOTFH1622, 4 pcs; B-2 TA-F61, 2 pcs; B-3 BPTA-F95, 2 pcs; B-4 BP-RIGOS5, 2 pcs; B-5 TA-F60, 2 pcs; B-6 TA-F40, 2 pcs. C Accessory: C-1 Hard tube, 2 pcs; C-2 Fitting + soft tube, 1 pc; C-3 CPU set (SCM3FL20, spring, SCM3F6, 1mm spacer, back pad, paste pad, metal backplane, M3x32mm screw). ※ The allowable variance in tube length is ± 2mm. Bitspower reserves the right to change the product design and interpretations. These are subject to change without notice. Product colors and accessories are based on the actual product. I. AMD Motherboard system. Supported sockets: AMD Socket 939 / 754 / 940, AM4, AM3 / AM3+, AM2 / AM2+, FM1 / FM2+. The CPU water block has a DRGB cable, which can be connected to the DRGB extension cable of the radiator fans. Bitspower Fan and DRGB RF Remote Controller Hub (BPTA-RFCHUB, not included) are now available at microcenter.com. [Diagram labels: IN, OUT, DRGB LED, Motherboard, DRGB PIN on Motherboard or other equipment.] Installation: Do not over-tighten the thumb screws (SCM3FL20).

Direct Memory Access Components Verification System

Proceedings of MIPT. 2012. Vol. 4, No. 1. UDC 004.052.42. P. V. Frolov, V. N. Kutsevol, A. N. Meshkov, N. Yu. Polyakov, M. P. Ryzhov. AO «MCST», PAO «INEUM». Direct Memory Access components verification system. A method of direct memory access subsystem verification used for Elbrus series microprocessors has been described. A peripheral controller imitator has been developed in order to reduce verification overhead. The model of the imitator has been included in the functional machine simulator. A pseudorandom test generator for verification of the direct memory access subsystem has been based on the simulator. Keywords: system verification, functional model, direct memory access, pseudorandom test generation. 1. Introduction. Modern computer systems require very intensive data exchange between peripheral devices and random-access memory. In most cases this exchange is performed by the direct memory access (DMA) subsystem. The increasing demands on the performance of the subsystem lead to an increase in its complexity, therefore requiring the development of effective approaches to DMA subsystem verification [1,2]. This article is based on the results of a comprehensive project that combined the implementation of three co-designed verification techniques based on the consecutive investigation of the DMA subsystem employing one of three models: 1) a functional model written in C++ that corresponds to the behaviour of the subsystem in the environment determined by a real computer system configuration, 2) an RTL model in Verilog and 3) an FPGA-based prototype. This article describes the first method, which enables verifying the correctness of the design at an early stage of verification and eliminating a large number of bugs using simple tests.

Intel® Architecture Instruction Set Extensions and Future Features Programming Reference

Intel® Architecture Instruction Set Extensions and Future Features Programming Reference 319433-037 MAY 2019 Intel technologies' features and benefits depend on system configuration and may require enabled hardware, software, or service activation. Learn more at intel.com, or from the OEM or retailer. No computer system can be absolutely secure. Intel does not assume any liability for lost or stolen data or systems or any damages resulting from such losses. You may not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. This document contains information on products, services and/or processes in development. All information provided here is subject to change without notice. Intel does not guarantee the availability of these interfaces in any future product. Contact your Intel representative to obtain the latest Intel product specifications and roadmaps. Copies of documents which have an order number and are referenced in this document, or other Intel literature, may be obtained by calling 1-800-548-4725, or by visiting http://www.intel.com/design/literature.htm. Intel, the Intel logo, Intel Deep Learning Boost, Intel DL Boost, Intel Atom, Intel Core, Intel SpeedStep, MMX, Pentium, VTune, and Xeon are trademarks of Intel Corporation in the U.S.

VIA RAID Configurations

VIA RAID configurations. The motherboard includes a high performance IDE RAID controller integrated in the VIA VT8237R southbridge chipset. It supports RAID 0, RAID 1 and JBOD with two independent Serial ATA channels. RAID 0 (called Data striping) optimizes two identical hard disk drives to read and write data in parallel, interleaved stacks. Two hard disks perform the same work as a single drive but at a sustained data transfer rate up to double that of a single disk alone, thus improving data access and storage. Use of two new identical hard disk drives is required for this setup. RAID 1 (called Data mirroring) copies and maintains an identical image of data from one drive to a second drive. If one drive fails, the disk array management software directs all applications to the surviving drive, as it contains a complete copy of the data on the other drive. This RAID configuration provides data protection and increases fault tolerance for the entire system. Use two new drives, or an existing drive and a new drive, for this setup. The new drive must be of the same size as or larger than the existing drive. JBOD (Spanning) stands for Just a Bunch of Disks and refers to hard disk drives that are not yet configured as a RAID set. This configuration concatenates multiple disks so that they appear as a single disk to the operating system; the data is not stored redundantly. Spanning does not deliver any advantage over using separate disks independently and does not provide fault tolerance or other RAID performance benefits. If you use either the Windows® XP or Windows® 2000 operating system (OS), first copy the RAID driver from the support CD to a floppy disk before creating RAID configurations.
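As a rough illustration of how the three modes differ, the sketch below maps a logical block number to the disk (and offset on that disk) where it would land. It is a simplified model, not the VT8237R's actual layout: the function names are hypothetical, the stripe unit is assumed to be a single block, and spanning is modeled as plain concatenation.

```python
from typing import List, Tuple


def raid0_locate(block: int, disks: int = 2) -> Tuple[int, int]:
    """Striping: consecutive logical blocks alternate across the disks.

    Assumes a stripe unit of one block (real controllers use larger stripes).
    Returns (disk index, block offset on that disk).
    """
    return block % disks, block // disks


def raid1_locate(block: int, disks: int = 2) -> List[Tuple[int, int]]:
    """Mirroring: every disk holds a copy of the block at the same offset."""
    return [(disk, block) for disk in range(disks)]


def jbod_locate(block: int, disk_sizes: List[int]) -> Tuple[int, int]:
    """Spanning: fill the first disk completely, then continue on the next."""
    for disk, size in enumerate(disk_sizes):
        if block < size:
            return disk, block
        block -= size
    raise ValueError("block lies beyond the end of the spanned volume")


if __name__ == "__main__":
    print(raid0_locate(5))              # striped across two disks
    print(raid1_locate(3))              # one copy per disk
    print(jbod_locate(120, [100, 50]))  # second disk of a 100+50 block span
```

In this model only `raid1_locate` returns more than one location, which is why mirroring is the only one of the three modes that survives the loss of a drive.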

ATX-945G Industrial Motherboard in ATX Form Factor with Intel® 945G Chipset

ATX-945G Industrial Motherboard in ATX form factor with Intel® 945G chipset. Supports Intel® Core™2 Duo, Pentium® D CPU on LGA775 socket; 1066/800/533MHz Front Side Bus; Intel® Graphics Media Accelerator 950; Dual Channel DDR2 DIMM, maximum 4GB; Integrated PCI Express LAN, USB 2.0 and SATA 3Gb/s. ATX-945G Product Overview: The ATX-945G is an ATX form factor single-processor industrial motherboard that is based on the Intel® 945G chipset with ICH7 I/O Controller Hub. It supports the Intel® Core™2 Duo, Pentium® D, Pentium® 4 with Hyper-Threading Technology, or Celeron® D Processor on the LGA775 socket, 1066/800/533MHz Front Side Bus, Dual Channel DDR2 DIMM 667/533/400MHz, up to 4 DIMMs and a maximum of 4GB. The Intel® Graphic Media Accelerator 950 technology with 2048x1536x8bit at 75Hz resolution, PCI Express LAN, USB 2.0 (x8) and SATA round out the package for a powerful industrial PC. I/O features of the ATX-945G include a 32-bit/33MHz PCI Bus, 16-bit/8MHz ISA bus, 1x PCI Express x16 slot, 2x PCI Express x1 slots, 3x PCI slots, 1x ISA slot (shared w/ PCI), onboard Gigabit Ethernet, LPC, EIDE Ultra ATA/100, 4 channels SATA 3Gb/s, Mini PCI card slot, UART compatible serial ports, parallel port, floppy drive port, HD Audio, and PS/2 Keyboard and Mouse ports. [Block Diagram: CPU (Core™2 Duo / Pentium® D, LGA775 package, 533/800/1066 MHz FSB, Dual-Core, Hyper-Threading); Northbridge Intel® 945G GMCH; DDR Channel A and DDR Channel B (DIMM x 2 each, DDRII 400/533/667 MHz); CRT DB-15; Discrete PCIe x16 Graphics; DMI Interface 2 GB/s; IDE Device]

Get More out of the Intel Foxhollow Platform

Get More Out Of the Intel Foxhollow Platform. Akber Kazmi, Marketing Director, PLX Technology. Introduction: As reported by the mainstream technology media, Intel is leveraging the technology from its latest server-class Nehalem CPU to offer the Lynnfield CPU, targeted at high-end desktops and entry-level servers. This platform is codenamed “Foxhollow”. Intel is expected to launch this new platform sometime in the second half of 2009. This entry-level uni-processor (UP) server platform will feature two to four cores, as Intel wants to pack a lot of processing power into all its platforms. The Foxhollow platform is quite different from previous desktops and UP servers in that it reduces the solution from three chips to two by eliminating the northbridge and replacing the southbridge with a new device called the Platform Controller Hub (or PCH), code named Ibexpeak (5 Series Chipset). As Intel has moved the memory controller and the graphics function into the CPU, there's no need for an MCH (Memory Controller Hub), so Intel has simplified its chipset design to keep costs down in the entry-level and mainstream segments. The PCH chip interfaces with the CPU through Intel’s DMI interconnect. The PCH will support eight PCIe lanes, up to four PCI slots, the GE MAC, display interface controllers, I/O controllers, RAID controllers, SATA controllers, USB 2.0 controllers, etc. Foxhollow Motherboards: Foxhollow motherboards are being offered in two configurations, providing either two or three x8 PCIe ports for high performance I/O. However, motherboard vendors can use an alternate configuration that provides one more PCIe x8 port with no significant burden and instead offers 33% more value than the three port solution and 50% more value than the two port solution.

Preparation for CompTIA A+ (Vorbereitung auf CompTIA A+)

Markus Kammermann, Ramon Kratzer. CompTIA A+: Systemtechnik und Support von A bis Z (Systems Engineering and Support from A to Z). Preparation for exams 220-901 and 220-902. Bibliographic information of the German National Library: The German National Library lists this publication in the Deutsche Nationalbibliografie; detailed bibliographic data are available on the Internet at <http://dnb.d-nb.de>. ISBN 978-3-95845-466-8. 4th edition 2016. www.mitp.de E-mail: [email protected] Phone: +49 7953 / 7189 – 079 Fax: +49 7953 / 7189 – 082 © 2016 mitp Verlags GmbH & Co. KG. This work, including all of its parts, is protected by copyright. Any use outside the narrow limits of copyright law without the publisher's consent is prohibited and punishable by law. This applies in particular to reproduction, translation, microfilming, and storage and processing in electronic systems. The reproduction of common names, trade names, product designations, etc. in this work, even without special identification, does not justify the assumption that such names are to be regarded as free within the meaning of trademark and brand protection legislation and may therefore be used by anyone. This teaching material was reviewed by ProCert Labs for the CompTIA Authorized Curriculum and is CAQC-certified. Further information on this qualification is available at www.comptia.org/certification/caqc/ and at www.procertlabs.com. The images in this book, unless created by us

Intel/AMD CPU Release Dates, 2018

Release dates of desktop microprocessors (2018) http://www.cpu-world.com/Releases/Desktop_CPU_releases_(2018).html

February, AMD: First Zen-based desktop APUs (14nm, 4 cores, Radeon Vega graphics). April, AMD: 2nd Generation Zen CPUs ("Pinnacle Ridge", 12nm, up to 8 cores). April, Intel: 8th Generation lineup launch (Coffee Lake-S, 14nm, up to 6 cores).

| Month | Vendor | Model | Clock | L3 cache | Cores | Unlocked | Socket |
|---|---|---|---|---|---|---|---|
| February | AMD | Ryzen 3 2200G | 3.5GHz | 4MB | 4 | Yes | AM4 |
| February | AMD | Ryzen 5 2400G | 3.6GHz | 4MB | 4 | Yes | AM4 |
| April | AMD | Ryzen 5 2600X | 3.6GHz | 16MB | 6 | Yes | AM4 |
| April | AMD | Ryzen 5 2600 | 3.4GHz | 16MB | 6 | Yes | AM4 |
| April | AMD | Ryzen 7 2700X | 3.7GHz | 16MB | 8 | Yes | AM4 |
| April | AMD | Ryzen 7 2700 | 3.2GHz | 16MB | 8 | Yes | AM4 |
| April | AMD | Ryzen 3 2200GE | 3.2GHz | 4MB | 4 | Yes | AM4 |
| April | AMD | Ryzen 5 2400GE | 3.2GHz | 4MB | 4 | Yes | AM4 |
| April | Intel | Celeron G4900T | 2.9GHz | 2MB | 2 | | 1151 |
| April | Intel | Celeron G4900 | 3.1GHz | 2MB | 2 | | 1151 |
| April | Intel | Celeron G4920 | 3.2GHz | 2MB | 2 | | 1151 |
| April | Intel | Core i3-8100T | 3.1GHz | 6MB | 4 | | 1151 |
| April | Intel | Core i3-8300T | 3.2GHz | 8MB | 4 | | 1151 |
| April | Intel | Core i3-8300 | 3.7GHz | 8MB | 4 | | 1151 |
| April | Intel | Core i5-8400T | 1.7GHz | | | | |

ECS P4M890T-M2 Manual.Pdf

Preface. Copyright: This publication, including all photographs, illustrations and software, is protected under international copyright laws, with all rights reserved. Neither this manual, nor any of the material contained herein, may be reproduced without written consent of the author. Version 1.0B. Disclaimer: The information in this document is subject to change without notice. The manufacturer makes no representations or warranties with respect to the contents hereof and specifically disclaims any implied warranties of merchantability or fitness for any particular purpose. The manufacturer reserves the right to revise this publication and to make changes from time to time in the content hereof without obligation of the manufacturer to notify any person of such revision or changes. Trademark Recognition: Microsoft, MS-DOS and Windows are registered trademarks of Microsoft Corp. MMX, Pentium, Pentium-II, Pentium-III, Pentium-4, Celeron are registered trademarks of Intel Corporation. Other product names used in this manual are the properties of their respective owners and are acknowledged. Federal Communications Commission (FCC): This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to Part 15 of the FCC Rules. These limits are designed to provide reasonable protection against harmful interference in a residential installation. This equipment generates, uses, and can radiate radio frequency energy and, if not installed and used in accordance with the instructions, may cause harmful interference

Intel® Architecture Instruction Set Extensions and Future Features Programming Reference

Intel® Architecture Instruction Set Extensions and Future Features Programming Reference 319433-041 OCTOBER 2020 Intel technologies may require enabled hardware, software or service activation. No product or component can be absolutely secure. Your costs and results may vary. You may not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. All product plans and roadmaps are subject to change without notice. The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Intel disclaims all express and implied warranties, including without limitation, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement, as well as any warranty arising from course of performance, course of dealing, or usage in trade. Code names are used by Intel to identify products, technologies, or services that are in development and not publicly available. These are not “commercial” names and not intended to function as trademarks. Copies of documents which have an order number and are referenced in this document, or other Intel literature, may be obtained by calling 1-800-548-4725, or by visiting http://www.intel.com/design/literature.htm. Copyright © 2020, Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries.

MSI MEG X570 GODLIKE Datasheet

MOTHERBOARD MEG X570 GODLIKE. ONE BOARD TO RULE THEM ALL. FEATURE. Mystic Light Infinity II: Personalize your PC with 16.8 million colors / 29 effects with MSI Mystic Light APP, making your system come alive with infinity. Dynamic Dashboard: Built-in dynamic panel that indicates the status of GODLIKE and shows off your own personality. Lightning Gen4 solution: The latest Gen4 PCI-E and M.2 solution with up to 64GB/s bandwidth for maximum transfer speed. Frozr Heatsink Design: Designed with the patented fan and double ball bearings to provide best performance for enthusiast gamers and prosumers. Extended Heat-Pipe Design: Enlarge the surface of heat dissipation, connecting from MOS heatsink to chipset heatsink. M.2 Shield FROZR: Strengthened built-in M.2 thermal solution. Keeps M.2 SSDs safe while preventing throttling, making them run faster. WIFI 6: The latest wireless solution supports MU-MIMO and BSS color technology, delivering speeds up to 2400Mbps. Core Boost: Combining dual 8 pin power connectors and IR digital power design, the GODLIKE is ready for more cores also provide better performance. SPECIFICATION. Model Name: MEG X570 GODLIKE. CPU Support: Supports 2nd and 3rd Gen AMD Ryzen™ / Ryzen™ with Radeon™ Vega Graphics and 2nd Gen AMD Ryzen™ with Radeon™ Graphics Desktop Processors. CPU Socket: Socket AM4. Chipset: AMD® X570 Chipset. Graphics Interface: 4 x PCI-E 4.0 x16 slots; supports 2-way SLI / 4-way CrossFire. Memory Support: 4 DIMMs, Dual Channel DDR4. Storage: 3 x Lightning Gen 4 M.2 slots, 6 x SATA 6Gb/s ports. USB Ports: 5 x USB 3.2 (Gen2, 3A+2C) + 6 x USB 3.2 (Gen1) + 4 x USB 2.0. LAN: Killer™ E3000 2.5G LAN, Killer™ E2600 Gigabit LAN. Wi-Fi / BT: Killer™ Wi-Fi 6 AX1650. Audio: 8-Channel (7.1) HD Audio with XTREME AUDIO DAC.

2018 Annual Report on Form 10-K

2018 ANNUAL REPORT ON FORM 10-K, MARCH 2019. DEAR SHAREHOLDERS: From the industry’s first 1GHz CPU to the world’s first GPU delivering a teraflop of computing power, AMD has always stood for pushing the boundaries of what is possible. A few years ago, we made several big bets to accelerate our pace of innovation, strengthen our execution, and enable AMD to deliver a leadership portfolio of computing and graphics processors capable of increasing our share of the $75 billion high-performance computing market. In 2018, we saw those bets begin to pay off as we delivered our second straight year of greater than 20% annual revenue growth and significantly improved our gross margin and profitability from the previous year. [Charts, values for 2016 / 2017 / 2018. Revenue ($ billions): $4.3B / $5.3B / $6.5B (added $2.2B in revenue over the last 2 years). Gross margin %: 23% / 34% / 38% (significantly improved gross margin over last 2 years based on new product portfolio). R&D investment ($ billions): $1.01B / $1.20B / $1.43B (increased R&D by more than 40% over the last 2 years). Expense/revenue %: 34% / 33% / 31% (significant improvement in OPEX leverage).] Our newest Ryzen™, EPYC™ and datacenter GPU products contributed more than $1.2 billion of revenue in 2018 and helped us gain share across our priority markets. In 2018, we added 3.9% points of desktop processor unit share, 5.3% points of notebook processor unit share and met our goal of exiting the year with mid-single digit server processor market share.
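The growth claims in the letter can be checked against the rounded chart figures with a few lines of arithmetic. This is only a sketch: the year-by-year assignment of the revenue and R&D values is a reading of the chart labels, and the helper name `pct_change` is hypothetical.

```python
# Rounded chart figures in $ billions; the year assignment is an assumption
# based on the chart labels, not an audited figure.
revenue = {2016: 4.3, 2017: 5.3, 2018: 6.5}
rd_spend = {2016: 1.01, 2017: 1.20, 2018: 1.43}


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100.0


# "Added $2.2B in revenue over the last 2 years"
added_revenue = round(revenue[2018] - revenue[2016], 1)

# "Second straight year of greater than 20% annual revenue growth"
growth_2017 = pct_change(revenue[2016], revenue[2017])
growth_2018 = pct_change(revenue[2017], revenue[2018])

# "Increased R&D by more than 40% over the last 2 years"
rd_growth = pct_change(rd_spend[2016], rd_spend[2018])

if __name__ == "__main__":
    print(f"Revenue added 2016-2018: ${added_revenue}B")
    print(f"Revenue growth 2017: {growth_2017:.1f}%, 2018: {growth_2018:.1f}%")
    print(f"R&D growth 2016-2018: {rd_growth:.1f}%")
```

Because the inputs are rounded to one or two decimals, the computed percentages are approximate, but they sit comfortably on the stated side of each threshold.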