The Clouds Distributed Operating System

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


BillG the Manager

BillG the Manager “Intelli…what?”–Bill Gates The breadth of the Microsoft product line and the rapid turnover of core technologies all but precluded BillG from micro-managing the company in spite of the perceptions and lore around that topic. In less than 10 years the technology base of the business changed from the 8-bit BASIC era to the 16-bit MS-DOS era and to now the tail end of the 16-bit Windows era, on the verge of the Win32 decade. How did Bill manage this — where and how did he engage? This post introduces the topic and along with the next several posts we will explore some specific projects. At 38, having grown Microsoft as CEO from the start, Bill was leading Microsoft at a global scale that in 1993 was comparable to an industrial-era CEO. Even the legendary Thomas Watson Jr., son of the IBM founder, did not lead IBM until his 40s. Microsoft could never have scaled the way it did had BillG managed via a centralized hub-and-spoke system, with everything bottlenecked through him. In many ways, this was BillG’s product leadership gift to Microsoft—a deeply empowered organization that also had deep product conversations at the top and across the whole organization. This video from the early 1980’s is a great introduction to the breadth of Microsoft’s product offerings, even at a very early stage of the company. It also features some vintage BillG voiceover and early sales executive Vern Raburn. (Source: Microsoft videotape) Bill honed a set of undocumented principles that defined interactions with product groups. -

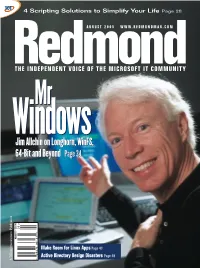

Jim Allchin on Longhorn, WinFS, 64-Bit and Beyond

AUGUST 2005, WWW.REDMONDMAG.COM, $5.95. Mr. Windows: Jim Allchin on Longhorn, WinFS, 64-Bit and Beyond (Page 34). 4 Scripting Solutions to Simplify Your Life (Page 28). Make Room for Linux Apps (Page 43). Active Directory Design Disasters (Page 49). Exchange Server stores & PSTs driving you crazy? Only $399 for 50 mailboxes; $1499 for unlimited mailboxes! Archive all mail to SQL and save 80% storage space! Email archiving solution for internal and external email. Download your FREE trial from www.gfi.com/rma. Get your FREE trial version of GFI MailArchiver for Exchange today! GFI MailArchiver for Exchange is an easy-to-use email archiving solution that enables you to archive all internal and external mail into a single SQL database. Now you can provide users with easy, centralized access to past email via a web-based search interface and easily fulfill regulatory requirements (such as the Sarbanes-Oxley Act). GFI MailArchiver leverages the journaling feature of Exchange Server 2000/2003, providing unparalleled scalability and reliability at a competitive cost. GFI MailArchiver for Exchange features: provide end-users with a single web-based location in which to search all their past email; increase Exchange performance and ease backup and restoration; end PST hell by storing email in SQL format; significantly reduce storage requirements for email by up to 80%; comply with Sarbanes-Oxley, SEC and other regulations. -

Linux Lawsuit Could Undercut Other 'Freeware' Programs - WSJ

Linux Lawsuit Could Undercut Other 'Freeware' Programs. By William M. Bulkeley, Staff Reporter of THE WALL STREET JOURNAL. Aug. 14, 2003, 11:59 pm ET. https://www.wsj.com/articles/SB106081595219055800 (See Corrections & Amplifications item below.) To many observers, tiny SCO Group Inc.'s $3 billion copyright-infringement lawsuit against International Business Machines Corp., and SCO's related demands for stiff license fees from hundreds of users of the free Linux software, seem like little more than a financial shakedown. But the early legal maneuverings in the suit suggest the impact could be far broader. For the first time, the suit promises to test the legal underpinnings that have allowed free software such as Linux to become a potent challenge to programs made by Microsoft Corp. and others. Depending on the outcome, the suit could strengthen or drastically weaken the free-software juggernaut. The reason: In filing its legal response to the suit last week, IBM relied on an obscure software license that undergirds most of the free-software industry. Called the General Public License, or GPL, it requires that the software it covers, and derivative works as well, may be copied by anyone, free of charge. IBM's argument is that SCO, in effect, "signed" this license by distributing Linux for years, and therefore can't now turn around and demand fees. -

Media Update Comes Vs

RE: IOWA COURT CASE Media Update Comes vs. Microsoft, Inc. January 22, 2007 Contact: Eileen Wixted 515-240-6115 Jim Hibbs 515-201-9004 Coverage Notes: Office 515-226-0818 1. Plaintiffs officially notify the U.S. Department of Justice and the Iowa Attorney General of Microsoft’s noncompliance with the 2002 Final Judgment in United States v. Microsoft. 2. Exhibit of interest: PX7278 shows that Vista evangelists at Microsoft raved about Apple’s Mac Tiger in 2004. WEB AND BROADCAST EDITORS PLEASE NOTE LANGUAGE IN THIS SECTION BEFORE PUBLISHING 3. Former General Counsel for software company Novell testifies about Microsoft’s anticompetitive conduct against Novell and DR DOS. 1. Plaintiffs notify the Department of Justice and Iowa Attorney General Tom Miller of Microsoft’s non-compliance with the 2002 Final Judgment in United States v. Microsoft. Today, Roxanne Conlin, co-lead counsel for the Plaintiffs in Comes v. Microsoft, sent a letter to Thomas Barnett of the United States Department of Justice and Iowa Attorney General Tom Miller, informing them that the Comes Plaintiffs have obtained confidential discovery materials from Microsoft showing that Microsoft is not complying with, and is circumventing, its disclosure obligations under the 2002 Final Judgment entered by the federal court in United States v. Microsoft. The 2002 Final Judgment requires Microsoft to fully disclose applications programming interfaces (“APIs”) and related documentation used by Microsoft. An expert retained by Plaintiffs analyzed source code and other materials produced by Microsoft in the Comes matter, and has concluded that a large number of Windows APIs used by Microsoft middleware remain undocumented. -

Virtual Memory

Today’s topics Operating Systems Brookshear, Chapter 3 Great Ideas, Chapter 10 Slides from Kevin Wayne’s COS 126 course Performance & Computer Architecture Notes from David A. Patterson and John L. Hennessy, Computer Organization and Design: The Hardware/Software Interface, Morgan Kaufmann, 1997. http://computer.howstuffworks.com/pc.htm Slides from Prof. Marti Hearst of UC Berkeley SIMS Upcoming Computability • Great Ideas, Chapter 15 • Brookshear, Chapter 11 Compsci 001 12.1 Performance Performance = 1/Time The goal for all software and hardware developers is to increase performance Metrics for measuring performance (pros/cons?) Elapsed time CPU time • Instruction count (RISC vs. CISC) • Clock cycles per instruction • Clock cycle time MIPS vs. MFLOPS Throughput (tasks/time) Other more subjective metrics? What kind of workload to be used? Applications, kernels and benchmarks (toy or synthetic) Compsci 001 12.2 Boolean Logic AND, OR, NOT, NOR, NAND, XOR Each operator has a set of rules for combining two binary inputs These rules are defined in a Truth Table (This term is from the field of Logic) Each implemented in an electronic device called a gate Gates operate on inputs of 0’s and 1’s These are more basic than operations like addition Gates are used to build up circuits that • Compute addition, subtraction, etc • Store values to be used later • Translate values from one format to another Compsci 001 12.3 Truth Tables Compsci 001 12.4 Images from http://courses.cs.vt.edu/~csonline/MachineArchitecture/Lessons/Circuits/index.html The Big Picture Since 1946 all computers have had 5 components The Von Neumann Machine Processor Input Control Memory Datapath Output What is computer architecture? Computer Architecture = Machine Organization + Instruction Set Architecture + .. -

138 C. Cross-Platform Java Also Presented a Middleware Threat To

C. Cross-Platform Java also presented a middleware threat to Microsoft’s operating system monopoly 57. Cross-platform Java is another middleware technology that has the potential to erode the applications barrier to entry by gaining widespread usage of APIs without competing directly against Windows as an operating system. 1. The nature of the Java threat 58. James Gosling and others at Sun Microsystems developed Java in significant part to provide developers a choice between writing cross-platform applications and writing applications that depend on a particular operating system. 58.1. Java consists of a series of interlocking elements designed to facilitate the creation of cross-platform applications, i.e. applications that can run on multiple operating systems. i. Gosling testified: "The Java technology is intended to make it possible to develop software applications that are not dependent on a particular operating system or particular computer hardware . A principal goal of the Java technology is to assure that a Java-based program -- unlike a traditional software application -- is no longer tied to a particular operating system and hardware platform, and does not require the developer to undertake the time-consuming and expensive effort to port the program to different platforms. As we said in the Preface to The Java Programming Language, ‘software developers creating applications in Java benefit by developing code only once, with no need to ‘port’ their applications to every software and hardware platform.’ . Because the Java technology allows developers to make software applications that can run on various JVMs on multiple platforms, it holds the promise of giving consumers greater choice in applications, operating systems, and hardware. -

Hasta La Vista Microsoft and the Vista Operating System

HASTA LA VISTA MICROSOFT AND THE VISTA OPERATING SYSTEM James R. “Doc” Ogden Kutztown University of Pennsylvania Denise T. Ogden Pennsylvania State University – Lehigh Valley Anatoly Belousov Elsa G. Collins Andrew Geiges Karl Long Kutztown University of Pennsylvania Introduction Jim Allchin, an executive in the Platform Product and Service Group, responsible for operating system development, walked into Bill Gates’ office in July 2004 to deliver bad news about the Longhorn operating system. “It’s not going to work,” said Allchin to Gates, Chairman of the Board and Steve Ballmer, CEO. Longhorn was too complex to run properly. The news got worse with reports that the team would have to start over. Mr. Gates, known for his directness and temperament, insisted the team take more time until everything worked. Upset over the mess, Gates said, “Hey, let's not throw things out we shouldn't throw out. Let's keep things in that we can keep in,” The executives agreed to reserve a final decision until Mr. Ballmer returned from a business trip (Guth, 2005). Over time it became clear that Longhorn had to start from scratch and remove all the bells and whistles that made the system so complex. After Bill Gates reluctantly agreed, the change was announced to Microsoft employees on August 26, 2004 and the new development process began in September 2004 (Guth, 2005). Often technology-oriented businesses have a more difficult time understanding the nuances of marketing because of the complex nature of the products/services. Nevertheless marketing is important and understanding customer needs becomes central to success. -

Commentary Microsoft Vs. Linux: the Changing Nature of Competition

COM-19-3908 Research Note D. Smith 13 March 2003 Commentary Microsoft vs. Linux: The Changing Nature of Competition Microsoft's attitude toward competition has changed as a result of Linux and other open-source software. Its business tactics are changing to focus on areas that have not been Microsoft's traditional strengths. Linux and open-source software are unlike any competition that Microsoft has ever faced. Microsoft is now perceived as "expensive," at least in preliminary discussions regarding Linux. There is no single competitor or group of competitors that Microsoft can challenge. Free open-source software is more a fundamental movement, and because the nature of competition has changed, Microsoft must now employ strategies that are very different. Background Microsoft has faced many types of competitors in the past. It has actually thrived on this competition, often worrying more about that than technology or customers’ wants and needs. While the constant focus and even obsession with competition has kept Microsoft remarkably agile, it has also contributed to Microsoft's sometimes overly aggressive tactics. One of these tactics involved bundling, which landed Microsoft in antitrust court in the late 1990s. It was found guilty of illegally maintaining a monopoly, but it escaped with permission (at least for now) to bundle products in any way it sees fit, with relatively minor restrictions. However, many are wondering whether this will matter in the future. Competitors have come and gone, but one tactic has remained constant — Microsoft's ability to use price to its advantage. Although neither unique to Microsoft nor the sole reason for its success, this price advantage has been effective and consistent with Microsoft's original goal — enabling PCs that run Microsoft software on every desktop. -

Erik Stevenson

Erik Stevenson From: Brad Silverberg To: pauline Subject: FW: DMTF and what to do with it... Date: Friday, January 28, 1994 5:36PM what a fucking mess. From: John Ludwig To: Brad Silverberg Subject: FW: DMTF and what to do with it... Date: Friday, January 28, 1994 4:39PM From: Jim Allchin To: johnlu Subject: FW: DMTF and what to do with it... Date: Thursday, January 27, 1994 3:55PM I thought you should see this. All I can say is "simply amazing..." Below Dan is answering my questions to him on this mess. If you have thoughts, I could use your help. thanks, jim From: Dan Shelly To: Jim Allchin; Jonathan Roberts; Richard Tong Cc: Bob Muglia; David Thompson (NT) Subject: RE: DMTF and what to do with it... Date: Monday, January 24, 1994 5:52PM Answers embedded below. I was supposed to meet with IBM this Friday to discuss our distribution of source code to the DMTF. I backed out based on the fact that our lawyers are still looking this over and it could take a *LONG* time for them to approve. What a fucking mess. > > A clear analysis of the situation 0. Is there anything in writing? > > The DMTF bylaws upon which this is all based are not clear. The original intent of the DMTF was to deliver an object level implementation. Nowhere have we committed in *writing* to deliver source code, however, if we don’t Intel has already stated that they will. Evidently the verbal agreement for the last 1.5 years has been that all work done by DMTF will be shared. -

I PLAINTIFF's |

PLAINTIFF’S Bill Neukom From: Paul Maritz To: David Thacher; Dwayne Walker Subject: FW: Netware competition Date: Wednesday, December 23, 1992 9:55AM From: Jim Allchin To: Bill Gates; Dwayne Walker; Paul Maritz Cc: Brad Silverberg; Jonathan Lazarus Subject: RE: Netware competition Date: Thursday, December 17, 1992 9:00AM From: Bill Gates To: Dwayne Walker; Paul Maritz Cc: Brad Silverberg; Jim Allchin; Jonathan Lazarus Subject: Netware competition Date: Wednesday, December 16, 1992 20:24 I read this document from Novell labeled "Selling Guide Against MS" with interest. It makes a lot of very interesting attacks on our strategy - some wrong like that we are not enhancing DOS or that their network R&D is the largest in the industry (maybe we should make it clear that this is wrong). They say that the LAN Man version of NT will be 4 to 6 months after NT. They quote every negative article written about us. One part I learned from is this C2 certification issue. They claim they are the only product going for C2 networking certification and that that is a big deal. My understanding was that C2 network is easier to get and that products like UNIX already have it. I want to understand this because it could be a huge marketing problem for us if what they say in their document is correct and we are not getting the same certification that they are. How can they be better than us when they have no preemption and most things run at ring 0. With respect to security and C2, this document claims: -that we have either not started the process or are not very far into the process -this claim is based on the notion that we have not been issued a MOU (memorandum of understanding). -

Award Winning Newsletter of the North Orange County Computer Club

Newsletter of the North Orange County Computer Club Volume 43 No 5 May 2019 $1.50 NOCCC Meetings for Sunday May 5, 2019 The consignment table will be open from 10 am to 1 pm Main Meeting Jim Sanders will present a number of: Windows 10 Tips, Tricks, and Trade secrets. Actually more tips than tricks and only one trade secret, but an important secret. Special Interest Groups (SIGs) & Main Meeting Schedule 9:00 AM – 10:30 AM 12:00 PM Noon – 1:00 PM Beginners Digital Photography ........... Science 129 3D Printing………………………………… Irvine Auditorium Questions and Answers about Digital Photography Questions and Answers about our new printer Linux for Desktop Users……………….Science 131 PIG SIG …………………………………….. Irvine Courtyard Beginners’ Questions about Linux Bring your lunch. Consume it in the open-air benches in front of the Irvine Hall. Talk about your computer and 10:30 AM – 12:00 PM Noon life experiences. 3D Printing ................................... Irvine Auditorium Questions and Answers about our new printer Advanced Digital Photography… ........ Science 129 1:00 PM – 3:00 PM Main Questions and Answers about Digital Photography Meeting Linux Administration ............................ Science 131 More topics about the Linux operating system …………………..…….. Irvine Auditorium Mobile Computing ................................. Science 109 We discuss smartphones, tablets, laptops, operating sys- tems and computer related news. VBA and Microsoft Access/Excel ........ Science 127 Jim Sanders - Windows 10 Tips. Using VBA code to enhance the capabilities of Access and Excel 3:00 PM – 4:00 PM Board Meeting………………………………………………Science 129 Verify your membership renewal information by Mark your calendars for these meeting dates checking your address label on the last page 2019: Jun2 Jul7 Aug4 Sep8 Oct6 Nov3 Dec1 2020: Jan5 Feb9 Mar1 Apr5 May3 Coffee, cookies and donuts are available during the day in the Irvine Hall lobby. -

Oral History of David Cutler

Oral History of David Cutler Interviewed by: Grant Saviers Recorded: February 25, 2016 Medina, WA CHM Reference number: X7733.2016 © 2016 Computer History Museum Oral History of David Cutler Grant Saviers: I'm Grant Saviers, Trustee at the Computer History Museum, here with Dave Cutler in his living room in Bellevue, on a beautiful Seattle day. Nice weather, for a change. I’m here to discuss with Dave his long career in the computer industry. And so we'll begin. So we were talking about your entry into computers. But let's roll back a bit into early childhood and living in Michigan and being near the Oldsmobile factory in Lansing. So tell us a little bit about your early days and high school. David Cutler: Well, I grew up in Dewitt, Michigan, which is about ten miles north of Lansing, where Oldsmobile was at. I went to Dewitt High School. I was primarily an athlete, not a student, although I had pretty good grades at Dewitt. I was a 16-letter athlete. I played football, basketball, baseball and I ran track. And I'm a Michigan State fan. Michigan State was right there in East Lansing, so I grew up close to East Lansing and Michigan State. Early on, I was very interested in model airplanes, and built a lot of model airplanes and wanted to be a pilot when I grew up. But somehow that just went by the way. And later on, I discovered I had motion sickness problems, anyway, so that wasn't going to make it.