The Evolution of Microsoft SQL Server: 1989 to 2000

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


SQL Server 2017 on Linux Quick Start Guide

SQL Server 2017 on Linux Quick Start Guide. Contents: Who should read this guide?; Getting started with SQL Server on Linux; Why SQL Server with Linux?; Supported platforms; Architectural changes; Comparing SQL on Windows vs. Linux; SQL Server installation on Linux; Installing SQL Server packages; Configuration capabilities; Licensing; Administering and -

Bag-Of-Features Image Indexing and Classification in Microsoft SQL Server Relational Database

Bag-of-Features Image Indexing and Classification in Microsoft SQL Server Relational Database Marcin Korytkowski, Rafał Scherer, Paweł Staszewski, Piotr Woldan Institute of Computational Intelligence Częstochowa University of Technology al. Armii Krajowej 36, 42-200 Częstochowa, Poland Email: [email protected], [email protected] Abstract: This paper presents a novel relational database architecture aimed to visual objects classification and retrieval. The framework is based on the bag-of-features image representation model combined with the Support Vector Machine classification and is integrated in a Microsoft SQL Server database. Keywords: content-based image processing, relational databases, image classification. I. INTRODUCTION Thanks to content-based image retrieval (CBIR) [1][2][3][4][5][6][7][8] we are able to search for similar images and classify them [9][10][11][12][13]. Images can be … data directly in the database files. The example of such an approach can be Microsoft SQL Server where binary data is stored outside the RDBMS and only the information about the data location is stored in the database tables. MS SQL Server utilizes a special field type called FileStream which integrates SQL Server database engine with NTFS file system by storing binary large object (BLOB) data as files in the file system. Microsoft SQL dialect (Transact-SQL) statements can insert, update, query, search, and back up FileStream data. Application Programming Interface provides streaming access to the data. FileStream uses operating system cache for caching file data. This helps to reduce any negative effects that FileStream data might have on the RDBMS performance. -

Socrates: the New SQL Server in the Cloud

Socrates: The New SQL Server in the Cloud Panagiotis Antonopoulos, Alex Budovski, Cristian Diaconu, Alejandro Hernandez Saenz, Jack Hu, Hanuma Kodavalla, Donald Kossmann, Sandeep Lingam, Umar Farooq Minhas, Naveen Prakash, Vijendra Purohit, Hugh Qu, Chaitanya Sreenivas Ravella, Krystyna Reisteter, Sheetal Shrotri, Dixin Tang, Vikram Wakade Microsoft Azure & Microsoft Research ABSTRACT The database-as-a-service paradigm in the cloud (DBaaS) is becoming increasingly popular. Organizations adopt this paradigm because they expect higher security, higher availability, and lower and more flexible cost with high performance. It has become clear, however, that these expectations cannot be met in the cloud with the traditional, monolithic database architecture. This paper presents a novel DBaaS architecture, called Socrates. Socrates has been implemented in Microsoft SQL Server and is available in Azure as SQL DB Hyperscale. This paper describes the key ideas and features of Socrates, and it compares the performance of Socrates … 1 INTRODUCTION The cloud is here to stay. Most start-ups are cloud-native. Furthermore, many large enterprises are moving their data and workloads into the cloud. The main reasons to move into the cloud are security, time-to-market, and a more flexible “pay-as-you-go” cost model which avoids overpaying for under-utilized machines. While all these reasons are compelling, the expectation is that a database runs in the cloud at least as well as (if not better) than on premise. Specifically, customers expect a “database-as-a-service” to be highly available (e.g., 99.999% availability), support large databases (e.g., a 100TB OLTP database), and be highly performant. -

BillG the Manager

BillG the Manager “Intelli…what?”–Bill Gates The breadth of the Microsoft product line and the rapid turnover of core technologies all but precluded BillG from micro-managing the company in spite of the perceptions and lore around that topic. In less than 10 years the technology base of the business changed from the 8-bit BASIC era to the 16-bit MS-DOS era and to now the tail end of the 16-bit Windows era, on the verge of the Win32 decade. How did Bill manage this — where and how did he engage? This post introduces the topic and along with the next several posts we will explore some specific projects. At 38, having grown Microsoft as CEO from the start, Bill was leading Microsoft at a global scale that in 1993 was comparable to an industrial-era CEO. Even the legendary Thomas Watson Jr., son of the IBM founder, did not lead IBM until his 40s. Microsoft could never have scaled the way it did had BillG managed via a centralized hub-and-spoke system, with everything bottlenecked through him. In many ways, this was BillG’s product leadership gift to Microsoft—a deeply empowered organization that also had deep product conversations at the top and across the whole organization. This video from the early 1980’s is a great introduction to the breadth of Microsoft’s product offerings, even at a very early stage of the company. It also features some vintage BillG voiceover and early sales executive Vern Raburn. (Source: Microsoft videotape) Bill honed a set of undocumented principles that defined interactions with product groups. -

SQL Server 2019 Licensing Guide

Microsoft SQL Server 2019 Licensing guide. Contents: Overview; SQL Server 2019 editions; SQL Server and Software Assurance; How SQL Server 2019 licenses are sold (Server and Cloud Enrolment); SQL Server 2019 licensing models (Core-based licensing; Server+CAL licensing); Licensing SQL Server 2019 Big Data Cluster; Licensing SQL Server 2019 components; Licensing SQL Server 2019 in a virtualized environment (Licensing individual virtual machines; Licensing for maximum virtualization); Licensing SQL Server in containers (Licensing individual containers; Licensing containers for maximum density); Advanced licensing scenarios and detailed examples (Licensing SQL Server for high availability; Licensing SQL Server for Disaster Recovery; Azure Hybrid Benefit; Licensing SQL Server for application mobility; Licensing SQL Server for non-production use; Licensing SQL Server in a multiplexed application environment); Additional product information (SQL Server 2019 migration options for Software Assurance customers; Additional product licensing resources; Licensing SQL Server for the Analytics Platform System). © 2019 Microsoft Corporation. All rights reserved. This document is for informational purposes only. MICROSOFT MAKES NO WARRANTIES, EXPRESS OR IMPLIED, IN THIS SUMMARY. Microsoft provides this material solely for informational and marketing purposes. Customers should refer to their agreements for a full understanding of their rights and obligations under Microsoft’s Volume Licensing programs. Microsoft software is licensed not sold. The value and benefit gained through use of Microsoft software and services may vary by customer. Customers with questions about differences between this material and the agreements should contact their reseller or Microsoft account manager. Microsoft does not set final prices or payment terms for licenses acquired through resellers. -

Jim Allchin on Longhorn, WinFS, 64-Bit and Beyond

AUGUST 2005 WWW.REDMONDMAG.COM $5.95 Mr. Windows: Jim Allchin on Longhorn, WinFS, 64-Bit and Beyond (Page 34). 4 Scripting Solutions to Simplify Your Life (Page 28). Make Room for Linux Apps (Page 43). Active Directory Design Disasters (Page 49). Exchange Server stores & PSTs driving you crazy? Only $399 for 50 mailboxes; $1499 for unlimited mailboxes! Archive all mail to SQL and save 80% storage space! Email archiving solution for internal and external email. Download your FREE trial from www.gfi.com/rma Get your FREE trial version of GFI MailArchiver for Exchange today! GFI MailArchiver for Exchange is an easy-to-use email archiving solution that enables you to archive all internal and external mail into a single SQL database. Now you can provide users with easy, centralized access to past email via a web-based search interface and easily fulfill regulatory requirements (such as the Sarbanes-Oxley Act). GFI MailArchiver leverages the journaling feature of Exchange Server 2000/2003, providing unparalleled scalability and reliability at a competitive cost. GFI MailArchiver for Exchange features: Provide end-users with a single web-based location in which to search all their past email; Increase Exchange performance and ease backup and restoration; End PST hell by storing email in SQL format; Significantly reduce storage requirements for email by up to 80%; Comply with Sarbanes-Oxley, SEC and other regulations. -

Using SAP Crystal Reports with SAP Sybase SQL Anywhere

Using SAP Crystal Reports with SAP Sybase SQL Anywhere. TABLE OF CONTENTS: INTRODUCTION; REQUIREMENTS; CONNECTING TO SQL ANYWHERE WITH CRYSTAL REPORTS; CREATING A SIMPLE REPORT (Adding Data to Crystal Reports; Formatting Records in Crystal Reports; Displaying Records on a Map in Crystal Reports); ADDING DATA TO CRYSTAL REPORTS USING A SQL QUERY (Inserting a Chart Displaying Queried Data); CREATING A SALES REPORT -

Linux Lawsuit Could Undercut Other 'Freeware' Programs - WSJ

https://www.wsj.com/articles/SB106081595219055800 Linux Lawsuit Could Undercut Other 'Freeware' Programs By William M. Bulkeley, Staff Reporter of THE WALL STREET JOURNAL Aug. 14, 2003 11:59 pm ET (See Corrections & Amplifications item below.) To many observers, tiny SCO Group Inc.'s $3 billion copyright-infringement lawsuit against International Business Machines Corp., and SCO's related demands for stiff license fees from hundreds of users of the free Linux software, seem like little more than a financial shakedown. But the early legal maneuverings in the suit suggest the impact could be far broader. For the first time, the suit promises to test the legal underpinnings that have allowed free software such as Linux to become a potent challenge to programs made by Microsoft Corp. and others. Depending on the outcome, the suit could strengthen or drastically weaken the free-software juggernaut. The reason: In filing its legal response to the suit last week, IBM relied on an obscure software license that undergirds most of the free-software industry. Called the General Public License, or GPL, it requires that the software it covers, and derivative works as well, may be copied by anyone, free of charge. IBM's argument is that SCO, in effect, "signed" this license by distributing Linux for years, and therefore can't now turn around and demand fees. -

Guide to Migrating from Oracle to SQL Server 2014 and Azure SQL Database

Guide to Migrating from Oracle to SQL Server 2014 and Azure SQL Database SQL Server Technical Article Writers: Yuri Rusakov (DB Best Technologies), Igor Yefimov (DB Best Technologies), Anna Vynograd (DB Best Technologies), Galina Shevchenko (DB Best Technologies) Technical Reviewer: Dmitry Balin (DB Best Technologies) Published: November 2014 Applies to: SQL Server 2014 Summary: This white paper explores challenges that arise when you migrate from an Oracle 7.3 database or later to SQL Server 2014. It describes the implementation differences of database objects, SQL dialects, and procedural code between the two platforms. The entire migration process using SQL Server Migration Assistant (SSMA) v6.0 for Oracle is explained in depth, with a special focus on converting database objects and PL/SQL code. Created by: DB Best Technologies LLC 2535 152nd Ave NE, Redmond, WA 98052 Tel: +1-855-855-3600 E-mail: [email protected] Web: www.dbbest.com Copyright This is a preliminary document and may be changed substantially prior to final commercial release of the software described herein. The information contained in this document represents the current view of Microsoft Corporation on the issues discussed as of the date of publication. Because Microsoft must respond to changing market conditions, it should not be interpreted to be a commitment on the part of Microsoft, and Microsoft cannot guarantee the accuracy of any information presented after the date of publication. This White Paper is for informational purposes only. MICROSOFT MAKES NO WARRANTIES, EXPRESS, IMPLIED OR STATUTORY, AS TO THE INFORMATION IN THIS DOCUMENT. Complying with all applicable copyright laws is the responsibility of the user. -

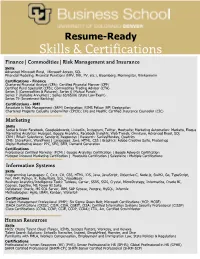

Resume Ready Resource Guide

Resume-Ready Skills & Certifications Finance | Commodities | Risk Management and Insurance Skills Advanced Microsoft Excel, Microsoft Access, SQL Financial Modeling, Financial Functions (NPV, IRR, PV, etc.), Bloomberg, Morningstar, thinkorswim Certifications - Finance Chartered Financial Analyst (CFA); Certified Financial Planner (CFP) Certified Fund Specialist (CFS); Commodities Trading Advisor (CTA) Series 3 (Commodities & Futures); Series 6 (Mutual Funds) Series 7 (Variable Annuities) ; Series 63/65/66 (State Law Exam) Series 79 (Investment Banking) Certifications - RMI Associate in Risk Management (ARM) Designation; RIMS Fellow (RF) Designation Chartered Property Casualty Underwriter (CPCU); Life and Health; Certified Insurance Counselor (CIC) Marketing Skills Social & Web: Facebook, GoogleAdwords, LinkedIn, Instagram, Twitter, Hootsuite; Marketing Automation: Marketo, Eloqua Marketing Analytics: Hubspot, Google Analytics, Facebook Insights, Web Trends, Omniture, Advanced Excel, SQL CRM | Email: Salesforce, Sendgrid, Responsys | Research: SurveyMonkey, Qualtrics CMS: SharePoint, WordPress | Languages: Java, HTML, CSS | Graphics: Adobe Creative Suite, Photoshop Digital Marketing Areas: PPC, SEO, SEM, Demand Generation Certifications Professional Certified Marketer (PCM) | Google Analytics Certification | Google Adwords Certification Hubspot Inbound Marketing Certification | Hootsuite Certification | Salesforce - Multiple Certifications Information Systems Skills Programming Languages: C, C++, C#, CSS, HTML, iOS, Java, JavaScript, Objective-C, -

SAP Sybase (ASE) Third-Party Support

SAP SYBASE (ASE) THIRD-PARTY SUPPORT Overview Spinnaker Support is a leading global provider of third-party support and managed services for SAP Sybase (Adaptive Server Enterprise or ASE) database products. Spinnaker Support’s third-party software support replaces SAP’s annual maintenance and support. Third-party support is always at least half the cost of SAP support and provides more services through an assigned support team and other personalized service components. Your operations depend on Sybase running smoothly and efficiently so that your transactional data is available at a moment’s notice. By switching to third-party Sybase support, you gain high responsiveness and faster problem resolution when problems do arise. You can remain on your existing Sybase solutions for as long as needed and can rely on our expert advice when you eventually upgrade or migrate away from your current Sybase version. THE SYBASE DILEMMA Following SAP’s acquisition of Sybase in 2011, Sybase customers have witnessed a steady decline in the commitment to deliver new innovations, product enhancements, and appropriate support resources. With the announced 2025 End of Mainstream Maintenance (EoMM) for Sybase 16.0, organizations are feeling new pressure to either upgrade to SAP HANA or migrate to an alternative database platform. The Sybase EoMM, along with the rising cost and decreasing quality of SAP-provided support, has put IT leaders on the defensive, pressuring them to make a premature decision regarding the future of their stable, reliable Sybase solution. SUPPORTED VERSIONS All Sybase database releases and editions SUPPORTED PRODUCTS • Sybase Adaptive Server Enterprise (Sybase ASE) • Sybase Advantage Database Server (Sybase ADS) • Sybase Replication Server • Sybase IQ • Sybase SQL Anywhere • Sybase PowerBuilder • Sybase PowerDesigner • Sybase Mainframe Connect • Sybase EA Server -

Lesson 4: Optimize a Query Using the Sybase IQ Query Plan

Author: Courtney Claussen – SAP Sybase IQ Technical Evangelist Contributor: Bruce McManus – Director of Customer Support at Sybase Getting Started with SAP Sybase IQ Column Store Analytics Server Lesson 4: Optimize a Query using the SAP Sybase IQ Query Plan Copyright (C) 2012 Sybase, Inc. All rights reserved. Unpublished rights reserved under U.S. copyright laws. Sybase and the Sybase logo are trademarks of Sybase, Inc. or its subsidiaries. SAP and the SAP logo are trademarks or registered trademarks of SAP AG in Germany and in several other countries all over the world. All other trademarks are the property of their respective owners. (R) indicates registration in the United States. Specifications are subject to change without notice. Table of Contents: 1. Introduction; 2. SAP Sybase IQ Query Processing; 3. SAP Sybase IQ Query Plans; 4. What a Query Plan Looks Like; 5. What a Query Plan Will Tell You; 5.1 Query Tree Nodes and Node Detail; 5.1.1 Root Node Details