
Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


The Conspiracy of Law and the State in Anatole France's "Crainquebille"; Or Law and Literature Comes of Age, 24 Loy

Loyola University Chicago Law Journal, Volume 24, Issue 2-3 (Winter 1993), Article 3. The Conspiracy of Law and the State in Anatole France's "Crainquebille"; or Law and Literature Comes of Age. James D. Redwood, Assoc. Prof. of Law, Albany Law School of Union University. Follow this and additional works at: http://lawecommons.luc.edu/luclj Part of the Law Commons. Recommended Citation: James D. Redwood, The Conspiracy of Law and the State in Anatole France's "Crainquebille"; or Law and Literature Comes of Age, 24 Loy. U. Chi. L. J. 179 (1993). Available at: http://lawecommons.luc.edu/luclj/vol24/iss2/3 This Article is brought to you for free and open access by LAW eCommons. It has been accepted for inclusion in Loyola University Chicago Law Journal by an authorized administrator of LAW eCommons. For more information, please contact [email protected].

A quoi servirait de changer les institutions si l'on ne change pas les moeurs? Il faudrait que [le juge] changeât de coeur. Que sont les juges aujourd'hui pour la plupart? Des machines à condamner, des moulins à moudre des sentences. Il faudrait qu'ils prissent un coeur humain. Il faudrait qu' . . . un juge fût un homme. Mais c'est beaucoup demander.¹

I. INTRODUCTION

The law and literature movement appears at last to have come of age. Generally considered born in 1973 after a labor and delivery that can only be described as daunting,² the movement, if such it can be called, passed a rather quiet and uneventful childhood before bursting into adolescence with all the frenetic energy char-

* Associate Professor of Law, Albany Law School of Union University; B.A., 1971, Oberlin College; J.D., Loyola Law School, Los Angeles, 1983.

Time Present the Newsletter of the T.S

Time Present: The Newsletter of the T.S. Eliot Society, number 73, spring 2011.

Contents. Essays: Un Présent Parfait: T.S. Eliot in Paris; Alain-Fournier and the Tutoring of Tom Eliot. Public Sightings. Book Reviews. Eliot News. Paris Conference. Abstracts from the Modern Language Association.

“Un Présent Parfait”: T. S. Eliot in Paris, 1910-1911

As Eliot acknowledged in his essay in French “What France Means to You,” he had the “exceptional good fortune” to live in Paris during the academic year 1910-1911. While he went there with the goals of finding his poetic voice, attending the courses of Henri Bergson at the Collège de France, improving his skills in French and his knowledge of contemporary French literature, and becoming a cosmopolitan young man of the world, he found himself in the French capital during an amazing period of intellectual and artistic developments. It was literally seething with a diversity of ideas that were innovative, exciting, and often conflicting from a host of literary and intellectual figures such as Claudel, Gide, Perse, Bergson, Maurras, Durkheim, and Curie. Its cultural riches were never more tantalizing with extraordinary happenings occurring at an amazing pace: the first exhibition of the Cubists (whose techniques and themes influenced “The Love Song” and The Waste Land); the daring ballets of the Ballets Russes (whose character Petrouchka was a model for Prufrock); the presentation of Wagner’s Der Ring des Nibelungen for the first time ever at the Paris Opéra (whose refrain of the Rhine-Daughters is echoed in The Waste Land), and the scandalous multimedia extravaganza Le Martyre de Saint Sébastien (which was one inspiration for “The Love Song of Saint Sebastian”).

The Critical Orientation of T. S. Eliot

Loyola University Chicago, Loyola eCommons, Master's Theses, Theses and Dissertations, 1962. The Critical Orientation of T. S. Eliot. Honora Remes, Loyola University Chicago. Follow this and additional works at: https://ecommons.luc.edu/luc_theses Part of the English Language and Literature Commons. Recommended Citation: Remes, Honora, "The Critical Orientation of T. S. Eliot" (1962). Master's Theses. 1781. https://ecommons.luc.edu/luc_theses/1781 This Thesis is brought to you for free and open access by the Theses and Dissertations at Loyola eCommons. It has been accepted for inclusion in Master's Theses by an authorized administrator of Loyola eCommons. For more information, please contact [email protected]. This work is licensed under a Creative Commons Attribution-Noncommercial-No Derivative Works 3.0 License. Copyright © 1962 Honora Remes

THE CRITICAL ORIENTATION OF T. S. ELIOT. Sister Honora Remes, D.C. A Thesis Submitted to the Faculty of the Graduate School of Loyola University in Partial Fulfillment of the Requirements for the Degree of Master of Arts.

LIFE. Sister Honora Remes was born in New Prague, Minnesota, March 23, 19Y1. She was graduated from New Prague Public High School, June, 1954, and entered the Community of the Daughters of Charity of St. Vincent de Paul, September, 1956, after one year at the College of St. Teresa, Winona, Minnesota. She continued her education at Marillac College, Normandy 21, Missouri, and was graduated August, 1960, with a degree of Bachelor of Arts. She began her graduate studies at Loyola University in September, 1960.

The Nobel Peace Prize

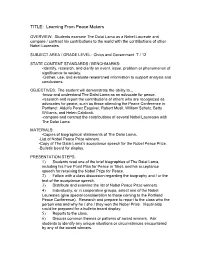

TITLE: Learning From Peace Makers

OVERVIEW: Students examine The Dalai Lama as a Nobel Laureate and compare / contrast his contributions to the world with the contributions of other Nobel Laureates.

SUBJECT AREA / GRADE LEVEL: Civics and Government 7 / 12

STATE CONTENT STANDARDS / BENCHMARKS:
-Identify, research, and clarify an event, issue, problem or phenomenon of significance to society.
-Gather, use, and evaluate researched information to support analysis and conclusions.

OBJECTIVES: The student will demonstrate the ability to...
-know and understand The Dalai Lama as an advocate for peace.
-research and report the contributions of others who are recognized as advocates for peace, such as those attending the Peace Conference in Portland: Adolfo Pérez Esquivel, Robert Musil, William Schulz, Betty Williams, and Helen Caldicott.
-compare and contrast the contributions of several Nobel Laureates with The Dalai Lama.

MATERIALS:
-Copies of biographical statements of The Dalai Lama.
-List of Nobel Peace Prize winners.
-Copy of The Dalai Lama's acceptance speech for the Nobel Peace Prize.
-Bulletin board for display.

PRESENTATION STEPS:
1) Students read one of the brief biographies of The Dalai Lama, including his Five Point Plan for Peace in Tibet, and his acceptance speech for receiving the Nobel Prize for Peace.
2) Follow with a class discussion regarding the biography and / or the text of the acceptance speech.
3) Distribute and examine the list of Nobel Peace Prize winners.
4) Individually, or in cooperative groups, select one of the Nobel Laureates (give special consideration to those coming to the Portland Peace Conference). Research and prepare to report to the class who the person was and why he / she / they won the Nobel Prize.

Friedensnobelpreisträger (Nobel Peace Prize Laureates)

WikiPress. Friedensnobelpreisträger: Geschichte, Personen, Organisationen (Nobel Peace Prize Laureates: History, People, Organizations). Compiled from the free encyclopedia Wikipedia by Achim Raschka.

Every year on December 10, the anniversary of Alfred Nobel's death, the Nobel Peace Prize is presented by the Norwegian king in Oslo. In 1901 Henri Dunant became the first to receive the coveted award, for founding the Red Cross and for his initiative in bringing about the Geneva Convention. The prize, which Nobel had endowed in his will, officially recognized the achievements of the peace movement for the first time anywhere in the world. Unlike the other Nobel Prizes, the Peace Prize can also be awarded to organizations involved in a peace process. This book presents every Nobel Peace Prize laureate since 1901 in detailed, expert articles. All articles are compiled from the free encyclopedia Wikipedia and paint a vivid picture of the diversity, dynamism, and quality of free knowledge, to which anyone can contribute.

Achim Raschka spent several years of his life studying biology and completed his diploma four years ago, specializing in zoology, human biology, ecology, and paleontology. He is married and the father of two children, holds a skilled-worker certificate as a physics laboratory technician, did his civilian service at a youth hostel in northern Hesse, and is an avid role-playing gamer and heavy metal fan. During his studies he supervised various courses, mainly in ecology (soil zoology and limnology), zoology, and evolutionary biology and systematics. Since graduating he has regularly taught his own ecology courses as a lecturer at the Freie Universität Berlin. He also worked briefly at the German Human Genome Project (DHGP) and for several years managed portals on various internet platforms. Achim Raschka came to Wikipedia during his parental leave for his younger son.

Pacifists During the First World War

PACIFISTS DURING THE FIRST WORLD WAR, Nº 24 - SEPTEMBER 2015

INDEX
Editorial
- An essential factor and actor
In Depth
- Rosa Luxemburg: anticapitalism to get to the pacifist eutopia
- Conscription and Conscience in Great Britain
- “The whole world is our homeland”: Anarchist antimilitarism
- Illusion and vision: the scientific pacifism of Alfred H. Fried
- The Practical Internationalism of Esperanto
Interview
- Interview with Joan Botam, Catalan priest and Capuchin friar
Recommendations
- Materials and resources recommended by the ICIP
Platform
- 100 years after the genocide: Armenia at the crossroads
- A bold statement
About ICIP
- News, activities and publications about the ICIP

EDITORIAL
An essential factor and actor
Rafael Grasa, President of the International Catalan Institute for Peace

The present issue deals with some of the peace and anti-militarist movements linked, chronologically or thematically, to World War I, marking the culmination of the commemoration of its centennial. We specifically take a look at the more political movements, such as those linked to anarchism (First International) or to the main leader of the Spartacus League, Rosa Luxemburg, the anti-draft groups and the pioneering work in the academic world of Alfred Fried, one of the creators of the epistemic community that is behind the most radical perspectives of international relations and peace research. In addition, Joan Botam, a priest and ecumenist, reflects on the legacy of the Great War and opposition to it among the various peace movements inspired by religious beliefs.

1921 Fun Facts, Trivia & History

1921 Fun Facts, Trivia & History

Quick Facts from 1921:
• The America Changing Event: The first radio baseball game was broadcast. Harold Arlin announced the Pirates-Phillies game from Forbes Field over Westinghouse KDKA, in Pittsburgh. The Pirates won, 8-5.
• Soviet Russia and Poland signed the Treaty of Riga establishing a permanent border between the two countries.
• The Russian Great Famine of 1921/22 killed 5 million people.
• The Communist Party of China was formed.
• Influential Songs include Second Hand Rose and My Man by Fanny Brice. Also: St. Louis Blues by the Original Dixie Land Band and others.
• The Movies to Watch include The Kid, The Three Musketeers, The Haunted Castle and The Sheik.
• The Most Famous Person in America was probably Roscoe ‘Fatty’ Arbuckle.
• New York Yankee pitcher Babe Ruth hit his 138th home run, continually growing that record to 714 in 1935.
• Adolf Hitler became the Chairman of the Nazi Party in his rise to power and prominence in Germany.
• Price of a pound of peanut butter in 1921: 15 cents

Top Ten Baby Names of 1921:
Mary, Dorothy, Helen, Margaret, Ruth, Virginia, Mildred, Betty, Frances, Elizabeth
John, Robert, William, James, Charles, George, Joseph, Edward, Frank, Richard

US Life Expectancy (1921): Males: 60.0 years, Females: 61.8 years

The Stars: Theda Bara, Pola Negri, Mary Pickford

Miss America: Margaret Gorman (Washington, DC)

Firsts, Inventions, and Wonders: Guccio Gucci started selling his handbags. Coco Chanel introduced “Chanel No. 5”. On October 23, 1921, an American officer selected the body of the first “Unknown Soldier”. “Andy’s Candies” was founded, but maker Andy Kanelos realized that men would never buy chocolates for women with another man’s name written on them.

Whose Priorities? a Guide for Campaigners on Military and Social Spending

Whose Priorities? A guide for campaigners on military and social spending. INTERNATIONAL PEACE BUREAU

Published by: INTERNATIONAL PEACE BUREAU, 2007, working for a world without war. ISBN 92-95006-04-6. International Peace Bureau, www.ipb.org, [email protected], Tel: +41-22-731-6429, Fax: +41-22-738-9419, 41 rue de Zurich, 1201 Geneva, Switzerland. Written and edited by Colin Archer. Design: Parfab S.A., 44 Rue de Berne, 1201 Geneva. Printing: Imprimerie Minute, Geneva. With thanks to: Henrietta Wilkins, Mathias Nnane Ekah, Emma Henriksson and all the groups whose material is featured in the book as well as IPB staff, Board and members. This book is dedicated to Ruth Leger Sivard. IPB gratefully acknowledges funding support from the Development Cooperation Agency of Catalunya and Rissho Kosei-Kai, Japan. Cover photos: courtesy Netwerk Vlaanderen (www.netwerkvlaanderen.be) and World Water Assessment Programme (www.unesco.org/water/wwap)

Table of contents
1. Introduction
2. FAQ - Frequently Asked Questions
3. Factual Background
4. Developing campaigns on spending priorities
5. Examples of creative campaigning
6. Building a worldwide network
7. Websites
8. Publications
9. About the International Peace Bureau
10. IPB - Publications catalogue

1. INTRODUCTION

“Warfare is the product of cowardice; it takes bravery to forego easy answers and find peaceful resolutions.”

The Role of Translation in the Nobel Prize in Literature : a Case Study of Howard Goldblatt's Translations of Mo Yan's Works

Lingnan University, Digital Commons @ Lingnan University, Theses & Dissertations, Department of Translation, 3-9-2016. The role of translation in the Nobel Prize in literature : a case study of Howard Goldblatt's translations of Mo Yan's works. Yau Wun YIM. Follow this and additional works at: https://commons.ln.edu.hk/tran_etd Part of the Applied Linguistics Commons, and the Translation Studies Commons. Recommended Citation: Yim, Y. W. (2016). The role of translation in the Nobel Prize in literature: A case study of Howard Goldblatt's translations of Mo Yan's works (Master's thesis, Lingnan University, Hong Kong). Retrieved from http://commons.ln.edu.hk/tran_etd/16/ This Thesis is brought to you for free and open access by the Department of Translation at Digital Commons @ Lingnan University. It has been accepted for inclusion in Theses & Dissertations by an authorized administrator of Digital Commons @ Lingnan University.

Terms of Use: The copyright of this thesis is owned by its author. Any reproduction, adaptation, distribution or dissemination of this thesis without express authorization is strictly prohibited. All rights reserved.

THE ROLE OF TRANSLATION IN THE NOBEL PRIZE IN LITERATURE: A CASE STUDY OF HOWARD GOLDBLATT’S TRANSLATIONS OF MO YAN’S WORKS, by YIM Yau Wun 嚴柔媛. A thesis submitted in partial fulfillment of the requirements for the Degree of Master of Philosophy in Translation. MPHIL, LINGNAN UNIVERSITY, 2016

ABSTRACT

The purpose of this thesis is to explore the role of the translator and translation in the Nobel Prize in Literature through an illustration of the case of Howard Goldblatt’s translations of Mo Yan’s works.

Alfred Hermann Fried Papers, 1914-1921

Register of the Alfred Hermann Fried Papers, 1914-1921
http://oac.cdlib.org/findaid/ark:/13030/tf9q2nb3vj

Contact Information: Hoover Institution Archives, Stanford University, Stanford, California 94305-6010. Phone: (650) 723-3563 Fax: (650) 725-3445 Email: [email protected]
Prepared by: Keith Jantzen
Date Completed: November 1981
© 1999 Hoover Institution Archives. All rights reserved.

Descriptive Summary
Title: Alfred Hermann Fried Papers
Date (inclusive): 1914-1921
Collection number: XX300
Creator: Fried, Alfred Hermann, 1864-1921
Collection Size: 5 manuscript boxes (2 linear feet)
Repository: Hoover Institution Archives, Stanford, California 94305-6010
Abstract: Diaries, correspondence, clippings, and notes, relating to the international peace movement, particularly during World War I, pacifism, international cooperation, and the World War I war guilt question.
Language: German.

Access: Collection open for research.
Publication Rights: For copyright status, please contact the Hoover Institution Archives.
Preferred Citation: [Identification of item], Alfred Hermann Fried Papers, [Box no.], Hoover Institution Archives.

Transplanting Surrealism in Greece - a Scandal or Not?

International Journal of Social and Educational Innovation (IJSEIro), Volume 2 / Issue 3 / 2015

Transplanting Surrealism in Greece - a Scandal or Not?
NIKA Maklena, University of Tirana, Albania. E-mail: [email protected]
Received 26.01.2015; Accepted 10.02.2015

Abstract
The transplanting of the surrealist movement and its literature to Greece, and the response from the critics and the philological and journalistic circles of the time, is of special importance in the history of Modern Greek Literature. Greek critics and readers, who were used to a traditional, patriotic and strictly rule-conforming literature, found it hard to accept this kind of writing. The modern Greek surrealist writers, working in close cooperation mainly with French surrealist writers, were subjected to harsh criticism for their surrealist, absurd, weird and abstract output. This reaction against the transplanting of surrealism to Greece caused the so-called “surrealist scandal”, one of the biggest scandals in Greek letters.

Keywords: Surrealism, Modern Greek Literature, criticism, surrealist scandal, transplanting, Greek letters

1. Introduction
When André Breton published the First Surrealist Manifesto in 1924, Greece had started to produce the first modern works of its literature. Everything modern arrives late in Greece due to a number of internal factors (the poetry collection “Mythistorima” (1935) by Giorgios Seferis is considered the first modern work in Greek literature, according to Alexandros Argyriou, History of Greek Literature and its perception over years between two World Wars (1918-1940), volume A, Kastanioti Publications, Athens 2002, pp. 534-535). Yet, on the other hand, Greek writers continued to strongly embrace the new modern spirit prevailing all over Europe.

Liste der Nobelpreisträger (List of Nobel Laureates)

| Year | Physics | Chemistry | Physiology or Medicine | Literature | Peace | Economic Sciences |
|---|---|---|---|---|---|---|
| 1901 | Wilhelm Conrad Röntgen | Jacobus H. van ’t Hoff | Emil von Behring | Sully Prudhomme | Henry Dunant; Frédéric Passy | - |
| 1902 | Hendrik Antoon Lorentz; Pieter Zeeman | Emil Fischer | Ronald Ross | Theodor Mommsen | Élie Ducommun; Albert Gobat | - |
| 1903 | Henri Becquerel; Pierre Curie; Marie Curie | Svante Arrhenius | Niels Ryberg Finsen | Bjørnstjerne Bjørnson | William Randal Cremer | - |
| 1904 | John William Strutt | William Ramsay | Ivan Pavlov | Frédéric Mistral; José Echegaray | Institut de Droit international | - |
| 1905 | Philipp Lenard | Adolf von Baeyer | Robert Koch | Henryk Sienkiewicz | Bertha von Suttner | - |
| 1906 | Joseph John Thomson | Henri Moissan | Camillo Golgi; Santiago Ramón y Cajal | Giosuè Carducci | Theodore Roosevelt | - |
| 1907 | Albert A. Michelson | Eduard Buchner | Alphonse Laveran | Rudyard Kipling | Ernesto Teodoro Moneta; Louis Renault | - |
| 1908 | Gabriel Lippmann | Ernest Rutherford | Ilya Mechnikov; Paul Ehrlich | Rudolf Eucken | Klas Pontus Arnoldson; Fredrik Bajer | - |
| 1909 | Guglielmo Marconi; Ferdinand Braun | Wilhelm Ostwald | Theodor Kocher | Selma Lagerlöf | Auguste Beernaert; Paul Henri d’Estournelles de Constant | - |
| 1910 | Johannes Diderik van der Waals | Otto Wallach | Albrecht Kossel | Paul Heyse | Permanent International Peace Bureau | - |
| 1911 | Wilhelm Wien | Marie Curie | Allvar Gullstrand | Maurice Maeterlinck | Tobias Asser; Alfred Fried | - |
| 1912 | Gustaf Dalén | Victor Grignard; Paul Sabatier | Alexis Carrel | Gerhart Hauptmann | Elihu Root | - |
| 1913 | Heike Kamerlingh Onnes | Alfred Werner | Charles Robert Richet | Rabindranath Tagore | Henri La Fontaine | - |

Theodore