The University of Chicago Cue Selection and Category Restructuring in Sound Change a Dissertation Submitted to the Faculty of Th

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

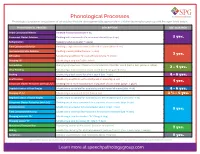

Phonological Processes

Phonological processes are patterns of articulation that are developmentally appropriate in children learning to speak up until the ages listed below.

| PHONOLOGICAL PROCESS | DESCRIPTION | AGE ACQUIRED |
| --- | --- | --- |
| Initial Consonant Deletion | Omitting first consonant (hat → at) | |
| Consonant Cluster Deletion | Omitting both consonants of a consonant cluster (stop → op) | 2 yrs. |
| Reduplication | Repeating syllables (water → wawa) | |
| Final Consonant Deletion | Omitting a singleton consonant at the end of a word (nose → no) | |
| Unstressed Syllable Deletion | Omitting a weak syllable (banana → nana) | 3 yrs. |
| Affrication | Substituting an affricate for a nonaffricate (sheep → cheep) | |
| Stopping /f/ | Substituting a stop for /f/ (fish → tish) | |
| Assimilation | Changing a phoneme so it takes on a characteristic of another sound (bed → beb, yellow → lellow) | 3 - 4 yrs. |
| Velar Fronting | Substituting a front sound for a back sound (cat → tat, gum → dum) | |
| Backing | Substituting a back sound for a front sound (tap → cap) | 4 - 5 yrs. |
| Deaffrication | Substituting an affricate with a continuant or stop (chip → sip) | 4 yrs. |
| Consonant Cluster Reduction (without /s/) | Omitting one or more consonants in a sequence of consonants (grape → gape) | |
| Depalatalization of Final Singles | Substituting a nonpalatal for a palatal sound at the end of a word (dish → dit) | 4 - 6 yrs. |
| Stopping of /s/ | Substituting a stop sound for /s/ (sap → tap) | 3 ½ - 5 yrs. |
| Depalatalization of Initial Singles | Substituting a nonpalatal for a palatal sound at the beginning of a word (shy → ty) | |
| Consonant Cluster Reduction (with /s/) | Omitting one or more consonants in a sequence of consonants (step → tep) | |
| Alveolarization | Substituting an alveolar for a nonalveolar sound (chew → too) | 5 yrs. |
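Several of the processes listed above can be read as simple rewrite rules over a string of sounds. The sketch below is a toy illustration only. It assumes each word is a tuple of rough one-symbol-per-sound transcriptions (so "cat" is k-a-t and "nose" is n-o-z), and every name in it (`VOWELS`, `substitute`, the mapping tables) is hypothetical rather than taken from the chart.

```python
# Toy model: a word is a tuple of rough sound symbols (an assumption made
# for illustration; the chart itself gives only spelled examples).
VOWELS = {"a", "e", "i", "o", "u"}

def initial_consonant_deletion(word):
    """Drop a word-initial consonant, e.g. hat -> at."""
    if word and word[0] not in VOWELS:
        return word[1:]
    return word

def final_consonant_deletion(word):
    """Drop a singleton consonant at the end of a word, e.g. nose -> no."""
    if len(word) >= 2 and word[-1] not in VOWELS and word[-2] in VOWELS:
        return word[:-1]
    return word

def substitute(word, mapping):
    """Replace each sound that has an entry in `mapping`; keep the rest."""
    return tuple(mapping.get(sound, sound) for sound in word)

# Substitution processes expressed as lookup tables (hypothetical names).
VELAR_FRONTING = {"k": "t", "g": "d"}   # cat -> tat, gum -> dum
BACKING = {"t": "k", "d": "g"}          # tap -> cap
STOPPING_S = {"s": "t"}                 # sap -> tap

if __name__ == "__main__":
    print(substitute(("k", "a", "t"), VELAR_FRONTING))
    print(final_consonant_deletion(("n", "o", "z")))
```

Keeping the substitution processes as plain lookup tables makes it easy to see that velar fronting and backing are mirror images of each other in this toy encoding.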

The Phonology, Phonetics, and Diachrony of Sturtevant's

Indo-European Linguistics 7 (2019) 241–307, brill.com/ieul. The phonology, phonetics, and diachrony of Sturtevant’s Law. Anthony D. Yates, University of California, Los Angeles. Abstract: This paper presents a systematic reassessment of Sturtevant’s Law (Sturtevant 1932), which governs the differing outcomes of Proto-Indo-European voiced and voiceless obstruents in Hittite (Anatolian). I argue that Sturtevant’s Law was a conditioned pre-Hittite sound change whereby (i) contrastively voiceless word-medial obstruents regularly underwent gemination (cf. Melchert 1994), but gemination was blocked for stops in pre-stop position; and (ii) the inherited [±voice] contrast was then lost, replaced by the [±long] opposition observed in Hittite (cf. Blevins 2004). I provide empirical and typological support for this novel restriction, which is shown not only to account straightforwardly for data that is problematic under previous analyses, but also to be phonetically motivated, a natural consequence of the poorly cued durational contrast between voiceless and voiced stops in pre-stop environments. I develop an optimality-theoretic analysis of this gemination pattern in pre-Hittite, and discuss how this grammar gave rise to synchronic Hittite via “transphonologization” (Hyman 1976, 2013). Finally, it is argued that this analysis supports deriving the Hittite stop system from the Proto-Indo-European system as traditionally reconstructed with an opposition between voiceless, voiced, and breathy voiced stops (contra Kloekhorst 2016, Jäntti 2017). Keywords: Hittite – Indo-European – diachronic phonology – language change – phonological typology. © Anthony D. Yates, 2019 | doi:10.1163/22125892-00701006. This is an open access article distributed under the terms of the CC-BY-NC 4.0 License.

Himalayan Linguistics Segmental and Suprasegmental Features of Brokpa

Himalayan Linguistics. Segmental and suprasegmental features of Brokpa. Pema Wangdi, James Cook University. ABSTRACT: This paper analyzes segmental and suprasegmental features of Brokpa, a Trans-Himalayan (Tibeto-Burman) language belonging to the Central Bodish (Tibetic) subgroup. Segmental phonology includes segments of speech including consonants and vowels and how they make up syllables. Suprasegmental features include register tone system and stress. We examine how syllable weight or moraicity plays a determining role in the placement of stress, a major criterion for phonological word in Brokpa; we also look at some other evidence for phonological words in this language. We argue that synchronic segmental and suprasegmental features of Brokpa provide evidence in favour of a number of innovative processes in this archaic Bodish language. We conclude that Brokpa, a language historically rich in consonant clusters with a simple vowel system and a relatively simple prosodic system, is losing its consonant clusters and developing additional complexities including lexical tones. KEYWORDS: Parallelism in drift, pitch harmony, register tone, stress, suprasegmental. This is a contribution from Himalayan Linguistics, Vol. 19(1): 393-422, ISSN 1544-7502, © 2020. All rights reserved. This Portable Document Format (PDF) file may not be altered in any way. Tables of contents, abstracts, and submission guidelines are available at escholarship.org/uc/himalayanlinguistics. 1 Introduction: Brokpa, a Central Bodish language, has a complicated phonological system. This paper aims at analysing its segmental and suprasegmental features. We begin with brief background information and the basic typological features of Brokpa in §1.

UC Berkeley Proceedings of the Annual Meeting of the Berkeley Linguistics Society

UC Berkeley, Proceedings of the Annual Meeting of the Berkeley Linguistics Society. Title: Perception of Illegal Contrasts: Japanese Adaptations of Korean Coda Obstruents. Permalink: https://escholarship.org/uc/item/6x34v499. Journal: Proceedings of the Annual Meeting of the Berkeley Linguistics Society, 36(36). ISSN: 2377-1666. Author: Whang, James D. Y. Publication Date: 2016. Peer reviewed.

PROCEEDINGS OF THE THIRTY SIXTH ANNUAL MEETING OF THE BERKELEY LINGUISTICS SOCIETY, February 6-7, 2010. General Session. Special Session: Language Isolates and Orphans. Parasession: Writing Systems and Orthography. Editors: Nicholas Rolle, Jeremy Steffman, John Sylak-Glassman. Berkeley Linguistics Society, University of California, Berkeley, Department of Linguistics, 1203 Dwinelle Hall, Berkeley, CA 94720-2650, USA. All papers copyright © 2016 by the Berkeley Linguistics Society, Inc. All rights reserved. ISSN: 0363-2946. LCCN: 76-640143.

Contents

- Acknowledgments
- Foreword
- Basque Genitive Case and Multiple Checking (Xabier Artiagoitia)
- Language Isolates and Their History, or, What's Weird, Anyway? (Lyle Campbell)
- Putting and Taking Events in Mandarin Chinese (Jidong Chen)
- Orthography Shapes Semantic and Phonological Activation in Reading (Hui-Wen Cheng and Catherine L. Caldwell-Harris)
- Writing in the World and Linguistics (Peter T. Daniels)
- When is Orthography Not Just Orthography? The Case of the Novgorod Birchbark Letters (Andrew Dombrowski)
- Gesture-to-Speech Mismatch in the Construction of Problem Solving Insight (J.T.E. Elms)
- Semantically-Oriented Vowel Reduction in an Amazonian Language (Caleb Everett)
- Universals in the Visual-Kinesthetic Modality: Politeness Marking Features in Japanese Sign Language (JSL) (Johnny George)

The Emergence of Consonant-Vowel Metathesis in Karuk

The Emergence of Consonant-Vowel Metathesis in Karuk. Andrew Garrett & Tyler Lau, University of California, Berkeley. Society for the Study of the Indigenous Languages of the Americas (SSILA) 2018, Salt Lake City, UT, USA, January 6, 2018.

Acknowledgements. Many thanks to the following:

• Karuk master speakers Sonny Davis and the late Lucille Albers, Charlie Thom, and especially Vina Smith;
• research collaborators LuLu Alexander, Tamara Alexander, Crystal Richardson, and Florrine Super (in Yreka) and Erik H. Maier, Line Mikkelsen, and Clare Sandy (at Berkeley); and
• Susan Lin and the audience at UC Berkeley’s Phonetics and Phonology Forum for insightful comments and suggestions.

Data in this talk is drawn from Ararahi’urípih, a Karuk dictionary and text corpus (http://linguistics.berkeley.edu/~karuk).

Overview

• Karuk V1CV2 sequences show much coarticulation of V1 into V2: /uCi/ → [uCʷi], /iCa/ → [iCʲa], /iCu/ → [iCʲu] (all high V1)
• We argue that this coarticulation is a source of CV metathesis along lines that are phonologized in other languages.
• Goals
• To figure out the environments in which this process occurs

Lecture 5 Sound Change

An articulatory theory of sound change. Hypothesis: The most common initial motivation for sound change is the automation of production. Tokens reduced online are perceived as reduced and represented in the exemplar cluster as reduced. Therefore we expect sound changes to reflect a decrease in gestural magnitude and an increase in gestural overlap.

What are some ways to test the articulatory model? The theory makes predictions about what is a possible sound change. These predictions could be tested on a cross-linguistic database. Sound changes that take place in the languages of the world are very similar (Blevins 2004, Bateman 2000, Hajek 1997, Greenberg et al. 1978). We should consider both common and rare changes and try to explain both. Common and rare changes might have different characteristics. Among the properties we could look for are types of phonetic motivation, types of lexical diffusion, gradualness, conditioning environment and resulting segments.

Common vs. rare sound change? We need a database that allows us to test hypotheses concerning what types of changes are common and what types are not.

A database of sound changes? Most sound changes have occurred in undocumented periods, so we have no record of them. Even in cases with written records, the phonetic interpretation may be unclear. Only a small number of languages have historic records, so any sample of known sound changes would be biased towards those languages. Sound changes are known only for some languages of the world: languages with written histories. Sound changes can be reconstructed by comparing related languages.

L Vocalisation As a Natural Phenomenon

L Vocalisation as a Natural Phenomenon. Wyn Johnson and David Britain, Essex University. 1. Introduction. The sound /l/ is generally characterised in the literature as a coronal lateral approximant. This standard description holds that the sound involves contact between the tip of the tongue and the alveolar ridge, but instead of the air being blocked at the sides of the tongue, it is also allowed to pass down the sides. In many (but not all) dialects of English /l/ has two allophones – clear /l/ ([l]), roughly as described, and dark, or velarised, /l/ ([ɫ]) involving a secondary articulation – the retraction of the back of the tongue towards the velum. In dialects which exhibit this allophony, the clear /l/ occurs in syllable onsets and the dark /l/ in syllable rhymes (leaf [liːf] vs. feel [fiːɫ] and table [teːbɫ]). The focus of this paper is the phenomenon of l-vocalisation, that is to say the vocalisation of dark /l/ in syllable rhymes: (1) feel [fiːw], table [teːbu], but leaf [liːf]. This process is widespread in the varieties of English spoken in the South-Eastern part of Britain (Bower 1973; Hardcastle & Barry 1989; Hudson and Holloway 1977; Meuter 2002, Przedlacka 2001; Spero 1996; Tollfree 1999, Trudgill 1986; Wells 1982) (indeed, it appears to be categorical in some varieties there) and extends to many other dialects including American English (Ash 1982; Hubbell 1950; Pederson 2001); Australian English (Borowsky 2001, Borowsky and Horvath 1997, Horvath and Horvath 1997, 2001, 2002), New Zealand English (Bauer 1986, 1994; Horvath and Horvath 2001, 2002) and Falkland Island English (Sudbury 2001).

The American Intrusive L

THE AMERICAN INTRUSIVE L. BRYAN GICK, University of British Columbia. The well-known sandhi phenomenon known as intrusive r has been one of the longest-standing problems in English phonology. Recent work has brought to light a uniquely American contribution to this discussion: the intrusive l (as in draw[l]ing for drawing and bra[l] is for bra is in southern Pennsylvania, compared to draw[r]ing and bra[r] is, respectively, in British Received Pronunciation [RP]). In both instances of intrusion, a historically unattested liquid consonant (r or l) intervenes in the hiatus between a morpheme-final nonhigh vowel and a following vowel, either across or within words. Not surprisingly, this process interacts crucially with the well-known cases of /r/-vocalization (e.g., Kurath and McDavid 1961; Labov 1966; Labov, Yaeger, and Steiner 1972; Fowler 1986) and /l/-vocalization (e.g., Ash 1982a, 1982b), which have been identified as important markers of sociolinguistic stratification in New York City, Philadelphia, and elsewhere. However, previous discussion of the intrusive l (Gick 1999) has focused primarily on its phonological implications, with almost no attempt to describe its geographic, dialectal, and sociolinguistic context. This study marks such an attempt. In particular, it argues that the intrusive l is an instance of phonological change in progress. Descriptively, the intrusive l parallels the intrusive r in many respects. Intrusive r may be viewed simplistically as the extension by analogy of a historically attested final /r/ to words historically ending in a vowel (generally this applies only to the set of non-glide-final vowels: /ə, a, ɔ/).
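The intrusion pattern described above (a liquid appearing in the hiatus between a morpheme-final nonhigh vowel and a following vowel) can be sketched as a small joining rule. This is a toy illustration under stated assumptions, not the author's analysis. Morphemes are assumed to be lists of rough sound symbols, `@` and `O` are ASCII stand-ins for schwa and open o, and the names `NONHIGH_FINAL`, `VOWELS`, and `join_with_intrusion` are hypothetical.

```python
# ASCII stand-ins (assumed for this sketch): "@" = schwa, "O" = open o.
NONHIGH_FINAL = {"@", "a", "O"}          # the non-glide-final vowel set
VOWELS = NONHIGH_FINAL | {"i", "I", "u", "U", "e", "E", "o"}

def join_with_intrusion(left, right, liquid):
    """Concatenate two morphemes, inserting `liquid` ("r" or "l") when a
    morpheme-final nonhigh vowel meets a following vowel."""
    if left and right and left[-1] in NONHIGH_FINAL and right[0] in VOWELS:
        return left + [liquid] + right
    return left + right

if __name__ == "__main__":
    draw, ing = ["d", "r", "O"], ["I", "N"]
    print(join_with_intrusion(draw, ing, "l"))  # the draw[l]ing pattern
    print(join_with_intrusion(draw, ing, "r"))  # the draw[r]ing pattern
```

The choice of liquid is passed in as a parameter because, on the description above, the two dialect types differ only in which consonant fills the hiatus.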

Morphophonology of Magahi

International Journal of Science and Research (IJSR), ISSN: 2319-7064, SJIF (2019): 7.583. Morphophonology of Magahi. Saloni Priya, Jawaharlal Nehru University, SLL & CS, New Delhi, India. Salonipriya17[at]gmail.com. Abstract: Every language has different types of word formation processes, and each and every segment of morphology has a sound. The following paper is concerned with the sound changes or phonemic changes that occur during the word formation process in Magahi. Magahi is an Indo-Aryan language spoken in eastern parts of Bihar and also in some parts of Jharkhand and West Bengal. The term morphophonology refers to the interaction of word formation with the sound systems of a language. The paper finds out the phonetic rules interacting with the morphology of lexicons of Magahi. The observations show that the most frequent morphophonological processes are sandhi, assimilation, metathesis and epenthesis, whereas the processes of dissimilation, lenition and fortition are very uncommon. Keywords: Morphology, Phonology, Sound Changes, Word formation process, Magahi, Words, Vowels, Consonants.

1. Introduction. Morphophonology refers to the interaction between morphological and phonological or phonetic processes. The aim of this paper is to give a detailed account of the sound changes that take place in morphemes when they combine to form new words in the language.

3.1 The Sources of Magahi Glossary. Magahi has three kinds of vocabulary sources: i) In the first category, it has those lexemes which have been processed or influenced by Sanskrit, Prakrit, Apbhransh, etc., like धर्म > धम्म > धरम, सर्प > सप्प > साँप. ii) In the second category, it has those words which are

Toward a Unified Theory of Chain Shifting

OUP UNCORRECTED PROOF – FIRST-PROOF, 04/29/12, NEWGEN. CHAPTER 58. TOWARD A UNIFIED THEORY OF CHAIN SHIFTING. AARON J. DINKIN. 1. Introduction. Chain shifts play a major role in understanding the phonological history of English—from the prehistoric chain shift of Grimm’s Law that separates the Germanic languages from the rest of Indo-European, to the Great Vowel Shift that is often taken to define the boundary between Middle English and Early Modern English, to the ongoing chain shifts in Present-Day English that are used to establish the geographical boundaries between dialect regions. A chain shift may be defined as a set of phonetic changes affecting a group of phonemes so that as one phoneme moves in phonetic space, another phoneme moves toward the phonetic position the first is abandoning; a third may move toward the original position of the second, and (perhaps) so on. Martinet (1952) introduced the argument that chain shifts are caused by a need for phonemes to maintain margins of security between each other—so if a phoneme has more phonetic space on one side of it than on others, random phonetic variation will cause it to move toward the free space but not back toward the margins of security of phonemes on the other side. Labov (2010: chapter 5) gives a lucid exposition of the cognitive and phonetic arguments underlying this account of chain shifting. Despite their value as a descriptive device for the history of English, however, the ontological status of chain shifts themselves is a matter of some doubt. Is a

Some /L/S Are Darker Than Others: Accounting for Variation in English /L/ with Ultrasound Tongue Imaging

University of Pennsylvania Working Papers in Linguistics, Volume 20, Issue 2, Selected Papers from NWAV 42, Article 21, 10-2014. Some /l/s are darker than others: Accounting for variation in English /l/ with ultrasound tongue imaging. Danielle Turton. Recommended Citation: Turton, Danielle (2014) "Some /l/s are darker than others: Accounting for variation in English /l/ with ultrasound tongue imaging," University of Pennsylvania Working Papers in Linguistics: Vol. 20: Iss. 2, Article 21. Available at: https://repository.upenn.edu/pwpl/vol20/iss2/21. Abstract: The phenomenon of /l/-darkening has been a subject of linguistic interest due to the remarkable amount of contextual variation it displays. Although it is generally stated that the light variant occurs in onsets (e.g. leap) and the dark variant in codas (e.g. peel), many studies report variation in different morphosyntactic environments. Beyond this variation in morphosyntactic conditioning, different dialects of English have been reported as showing highly variable distributions. These descriptions include a claimed lack of distinction in the North of England, a three-way distinction between light, dark and vocalised /l/ in the South-East, and a gradient continuum of darkness in American English. This paper presents ultrasound tongue imaging data collected to test dialectal and contextual descriptions of /l/ in English, providing hitherto absent instrumental evidence for different distributions.

Metathesis Is Real, and It Is a Regular Relation A

METATHESIS IS REAL, AND IT IS A REGULAR RELATION. A Dissertation submitted to the Faculty of the Graduate School of Arts and Sciences of Georgetown University in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Linguistics. By Tracy A. Canfield, M.S. Washington, DC, November 17, 2015. Copyright 2015 by Tracy A. Canfield. All Rights Reserved. Thesis Advisor: Elizabeth C. Zsiga, Ph.D. ABSTRACT: Regular relations are mathematical models that are widely used in computational linguistics to generate, recognize, and learn various features of natural languages. While certain natural language phenomena – such as syntactic scrambling, which requires a re-ordering of input elements – cannot be modeled as regular relations, it has been argued that all of the phonological constraints that have been described in the context of Optimality Theory can be, and, thus, that the phonological grammars of all human languages are regular relations; as Ellison (1994) states, "All constraints are regular." Re-ordering of input segments, or metathesis, does occur at a phonological level. Historically, this phenomenon has been dismissed as simple speaker error (Montreuil, 1981; Hume, 2001), but more recent research has shown that metathesis occurs as a synchronic, predictable phonological process in numerous human languages (Hume, 1998; Hume, 2001). This calls the generalization that all phonological processes are regular relations into doubt, and raises other