Digital Technologies And


Recommended publications

Ujumbo: a Generic Development Toolkit for Messaging Based Workflows

Ujumbo: A Generic Development Toolkit for Messaging Based Workflows

Dept. of CIS - Senior Design 2012-2013

Archit Budhraja, Bob Han, Sung Won Hwang, Jonathan J. Leung, Sean Welleck, Boon Thau Loo (Univ. of Pennsylvania, Philadelphia, PA)

ABSTRACT

Many organizations, especially in developing countries, often lack technical expertise, resource, and financial capital necessary to build applications with complex logic flows. Looking at the different kinds of products on the market, there seems to be a lot of commonality between them, yet each of them is developed independently of each other, requiring programming ability and a substantial investment in time and financing for each application. Our project aims to reduce these additional costs in building a new application with common communication features by creating a generic platform that users with non-technical abilities could build off of.

We began development by consulting local and international nonprofits and small scale organizations that may require custom platforms in their operations. We gathered data on their proposed workflows, needed functionality, as well as surveyed their available financial resources. After talking to several resource-constrained and non-profit clients, it was observed there was far too much manual communication. Most organizations we interviewed are physically entering and processing data on day to day operations, often in simple spreadsheet software. They also invest many hours manually calling and text messaging their members for ...

Introduction to Duplicate

INTRODUCTION TO DUPLICATE BRIDGE

This book is not about how to bid, declare or defend a hand of bridge. It assumes you know how to do that or are learning how to do those things elsewhere. It is your guide to playing Duplicate Bridge, which is how organized, competitive bridge is played all over the world. It explains all the Laws of Duplicate and the process of entering into Club games or Tournaments, the Convention Card, the protocols and rules of player conduct; the paraphernalia and terminology of duplicate. In short, it’s about the context in which duplicate bridge is played. To become an accomplished duplicate player, you will need to know everything in this book. But you can start playing duplicate immediately after you read Chapter I and skim through the other Chapters.

© ACBL Unit 533, Palm Springs, Ca, 2018

This book belongs to: Phone: Email: I joined the ACBL on ____/____/____ by going to www.ACBL.com and signing up. My ACBL number is __________________

Not a word of this book is about how to bid, play or defend a bridge hand. It assumes you have some bridge skills and an interest in enlarging your bridge experience by joining the world of organized bridge competition. It’s called Duplicate Bridge. It’s the difference between a casual Saturday morning round of golf or set of tennis and playing in your Club or State championships. As in golf or tennis, your skills will be tested in competition with others more or less skilled than you; this book is about the settings in which duplicate happens.

Gang Definitions, How Do They Work?: What the Juggalos Teach Us About the Inadequacy of Current Anti-Gang Law Zachariah D

Marquette Law Review, Volume 97, Issue 4 (Summer 2014), Article 6

Gang Definitions, How Do They Work?: What the Juggalos Teach Us About the Inadequacy of Current Anti-Gang Law

Zachariah D. Fudge

Follow this and additional works at: http://scholarship.law.marquette.edu/mulr. Part of the Criminal Law Commons.

Repository Citation: Zachariah D. Fudge, Gang Definitions, How Do They Work?: What the Juggalos Teach Us About the Inadequacy of Current Anti-Gang Law, 97 Marq. L. Rev. 979 (2014). Available at: http://scholarship.law.marquette.edu/mulr/vol97/iss4/6

This Article is brought to you for free and open access by the Journals at Marquette Law Scholarly Commons. It has been accepted for inclusion in Marquette Law Review by an authorized administrator of Marquette Law Scholarly Commons.

Precisely what constitutes a gang has been a hotly contested academic issue for a century. Recently, this problem has ceased to be purely academic and has developed urgent, real-world consequences. Almost every state and the federal government has enacted anti-gang laws in the past several decades. These anti-gang statutes must define ‘gang’ in order to direct police suppression efforts and to criminally punish gang members or associates. These statutory gang definitions are all too often vague and overbroad, as the example of the Juggalos demonstrates.

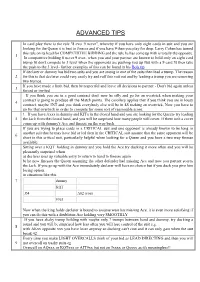

Advanced Tips

ADVANCED TIPS

In card play there is the rule "8 ever 9 never": if you hold only eight cards in a suit and you are looking for the Queen, it is best to finesse, and if you hold nine, you play for the drop. Larry Cohen has turned this rule on its head for COMPETITIVE BIDDING, and the rule he has come up with is totally the opposite.

1. In competitive bidding, "8 never 9 ever": when you and your partner are known to hold only an eight-card trump fit, don't compete to the 3 level when the opponents are pushing you up. But with a nine-card fit, take the push to the 3 level. Further examples of this can be found in his Bols tip.
2. If declarer or dummy has bid two suits and you are strong in one of the suits, then lead a trump. The reason for this is that declarer could very easily try to ruff this suit out, and by leading a trump you are removing two trumps.
3. If you have made a limit bid, then be respectful and leave all decisions to partner. Don't bid again unless forced or invited.
4. If you think you are in a good contract, don't be silly and go for an overtrick when making your contract is going to produce all the match points. The corollary is that if you think you are in a lousy contract, maybe 3NT when you think everybody else will be in 4S making an overtrick, you now have to go for that overtrick in order to compete for some sort of reasonable score.

RapidSMS in Rwanda

Evaluating the Impact of RapidSMS: Final Report

A Report Commissioned by UNICEF Rwanda

December 2, 2016

Hinda Ruton, MSc (1, 2); Angele Musabyimana, MD, MSc (1); Karen Grépin, PhD (3); Joseph Ngenzi (1); Emmanuel Nzabonimana (1); Michael R. Law, PhD (1, 2, 4)

1. School of Public Health, College of Medicine and Health Sciences, University of Rwanda, Kigali, Rwanda
2. Centre for Health Services and Policy Research, The University of British Columbia, Vancouver, British Columbia, Canada
3. Sir Wilfred Laurier University, Waterloo, Ontario, Canada
4. Department of Global Health and Social Medicine, Harvard Medical School, Boston, MA, USA

Table of Contents

- Table of Contents
- Index of Tables and Figures
- Tables
- Figures
- List of Acronyms
- Executive Summary

Web Application Design (Návrhy internetových aplikací)

Bankovní institut vysoká škola Praha, Department of Information Technology and Electronic Commerce

Web Application Design (Návrhy internetových aplikací)

Bachelor's thesis

Author: Jiří Nachtigall, Information Technology, MPIS

Supervisor: Ing. Jiří Rotschedl

Prague, August 2010

Declaration: I declare that I wrote this bachelor's thesis independently, using the cited literature. Prague, 24 August 2010, Jiří Nachtigall

Acknowledgements: I would like to thank my supervisor, Ing. Jiří Rotschedl, for his guidance and valuable advice during the preparation of this thesis. I would also like to thank Ing. Josef Holý of Sun Microsystems for his expert consultation.

Annotation: This thesis focuses on web application design. It describes the whole design process in detail, starting with analysis using tools created for this purpose, such as use cases and user stories. The next part of the process is the technical design, which consists of selecting the server solution, programming techniques, and database. Finally, it covers user interface design using wireframes, together with the graphic design of the web application itself.

Contents: Introduction

International Teachers On-Line

International Teachers On-line

International teachers are available to teach all levels of play. We teach Standard Italia (naturale 4 e 5a nobile), SAYC, the Two Over One system, Acol and Precision. You can state your preference for which teacher you would like to work with.

Caitlin, founder of Bridge Forum, is an ACBL accredited teacher and author. She and Ned Downey recently co-authored the popular Standard Bidding with SAYC. As a longtime volunteer of Fifth Chair's popular SAYC team game, Caitlin received their Gold Star award in 2003. She has also been honored by OKbridge as "Angelfish" for her bridge ethics and etiquette. Caitlin has written articles for the ACBL's Bulletin and The Bridge Teacher as well as the American Bridge Teachers' Association ABTA Quarterly. Caitlin will be offering free classes on OKbridge with BRIDGE FORUM teacher Bill (athene) Frisby based on Standard Bidding with SAYC. For details of times and days, and to order the book, please check this website or email Caitlin.

Ned Downey (ned-maui) is a tournament director, ACBL star teacher, and Silver Life Master with several regional titles to his credit. He is owner of the Maui Bridge Club and author of the novice text Just Plain Bridge, as well as co-author of Standard Bidding with SAYC with Caitlin. Ned teaches regularly aboard cruise ships as well as in the Maui classroom and online. In addition to providing online individual and partnership lessons, he can be found on Swan Games Bridge (www.swangames.com), where he provides free supervised play groups on behalf of BRIDGE FORUM.

Reviewed Books

REVIEWED BOOKS - Inmate Property 6/27/2019

Disclaimer: Publications may be reviewed in accordance with DOC Administrative Code 309.04 Inmate Mail and DOC 309.05 Publications. The list may not include all books due to the volume of publications received. To quickly find a title, press CTRL and the "F" key together, type a key phrase from the title, and click FIND NEXT.

Columns: TITLE, AUTHOR, APPROVE, DENY, REVIEWED, EXPLANATION

DOC 309.04 4 (c) 8 a Is pornography. Depicts teenage sexuality, nudity, 12 Beast Vol.2 OKAYADO X 12/11/2018 exposed breasts. DOC 309.04 4 (c) 8 a Is pornography. Depicts teenage sexuality, nudity, 12 Beast Vol.3 OKAYADO X 12/11/2018 exposed breasts. Workbook of Magic Donald Tyson X 1/11/2018 SR per Mike Saunders 100 Deadly Skills Survivor Edition Clint Emerson X 5/29/2018 DOC 309.04 4 (c) 8 b, c. b. Poses a threat to the security 100 No-Equipment Workouts Neila Rey X 4/6/2017 WCI DOC 309.04 4 (c) 8 b. b Teaches fighting techniques along with general fitness DOC 309.04 4 (c) 8 b, c. b. Is inconsistent with or poses a threat to the safety, 100 Things You’re Not Supposed to Know Russ Kick X 11/10/2017 WCI treatment or rehabilitative goals of an inmate. 100 Ways to Win a Ten Spot Paul Zenon X 10/21/2016 WRC DOC 309.04 4 (c) 8 b, c. b. Poses a threat to the security 100 Years of Lynchings Ralph Ginzburg X reviewed by agency trainers, deemed historical Brad Graham and 101 Spy Gadgets for the Evil Genius Kathy McGowan X 12/23/10 WSPF 309.05(2)(B)2 309.04(4)c.8.d.

C:\My Documents\Adobe\Boston Fall99

Presents They Had Their Beans Baked In Beantown

Appeals at the 1999 Fall NABC

Edited by Rich Colker, ACBL Appeals Administrator

Assistant Editor: Linda Trent, ACBL Appeals Manager

CONTENTS

- Foreword
- The Expert Panel
- Cases from San Antonio
- Tempo (Cases 1-24)
- Unauthorized Information (Cases 25-35)
- Misinformation (Cases 35-49)
- Claims (Cases 50-52)
- Other (Case 53-56)
- Closing Remarks From the Expert Panelists
- Closing Remarks From the Editor
- Special Section: The WBF Code of Practice (for Appeals Committees)
- The Panel’s Director and Committee Ratings
- NABC Appeals Committee

Abbreviations used in this casebook:

- AI: Authorized Information
- AWMPP: Appeal Without Merit Penalty Point
- LA: Logical Alternative
- MI: Misinformation
- PP: Procedural Penalty
- UI: Unauthorized Information

FOREWORD

We continue with our presentation of appeals from NABC tournaments. As always, our goal is to provide information and to foster change for the better in a manner that is entertaining, instructive and stimulating. The ACBL Board of Directors is testing a new appeals process at NABCs in 1999 and 2000 in which a Committee (called a Panel) comprised of pre-selected top Directors will hear appeals at NABCs from non-NABC+ events (including side games, regional events and restricted NABC events). Appeals from NABC+ events will continue to be heard by the National Appeals Committees (NAC). We will review both types of cases as we always have traditional Committee cases.

Panelists were sent all cases and invited to comment on and rate each Director ruling and Panel/Committee decision. Not every panelist will comment on every case.

Mirrors of Modernization: the American Reflection in Turkey

University of Pennsylvania ScholarlyCommons, Publicly Accessible Penn Dissertations, 2014

Mirrors of Modernization: The American Reflection in Turkey

Begum Adalet, University of Pennsylvania

Follow this and additional works at: https://repository.upenn.edu/edissertations. Part of the History Commons, and the Political Science Commons.

Recommended Citation: Adalet, Begum, "Mirrors of Modernization: The American Reflection in Turkey" (2014). Publicly Accessible Penn Dissertations. 1186. https://repository.upenn.edu/edissertations/1186

This paper is posted at ScholarlyCommons.

Abstract

This project documents otherwise neglected dimensions entailed in the assemblage and implementations of political theories, namely their fabrication through encounters with their material, local, and affective constituents. Rather than emanating from the West and migrating to their venues of application, social scientific theories are fashioned in particular sites where political relations can be staged and worked upon. Such was the case with modernization theory, which prevailed in official and academic circles in the United States during the early phases of the Cold War. The theory bore its imprint on a series of developmental and infrastructural projects in Turkey, the beneficiary of Marshall Plan funds and academic exchange programs and one of the theory's most important models. The manuscript scrutinizes the corresponding sites of elaboration for the key indices of modernization: the capacity for empathy, mobility, and hospitality. In the case of Turkey the sites included survey research, the implementation of a highway network, and the expansion of the tourism industry through landmarks such as the Istanbul Hilton Hotel.

Observer5-2015(Health&Beauty)

The Jewish Observer, www.jewishobservernashville.org, Vol. 80 No. 5 • May 2015, 12 Iyyar-13 Sivan 5775

Nashville crowd remembers Israel’s fallen and celebrates its independence

By CHARLES BERNSEN

Watching as about 230 people gathered on April 23 for a somber remembrance of Israel’s fallen soldiers and terror victims, followed immediately by a joyful celebration of the 67th anniversary of the Jewish state’s birth, Rabbi Saul Strosberg couldn’t help but marvel. After all, it has been only eight years since the Nashville Jewish community started observing Yom Hazikaron, the Israeli equivalent of Memorial Day. Organized by several Israelis living in Nashville, including the late Miriam Halachmi, that first, brief ceremony was held in his office at Congregation Sherith Israel. About 20 people attended.

Now here he was in a crowd that filled the Gordon Jewish Community Center auditorium to mark Yom Hazikaron and then Yom Ha’atzmaut, the Israeli independence day.

... of three fallen Israelis: a soldier killed in combat, a military pilot who died in a training accident and a civilian murdered in a terror attack. Their stories were ...

... Catering and music by three Israeli Defense Force veterans who are members of the musical troupe Halehaka (The Band). For the third year, the highlight of ...

... Martha and Alan Segal, who made their first ever visits to Israel this spring on a congregational mission.
• Rabbi Mark Schiftan of The Temple ...

Photo caption: For the third year, members of the community who have helped build relations between Nashville and Israel were given the honor of lighting torches at the annual celebration of Israel’s independence. Photos by Rick Malkin

FLORIDA LRC DECISIONS

FLORIDA LRC DECISIONS. January 01, 2012 to Date 2019/06/19

(Titles beginning with "A", "An", or "The" will be listed according to the second/next word in title.)

| TITLE / EDITION OR ISSUE / AUTHOR OR EDITOR | ACTION (Rejected / Approved) | RULE AUTH. (Rejections) | MEETING DATE (YYYY/MM/DD) |
| --- | --- | --- | --- |
| 10 DAI THOU TUONG TRUNG QUAC. BY DONG VAN. | REJECTED | 3D | 2017/07/06 |
| 10 DAI VAN HAO TRUNG QUOC. PUBLISHER NHA XUAT BAN VAN HOC. | REJECTED | 3D | 2017/07/06 |
| 10 POWER REPORTS. SUPPLEMENT TO MEN'S HEALTH | REJECTED | 3IJ | 2013/03/28 |
| 10 WORST PSYCHOPATHS: THE MOST DEPRAVED KILLERS IN HISTORY. BY VICTOR MCQUEEN. | REJECTED | 3M | 2017/06/01 |
| 100 + YEARS OF CASE LAW PROVIDING RIGHTS TO TRAVEL ON ROADS WITHOUT A LICENSE. | APPROVED | | 2018/08/09 |
| 100 AMAZING FACTS ABOUT THE NEGRO. BY J. A. ROGERS. | APPROVED | | 2015/10/14 |
| 100 BEST SOLITAIRE GAMES. BY SLOANE LEE, ETAL | REJECTED | 3M | 2013/07/17 |
| 100 CARD GAMES FOR ALL THE FAMILY. BY JEREMY HARWOOD. | REJECTED | 3M | 2016/06/22 |
| 100 COOL MUSHROOMS. BY MICHAEL KUO & ANDY METHVEN. | REJECTED | 3C | 2019/02/06 |
| 100 DEADLY SKILLS SURVIVAL EDITION. BY CLINT EVERSON, NAVEL SEAL, RET. | REJECTED | 3M | 2018/09/12 |
| 100 HOT AND SEXY STORIES. BY ANTONIA ALLUPATO. © 2012. | APPROVED | | 2014/12/17 |
| 100 HOT SEX POSITIONS. BY TRACEY COX. | REJECTED | 3I 3J | 2014/12/17 |
| 100 MOST INFAMOUS CRIMINALS. BY JO DURDEN SMITH. | APPROVED | | 2019/01/09 |
| 100 NO- EQUIPMENT WORKOUTS. BY NEILA REY. | REJECTED | 3M | 2018/03/21 |
| 100 WAYS TO WIN A TEN-SPOT. BY PAUL ZENON | REJECTED | 3E, 3M | 2015/09/09 |
| 1000 BIKER TATTOOS. | ... | ... | ... |