The Impetus to Creativity in Technology

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Chapter 3 – Block Ciphers and the Data Encryption Standard

Chapter 3 –Block Ciphers and the Data Cryptography and Network Encryption Standard Security All the afternoon Mungo had been working on Stern's Chapter 3 code, principally with the aid of the latest messages which he had copied down at the Nevin Square drop. Stern was very confident. He must be well aware London Central knew about that drop. It was obvious Fifth Edition that they didn't care how often Mungo read their messages, so confident were they in the by William Stallings impenetrability of the code. —Talking to Strange Men, Ruth Rendell Lecture slides by Lawrie Brown Modern Block Ciphers Block vs Stream Ciphers now look at modern block ciphers • block ciphers process messages in blocks, each one of the most widely used types of of which is then en/decrypted cryptographic algorithms • like a substitution on very big characters provide secrecy /hii/authentication services – 64‐bits or more focus on DES (Data Encryption Standard) • stream ciphers process messages a bit or byte at a time when en/decrypting to illustrate block cipher design principles • many current ciphers are block ciphers – better analysed – broader range of applications Block vs Stream Ciphers Block Cipher Principles • most symmetric block ciphers are based on a Feistel Cipher Structure • needed since must be able to decrypt ciphertext to recover messages efficiently • bloc k cihiphers lklook like an extremely large substitution • would need table of 264 entries for a 64‐bit block • instead create from smaller building blocks • using idea of a product cipher 1 Claude -

Chapter 3 – Block Ciphers and the Data Encryption Standard

Symmetric Cryptography Chapter 6 Block vs Stream Ciphers • Block ciphers process messages into blocks, each of which is then en/decrypted – Like a substitution on very big characters • 64-bits or more • Stream ciphers process messages a bit or byte at a time when en/decrypting – Many current ciphers are block ciphers • Better analyzed. • Broader range of applications. Block vs Stream Ciphers Block Cipher Principles • Block ciphers look like an extremely large substitution • Would need table of 264 entries for a 64-bit block • Arbitrary reversible substitution cipher for a large block size is not practical – 64-bit general substitution block cipher, key size 264! • Most symmetric block ciphers are based on a Feistel Cipher Structure • Needed since must be able to decrypt ciphertext to recover messages efficiently Ideal Block Cipher Substitution-Permutation Ciphers • in 1949 Shannon introduced idea of substitution- permutation (S-P) networks – modern substitution-transposition product cipher • These form the basis of modern block ciphers • S-P networks are based on the two primitive cryptographic operations we have seen before: – substitution (S-box) – permutation (P-box) (transposition) • Provide confusion and diffusion of message Diffusion and Confusion • Introduced by Claude Shannon to thwart cryptanalysis based on statistical analysis – Assume the attacker has some knowledge of the statistical characteristics of the plaintext • Cipher needs to completely obscure statistical properties of original message • A one-time pad does this Diffusion -

Cryptography: DH And

1 ì Key Exchange Secure Software Systems Fall 2018 2 Challenge – Exchanging Keys & & − 1 6(6 − 1) !"#ℎ%&'() = = = 15 & 2 2 The more parties in communication, ! $ the more keys that need to be securely exchanged Do we have to use out-of-band " # methods? (e.g., phone?) % Secure Software Systems Fall 2018 3 Key Exchange ì Insecure communica-ons ì Alice and Bob agree on a channel shared secret (“key”) that ì Eve can see everything! Eve doesn’t know ì Despite Eve seeing everything! ! " (alice) (bob) # (eve) Secure Software Systems Fall 2018 Whitfield Diffie and Martin Hellman, 4 “New directions in cryptography,” in IEEE Transactions on Information Theory, vol. 22, no. 6, Nov 1976. Proposed public key cryptography. Diffie-Hellman key exchange. Secure Software Systems Fall 2018 5 Diffie-Hellman Color Analogy (1) It’s easy to mix two colors: + = (2) Mixing two or more colors in a different order results in + + = the same color: + + = (3) Mixing colors is one-way (Impossible to determine which colors went in to produce final result) https://www.crypto101.io/ Secure Software Systems Fall 2018 6 Diffie-Hellman Color Analogy ! # " (alice) (eve) (bob) + + $ $ = = Mix Mix (1) Start with public color ▇ – share across network (2) Alice picks secret color ▇ and mixes it to get ▇ (3) Bob picks secret color ▇ and mixes it to get ▇ Secure Software Systems Fall 2018 7 Diffie-Hellman Color Analogy ! # " (alice) (eve) (bob) $ $ Mix Mix = = Eve can’t calculate ▇ !! (secret keys were never shared) (4) Alice and Bob exchange their mixed colors (▇,▇) (5) Eve will -

Fall 2016 Dear Electrical Engineering Alumni and Friends, This Past

ABBAS EL GAMAL Fortinet Founders Chair of the Department of Electrical Engineering Hitachi America Professor Fall 2016 Dear Electrical Engineering Alumni and Friends, This past academic year was another very successful one for the department. We made great progress toward implementing the vision of our strategic plan (EE in the 21st Century, or EE21 for short), which I outlined in my letter to you last year. I am also proud to share some of the exciting research in the department and the significant recognitions our faculty have received. I will first briefly describe the progress we have made toward implementing our EE21 plan. Faculty hiring. The top priority in our strategic plan is hiring faculty with complementary vision and expertise and who enhance our faculty diversity. This past academic year, we conducted a junior faculty broad area search and participated in a School of Engineering wide search in the area of robotics. I am happy to report that Mary Wootters joined our faculty in September as an assistant professor jointly with Computer Science. Mary’s research focuses on applying probability to coding theory, signal processing, and randomized algorithms. She also explores quantum information theory and complexity theory. Mary was previously an NSF postdoctoral fellow in the CS department at Carnegie Mellon University. The robotics search yielded two top candidates. I will report on the final results of this search in my next year’s letter. Reinventing the undergraduate curriculum. We continue to innovate our undergraduate curriculum, introducing two new, exciting project-oriented courses: EE107: Embedded Networked Systems and EE267: Virtual Reality. -

Martin Hellman, Walker and Company, New York,1988, Pantheon Books, New York,1986

Risk Analysis of Nuclear Deterrence by Dr. Martin E. Hellman, New York Epsilon ’66 he first fundamental canon of The Code of A terrorist attack involving a nuclear weapon would Ethics for Engineers adopted by Tau Beta Pi be a catastrophe of immense proportions: “A 10-kiloton states that “Engineers shall hold paramount bomb detonated at Grand Central Station on a typical work the safety, health, and welfare of the public day would likely kill some half a million people, and inflict in the performance of their professional over a trillion dollars in direct economic damage. America Tduties.” When we design systems, we routinely use large and its way of life would be changed forever.” [Bunn 2003, safety factors to account for unforeseen circumstances. pages viii-ix]. The Golden Gate Bridge was designed with a safety factor The likelihood of such an attack is also significant. For- several times the anticipated load. This “over design” saved mer Secretary of Defense William Perry has estimated the bridge, along with the lives of the 300,000 people who the chance of a nuclear terrorist incident within the next thronged onto it in 1987 to celebrate its fiftieth anniver- decade to be roughly 50 percent [Bunn 2007, page 15]. sary. The weight of all those people presented a load that David Albright, a former weapons inspector in Iraq, was several times the design load1, visibly flattening the estimates those odds at less than one percent, but notes, bridge’s arched roadway. Watching the roadway deform, “We would never accept a situation where the chance of a bridge engineers feared that the span might collapse, but major nuclear accident like Chernobyl would be anywhere engineering conservatism saved the day. -

The Impetus to Creativity in Technology

The Impetus to Creativity in Technology Alan G. Konheim Professor Emeritus Department of Computer Science University of California Santa Barbara, California 93106 [email protected] [email protected] Abstract: We describe the technical developments ensuing from two well-known publications in the 20th century containing significant and seminal results, a paper by Claude Shannon in 1948 and a patent by Horst Feistel in 1971. Near the beginning, Shannon’s paper sets the tone with the statement ``the fundamental problem of communication is that of reproducing at one point either exactly or approximately a message selected *sent+ at another point.‛ Shannon’s Coding Theorem established the relationship between the probability of error and rate measuring the transmission efficiency. Shannon proved the existence of codes achieving optimal performance, but it required forty-five years to exhibit an actual code achieving it. These Shannon optimal-efficient codes are responsible for a wide range of communication technology we enjoy today, from GPS, to the NASA rovers Spirit and Opportunity on Mars, and lastly to worldwide communication over the Internet. The US Patent #3798539A filed by the IBM Corporation in1971 described Horst Feistel’s Block Cipher Cryptographic System, a new paradigm for encryption systems. It was largely a departure from the current technology based on shift-register stream encryption for voice and the many of the electro-mechanical cipher machines introduced nearly fifty years before. Horst’s vision directed to its application to secure the privacy of computer files. Invented at a propitious moment in time and implemented by IBM in automated teller machines for the Lloyds Bank Cashpoint System. -

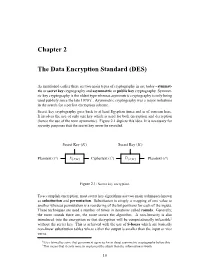

Chapter 2 the Data Encryption Standard (DES)

Chapter 2 The Data Encryption Standard (DES) As mentioned earlier there are two main types of cryptography in use today - symmet- ric or secret key cryptography and asymmetric or public key cryptography. Symmet- ric key cryptography is the oldest type whereas asymmetric cryptography is only being used publicly since the late 1970’s1. Asymmetric cryptography was a major milestone in the search for a perfect encryption scheme. Secret key cryptography goes back to at least Egyptian times and is of concern here. It involves the use of only one key which is used for both encryption and decryption (hence the use of the term symmetric). Figure 2.1 depicts this idea. It is necessary for security purposes that the secret key never be revealed. Secret Key (K) Secret Key (K) ? ? - - - - Plaintext (P ) E{P,K} Ciphertext (C) D{C,K} Plaintext (P ) Figure 2.1: Secret key encryption. To accomplish encryption, most secret key algorithms use two main techniques known as substitution and permutation. Substitution is simply a mapping of one value to another whereas permutation is a reordering of the bit positions for each of the inputs. These techniques are used a number of times in iterations called rounds. Generally, the more rounds there are, the more secure the algorithm. A non-linearity is also introduced into the encryption so that decryption will be computationally infeasible2 without the secret key. This is achieved with the use of S-boxes which are basically non-linear substitution tables where either the output is smaller than the input or vice versa. 1It is claimed by some that government agencies knew about asymmetric cryptography before this. -

Diffie and Hellman Receive 2015 Turing Award Rod Searcey/Stanford University

Diffie and Hellman Receive 2015 Turing Award Rod Searcey/Stanford University. Linda A. Cicero/Stanford News Service. Whitfield Diffie Martin E. Hellman ernment–private sector relations, and attracts billions of Whitfield Diffie, former chief security officer of Sun Mi- dollars in research and development,” said ACM President crosystems, and Martin E. Hellman, professor emeritus Alexander L. Wolf. “In 1976, Diffie and Hellman imagined of electrical engineering at Stanford University, have been a future where people would regularly communicate awarded the 2015 A. M. Turing Award of the Association through electronic networks and be vulnerable to having for Computing Machinery for their critical contributions their communications stolen or altered. Now, after nearly to modern cryptography. forty years, we see that their forecasts were remarkably Citation prescient.” The ability for two parties to use encryption to commu- “Public-key cryptography is fundamental for our indus- nicate privately over an otherwise insecure channel is try,” said Andrei Broder, Google Distinguished Scientist. fundamental for billions of people around the world. On “The ability to protect private data rests on protocols for a daily basis, individuals establish secure online connec- confirming an owner’s identity and for ensuring the integ- tions with banks, e-commerce sites, email servers, and the rity and confidentiality of communications. These widely cloud. Diffie and Hellman’s groundbreaking 1976 paper, used protocols were made possible through the ideas and “New Directions in Cryptography,” introduced the ideas of methods pioneered by Diffie and Hellman.” public-key cryptography and digital signatures, which are Cryptography is a practice that facilitates communi- the foundation for most regularly used security protocols cation between two parties so that the communication on the Internet today. -

The Mathematics of the RSA Public-Key Cryptosystem Burt Kaliski RSA Laboratories

The Mathematics of the RSA Public-Key Cryptosystem Burt Kaliski RSA Laboratories ABOUT THE AUTHOR: Dr Burt Kaliski is a computer scientist whose involvement with the security industry has been through the company that Ronald Rivest, Adi Shamir and Leonard Adleman started in 1982 to commercialize the RSA encryption algorithm that they had invented. At the time, Kaliski had just started his undergraduate degree at MIT. Professor Rivest was the advisor of his bachelor’s, master’s and doctoral theses, all of which were about cryptography. When Kaliski finished his graduate work, Rivest asked him to consider joining the company, then called RSA Data Security. In 1989, he became employed as the company’s first full-time scientist. He is currently chief scientist at RSA Laboratories and vice president of research for RSA Security. Introduction Number theory may be one of the “purest” branches of mathematics, but it has turned out to be one of the most useful when it comes to computer security. For instance, number theory helps to protect sensitive data such as credit card numbers when you shop online. This is the result of some remarkable mathematics research from the 1970s that is now being applied worldwide. Sensitive data exchanged between a user and a Web site needs to be encrypted to prevent it from being disclosed to or modified by unauthorized parties. The encryption must be done in such a way that decryption is only possible with knowledge of a secret decryption key. The decryption key should only be known by authorized parties. In traditional cryptography, such as was available prior to the 1970s, the encryption and decryption operations are performed with the same key. -

Feistel Cipher

Chapter 3 – Block Ciphers and the CryptographyCryptography andand Data Encryption Standard Network Security Network Security All the afternoon Mungo had been working on Stern's code, principally with the aid of the latest ChapterChapter 33 messages which he had copied down at the Nevin Square drop. Stern was very confident. He must be well aware London Central knew Fifth Edition about that drop. It was obvious that they didn't care how often Mungo read their messages, so by William Stallings confident were they in the impenetrability of the Lecture slides by Lawrie Brown code. —Talking to Strange Men, Ruth Rendell (with edits by RHB) Outline Modern Block Ciphers • will consider: • now look at modern block ciphers – Block vs stream ciphers • one of the most widely used types of – Feistel cipher design & structure cryptographic algorithms – DES • provide secrecy/authentication services • details • Initially look at Data Encryption Standard • strength (DES) to illustrate block cipher design – Differential & linear cryptanalysis issues – Block cipher design principles Block vs Stream Ciphers Block vs Stream Ciphers Block vs Stream Ciphers • block ciphers process messages in blocks, each of which is then en/decrypted • like a substitution on very big characters – 64 -bits or more (these days, 128 or more) • stream ciphers process messages a bit or byte at a time when en/de -crypting • many current ciphers are block ciphers – better analysed – broader range of applications Block Cipher Principles Ideal Block Cipher • many symmetric block ciphers -

The Greatest Pioneers in Computer Science

The Greatest Pioneers in Computer Science Ivan Srba, Veronika Gondová 19th October 2017 5th Heidelberg Laureate Forum 2 Laureates of mathematics and computer science meet the next generation September 24–29, 2017, Heidelberg https://www.heidelberg-laureate-forum.org 3 Awards in Computer Science 4 Awards in Computer Science 5 • ACM A.M. Turing Award • “Nobel Prize of Computing” • Awarded to “an individual selected for contributions of a technical nature made to the computing community” • Accompanied by a prize of $1 million Awards in Computer Science 6 • ACM A.M. Turing Award • “Nobel Prize of Computing” • Awarded to “an individual selected for contributions of a technical nature made to the computing community” • Accompanied by a prize of $1 million • ACM Prize in Computing • Awarded to “an early to mid-career fundamental innovative contribution in computing” • Accompanied by a prize of $250,000 Some of Laureates We Met at 5th HLF 7 PeWe Postcard 8 Leslie Lamport 9 ACM A.M. Turing Award (2013) Source: https://www.heidelberg-laureate-forum.org/blog/laureate/leslie-lamport/ Leslie Lamport 10 ACM A.M. Turing Award (2013) “for fundamental contributions to the theory and practice of distributed and concurrent systems, notably the invention of concepts such as causality and logical clocks, safety and liveness, replicated state machines, and sequential consistency.” • Developed Lamport Clocks for distributed systems • The paper “Time, Clocks, and the Ordering of Events in a Distributed System” from 1978 has become one of the most cited works in computer science • Developed LaTeX • Invented the first digital signature algorithm • Currently work in Microsoft Research Source: https://www.heidelberg-laureate-forum.org/blog/laureate/leslie-lamport/ Balmer’s Peak 11 • The theory that computer programmers obtain quasi-magical, superhuman coding ability • when they have a blood alcohol concentration percentage between 0.129% and 0.138%. -

![Arxiv:2106.11534V1 [Cs.DL] 22 Jun 2021 2 Nanjing University of Science and Technology, Nanjing, China 3 University of Southampton, Southampton, U.K](https://docslib.b-cdn.net/cover/7768/arxiv-2106-11534v1-cs-dl-22-jun-2021-2-nanjing-university-of-science-and-technology-nanjing-china-3-university-of-southampton-southampton-u-k-1557768.webp)

Arxiv:2106.11534V1 [Cs.DL] 22 Jun 2021 2 Nanjing University of Science and Technology, Nanjing, China 3 University of Southampton, Southampton, U.K

Noname manuscript No. (will be inserted by the editor) Turing Award elites revisited: patterns of productivity, collaboration, authorship and impact Yinyu Jin1 · Sha Yuan1∗ · Zhou Shao2, 4 · Wendy Hall3 · Jie Tang4 Received: date / Accepted: date Abstract The Turing Award is recognized as the most influential and presti- gious award in the field of computer science(CS). With the rise of the science of science (SciSci), a large amount of bibliographic data has been analyzed in an attempt to understand the hidden mechanism of scientific evolution. These include the analysis of the Nobel Prize, including physics, chemistry, medicine, etc. In this article, we extract and analyze the data of 72 Turing Award lau- reates from the complete bibliographic data, fill the gap in the lack of Turing Award analysis, and discover the development characteristics of computer sci- ence as an independent discipline. First, we show most Turing Award laureates have long-term and high-quality educational backgrounds, and more than 61% of them have a degree in mathematics, which indicates that mathematics has played a significant role in the development of computer science. Secondly, the data shows that not all scholars have high productivity and high h-index; that is, the number of publications and h-index is not the leading indicator for evaluating the Turing Award. Third, the average age of awardees has increased from 40 to around 70 in recent years. This may be because new breakthroughs take longer, and some new technologies need time to prove their influence. Besides, we have also found that in the past ten years, international collabo- ration has experienced explosive growth, showing a new paradigm in the form of collaboration.