Color to Grayscale Image Conversion Using Modulation Domain Quadratic Programming

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

-

Color Management

Color Management
Photoshop 5.0 was justifiably praised as a groundbreaking upgrade when it was released in the summer of 1998, although the changes made to the color management setup were less well received in some quarters. This was because the revised system was perceived to be complex and unnecessary. Bruce Fraser once said of the Photoshop 5.0 color management system ‘it’s push-button simple, as long as you know which of the 60 or so buttons to push!’ Attitudes have changed since then (as has the interface) and it is fair to say that most people working today in the pre-press industry are now using ICC color profile managed workflows. The aim of this chapter is to introduce the basic concepts of color management before looking at the color management interface in Photoshop and the various color management settings.
Adobe Photoshop CS6 for Photographers: www.photoshopforphotographers.com
The need for color management
An advertising agency art buyer was once invited to address a meeting of photographers. The chair, Mike Laye, suggested we could ask him anything we wanted, except ‘Would you like to see my book?’ And if he had already seen your book, we couldn’t ask him why he hadn’t called it back in again. And if he had called it in again we were not allowed to ask why we didn’t get the job. And finally, if we did get the job we were absolutely forbidden to ask why the color in the printed ad looked nothing like the original photograph! That in a nutshell is a problem which has bugged many of us throughout our working lives, and it is one which will be familiar to anyone who has ever experienced the difficulty of matching colors on a computer display with the original or a printed output. -

Color Models

Color Models
Jian Huang, CS456

Main Color Spaces
• CIE XYZ, xyY
• RGB, CMYK
• HSV (Munsell, HSL, IHS)
• Lab, UVW, YUV, YCrCb, Luv

Differences in Color Spaces
• What is the use? For display, editing, computation, compression, …?
• Several key (very often conflicting) features may be sought after:
  – Additive (RGB) or subtractive (CMYK)
  – Separation of luminance and chromaticity
  – Equal distances between colors are equally perceivable

CIE Standard
• CIE: International Commission on Illumination (Commission Internationale de l’Eclairage).
• Human perception based standard (1931), established with color matching experiment
• Standard observer: a composite of a group of 15 to 20 people

CIE Experiment

CIE Experiment Result
• Three pure light sources: R = 700 nm, G = 546 nm, B = 436 nm.

CIE Color Space
• 3 hypothetical light sources, X, Y, and Z, which yield positive matching curves
• Y: roughly corresponds to luminous efficiency characteristic of human eye

CIE xyY Space
• Irregular 3D volume shape is difficult to understand
• Chromaticity diagram (the same color of the varying intensity, Y, should all end up at the same point)

Color Gamut
• The range of color representation of a display device

RGB (monitors)
• The de facto standard

The RGB Cube
• RGB color space is perceptually non-linear
• RGB space is a subset of the colors humans can perceive
• Con: what is ‘bloody red’ in RGB?

CMY(K): printing
• Cyan, Magenta, Yellow (Black) – CMY(K)
• A subtractive color model

| dye color | absorbs | reflects |
| --- | --- | --- |
| cyan | red | blue and green |
| magenta | green | blue and red |
| yellow | blue | red and green |
| black | all | none |

RGB and CMY
• Converting between RGB and CMY

HSV
• This color model is based on polar coordinates, not Cartesian coordinates. -
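The RGB and CMY conversion named in the slides can be sketched in a few lines. This is a minimal illustration only, assuming the idealized complement relation (C = 1 − R, M = 1 − G, Y = 1 − B) on channels normalized to the range 0 to 1. The function names `rgb_to_cmy` and `cmy_to_rgb` are hypothetical and do not come from the slides, and real printing workflows rely on device profiles rather than this simple formula.

```python
def rgb_to_cmy(r, g, b):
    """Idealized additive-to-subtractive conversion; inputs are in [0, 1]."""
    # Each subtractive primary absorbs exactly one additive primary,
    # so the amount of dye is the complement of the reflected light.
    return (1.0 - r, 1.0 - g, 1.0 - b)


def cmy_to_rgb(c, m, y):
    """Inverse of rgb_to_cmy under the same idealized assumption."""
    return (1.0 - c, 1.0 - m, 1.0 - y)


if __name__ == "__main__":
    # Pure red needs no cyan, full magenta and full yellow.
    print(rgb_to_cmy(1.0, 0.0, 0.0))  # (0.0, 1.0, 1.0)
    # Full cyan dye absorbs red and reflects green and blue.
    print(cmy_to_rgb(1.0, 0.0, 0.0))  # (0.0, 1.0, 1.0)
```

The second call agrees with the dye table in the slides: cyan absorbs red and reflects blue and green.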

An Improved SPSIM Index for Image Quality Assessment

Symmetry Article
An Improved SPSIM Index for Image Quality Assessment
Mariusz Frackiewicz *, Grzegorz Szolc and Henryk Palus
Department of Data Science and Engineering, Silesian University of Technology, Akademicka 16, 44-100 Gliwice, Poland; [email protected] (G.S.); [email protected] (H.P.)
* Correspondence: [email protected]; Tel.: +48-32-2371066
Abstract: Objective image quality assessment (IQA) measures are playing an increasingly important role in the evaluation of digital image quality. New IQA indices are expected to be strongly correlated with subjective observer evaluations expressed by Mean Opinion Score (MOS) or Difference Mean Opinion Score (DMOS). One such recently proposed index is the SuperPixel-based SIMilarity (SPSIM) index, which uses superpixel patches instead of a rectangular pixel grid. The authors of this paper have proposed three modifications to the SPSIM index. For this purpose, the color space used by SPSIM was changed and the way SPSIM determines similarity maps was modified using methods derived from an algorithm for computing the Mean Deviation Similarity Index (MDSI). The third modification was a combination of the first two. These three new quality indices were used in the assessment process. The experimental results obtained for many color images from five image databases demonstrated the advantages of the proposed SPSIM modifications.
Keywords: image quality assessment; image databases; superpixels; color image; color space; image quality measures
Citation: Frackiewicz, M.; Szolc, G.; Palus, H. An Improved SPSIM Index for Image Quality Assessment. Symmetry 2021, 13, 518. https://doi.org/10.3390/sym13030518
1. Introduction
Quantitative domination of acquired color images over gray level images results in the development not only of color image processing methods but also of Image Quality Assessment (IQA) methods. -

CDOT Shaded Color and Grayscale Printing Reference File Management

CDOT Shaded Color and Grayscale Printing This document guides you through the set-up and printing process for shaded color and grayscale Sheet Files. This workflow may be used any time the user wants to highlight specific areas for things such as phasing plans, public meetings, ROW exhibits, etc. Please note that the use of raster images (jpg, bmp, tif, etc.) will dramatically increase the processing time during printing. Reference File Management Adjustments must be made to some of the reference file settings in order to achieve the desired print quality. The settings that need to be changed are the Slot Numbers and the Update Sequence. Slot Numbers are unique identifiers assigned to reference files and can be called out individually or in groups within a pen table for special processing during printing. The Update Sequence determines the order in which files are refreshed on the screen and printed on paper. Reference file Slot Number Categories: 0 = Sheet File (black) (this is the active dgn file.) 1-99 = Proposed Primary Discipline (Black) 100-199 = Existing Topo (light gray) 200-299 = Proposed Other Discipline (dark gray) 300-399 = Color Shaded Areas Note: All data placed in the Sheet File will be printed black. Items to be printed in color should be in their own file. Create a new file using the standard CDOT seed files and reference design elements to create color areas. If printing to a black and white printer all colors will be printed grayscale. Files can still be printed using the default printer drivers and pen tables, however shaded areas will lose their transparency and may print black depending on their level. -

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING Color space A color model is an abstract mathematical model describing the way colors can be represented as tuples of numbers, typically as three or four values or color components (e.g. RGB and CMYK are color models). However, a color model with no associated mapping function to an absolute color space is a more or less arbitrary color system with little connection to the requirements of any given application. Adding a certain mapping function between the color model and a certain reference color space results in a definite "footprint" within the reference color space. This "footprint" is known as a gamut, and, in combination with the color model, defines a new color space. For example, Adobe RGB and sRGB are two different absolute color spaces, both based on the RGB model. In the most generic sense of the definition above, color spaces can be defined without the use of a color model. These spaces, such as Pantone, are in effect a given set of names or numbers which are defined by the existence of a corresponding set of physical color swatches. This article focuses on the mathematical model concept. Understanding the concept Most people have heard that a wide range of colors can be created by the primary colors red, blue, and yellow, if working with paints. Those colors then define a color space. We can specify the amount of red color as the X axis, the amount of blue as the Y axis, and the amount of yellow as the Z axis, giving us a three-dimensional space, wherein every possible color has a unique position. -

Robust Pulse-Rate from Chrominance-Based Rppg Gerard De Haan and Vincent Jeanne

IEEE TRANSACTIONS ON BIOMEDICAL ENGINEERING, VOL. ?, NO. ?, MONTH 2013
Robust pulse-rate from chrominance-based rPPG
Gerard de Haan and Vincent Jeanne
Abstract: Remote photoplethysmography (rPPG) enables contactless monitoring of the blood volume pulse using a regular camera. Recent research focused on improved motion robustness, but the proposed blind source separation techniques (BSS) in RGB color space show limited success. We present an analysis of the motion problem, from which far superior chrominance-based methods emerge. For a population of 117 stationary subjects, we show our methods to perform in 92% good agreement (±1.96σ) with contact PPG, with RMSE and standard deviation both a factor of two better than BSS-based methods. In a fitness setting using a simple spectral peak detector, the obtained pulse-rate for modest motion (bike) improves from 79% to 98% correct, and for vigorous motion (stepping) from less than 11% to more than 48% correct. We expect the greatly improved robustness to considerably widen the application scope of the technology.
… PPG signal into two independent signals built as a linear combination of two color channels [8]. One combination approximated the clean pulse-signal, the other the motion artifact, and the energy in the pulse-signal was minimized to optimize the combination. Poh et al. extended this work proposing a linear combination of all three color channels defining three independent signals with Independent Component Analysis (ICA) using non-Gaussianity as the criterion for independence [5]. Lewandowska et al. varied this concept defining three independent linear combinations of the color channels with Principal Component Analysis (PCA) [6]. With both Blind Source Separation (BSS) techniques, the … -

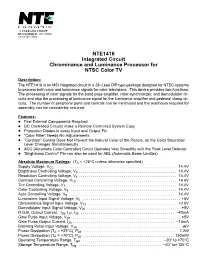

NTE1416 Integrated Circuit Chrominance and Luminance Processor for NTSC Color TV

NTE1416 Integrated Circuit
Chrominance and Luminance Processor for NTSC Color TV

Description:
The NTE1416 is an MSI integrated circuit in a 28–Lead DIP type package designed for NTSC systems to process both color and luminance signals for color televisions. This device provides two functions: the processing of color signals for the band pass amplifier, color synchronizer, and demodulator circuits, and the processing of the luminance signal for the luminance amplifier and pedestal clamp circuits. The number of peripheral parts and controls can be minimized and the manhours required for assembly can be considerably reduced.

Features:
• Few External Components Required
• DC Controlled Circuits make a Remote Controlled System Easy
• Protection Diodes in every Input and Output Pin
• “Color Killer” Needs No Adjustments
• “Contrast” Control Does Not Prevent the Natural Color of the Picture, as the Color Saturation Level Changes Simultaneously
• ACC (Automatic Color Controller) Circuit Operates Very Smoothly with the Peak Level Detector
• “Brightness Control” Pin can also be used for ABL (Automatic Beam Limiter)

Absolute Maximum Ratings: (TA = +25°C unless otherwise specified)

| Parameter | Symbol | Rating |
| --- | --- | --- |
| Supply Voltage | VCC | 14.4V |
| Brightness Controlling Voltage | V3 | 14.4V |
| Resolution Controlling Voltage | V4 | 14.4V |
| Contrast Controlling Voltage | V10 | 14.4V |
| Tint Controlling Voltage | V7 | 14.4V |
| Color Controlling Voltage | V9 | 14.4V |
| Auto Controlling Voltage | V8 | 14.4V |
| Luminance Input Signal Voltage | V5 | +5V |
| Chrominance Signal Input Voltage | V13 | +2.5V |
| Demodulator Input Signal Voltage | V25 | +5V |
| R.G.B. Output Current | I26, I27, I28 | –40mA |
| Gate Pulse Input Voltage | V20 | +5V |

Gate Pulse Output Current, I20 . -

The Art of Digital Black & White by Jeff Schewe There's Just Something

The Art of Digital Black & White By Jeff Schewe There’s just something magical about watching an image develop on a piece of photo paper in the developer tray…to see the paper go from being just a blank white piece of paper to becoming a photograph is what many photographers think of when they think of Black & White photography. That process of watching the image develop is what got me hooked on photography over 30 years ago and Black & White is where my heart really lives even though I’ve done more color work professionally. I used to have the brown stains on my fingers like any good darkroom tech, but commercially, I turned toward color photography. Later, when going digital, I basically gave up being able to ever achieve what used to be commonplace from the darkroom–until just recently. At about the same time Kodak announced it was going to stop making Black & White photo paper, Epson announced their new line of digital ink jet printers and a new ink, Ultrachrome K3 (3 Blacks, hence the K3), that has given me hope of returning to darkroom quality prints but with a digital printer instead of working in a smelly darkroom environment. Combine the new printers with the power of digital image processing in Adobe Photoshop and the capabilities of recent digital cameras and I think you’ll see a strong trend towards photographers going digital to get the best Black & White prints possible. Making the optimal Black & White print digitally is not simply a click of the shutter and push button printing. -

Image Processing

IMAGE PROCESSING
ROBOTICS CLUB SUMMER CAMP’12

WHAT IS IMAGE PROCESSING?
IMAGE PROCESSING = IMAGE + PROCESSING

WHAT IS IMAGE?
IMAGE = Made up of PIXELS
Each pixel is like an array of numbers. The numbers determine the colour of the pixel.

TYPES OF IMAGES
1. BINARY IMAGE
2. GREYSCALE IMAGE
3. COLOURED IMAGE

BINARY IMAGE
Each pixel has either 1 (white) or 0 (black)
Depth = 1 (bit)
Number of Channels = 1
0 0 0 0 0 0 0 0 0
0 0 1 1 1 1 1 0 0
0 0 1 1 1 1 1 0 0
0 0 1 1 1 1 1 0 0
0 0 1 1 1 1 1 0 0
0 0 0 0 0 0 0 0 0

GRAYSCALE IMAGE
Each pixel has a value from 0 to 255. 0 : black and 255 : white. Values in between are shades of gray.
Depth = 8 (bits)
Number of Channels = 1

RGB IMAGE
Each pixel stores 3 values:
R : 0-255
G : 0-255
B : 0-255
Depth = 8 (bits)
Number of Channels = 3

HSV IMAGE
Each pixel stores 3 values:
H (hue) : 0-180
S (saturation) : 0-255
V (value) : 0-255
Depth = 8 (bits)
Number of Channels = 3
Note: Hue in general runs from 0 to 360, but as hue is stored in 8 bits in OpenCV, it is scaled down to 180.

STARTING WITH OPENCV
OpenCV is a library for C language developed for Image Processing.
To embed the OpenCV library in the Dev C compiler, follow the instructions at: http://opencv.willowgarage.com/wiki/DevCpp

HEADER FILES IN C
After embedding the OpenCV library in Dev C, include the following header files:
#include "cv.h"
#include "highgui.h"

IMAGE POINTER
An image is stored as a structure IplImage with the following elements:
int height
int width
int nChannels
int depth
char *imageData
int widthStep
…. -
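The note about hue being squeezed into 8 bits can be made concrete with a short sketch. It does not call OpenCV; it uses Python's standard `colorsys` module and then applies the 8-bit scaling the slides describe. The function name `rgb_to_hsv_8bit` is hypothetical, and the choice to halve the hue in degrees (giving values 0 to 179) is an assumption about how the 0 to 180 range in the slides is reached.

```python
import colorsys


def rgb_to_hsv_8bit(r, g, b):
    """Convert 8-bit RGB to an 8-bit HSV triple: H in 0-179, S and V in 0-255."""
    # colorsys works on floats in [0, 1] and returns hue as a fraction of a turn.
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    hue_degrees = h * 360.0
    # Halve the hue so that 0-360 degrees fits in one byte (assumed scaling).
    h8 = int(round(hue_degrees / 2.0)) % 180
    return (h8, int(round(s * 255.0)), int(round(v * 255.0)))


if __name__ == "__main__":
    print(rgb_to_hsv_8bit(255, 0, 0))  # (0, 255, 255)
    print(rgb_to_hsv_8bit(0, 255, 0))  # (60, 255, 255)
    print(rgb_to_hsv_8bit(0, 0, 255))  # (120, 255, 255)
```

Green sits at 120 degrees on the usual hue circle, so it lands at 60 after halving, which is why hue thresholds written for this 8-bit layout look like half of the textbook values.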

Preparing Images for Delivery

TECHNICAL PAPER
Preparing Images for Delivery

TABLE OF CONTENTS
How to prepare RGB files for CMYK
Soft proofing and gamut warning
Final image sizing
Image sharpening
Converting to CMYK
What about providing RGB files?
The proof
Marking your territory
File formats for delivery
Check list for file delivery
Additional resources

So, you’ve done a great job for your client. You’ve created a nice image that you both agree meets the requirements of the layout. Now what do you do? You deliver it (so you can bill it!). But, in this digital age, how you prepare an image for delivery can make or break the final reproduction. Guess who will get the blame if the image’s reproduction is less than satisfactory? Do you even need to guess?

What should photographers do to ensure that their images reproduce well in print? Take some precautions and learn the lingo so you can communicate, because a lack of crystal-clear communication is at the root of most every problem on press.

It should be no surprise that knowing what the client needs is a requirement of professional photographers. But does that mean a photographer in the digital age must become a prepress expert? Kind of, if only to know exactly what to supply your clients.

There are two perfectly legitimate approaches to the problem of supplying digital files for reproduction. One approach is to supply RGB files, and the other is to take responsibility for supplying CMYK files. Either approach is valid, each with positives and negatives. -

Grayscale Lithography Creating Complex 2.5D Structures in Thick Photoresist by Direct Laser Writing

EPIC Meeting on Wafer Level Optics
Grayscale Lithography: Creating complex 2.5D structures in thick photoresist by direct laser writing
07/11/2019 Dominique Collé - Grayscale Lithography

Heidelberg Instruments in a Nutshell
• A world leader in the production of innovative, high-precision maskless aligners and laser lithography systems
• Extensive know-how in developing customized photolithography solutions
• Providing customer support throughout system’s lifetime
• Focus on high quality, high fidelity, high speed, and high precision
• More than 200 employees worldwide (and growing fast)
• 40 million Euros turnover in 2017
• Founded in 1984
• An installation base of over 800 systems in more than 50 countries
• 35 years of experience

Principle of Grayscale Photolithography
UV exposure with spatially modulated light intensity
After development: the intensity gradient has been transferred into resist topography.
(Figure labels: positive photoresist, substrate)
Afterward, the resist topography can be transferred to a different material: the substrate itself (etching) or a molding material (electroforming, OrmoStamp®).

Applications

| Microlens arrays | Fresnel lenses | Diffractive optical elements |
| --- | --- | --- |
| Wavefront sensor | Reduced lens volume | Modified phase profile |
| Fiber coupling | Mobile devices | Split & shape beam |
| Light homogenization | Miniature cameras | Complex light patterns |

Applications
Diffusers & reflectors -

Demosaicking: Color Filter Array Interpolation [Exploring the Imaging

[Bahadir K. Gunturk, John Glotzbach, Yucel Altunbasak, Ronald W. Schafer, and Russel M. Mersereau]
Demosaicking: Color Filter Array Interpolation
[Exploring the imaging process and the correlations among three color planes in single-chip digital cameras]
Digital cameras have become popular, and many people are choosing to take their pictures with digital cameras instead of film cameras. When a digital image is recorded, the camera needs to perform a significant amount of processing to provide the user with a viewable image. This processing includes correction for sensor nonlinearities and nonuniformities, white balance adjustment, compression, and more. An important part of this image processing chain is color filter array (CFA) interpolation or demosaicking. A color image requires at least three color samples at each pixel location. Computer images often use red (R), green (G), and blue (B). A camera would need three separate sensors to completely measure the image. In a three-chip color camera, the light entering the camera is split and projected onto each spectral sensor. Each sensor requires its proper driving electronics, and the sensors have to be registered precisely. These additional requirements add a large expense to the system. Thus, many cameras use a single sensor covered with a CFA. The CFA allows only one color to be measured at each pixel. This means that the camera must estimate the missing two color values at each pixel. This estimation process is known as demosaicking. Several patterns exist for the filter array.
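To illustrate what "only one color measured at each pixel" means, the sketch below simulates the sampling step of a single-sensor camera. It assumes a Bayer-style 2×2 tile in RGGB order, which is one common arrangement but is not specified in the excerpt. The names `BAYER_RGGB`, `cfa_channel` and `mosaic` are hypothetical. The code only discards two of the three samples per pixel; it does not perform the demosaicking (interpolation) itself.

```python
# Assumed 2x2 filter tile (RGGB); other arrangements exist.
BAYER_RGGB = (("R", "G"),
              ("G", "B"))
CHANNEL_INDEX = {"R": 0, "G": 1, "B": 2}


def cfa_channel(row, col, pattern=BAYER_RGGB):
    """Return which color the filter passes at pixel (row, col)."""
    return pattern[row % 2][col % 2]


def mosaic(image, pattern=BAYER_RGGB):
    """Keep one color sample per pixel from a full RGB image.

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    The result has the same shape but a single value per pixel.
    """
    return [
        [pixel[CHANNEL_INDEX[cfa_channel(y, x, pattern)]]
         for x, pixel in enumerate(row)]
        for y, row in enumerate(image)
    ]


if __name__ == "__main__":
    image = [[(10, 20, 30), (40, 50, 60)],
             [(70, 80, 90), (100, 110, 120)]]
    print(mosaic(image))  # [[10, 50], [80, 120]]
```

A demosaicking algorithm would take the single-channel output and estimate the two missing values at every pixel, typically from neighboring samples.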