Apache Giraph Matúš Macko Martin Schvarcbacher Overview

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


An Enterprise Knowledge Network

Fogbeam Labs Cut Through The Information Fog http://www.fogbeam.com An Enterprise Knowledge Network Knowledge exists in many forms inside your organization – ranging from tacit knowledge which exists only in the minds of the users who possess it, to codified knowledge stored in databases and document repositories. Unfortunately while knowledge exists throughout the organization, it is often not easy (if even possible) to locate, use, share, and reuse existing knowledge. This results in a situation often described as “the left hand doesn't know what the right hand is doing” and damages morale as employees spend their days frustrated and complaining that “nobody knows what is going on around here”. The obstacles that hinder access to existing knowledge can be cultural, geographical, social, and/or technological. And while no technological solution can guarantee perfect knowledge-sharing, tools drawn from big data, data mining / machine learning, deep learning, and artificial intelligence techniques can improve an organization's power to generate, capture, use, share and reuse knowledge. Using technologies developed as part of the semantic web initiative, and applying the principles of linked data within the enterprise, the Fogbeam Labs Enterprise Knowledge Network approach can help your firm integrate and aggregate knowledge which is spread across your existing enterprise applications, content repositories and Intranet. An Enterprise Knowledge Network enables your firm's capabilities to: • engage in high levels of knowledge transfer and -

Return of Organization Exempt from Income

OMB No. 1545-0047 Return of Organization Exempt From Income Tax Form 990 Under section 501(c), 527, or 4947(a)(1) of the Internal Revenue Code (except black lung benefit trust or private foundation) Open to Public Department of the Treasury Internal Revenue Service The organization may have to use a copy of this return to satisfy state reporting requirements. Inspection A For the 2011 calendar year, or tax year beginning 5/1/2011 , and ending 4/30/2012 B Check if applicable: C Name of organization The Apache Software Foundation D Employer identification number Address change Doing Business As 47-0825376 Name change Number and street (or P.O. box if mail is not delivered to street address) Room/suite E Telephone number Initial return 1901 Munsey Drive (909) 374-9776 Terminated City or town, state or country, and ZIP + 4 Amended return Forest Hill MD 21050-2747 G Gross receipts $ 554,439 Application pending F Name and address of principal officer: H(a) Is this a group return for affiliates? Yes X No Jim Jagielski 1901 Munsey Drive, Forest Hill, MD 21050-2747 H(b) Are all affiliates included? Yes No I Tax-exempt status: X 501(c)(3) 501(c) ( ) (insert no.) 4947(a)(1) or 527 If "No," attach a list. (see instructions) J Website: http://www.apache.org/ H(c) Group exemption number K Form of organization: X Corporation Trust Association Other L Year of formation: 1999 M State of legal domicile: MD Part I Summary 1 Briefly describe the organization's mission or most significant activities: to provide open source software to the public that we sponsor free of charge 2 Check this box if the organization discontinued its operations or disposed of more than 25% of its net assets. -

Graft: a Debugging Tool for Apache Giraph

Graft: A Debugging Tool For Apache Giraph Semih Salihoglu, Jaeho Shin, Vikesh Khanna, Ba Quan Truong, Jennifer Widom Stanford University {semih, jaeho.shin, vikesh, bqtruong, widom}@cs.stanford.edu ABSTRACT We address the problem of debugging programs written for Pregel-like systems. After interviewing Giraph and GPS users, we developed Graft. Graft supports the debugging cycle that users typically go through: (1) Users describe programmatically the set of vertices they are interested in inspecting. During execution, Graft captures the context information of these vertices across supersteps. (2) Using Graft’s GUI, users visualize how the values and messages of the captured vertices change from superstep to superstep, narrowing in suspicious vertices and supersteps. (3) Users replay the exact lines of the vertex.compute() function that executed for the suspicious vertices and supersteps, by copying code that Graft generates into their development environments’ line-by-line debuggers.

optional master.compute() function is executed by the Master task between supersteps. We have tackled the challenge of debugging programs written for Pregel-like systems. Despite being a core component of programmers’ development cycles, very little work has been done on debugging in these systems. We interviewed several Giraph and GPS programmers (hereafter referred to as “users”) and studied how they currently debug their vertex.compute() functions. We found that the following three steps were common across users: (1) Users add print statements to their code to capture information about a select set of potentially “buggy” vertices, e.g., vertices that are assigned incorrect values, send incorrect messages, or throw

Apache Giraph 3

Other Distributed Frameworks Shannon Quinn Distinction 1. General Compute Engines – Hadoop 2. User-facing APIs – Cascading – Scalding Alternative Frameworks 1. Apache Mahout 2. Apache Giraph 3. GraphLab 4. Apache Storm 5. Apache Tez 6. Apache Flink Apache Mahout • A Tale of Two Frameworks 1. Distributed machine learning on Hadoop – 0.1 to 0.9 2. “Samsara” – New in 0.10+ Machine learning on Hadoop • Born out of the Apache Lucene project • Built on Hadoop (all in Java) • Pragmatic machine learning at scale 1: Recommendation 2: Classification 3: Clustering Other MapReduce algorithms • Dimensionality reduction – Lanczos – SSVD – LDA • Regression – Logistic – Linear – Random Forest • Evolutionary algorithms Mahout-Samsara • Programming “environment” for distributed machine learning • R-like syntax • Interactive shell (like Spark) • Under-the-hood algebraic optimizer • Engine-agnostic – Spark – H2O – Flink – ? Mahout • 3 main components – Engine-agnostic environment for building scalable ML algorithms (Samsara) – Engine-specific algorithms (Spark, H2O) – Legacy MapReduce algorithms Mahout • v0.10.0 released April 11 (as in, 5 days ago!) • 0.10.1 – More base linear algebra functionality • 0.11.0 – Compatible with Spark 1.3 • 1.0 – ? Mahout features by engine • No engine-agnostic clustering algorithms yet – Still the domain of legacy MapReduce • H2O and especially Flink

Chapter 2 Introduction to Big Data Technology

Chapter 2 Introduction to Big data Technology Bilal Abu-Salih1, Pornpit Wongthongtham2 Dengya Zhu3 , Kit Yan Chan3 , Amit Rudra3 1The University of Jordan 2 The University of Western Australia 3 Curtin University Abstract: Big data is no more “all just hype” but widely applied in nearly all aspects of our business, governments, and organizations with the technology stack of AI. Its influences are far beyond a simple technique innovation but involves all rears in the world. This chapter will first have historical review of big data; followed by discussion of characteristics of big data, i.e. from the 3V’s to up 10V’s of big data. The chapter then introduces technology stacks for an organization to build a big data application, from infrastructure/platform/ecosystem to constructional units and components. Finally, we provide some big data online resources for reference. Keywords Big data, 3V of Big data, Cloud Computing, Data Lake, Enterprise Data Centre, PaaS, IaaS, SaaS, Hadoop, Spark, HBase, Information retrieval, Solr 2.1 Introduction The ability to exploit the ever-growing amounts of business-related data will allow to comprehend what is emerging in the world. In this context, Big Data is one of the current major buzzwords [1]. Big Data (BD) is the technical term used in reference to the vast quantity of heterogeneous datasets which are created and spread rapidly, and for which the conventional techniques used to process, analyse, retrieve, store and visualise such massive sets of data are now unsuitable and inadequate. This can be seen in many areas such as sensor-generated data, social media, uploading and downloading of digital media.

Study Materials for Big Data Processing Tools

MASARYK UNIVERSITY FACULTY OF INFORMATICS Study materials for Big Data processing tools BACHELOR'S THESIS Martin Durkáč Brno, Spring 2021 This is where a copy of the official signed thesis assignment and a copy of the Statement of an Author is located in the printed version of the document. Declaration Hereby I declare that this paper is my original authorial work, which I have worked out on my own. All sources, references, and literature used or excerpted during elaboration of this work are properly cited and listed in complete reference to the due source. Martin Durkáč Advisor: RNDr. Martin Macák Acknowledgements I would like to thank my supervisor RNDr. Martin Macák for all the support and guidance. His constant feedback helped me to improve and finish it. I would also like to express my gratitude towards my colleagues at Greycortex for letting me use their resources to develop the practical part of the thesis. Abstract This thesis focuses on providing study materials for the Big Data seminar. The thesis is divided into six chapters plus a conclusion, where the first chapter introduces Big Data in general, four chapters contain information about Big Data processing tools and the sixth chapter describes study materials provided in this thesis. For each Big Data processing tool, the thesis contains a practical demonstration and an assignment for the seminar in the attachments. All the assignments are provided in both with and without solution forms.

Harp: Collective Communication on Hadoop

Harp: Collective Communication on Hadoop Judy Qiu, Indiana University Outline • Machine Learning on Big Data • Big Data Tools • Iterative MapReduce model • MDS Demo • Harp Machine Learning on Big Data • Mahout on Hadoop • https://mahout.apache.org/ • MLlib on Spark • http://spark.apache.org/mllib/ • GraphLab Toolkits • http://graphlab.org/projects/toolkits.html • GraphLab Computer Vision Toolkit [Figure: big data tools grouped by programming model (DAG Model, MapReduce Model, Graph Model, BSP/Collective Mode); tools shown include Hadoop, MPI, HaLoop, Giraph, Twister, Hama, GraphLab, Spark, GraphX, Harp, Stratosphere, Dryad/DryadLINQ, Reef, Pig/PigLatin, Hive, Tez, Drill, Shark, MRQL, S4, Storm, Samza, Spark Streaming] Big Data Tools for HPC and Supercomputing • MPI (Message Passing Interface, 1992) • Provide standardized function interfaces for communication between parallel processes. • Collective communication operations • Broadcast, Scatter, Gather, Reduce, Allgather, Allreduce, Reduce‐scatter. • Popular implementations • MPICH (2001) • OpenMPI (2004) • http://www.open‐mpi.org/ MapReduce Model Google MapReduce (2004) • Jeffrey Dean et al. MapReduce: Simplified Data Processing on Large Clusters. OSDI 2004. Apache Hadoop (2005) • http://hadoop.apache.org/ • http://developer.yahoo.com/hadoop/tutorial/ Apache Hadoop 2.0 (2012) • Vinod Kumar Vavilapalli et al. Apache Hadoop YARN: Yet Another Resource Negotiator, SoCC 2013. • Separation between resource management and computation

Technology Overview

Big Data Technology Overview

| Term | Description | See Also |
|---|---|---|
| 3 Vs | Volume, velocity and variety. And some expand the definition further to include veracity and value as well. | Big Data - the 5 Vs Everyone Must Know; 5 Vs of Big Data |
| Agile | From Wikipedia, “Agile software development is a group of software development methods based on iterative and incremental development, where requirements and solutions evolve through collaboration between self-organizing, cross-functional teams. It promotes adaptive planning, evolutionary development and delivery, a time-boxed iterative approach, and encourages rapid and flexible response to change. It is a conceptual framework that promotes foreseen tight iterations throughout the development cycle.” | The Agile Manifesto |
| Avro | A data serialization system. From Wikipedia, “It is a remote procedure call and serialization framework developed within Apache's Hadoop project. It uses JSON for defining data types and protocols, and serializes data in a compact binary format.” | Apache Avro |
| Big Insights | BigInsights Enterprise Edition provides a spreadsheet-like data analysis tool to help organizations store, manage, and analyze big data. | IBM Infosphere Biginsights |
| Cassandra | A scalable multi-master database with no single points of failure. It provides scalability and high availability without compromising performance. | Apache Cassandra |
| Cloudera | Cloudera Inc. is an American-based software company that provides Apache Hadoop-based software, support and services, and training to business customers. | Cloudera |
| Data science | The study of the generalizable extraction of knowledge from data | Wikipedia - Data Science; IBM - Data Scientist; Coursera |
| Dremel | Distributed system developed at Google for interactively querying large datasets. It empowers business analysts and makes it easy for business users to access the data rather than having to rely on data engineers. | Dremel; Google Research |

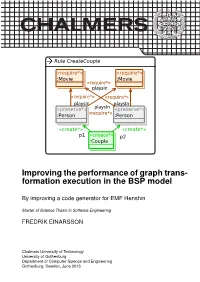

Improving the Performance of Graph Transformation Execution in the BSP Model

Improving the performance of graph transformation execution in the BSP model By improving a code generator for EMF Henshin Master of Science Thesis in Software Engineering FREDRIK EINARSSON Chalmers University of Technology University of Gothenburg Department of Computer Science and Engineering Gothenburg, Sweden, June 2015 Master’s thesis 2015:NN Improving the performance of graph transformation execution in the BSP model By improving a code generator for EMF Henshin FREDRIK EINARSSON Department of Computer Science and Engineering Division of Software Engineering Chalmers University of Technology Gothenburg, Sweden 2015 The Author grants to Chalmers University of Technology and University of Gothenburg the non-exclusive right to publish the Work electronically and in a non-commercial purpose make it accessible on the Internet. The Author warrants that he/she is the author to the Work, and warrants that the Work does not contain text, pictures or other material that violates copyright law. The Author shall, when transferring the rights of the Work to a third party (for example a publisher or a company), acknowledge the third party about this agreement. If the Author has signed a copyright agreement with a third party regarding the Work, the Author warrants hereby that he/she has obtained any necessary permission from this third party to let Chalmers University of Technology and University of Gothenburg store the Work electronically and make it accessible on the Internet. Improving the performance of graph transformation execution

Code Smell Prediction Employing Machine Learning Meets Emerging Java Language Constructs

Appendix to the paper "Code smell prediction employing machine learning meets emerging Java language constructs" Hanna Grodzicka, Michał Kawa, Zofia Łakomiak, Arkadiusz Ziobrowski, Lech Madeyski (B) The Appendix includes two tables containing the dataset used in the paper "Code smell prediction employing machine learning meets emerging Java language constructs". The first table contains information about 792 projects selected for R package reproducer [Madeyski and Kitchenham(2019)]. Projects were the base dataset for creating the dataset used in the study (Table I). The second table contains information about 281 projects filtered by Java version from build tool Maven (Table II) which were directly used in the paper.

TABLE I: Base projects used to create the new dataset

| # | Organisation | Project name | GitHub link | Commit hash | Build tool | Java version |
|---|---|---|---|---|---|---|
| 1 | adobe | aem-core-wcm-components | www.github.com/adobe/aem-core-wcm-components | 1d1f1d70844c9e07cd694f028e87f85d926aba94 | other or lack of | unknown |
| 2 | adobe | S3Mock | www.github.com/adobe/S3Mock | 5aa299c2b6d0f0fd00f8d03fda560502270afb82 | MAVEN | 8 |
| 3 | alexa | alexa-skills-kit-sdk-for-java | www.github.com/alexa/alexa-skills-kit-sdk-for-java | bf1e9ccc50d1f3f8408f887f70197ee288fd4bd9 | MAVEN | 8 |
| 4 | alibaba | ARouter | www.github.com/alibaba/ARouter | 93b328569bbdbf75e4aa87f0ecf48c69600591b2 | GRADLE | unknown |
| 5 | alibaba | atlas | www.github.com/alibaba/atlas | e8c7b3f1ff14b2a1df64321c6992b796cae7d732 | GRADLE | unknown |
| 6 | alibaba | canal | www.github.com/alibaba/canal | 08167c95c767fd3c9879584c0230820a8476a7a7 | MAVEN | 7 |

7 alibaba cobar www.github.com/alibaba/

Hadoop Programming Options

"Web Age Speaks!" Webinar Series Hadoop Programming Options Introduction Mikhail Vladimirov Director, Curriculum Architecture Web Age Solutions Providing a broad spectrum of regular and customized training classes in programming, system administration and architecture to our clients across the world for over ten years ©WebAgeSolutions.com Overview of Talk Hadoop Overview Hadoop Analytics Systems HDFS and MapReduce v1 & v2 (YARN) Hive Sqoop Hadoop Programming Options Hadoop Ecosystem Hadoop Hadoop is a distributed fault-tolerant computing platform written in Java Modeled after shared-nothing, massively parallel processing (MPP) system design Hadoop's design was influenced by ideas published in Google File System (GFS) and MapReduce white papers Hadoop can be used as a data hub, data warehouse or an analytic platform Hadoop Core Components The Hadoop project is made up of three main components: Common • Contains Hadoop infrastructure elements (interfaces with HDFS, system libraries, RPC connectors, Hadoop admin scripts, etc.) Hadoop Distributed File System • Hadoop Distributed File System (HDFS) running on clusters of commodity hardware built around the concept: load once and read many times MapReduce • A distributed data processing framework used as data analysis system Hadoop Simple Definition In a nutshell, Hadoop is a distributed computing framework that consists of: Reliable data storage (provided via HDFS) Analysis system

Outline of Machine Learning

Outline of machine learning The following outline is provided as an overview of and topical guide to machine learning: Machine learning – subfield of computer science[1] (more particularly soft computing) that evolved from the study of pattern recognition and computational learning theory in artificial intelligence.[1] In 1959, Arthur Samuel defined machine learning as a "Field of study that gives computers the ability to learn without being explicitly programmed".[2] Machine learning explores the study and construction of algorithms that can learn from and make predictions on data.[3] Such algorithms operate by building a model from an example training set of input observations in order to make data-driven predictions or decisions expressed as outputs, rather than following strictly static program instructions. Contents What type of thing is machine learning? Branches of machine learning Subfields of machine learning Cross-disciplinary fields involving machine learning Applications of machine learning Machine learning hardware Machine learning tools Machine learning frameworks Machine learning libraries Machine learning algorithms Machine learning methods Dimensionality reduction Ensemble learning Meta learning Reinforcement learning Supervised learning Unsupervised learning Semi-supervised learning Deep learning Other machine learning methods and problems Machine learning research History of machine learning Machine learning projects Machine learning organizations Machine learning conferences and workshops Machine learning publications