Using 21st Century Science to Improve Risk-Related Evaluations

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

QU-Alumni Review 2019-3.Pdf

Issue 3, 2019. The magazine of Queen’s University since 1927. Queen’s Alumni Review. How to look at a Rembrandt like a conservator, and why Heidi Sobol, MAC’00, always starts with the nose. In this issue: How Queen’s Chemistry is changing the world. Plus: Meet the football coach. 4 years to earn your degree. • Program runs May-August • Earn credits toward an MBA • Designed for recent graduates • Broaden your career prospects. 855.933.3298 smithqueens.com/gdb. Contents: Issue 3, 2019, Volume 93, Number 3. queensu.ca/alumnireview. From the editor; From the principal; Student research: Pharmacare in Canada; Victor Snieckus: The magic of chemistry; Matthias Hermann: The elements of education; Keeping in touch; The Chemistry medal; Your global alumni network; Ex libris: New books from faculty and alumni. Cover story: How to look at a Rembrandt like a conservator. Heidi Sobol, MAC’00, explores the techniques, and the chemistry, behind the masterpieces. Inspired by Rembrandt: Poet Steven Heighton (Artsci’85, MA’86) and artist Em Harm take inspiration from a new addition to The Bader Collection. On the cover: Heidi Sobol at the Royal Ontario Museum’s exhibition “In the Age of Rembrandt: Dutch Paintings from the Museum of Fine Arts, Boston”. Photo by Tina Weltz. Pushing the boundaries of science. Meet the coach: New football coach Steve Snyder discusses his coaching style and the Dr. -

Family Group Sheets Surname Index

PASSAIC COUNTY HISTORICAL SOCIETY FAMILY GROUP SHEETS SURNAME INDEX This collection of 660 folders contains over 50,000 family group sheets of families that resided in Passaic and Bergen Counties. These sheets were prepared by volunteers using the Society's various collections of church, cemetery and bible records as well as city directories, county history books, newspaper abstracts and the Mattie Bowman manuscript collection. Example of a typical Family Group Sheet from the collection. A Aldous Anderson Arndt Aartse Aldrich Anderton Arnot Abbott Alenson Andolina Aronsohn Abeel Alesbrook Andreasen Arquhart Abel Alesso Andrews Arrayo Aber Alexander Andriesse (see Anderson) Arrowsmith Abers Alexandra Andruss Arthur Abildgaard Alfano Angell Arthurs Abraham Alje (see Alyea) Anger Aruesman Abrams Aljea (see Alyea) Angland Asbell Abrash Alji (see Alyea) Angle Ash Ack Allabough Anglehart Ashbee Acker Allee Anglin Ashbey Ackerman Allen Angotti Ashe Ackerson Allenan Angus Ashfield Ackert Aller Annan Ashley Acton Allerman Anners Ashman Adair Allibone Anness Ashton Adams Alliegro Annin Ashworth Adamson Allington Anson Asper Adcroft Alliot Anthony Aspinwall Addy Allison Anton Astin Adelman Allman Antoniou Astley Adolf Allmen Apel Astwood Adrian Allyton Appel Atchison Aesben Almgren Apple Ateroft Agar Almond Applebee Atha Ager Alois Applegate Atherly Agnew Alpart Appleton Atherson Ahnert Alper Apsley Atherton Aiken Alsheimer Arbuthnot Atkins Aikman Alterman Archbold Atkinson Aimone -

Surnames Beginning With

Card# MineName Operator Year Month Day Surname First and Middle Name Age Fatal/Nonfatal In/Outside Occupation Nationality Citizen/Alien Single/Married #Children Mine Experince Occupation Exp. Accident Cause or Remarks Fault County Mining Dist. Film# CoalType 14 Vesta No.4 Vesta Coal 1937 2 18 Babak Frank 28 nonfatal inside brakeman Slavic single thumb caught between car bumpers Washington 21st 3600 bituminous 32 Elma No.3 Baker Whitely Coal 1938 6 14 Babalanis William 18 nonfatal inside machine miner single rock broke & fell on foot heading Somerset 24th 3601 bituminous 7 Elma No.3 Baker Whitely Coal 1937 2 2 Babalonis Charles 29 nonfatal inside scraper Lithuanian citizen single foot caught under machine unloading Somerset 24th 3600 bituminous 117 Somers Pittsburgh Coal 1937 11 2 Babar Louis 35 nonfatal inside machine miner Hungarian married fall of slate in room Fayette 9th 3600 bituminous 12 Isabella Weirton Coal 1938 5 6 Babba Harry M 46 nonfatal inside married hernia lifting timber rail room Fayette 16th 3600 bituminous 33 Colonial No.3 H C Frick Coke 1939 6 24 Babchak John J 25 nonfatal inside brakeman married caught foot in clevices broke foot Fayette 9th 3601 bituminous 66 Heisley No.3 Heisley Coal 1937 9 22 Baber Louis 20 nonfatal inside machine miner Slavic single loose strand in hoist rope hit thumb Cambria 10th 3600 bituminous 91 Springfield No.1 Springfield Coal 1939 10 27 Babich Walter 57 nonfatal inside miner married caught car & roof broke hand Cambria 10th 3601 bituminous 5 Big Bend Big Bend Coal Mining 1942 9 28 Babinscak -

Using 21st Century Science to Improve Risk-Related Evaluations (2017)

THE NATIONAL ACADEMIES PRESS. This PDF is available at http://nap.edu/24635. Using 21st Century Science to Improve Risk-Related Evaluations (2017). Details: 200 pages | 8.5 x 11 | PAPERBACK | ISBN 978-0-309-45348-6 | DOI 10.17226/24635. Contributors: Committee on Incorporating 21st Century Science into Risk-Based Evaluations; Board on Environmental Studies and Toxicology; Division on Earth and Life Studies; National Academies of Sciences, Engineering, and Medicine. Suggested citation: National Academies of Sciences, Engineering, and Medicine 2017. Using 21st Century Science to Improve Risk-Related Evaluations. Washington, DC: The National Academies Press. https://doi.org/10.17226/24635. Distribution, posting, or copying of this PDF is strictly prohibited without written permission of the National Academies Press. Unless otherwise indicated, all materials in this PDF are copyrighted by the National Academy of Sciences. Copyright © National Academy of Sciences. All rights reserved. THE NATIONAL ACADEMIES PRESS, 500 Fifth Street, NW, Washington, DC 20001. This activity was supported by Contract EP-C-14-005, TO#0002 between the National Academy of Sciences and the US Environmental Protection Agency. -

Brooklyn College Foundation ANNUAL REPORT 2015/2016 Brooklyn College Foundation 2015–2016 Annual Report

Brooklyn College Foundation 2015–2016 Annual Report. Dear Alumni and Friends, Welcome to the Brooklyn College Foundation’s FY16 Annual Report, covering the period from July 1, 2015, through June 30, 2016. This was a year of transition. We wrapped up our $200 million Foundation for Success Campaign, bid farewell to retiring President Karen Gould, who provided Brooklyn College with seven years of exceptional leadership, and welcomed our new president, Michelle Anderson. President Anderson comes to Brooklyn College from her previous position as dean of the CUNY School of Law, where she oversaw a period of great renewal and transformation in facilities, programs, and recognition. We on the foundation board are excited to work with her on our mutual mission to continue to provide affordable access to excellent higher education. This year’s report focuses on the impact of key donor gifts as well as the work of the foundation as we prepare for our next capital campaign. I am exceedingly proud that during FY16, the foundation provided more than $2 million to nearly 1,500 students in the form of scholarships, awards, travel grants, internships, fellowships, and emergency grants; and, for faculty, more than $450,000 in the form of professorships, chairs, travel awards, lectureships, and professional development support. All of us at the foundation are grateful to the more than 5,000 donors who share our steadfast commitment to Brooklyn College, its mission, and its students. Sincerely, Edwin H. Cohen ’62, Chair, Brooklyn College Foundation. Dear Alumni and Friends of Brooklyn College, When he laid the cornerstone for the gymnasium building on our beautiful campus, President Franklin D. -

Russian Military Capability in a Ten-Year Perspective 2016

The Russian Armed Forces are developing from a force primarily designed for handling internal disorder and conflicts in the area of the former Soviet Union towards a structure configured for large-scale operations also beyond that area. The Armed Forces can defend Russia from foreign aggression in 2016 better than they could in 2013. They are also a stronger instrument of coercion than before. This report analyses Russian military capability in a ten-year perspective. It is the eighth edition. A change in this report compared with the previous edition is that a basic assumption has been altered. In 2013, we assessed fighting power under the assumption that Russia was responding to an emerging threat with little or no time to prepare operations. In view of recent events, we now estimate available assets for military operations in situations when Russia initiates the use of armed force. The fighting power of the Russian Armed Forces is studied. Fighting power means the available military assets for three overall missions: operational-strategic joint inter-service combat operations (JISCOs), stand-off warfare and strategic deterrence. The potential order of battle is estimated for these three missions, i.e. what military forces Russia is able to generate and deploy in 2016. The fighting power of Russia’s Armed Forces has continued to increase – primarily west of the Urals. Russian military strategic theorists are devoting much thought not only to military force, but also to all kinds of other – non-military – means. The trend in security policy continues to be based on anti-Americanism, patriotism and authoritarianism at home. -

The List for Surnames by Towns in Bessarabia

Surnames in 19th century revision lists for towns in Bessarabia Compiled from data extracted by the Bessarabia Special Interest Group for the JewishGen Romania & Moldova Database www.http://www.jewishgen.org/Bessarabia http://www.jewishgen.org/databases/Romania How to use this document This list can be helpful in finding a surname that did not turn up in a search of the JewishGen database because the spelling differs significantly from what you entered in the search field, despite using "sounds like," phonetic, fuzzy and other search methods. It is organized by town. Numbers show how many times a given surname appears in lists extracted for a given town. Data for this document came from lists for various years between 1824 and 1875, processed as of August 2015. Use the alphabetical links below to jump down. B C G I K L M P R S T V Abaklydzhaba village 6 Abaklydzhaba village 6 Abaklydzhaba village 6 Surname Entries Surname Entries Surname Entries KAUSHANSKIY 3 KOGAN 1 MELAMED/LUPU 2 Aleksandreny Colony 1748 Aleksandreny Colony 1748 Aleksandreny Colony 1748 Surname Entries Surname Entries Surname Entries ALEYVI 11 GERSHINKROYN 12 KREYMAR 9 ALTMAN 11 GERTS 13 KUSHNIR 5 ANBINDER 9 GINBERG 12 LANDER 32 AVERBUKH 16 GLEYZER 13 LANFIR 5 AYZIN 5 GLUZMAN 10 LEMLIKH 11 AZINBERG 4 GOFMAN 10 LERNER 3 BARDICHEVER 6 GOIKHMAN 4 LEVIV 4 BARG 7 GOLDBERG 13 LITVAK 5 BARIR 15 GOLDENBERG 15 LIVERANT 17 BATUSHANSKY 9 GOLDENER 12 LOBER 11 BEIN 7 GOLDFARB 9 LOYZGOLD 17 BENDERSKY 26 GOLDINBERG 13 MAGIDMAN 6 BENDIT 7 GOLDMAN 7 MALAMUD 10 BENIKHIS 5 GOLDYAK -

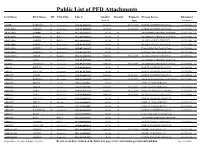

Public List of PFD Attachments

Public List of PFD Attachments Last Name First Name MI Court Site Case # Amount Reason* Payment Process Server Document Seized Date Locator # ABAIR BARBARA J Anchorage 3AN-15-07567CI $880.00 09/23/2017 NORTH COUNTRY PROCESS 2017042963 2 ABALAMA AMBER R Anchorage 3AN-10-00200SC $819.50 09/23/2017 NORTH COUNTRY PROCESS 2017011036 4 ABALAMA AMBER R Palmer 3PA-10-02623CI $0.00 B ATTORNEYS PROCESS SERVICE 2017011036 4 ABALAMA AMBER R Palmer 3PA-10-02623CI $0.00 B ATTORNEYS PROCESS SERVICE 2017011036 4 ABALAMA AMBER R Palmer 3PA-10-00783SC $0.00 B ALASKA COURT SERVICES 2017011036 4 ABALAMA SHIRELL F Anchorage 3AN-02-02749SC $0.00 B ALASKA COURT SERVICES 2017058060 0 ABALAMA SHIRELL F Anchorage 3AN-05-11010CI $0.00 B Certified Mail By Clerk Of Court 2017058060 0 ABALAMA SHIRELL F Anchorage 3AN-01-06487CI $0.00 B INQUEST PROCESS SERVICE 2017058060 0 ABALANZA JOMER T Kodiak 3KO-15-00018SC $880.00 09/23/2017 ALASKA COURT SERVICES 2017054462 8 ABANG TERA Anchorage 3AN-05-01894SC $0.00 A ATTORNEYS PROCESS SERVICE ABARO CHANELLE M Anchorage 3AN-09-02177SC $880.00 09/23/2017 ATTORNEYS PROCESS SERVICE 2017049038 9 ABBAS PHYLLIS A Anchorage 3AN-08-05753CI $0.00 B NORTH COUNTRY PROCESS 2017066584 3 ABBAS PHYLLIS A Anchorage 3AN-06-03730SC $0.00 B NORTH COUNTRY PROCESS 2017066584 3 ABBOTT ANGEL Anchorage 3AN-17-00537SC $506.04 09/23/2017 NORTH COUNTRY PROCESS 2017047984 4 ABBOTT BRENNA N Ketchikan 1KE-13-00007SC $880.00 10/20/2017 SEAK PROFESSIONAL SERVICES LLC2017012534 2 ABBOTT BRIAN L Juneau 1JU-12-00027SC $0.00 A CIVIL CLAIMS SERVICE ABBOTT CHLOE Juneau -

Obituary "P" Index

Obituary "P" Index Copyright © 2004 – 2021 GRHS DISCLAIMER: GRHS cannot guarantee that should you purchase a copy of what you would expect to be an obituary from its obituary collection that you will receive an obituary per se. The obituary collection consists of such items as a) personal cards of information shared with GRHS by researchers, b) www.findagrave.com extractions, c) funeral home cards, d) newspaper death notices, and e) obituaries extracted from newspapers and other publications as well as funeral home web sites. Some obituaries are translations of obituaries published in German publications, although generally GRHS has copies of the German versions. These German versions would have to be ordered separately for they are kept in a separate file in the GRHS library. The list of names and dates contained herein is an alphabetical listing [by surname and given name] of the obituaries held at the Society's headquarters for the letter combination indicated. Each name is followed by the birth date in the first column and death date in the second. Dates may be extrapolated or provided from another source. Important note about UMLAUTS: Surnames in this index have been entered by our volunteers exactly as they appear in each obituary but the use of characters with umlauts in obits has been found to be inconsistent. For example the surname Büchele may be entered as Buchele or Bahmüller as Bahmueller. This is important because surnames with umlauted characters are placed in alphabetic order after regular characters so if you are just scrolling down this sorted list you may find the surname you are looking for in an unexpected place (i.e. -

Publikationsliste Reinhard Büttner 2017 V2

Publikationsliste Reinhard Büttner Original papers 1989-2017 (1) Handt S., R. Büttner, R. Knüchel, H. Rübben, F. Hofstädter, and C. Wagener: Investigations for a point mutation in the c-Ha-ras 1 oncogene in the genome of bladder carcinomas by Southern blot analysis. Invest. Urolog. 3: 125-128 (1989). (2) Buettner R., S. Yim, Y. Hong, E. Boncinelli, and M.A. Tainsky: Alteration of homeobox gene expression by N-ras transformation of PA-1 human teratocarcinoma cell. Mol. Cell. Biol. 11: 3573-3583 (1991). (3) Tainsky M.A., S. Yim, D.B. Krizman, P. Kannan, P. Chiao, T. Mukhopadhyay, and R. Buettner: Modulation of differentiation in PA-1 human teratocarcinoma cells by an N-ras oncogene. Oncogene 6: 1575-1582 (1991). (4) Kannan P., R. Buettner, D.R. Pratt, and M.A. Tainsky: Identification of a retinoic acid inducible endogenous retrovirus in the human teratocarcinoma-derived cell line PA-1. J. Virol. 65: 6343-6348 (1991). (5) Le-Ruppert K., J.R.W. Masters, R. Knuechel, S. Seegers, M.A. Tainsky, F. Hofstaedter, and R. Buettner: The effect of retinoic acid on chemosensitivity of PA-1 human teratocarcinoma cells and its modulation by an activated N-ras oncogene. Int. J. Cancer 51: 646-651 (1992). (6) Meyer S., R. Buettner, P. Sauer, and L. Rupprecht: Akuter arterieller Extremitätenverschluß durch Tumorembolus (Case report and review of literature). Der Chirurg 64: 424-26 (1993). (7) Rohrer L., F. Raulf, C. Bruns, F. Hofstaedter, R. Buettner, and R. Schuele: Cloning and characterization of a fourth human somatostatin receptor. Proc. Natl. Acad. Sci. USA 90: 4196-4200 (1993). -

Melatonin Treatment Improves Renal Fibrosis Via Mir-4516/SIAH3/PINK1 Axis

cells Article Melatonin Treatment Improves Renal Fibrosis via miR-4516/SIAH3/PINK1 Axis Yeo Min Yoon 1, Gyeongyun Go 2,3, Sungtae Yoon 4, Ji Ho Lim 2,3, Gaeun Lee 2,3, Jun Hee Lee 5,6,7,8 and Sang Hun Lee 1,2,3,4,* 1 Medical Science Research Institute, Soonchunhyang University Seoul Hospital, Seoul 04401, Korea; [email protected] 2 Department of Biochemistry, College of Medicine, Soonchunhyang University, Cheonan 31151, Korea; [email protected] (G.G.); wlenfl[email protected] (J.H.L.); [email protected] (G.L.) 3 Department of Biochemistry, BK21FOUR Project2, College of Medicine, Soonchunhyang University, Cheonan 31151, Korea 4 Stembio. Ltd., Entrepreneur 306, Soonchunhyang-ro 22, Sinchang-myeon, Asan 31538, Korea; [email protected] 5 Institute of Tissue Regeneration Engineering (ITREN), Dankook University, Cheonan 31116, Korea; [email protected] 6 Department of Nanobiomedical Science and BK21 PLUS NBM Global Research Center for Regenerative Medicine, Dankook University, Cheonan 31116, Korea 7 Department of Oral Anatomy, College of Dentistry, Dankook University, Cheonan 31116, Korea 8 Cell & Matter Institute, Dankook University, Cheonan 31116, Korea * Correspondence: [email protected]; Tel.: +82-02-709-9029; Fax: +82-02-792-5812 Abstract: Dysregulation in mitophagy, in addition to contributing to imbalance in the mitochondrial dynamic, has been implicated in the development of renal fibrosis and progression of chronic kidney disease (CKD). However, the current understanding of the precise mechanisms behind the pathogenic loss of mitophagy remains unclear for developing cures for CKD. We found that miR- Citation: Yoon, Y.M.; Go, G.; Yoon, S.; Lim, J.H.; Lee, G.; Lee, J.H.; Lee, S.H. -

AKRON BEACON JOURNAL Index to Obituaries

AKRON BEACON JOURNAL Index to Obituaries 1985-1989 I Akron-Summit County Public Library Compiled by the staff of the Language, Literature and History Division NAME DATE Abbott, Elizabeth K. 3-21-88 Abbott, Franz D. 3-15-89 Abbott, John L. 2-16-88 Abbott, Madeline A. 7-18-86 Abbott, Nellie 2-13-85 Abbot , William F. 11-5-87 Abbuh , Robert Lee 12-6-86 Abbuh , Steven N. 1-7-89 Abdoo Florence C. 9-3-85 Abel, Glenn R. 11-27-86 Abel, Robert A. 9-29-87 Abele Beulah 9-13-86 Abell, James 2-1-85 Abell, Mary A. 8-24-85 Abell, Ruth D. 2-8-87 Abell, Virgie M. 2-20-85 Abell, William I. 5-10-86 Abels, Ruth 10-29-86 Abercrombie, Dorothy A. 6-25-86 Aberegg, Paul 9-14-89 Abernathy, Elmo B. 3-29-89 Abernathy, John H. 3-14-88 Abernathy, Minnie I. 9-8-87 Abernathy, Myrtle L. 7-31-86 Abicht, Jean 10-13-87 Ablett, Edith M. 6-30-87 Abmyer, Mary 4-2-85 Abraham, Daniel M. 6-15-86 Abraham, Flossie 10-31-87 Abraham, Louis 10-30-88 Abraham, Ruth 8-15-86 Abram, John R. 3-29-88 Abramchuk, Rose 11-13-86 Abrams, Ida M. 9-21-85 Abrams, Mary E. 7-19-86 Abrams, W. J. 5-18-89 Abreu, Arnaldo J. 4-19-88 Abshire, Donald L. 11-4-86 Abshire, Gertrude G.