Simulation of Nonverbal Social Interaction and Small Groups Dynamics in Virtual Environments

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

4010, 237 8514, 226 80486, 280 82786, 227, 280 a AA. See Anti-Aliasing (AA) Abacus, 16 Accelerated Graphics Port (AGP), 219 Acce

Index 4010, 237 AIB. See Add-in board (AIB) 8514, 226 Air traffic control system, 303 80486, 280 Akeley, Kurt, 242 82786, 227, 280 Akkadian, 16 Algebra, 26 Alias Research, 169 Alienware, 186 A Alioscopy, 389 AA. See Anti-aliasing (AA) All-In-One computer, 352 Abacus, 16 All-points addressable (APA), 221 Accelerated Graphics Port (AGP), 219 Alpha channel, 328 AccelGraphics, 166, 273 Alpha Processor, 164 Accel-KKR, 170 ALT-256, 223 ACM. See Association for Computing Altair 680b, 181 Machinery (ACM) Alto, 158 Acorn, 156 AMD, 232, 257, 277, 410, 411 ACRTC. See Advanced CRT Controller AMD 2901 bit-slice, 318 (ACRTC) American national Standards Institute (ANSI), ACS, 158 239 Action Graphics, 164, 273 Anaglyph, 376 Acumos, 253 Anaglyph glasses, 385 A.D., 15 Analog computer, 140 Adage, 315 Anamorphic distortion, 377 Adage AGT-30, 317 Anatomic and Symbolic Mapper Engine Adams Associates, 102 (ASME), 110 Adams, Charles W., 81, 148 Anderson, Bob, 321 Add-in board (AIB), 217, 363 AN/FSQ-7, 302 Additive color, 328 Anisotropic filtering (AF), 65 Adobe, 280 ANSI. See American national Standards Adobe RGB, 328 Institute (ANSI) Advanced CRT Controller (ACRTC), 226 Anti-aliasing (AA), 63 Advanced Remote Display Station (ARDS), ANTIC graphics co-processor, 279 322 Antikythera device, 127 Advanced Visual Systems (AVS), 164 APA. See All-points addressable (APA) AED 512, 333 Apalatequi, 42 AF. See Anisotropic filtering (AF) Aperture grille, 326 AGP. See Accelerated Graphics Port (AGP) API. See Application program interface Ahiska, Yavuz, 260 standard (API) AI. -

A Survey Full Text Available At

Full text available at: http://dx.doi.org/10.1561/0600000083 Publishing and Consuming 3D Content on the Web: A Survey Other titles in Foundations and Trends® in Computer Graphics and Vision Crowdsourcing in Computer Vision Adriana Kovashka, Olga Russakovsky, Li Fei-Fei and Kristen Grauman ISBN: 978-1-68083-212-9 The Path to Path-Traced Movies Per H. Christensen and Wojciech Jarosz ISBN: 978-1-68083-210-5 (Hyper)-Graphs Inference through Convex Relaxations and Move Making Algorithms Nikos Komodakis, M. Pawan Kumar and Nikos Paragios ISBN: 978-1-68083-138-2 A Survey of Photometric Stereo Techniques Jens Ackermann and Michael Goesele ISBN: 978-1-68083-078-1 Multi-View Stereo: A Tutorial Yasutaka Furukawa and Carlos Hernandez ISBN: 978-1-60198-836-2 Publishing and Consuming 3D Content on the Web: A Survey Marco Potenziani Visual Computing Lab, ISTI CNR [email protected] Marco Callieri Visual Computing Lab, ISTI CNR [email protected] Matteo Dellepiane Visual Computing Lab, ISTI CNR [email protected] Roberto Scopigno Visual Computing Lab, ISTI CNR [email protected] Boston - Delft Foundations and Trends® in Computer Graphics and Vision Published, sold and distributed by: now Publishers Inc. PO Box 1024 Hanover, MA 02339 United States Tel. +1-781-985-4510 www.nowpublishers.com [email protected] Outside North America: now Publishers Inc. -

Investigation of the Stroop Effect in a Virtual Reality Environment

Tallinn University of Technology School of Information Technologies Younus Ali 177356IASM Investigation of the Stroop Effect in a Virtual Reality Environment Master’s Thesis Aleksei Tepljakov Ph.D. Research Scientist TALLINN 2020 Declaration of Originality Declaration: I hereby declare that this thesis, my original investigation and achievement, submitted for the Master’s degree at Tallinn University of Technology, has not been submitted for any degree or examination. Younus Ali Date: January 7, 2020 Signature: ......................................... Abstract Human cognitive behavior is an exciting subject to study. In this work, the Stroop effect is investigated. The classical Stroop effect arises as a consequence of cognitive interference due to a mismatch between the written color name and the actual text color. The purpose of this study is to investigate the Stroop effect and the reverse Stroop effect in a virtual reality environment by considering response, error, and subjective selection. An interactive application was implemented with Unreal Engine using virtual reality technology and instruction-based Stroop and reverse Stroop tasks. In the designed test, participants need to throw a cube-shaped object into three specific zones according to instructions. The instructions depend on the color (representing the Stroop test) or the meaning of the words (representing the reverse Stroop test). The instructions use either congruent or incongruent (“blue” displayed in red or green) color stimuli. Participants took more time to respond to the Stroop test than -

Download Chapter

Fuzzy Online Reputation Analysis Framework Edy Portmann Information Systems Research Group, University of Fribourg, Switzerland Tam Nguyen Mixed Reality Lab, National University of Singapore, Singapore Jose Sepulveda Mixed Reality Lab, National University of Singapore, Singapore Adrian David Cheok Mixed Reality Lab, National University of Singapore, Singapore ABSTRACT The fuzzy online reputation analysis framework, or “foRa” (plural of forum, the Latin word for marketplace) framework, is a method for searching the Social Web to find meaningful information about reputation. Based on an automatic, fuzzy-built ontology, this framework queries the social marketplaces of the Web for reputation, combines the retrieved results, and generates navigable Topic Maps. Using these interactive maps, communications operatives can zero in on precisely what they are looking for and discover unforeseen relationships between topics and tags. Thus, using this framework, it is possible to scan the Social Web for a name, product, brand, or combination thereof and determine query-related topic classes with related terms and thus identify hidden sources. This chapter also briefly describes the youReputation prototype (www.youreputation.org), a free web-based application for reputation analysis. In the course of this, a small example will explain the benefits of the prototype. INTRODUCTION The Social Web consists of software that provides online prosumers (combination of producer and consumer) with a free and easy means of interacting or collaborating with each other. Consequently, it is not surprising that the number of people who read Weblogs (or short blogs) at least once a month has grown rapidly in the past few years and is likely to increase further in the foreseeable future. -

In Printnew from UW Press Seattle on the Spot

The University of Washington is engaged in the most ambitious fundraising campaign in our history: Be Boundless - For Washington, For the World. Your support will help make it possible for our students and faculty to tackle the most crucial challenges of our time. Together, we can turn ideas into impact. JOIN US. uw.edu/campaign 2 COLUMNS MAGAZINE MARCH 2018 Gear up for spring! Show your colors and visit University Book Store for the largest and best selection of officially licensed UW gear anywhere. Find it online at ubookstore.com. 1.800.335.READ • 206.634.3400 • ubookstore.com Outfitting Huskies since 1900. Live well. At Mirabella Seattle, our goal is for you to live better longer. With our premium fitness and aquatic centers, complete with spa-style activities and amenities, plus our countless wellness classes, staying active and engaged has never been easier. Let go of age. Embrace healthy. Retire at Mirabella. Experience our incredible community today: 206-254-1441 mirabellaliving.com/seattle Mirabella Seattle is a Pacific Retirement Services community and an equal housing opportunity. HUSKY PICKS FOR PLAY TIME Ouray Asym Redux Hood amazon.com Women's Haachi Crew Sweatshirt Toddler Tops seattleteams.com shop.gohuskies.com Fast Asleep Uniform Pajama fastasleeppjs.com UW Board Book Collegiate Vera Tote Squishable Husky bedbathandbeyond.com verabradley.com squishable.com photographed at University Book Store, children's book section The Fun Zone Husky pups can claim their space and explain the clutter Huskies Home Field The Traveling Team Dawgs from Birth with this quality steel Bring the game to any room Toddlers, toys and tailgating supplies Welcome new Huskies to the sign. -

Enter COMPUSERVE Call 1-800-554-4067 for Free Software and 10 Free Hours

B-1 NetPages™ Business Listings #1 FLOWERS enter COMPUSERVE Call 1-800-554-4067 for free software and 10 free hours. #1 FLOWERS MCNALLY, PETE A. J. LASTER & COMPANY, INC. ABATOR INFORMATION SERVICES, INC ABUNDANT DISCOVERIES http://www.callamer.com/~1flowers/ [email protected] LASTER, ATLAS JR., PH.D. http://www.ibp.com/pit/abator/ http://amsquare.com/abundant/index.html ADVANCED SOFTWARE ENGINEER [email protected] 1-800 MUSIC NOW WALLINGFORD CT USA CONSULTING PSYCHOLOGIST ABB SENAL, S.A. ACADEM CONSULTING SERVICES http://www.1800musicnow.mci.com/ CLAYTON MO USA SANZ, JOSE-MARIA BARBER, STAN SCHALLER, DAVID [email protected] [email protected] 1-800-98-PERFUME [email protected] A.J.S. PRODUCTIONS MADRID SPAIN PROPRIETOR http://www.98perfume.com/ ADVANCED SOFTWARE ENGINEER SHAW, JACK HOUSTON TX USA WALLINGFORD CT USA [email protected] ABC ADS ANERICAN BUSINESS 1-800-COLLECT OWNER CLASSIFIED ACADEMIC INTERNATIONAL PRESS 3M HEALTH INFORMATION SYSTEMS (3M http://www.organic.com/1800collect/index.ht BROOKFIELD WI USA SALKS, DAVIS http://www.gulf.net/~bevon/ HIS) ml http://www.execpc.com/~jshaw [email protected] TANKUS, ED http://www.amaranth.com/aipress/ CLASSIFIED ADVERTISING FOR 1-800-MATTRESS [email protected] A LOCAL REDBOOK FLORIST BUSINESS ACADEMIC SOUTH (THE) http://www.sleep.com/DIAL-A-MATTRESS/ COMPUTER OPERATOR http://www.aflorist.com/ LEESPORT PA USA WALLINGFORD CT USA http://sunsite.unc.edu/doug_m/pages/south 1200 YEARS OF ITALIAN SCULPTURE A+ ON-LINE RESUMES ABC - AUSTRALIAN BROADCASTING /academic.html http://www.thais.it/scultura/scultura.htm 3M HEALTH INFORMATION SYSTEMS (HIS) [email protected] CORPORATION ACADEMY OF MOTION PICTURE ARTS ROSS, MICHAEL G. -

3Dvisa Bulletin Issue 3, September 2007 3D Visualisation in the Arts Network

3D VISA 3DVisA Bulletin Issue 3, September 2007 3D Visualisation in the Arts Network www.viznet.ac.uk/3dvisa Editorial by Anna Bentkowska–Kafel Editorial Featured 3D Method 3D VISUALISATION USING GAME PLATFORMS by Maria Sifniotis “Why is it that we visualise?” There is no common understanding of digital 3D visualisation, nor will there ever be. Computer Vision offers different possibilities 3DVisA Discussion Forum in different creative pursuits. The role of 3DVisA is to give voice to this richness of cognitive and methodological perspectives, and serve as a forum for discussion and exchange of knowledge. Geary This issue focuses on the illusion of reality in virtual representations. The familiar topos about two Greek ILLUSIONS OF VIRTUAL REALITY by Hanna painters: Zeuxis – who fooled birds with his lifelike Buczyńska-Garewicz rendition of grapes, and Parrhasius who in turn deceived his colleague with a depiction of a curtain that Zeuxis tried to draw – sums up the ambition of illusion in Featured 3D Project pictorial representation. In her response to the articles on photorealism published in two preceding issues of VIRTUAL WORLDS, REAL LEARNING? the 3DVisA Bulletin, Daniela Sirbu argues for the recognition of the role of the artist behind 3D computer visualisation. She also considers the debt of digital tools to traditional media, such as painting and photography, News and Reviews and the multiple sensory engagement that is a particular feature of virtual environments. The visual perception, or the Zeuxis effect, is no longer preferential. 3DVisA Student Award – Call for submissions -

Playing with Virtual Reality: Early Adopters of Commercial Immersive Technology

Playing with Virtual Reality: Early Adopters of Commercial Immersive Technology Maxwell Foxman Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy under the Executive Committee of the Graduate School of Arts and Sciences COLUMBIA UNIVERSITY 2018 © 2018 Maxwell Foxman All rights reserved ABSTRACT Playing with Virtual Reality: Early Adopters of Commercial Immersive Technology Maxwell Foxman This dissertation examines early adopters of mass-marketed Virtual Reality (VR), as well as other immersive technologies, and the playful processes by which they incorporate the devices into their lives within New York City. Starting in 2016, relatively inexpensive head-mounted displays (HMDs) were manufactured and distributed by leaders in the game and information technology industries. However, even before these releases, developers and content creators were testing the devices through “development kits.” These de facto early adopters, who are distinctly commercially-oriented, acted as a launching point for the dissertation to scrutinize how, why and in what ways digital technologies spread to the wider public. Taking a multimethod approach that combines semi-structured interviews, two years of participant observation, media discourse analysis and autoethnography, the dissertation details a moment in the diffusion of an innovation and how publicity, social forces and industry influence adoption. This includes studying the media ecosystem which promotes and sustains VR, the role of New York City in framing opportunities and barriers for new users, and a description of meetups as important communities where devotees congregate. With Game Studies as a backdrop for analysis, the dissertation posits that the blurry relationship between labor and play held by most enthusiasts sustains the process of VR adoption. -

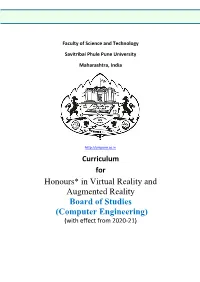

Honours* in Virtual Reality and Augmented Reality Board of Studies (Computer Engineering) (With Effect from 2020-21)

Faculty of Science and Technology Savitribai Phule Pune University Maharashtra, India http://unipune.ac.in Curriculum for Honours* in Virtual Reality and Augmented Reality Board of Studies (Computer Engineering) (with effect from 2020-21) Savitribai Phule Pune University Honours* in Virtual Reality and Augmented Reality With effect from 2020-21 Course Code and Teaching Examination Scheme Credit Scheme Course Title Scheme and Marks Hours / Week Year & Semester & Year Semester Semester Theory - - Tutorial Practical Total Marks Total Presentation Practical Practical Credit Total Term work Term Theory / Theory Tutorial End Mid TE 310701 Virtual Reality 04 -- -- 30 70 -- -- -- 100 04 -- 04 & V 310702 Virtual Reality -- -- 02 -- -- 50 -- -- 50 -- 01 01 Laboratory Total 04 - 02 100 50 - - 150 04 01 05 Total Credits = 05 TE 310703 Augmented Reality 04 -- -- 30 70 -- -- -- 100 04 -- 04 & Total 04 - - 100 - - - 100 04 - 04 VI Total Credits = 04 BE 410701 Virtual Reality 04 -- -- 30 70 -- -- -- 100 04 -- 04 & in Game VII Development 410702 Virtual Reality -- -- 02 -- -- 50 -- -- 50 -- 01 01 Game Development Laboratory Total 04 - 02 100 50 - - 150 04 01 05 Total Credits = 05 BE 410703 Application 04 - -- 30 70 -- -- -- 100 04 -- 04 & Development using VIII Augmented Reality and Virtual Reality 410704 Seminar -- 02 -- -- -- - -- 50 50 02 -- 02 Total 04 - 02 100 - 50 150 06 - 06 Total Credits = 06 Total Credit for Semester V+ VI+ VII+ VIII = 20 * To be offered as Honours for Major Disciplines as– 1. Computer Engineering 2. Electronics and Telecommunication Engineering 3. Electronics Engineering 4. Information Technology For any other Major Disciplines which is not mentioned above, it may be offered as Minor Degree. -

GRAPHICS ACCELERATOR Features System Overview

GRAPHICS ACCELERATOR Preliminary Data Sheet Features ■ Video interface — Shared frame buffer interface for multimedia The ARTIST Graphics 3GA graphics accelerator is a 64 bit, — Supports S3 Vision/VA full screen video with a state-of-the-art 3D graphics processor designed to accelerate shared frame buffer graphical user interfaces, 2D and 3D vector drawing, and 3D ■ Flexible local memory rendering. — 64 bit wide memory bus ■ High performance 3D graphics processor — VRAM block write support — 16 bit Z-buffer for automatic 3D hidden surface — Supports 1 to 4 megabytes VRAM display memory removal — Supports 0 to 8 megabytes of off screen DRAM for — Secondary 16 bit Z-buffer for arbitrary frontal Z Z-buffers, fonts, etc. clipping ■ Single chip accelerator — Gouraud shading for realistic 3D rendering — On-chip 32 bit VGA for fast DOS performance — Dithering for superior shading at 8 and 16 bits per — Support for Microsoft Plug & Play ISA specification pixel — Interfaces directly to VESA VL-Bus and PCI with — Texture mapping to map 2D textures onto 3D no glue logic surfaces — Interfaces to ISA bus with two 74F245 buffers — Six arithmetic raster operations (Add, Add with — 128 byte host FIFO for passing data and commands Saturation, Sub, Sub with Saturation, Min, Max) — 240 pin plastic quad flat pack ■ Powerful 2D GUI accelerator — 50 MHz clock frequency — Accelerated drawing at 8, 16, 24 and 32 bits per ■ Software drivers pixel — Microsoft Windows 3.1, Windows NT and — 256 Microsoft Windows raster operations in Windows ‘95 (when released) -

(Surreal): Software to Simulate Surveying in Virtual Reality

International Journal of Geo-Information Article Surveying Reality (SurReal): Software to Simulate Surveying in Virtual Reality Dimitrios Bolkas 1,*, Jeffrey Chiampi 2 , Joseph Fioti 3 and Donovan Gaffney 3 1 Department of Surveying Engineering, Pennsylvania State University, Wilkes-Barre Campus, Dallas, PA 18612, USA 2 Department of Engineering, Pennsylvania State University, Wilkes-Barre Campus, Dallas, PA 18612, USA; [email protected] 3 Department of Computer Science and Engineering, Pennsylvania State University, University Park, State College, PA 16801, USA; [email protected] (J.F.); [email protected] (D.G.) * Correspondence: [email protected] Abstract: Experiential learning through outdoor labs is an integral component of surveying education. Cancelled labs as a result of weather, the inability to visit a wide variety of terrain location, recent distance education requirements create significant instructional challenges. In this paper, we present a software solution called surveying reality (SurReal); this software allows for students to conduct immersive and interactive virtual reality labs. This paper discusses the development of a virtual differential leveling lab. The developed software faithfully replicates major steps followed in the field and any skills learned in virtual reality are transferable in the physical world. Furthermore, this paper presents a novel technique for leveling multi-legged objects like a tripod on variable terrain. This method relies solely on geometric modeling and does not require physical simulation of the tripod. This increases efficiency and ensures that the user experiences at least 60 frames per second, thus reducing lagging and creating a pleasant experience for the user. We conduct two leveling Citation: Bolkas, D.; Chiampi, J.; Fioti, J.; Gaffney, D. -

Soul of Wars Video Game

UNIVERSIDAD NACIONAL AUTÓNOMA DE NICARAGUA, MANAGUA RECINTO UNIVERSITARIO “RUBÉN DARÍO” FACULTY OF SCIENCES AND ENGINEERING DEPARTMENT OF COMPUTING TOPIC: Video Game Programming SUBTOPIC: “Development of a third-person-perspective video game in a 3D environment for PC, on the Windows platform, in the first semester of 2013” PRESENTED BY: Br. José Ramón Duran Ramírez Br. Meyling A. Lara Narváez Br. Jaeddson Jeannick Sánchez Arana ADVISOR: Msc. Juan De Dios Bonilla MANAGUA, NICARAGUA OCTOBER 31, 2013 Dedication We dedicate this project to our parents, for their patience and unconditional support, for providing the means to see this learning process through, and for being a symbol of perseverance and dedication; to our friends and classmates, for motivating us with their energy; and to everyone else who directly or indirectly made the completion of this stage possible. Acknowledgments This project brings to a close the long journey that has been the Licenciatura en Computación degree, and with these lines we wish to express our gratitude for all the support we received in reaching the end. First, we thank God for our health and for giving us the strength to keep going each day. To our parents, for all their love and unconditional support. To Msc. Juan de Dios Bonilla, project advisor, for all his advice and the time he invested in helping us complete this final degree project successfully. To Darwin Rocha for his support throughout the project. To Lic. Luis Miguel Martínez for his collaboration on the methodological development of the project. And to everyone else who made it possible to bring this degree project to a successful conclusion.