The Chessbrain Project – Massively Distributed Inhomogeneous Speed-Critical Computation

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications


General Rule Prohibiting Disruptive Trading Practices CME Rule 575

CME AND ICE DISRUPTIVE TRADING PRACTICES RULES SUMMARY

General Rule Prohibiting Disruptive Trading Practices

CME Rule and Guidance: CME Rule 575. All orders must be entered for the purpose of executing bona fide transactions. Additionally, all non-actionable messages must be entered in good faith for legitimate purposes. Intent: Rule 575 applies to

ICE Rule and Guidance: ICE Rule 4.02(I)(2). In connection with the placement of any order or execution of any transaction, it is a violation for any person to knowingly enter any bid or offer for the purpose of making a market price which does not reflect the true state of the market, or

Guidance Common to CME and ICE: The CME and ICE FAQs include a list of factors that will be considered in assessing a potential violation of the disruptive trading practices. The factors are very similar across both CME and ICE. A market participant is not prohibited from making a two-sided market with unequal quantities as long as both orders are entered to execute bona fide transactions.iv

CFTC Guidance: Section 4c(a)(5) of the Commodity Exchange Act (“CEA”) provides that: “it shall be unlawful for any person to engage in any trading practice, or conduct on or subject to the rules of a registered entity that – (A) violates bids or offers; (B) demonstrates intentional or reckless disregard for the orderly execution of

CWT Comments: Although the CME and ICE rules do not expressly address the CEA’s prohibition on violating bids and offers, the ICE and CME trading functionality should prevent this type of conduct. The exchanges may find more generally that violating bids or offers is prohibited by the exchanges’ disruptive trading practices rules.

The Queen's Gambit

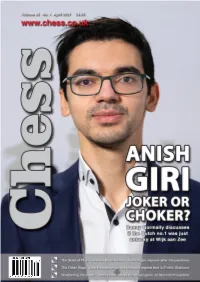

Chess (April 2021): Contents

Editorial, page 4: Malcolm Pein on the latest developments in the game
60 Seconds with...Geert van der Velde, page 7: We catch up with the Play Magnus Group’s VP of Content
A Tale of Two Players, page 8: Wesley So shone while Carlsen struggled at the Opera Euro Rapid
Anish Giri: Choker or Joker?, page 14: Danny Gormally discusses if the Dutch no.1 was just unlucky at Wijk
How Good is Your Chess?, page 18: Daniel King also takes a look at the play of Anish Giri
The Other Saga, page 22: John Henderson very much

Founding Editor: B.H. Wood, OBE. M.Sc †
Executive Editor: Malcolm Pein
Editors: Richard Palliser, Matt Read
Associate Editor: John Saunders
Subscriptions Manager: Paul Harrington

Chess Magazine (ISSN 0964-6221) is published by: Chess & Bridge Ltd, 44 Baker St, London, W1U 7RT
Tel: 020 7486 7015
Email: [email protected], Website: www.chess.co.uk
Twitter: @CHESS_Magazine
Twitter: @TelegraphChess - Malcolm Pein
Twitter: @chessandbridge

Subscription Rates:

Glossary of Chess

Glossary of chess

See also: Glossary of chess problems, Index of chess articles and Outline of chess

This page explains commonly used terms in chess in alphabetical order. Some of these have their own pages, like fork and pin. For a list of unorthodox chess pieces, see Fairy chess piece; for a list of terms specific to chess problems, see Glossary of chess problems; for a list of chess-related games, see Chess variants.

Contents: A • B • C • D • E • F • G • H • I • J • K • L • M • N • O • P • Q • R • S • T • U • V • W • X • Z • References

A

absolute pin: A pin against the king is called absolute since the pinned piece cannot legally move (as moving it would expose the king to check). Cf. relative pin.

active: 1. Describes a piece that controls a number of squares, or a piece that has a number of squares available for its next move. 2. An “active defense” is a defense employing threat(s) or counterattack(s). Antonym: passive.

adjournment: Suspension of a chess game with the intention to finish it later. It was once very common in high-level competition, often occurring soon after the first time control, but the practice has been abandoned due to the advent of computer analysis. See sealed move.

[Image caption: Envelope used for the adjournment of a match game Efim Geller vs. Bent Larsen, Copenhagen 1966]

adjudication: Decision by a strong chess player (the adjudicator) on the outcome of an unfinished game. This practice is now uncommon in over-the-board events, but does happen in online chess when one player refuses to continue after an adjournment.

B

are often pawn moves; since pawns cannot move backwards to return to squares they have left, their

July 2021 COLORADO CHESS INFORMANT

COLORADO STATE CHESS ASSOCIATION
COLORADO CHESS INFORMANT
Volume 48, Number 3, July 2021

BACK TO “NEARLY NORMAL” OVER-THE-BOARD PLAY

From the Editor

Slowly, we are getting there... Only fitting that the return to over-the-board tournament play in Colorado resumed with the Senior Open this year. If you recall, that was the last over-the-board tournament held before we went into lockdown last year. You can read all about this year’s offering on page 12.

The Denver Chess Club has resumed activity (read the resumptive result on page 8) with their first OTB tourney - and it went like gang-busters. So good to see. They are also resuming their club play in August. Check out the latest with them at www.DenverChess.com.

And if you look at the back cover you will see that the venerable Colorado Open has returned in all its splendor! Somehow I think that this may be the largest turnout so far - just a hunch on my part as players in this state are so hungry to resume the tour-

The Colorado State Chess Association, Incorporated, is a Section 501(C)(3) tax exempt, non-profit educational corporation formed to promote chess in Colorado. Contributions are tax deductible.

Dues are $15 a year. Youth (under 20) and Senior (65 or older) memberships are $10. Family memberships are available to additional family members for $3 off the regular dues. Scholastic tournament membership is available for $3.

● Send address changes to - Attn: Alexander Freeman to the email address [email protected].
● Send pay renewals & memberships to the CSCA.

Merit Badge Workbook This Workbook Can Help You but You Still Need to Read the Merit Badge Pamphlet

Chess Merit Badge Workbook

This workbook can help you but you still need to read the merit badge pamphlet. This Workbook can help you organize your thoughts as you prepare to meet with your merit badge counselor. You still must satisfy your counselor that you can demonstrate each skill and have learned the information. You should use the work space provided for each requirement to keep track of which requirements have been completed, and to make notes for discussing the item with your counselor, not for providing full and complete answers. If a requirement says that you must take an action using words such as "discuss", "show", "tell", "explain", "demonstrate", "identify", etc, that is what you must do. Merit Badge Counselors may not require the use of this or any similar workbooks. No one may add or subtract from the official requirements found in Scouts BSA Requirements (Pub. 33216 – SKU 653801).

The requirements were last issued or revised in 2013 • This workbook was updated in June 2020.

Scout’s Name: ____________________ Unit: ____________________
Counselor’s Name: ____________________ Phone No.: ____________________ Email: ____________________

http://www.USScouts.Org • http://www.MeritBadge.Org

Please submit errors, omissions, comments or suggestions about this workbook to: [email protected]
Comments or suggestions for changes to the requirements for the merit badge should be sent to: [email protected]

1. Discuss with your merit badge counselor the history of the game of chess. Explain why it is considered a game of planning and strategy.
2. Discuss with your merit badge counselor the following:
a.

Chess Merit Badge

Chess Merit Badge
Troop 344 & 9344, Pemberville, OH

Requirements

1. Discuss with your merit badge counselor the history of the game of chess. Explain why it is considered a game of planning and strategy.
2. Discuss with your merit badge counselor the following:
a. The benefits of playing chess, including developing critical thinking skills, concentration skills, and decision-making skills, and how these skills can help you in other areas of your life
b. Sportsmanship and chess etiquette
3. Demonstrate to your counselor that you know each of the following. Then, using Scouting’s Teaching EDGE, teach someone (preferably another Scout) who does not know how to play chess:
a. The name of each chess piece
b. How to set up a chessboard
c. How each chess piece moves, including castling and en passant captures
4. Do the following:
a. Demonstrate scorekeeping using the algebraic system of chess notation.
b. Discuss the differences between the opening, the middle game, and the endgame.
c. Explain four opening principles.
d. Explain the four rules for castling.
e. On a chessboard, demonstrate a "scholar's mate" and a "fool's mate."
f. Demonstrate on a chessboard four ways a chess game can end in a draw.
5. Do the following:
a. Explain four of the following elements of chess strategy: exploiting weaknesses, force, king safety, pawn structure, space, tempo, time.
b. Explain any five of these chess tactics: clearance sacrifice, decoy, discovered attack, double attack, fork, interposing, overloading, overprotecting, pin, remove the defender, skewer, zwischenzug.
c. Set up a chessboard with the white king on e1, the white rooks on a1 and h1, and the black king on e5.

Chess Merit Badge Requirements

BOY SCOUTS OF AMERICA MERIT BADGE SERIES

CHESS (STEM-Based)

“Enhancing our youths’ competitive edge through merit badges”

Requirements

1. Discuss with your merit badge counselor the history of the game of chess. Explain why it is considered a game of planning and strategy.
2. Discuss with your merit badge counselor the following:
a. The benefits of playing chess, including developing critical thinking skills, concentration skills, and decision-making skills, and how these skills can help you in other areas of your life
b. Sportsmanship and chess etiquette
3. Demonstrate to your counselor that you know each of the following. Then, using Scouting’s Teaching EDGE*, teach someone (preferably another Scout) who does not know how to play chess:
a. The name of each chess piece
b. How to set up a chessboard
c. How each chess piece moves, including castling and en passant captures
4. Do the following:
a. Demonstrate scorekeeping using the algebraic system of chess notation.
b. Discuss the differences between the opening, the middle game, and the endgame.
c. Explain four opening principles.
d. Explain the four rules for castling.
e. On a chessboard, demonstrate a “scholar’s mate” and a “fool’s mate.”
f. Demonstrate on a chessboard four ways a chess game can end in a draw.
5. Do the following:
a. Explain four of the following elements of chess strategy: exploiting weaknesses, force, king safety, pawn structure, space, tempo, time.

* You may learn about Scouting’s Teaching EDGE from your unit leader, another Scout, or by attending training.

35973, ISBN 978-0-8395-0000-1, ©2016 Boy Scouts of America, 2016 Printing

Chess Curriculum - All Levels (1-20)

Chess Curriculum - All Levels (1-20)

Level 1: History of Chess and Introduction to Chessboard Saying Hello: King, Queen and Pawn Saying Hello: Rook, Bishop and Knight Check, Checkmate and Stalemate Coordinating pieces for a goal

Level 2: Record capture with pieces and pawn Recording ambiguous moves Attack and Defense Recording in a scoresheet Checkmate with QR rollers Checkmate in one with Q Checkmate in one with R Checkmate in one with B Checkmate in one with N Checkmate in one with pawn Misc Checkmate in one Exchange of pieces of same value Counting Attack and defense Capture free piece Special moves: castling, en passant, promotion, underpromotion 4 Queens / 8 Queens Problem

Level 3: Three phases of the game Idea behind chess moves: basics Tactics overview Pin: Learn to pin, escape from pin Skewer Fork Analyzing recorded games Pawn Games

Level 4: Discovered attack Discovered check Double Check Ideas to develop the Queen Basic checkmate patterns Short Games with quick checkmates

Level 5: Pinned piece does not Protect Illusionary pins Traps Opening principles: basics Controlling the centre Rapid development Selecting the right moves or the candidate moves Play games and explain the ideas behind the moves

Level 6: Overloading / overworked piece Remove the guard Decoy Deflection Take the right capture Tactics identification in games Combination of tactics Mate in two

Level 7: Rook mates vs lone king in Ending Short break Zugzwang Playing with the King Intro to king and pawn ending Pawn play strategy Pawn box

Level 8: Playing pawn endings Opposition

Merit Badge Workbook This Workbook Can Help You but You Still Need to Read the Merit Badge Pamphlet

Chess Merit Badge Workbook

This workbook can help you but you still need to read the merit badge pamphlet. This Workbook can help you organize your thoughts as you prepare to meet with your merit badge counselor. You still must satisfy your counselor that you can demonstrate each skill and have learned the information. You should use the work space provided for each requirement to keep track of which requirements have been completed, and to make notes for discussing the item with your counselor, not for providing full and complete answers. If a requirement says that you must take an action using words such as "discuss", "show", "tell", "explain", "demonstrate", "identify", etc, that is what you must do. Merit Badge Counselors may not require the use of this or any similar workbooks. No one may add or subtract from the official requirements found in Boy Scout Requirements (Pub. 33216 – SKU 637685).

The requirements were last issued or revised in 2013 • This workbook was updated in March 2018.

Scout’s Name: ____________________ Unit: ____________________
Counselor’s Name: ____________________ Counselor’s Phone No.: ____________________

http://www.USScouts.Org • http://www.MeritBadge.Org

Please submit errors, omissions, comments or suggestions about this workbook to: [email protected]
Comments or suggestions for changes to the requirements for the merit badge should be sent to: [email protected]

1. Discuss with your merit badge counselor the history of the game of chess. Explain why it is considered a game of planning and strategy.
2. Discuss with your merit badge counselor the following:
a.

Guide to Best Practices in Ocean Acidification Research

EUROPEAN COMMISSION, Research & Innovation, Environment

Guide to best practices for ocean acidification research and data reporting

Studies and reports, EUR 24872 EN

EUROPEAN COMMISSION, Directorate-General for Research and Innovation, Directorate I – Environment, Unit I.4 – Climate change and natural hazards

Contact: Paola Agostini, European Commission, Office CDMA 03/124, B-1049 Brussels. Tel. +32 2 29 78610, Fax +32 2 29 95755, E-mail: [email protected]

Contact: Ulf Riebesell, IFM-GEOMAR, Leibniz Institute of Marine Sciences, Düsternbrooker Weg 20, 24105 Kiel, Germany. E-mail: [email protected]

Contact: Jean-Pierre Gattuso, Laboratoire d’Océanographie, CNRS-INSU and Université Pierre et Marie Curie, B.P. 28, 06234 Villefranche-sur-mer Cedex, France. E-mail: [email protected]

www.epoca-project.eu

Cover photo: © David Luquet - http://www.davidluquet.com/

Reprint August 2011

EPOCA, in cooperation with: EUROPEAN COMMISSION

Guide to best practices for ocean acidification research and data reporting, edited by Ulf Riebesell 1, Victoria J. Fabry 2, Lina Hansson 3 and Jean-Pierre Gattuso 4

1 IFM-GEOMAR, Leibniz Institute of Marine Sciences, Kiel, Germany
2 California State University San Marcos, San Marcos, USA
3 Laboratoire d’Océanographie, CNRS-INSU and Université Pierre et Marie Curie, Villefranche-sur-mer, France
4 Coordinator of EPOCA, Laboratoire d’Océanographie, CNRS-INSU and Université Pierre et Marie Curie, Villefranche-sur-mer, France

Directorate-General for Research and Innovation, Environment, 2011, EUR 24872 EN

EUROPE DIRECT is a service to help you find answers to your questions about the European Union. Freephone number (*): 00 800 6 7 8 9 10 11
(*) Certain mobile telephone operators do not allow access to 00 800 numbers or these calls may be billed

LEGAL NOTICE: Neither the European Commission nor any person acting on behalf of the Commission is responsible for the use which might be made of the following information.

A Survey of NOMA: Current Status and Open Research Challenges

A Survey of NOMA: Current Status and Open Research Challenges

Behrooz Makki, Senior Member, IEEE, Krishna Chitti, Ali Behravan and Mohamed-Slim Alouini, Fellow, IEEE

Abstract—Non-orthogonal multiple access (NOMA) has been considered as a study-item in 3GPP for 5G new radio (NR). However, it was decided not to continue with it as a work-item, and to leave it for possible use in beyond 5G. In this paper, we first review the discussions that ended in such decision. Particularly, we present simulation comparisons between the NOMA and multi-user multiple-input-multiple-output (MU-MIMO), where the possible gain of NOMA, compared to MU-MIMO, is negligible. Then, we propose a number of methods to reduce the implementation complexity and delay of both uplink (UL) and downlink (DL) NOMA-based transmission, as different ways to improve its efficiency. Here, particular attention is paid to reducing the receiver complexity, the cost of hybrid automatic repeat request as well as the user pairing complexity.

Different schemes have been proposed for NOMA including, power domain NOMA [3], SCMA (SC: sparse code) [4], [5], PDMA (PD: pattern division) [6], RSMA (RS: resource spread) [7], multi-user shared access (MUSA) [8], IGMA (IG: interleave-grid) [9], Welch-bound equality spread multiple access (WSMA) [10], [11], and IDMA (ID: interleave-division) [12]. These techniques follow the superposition principle and, along with differences in bit- and symbol-level NOMA implementation, the main difference among them is the UEs’ signature design which is based on spreading, coding, scrambling, or interleaving distinctness.

Solving: the Role of Teaching Heuristics in Transfer of Learning

Eurasia Journal of Mathematics, Science & Technology Education, 2016, 12(3), 655-668

Chess Training and Mathematical Problem-Solving: The Role of Teaching Heuristics in Transfer of Learning

Roberto Trinchero, University of Turin, ITALY
Giovanni Sala, University of Liverpool, UK

Received 27 October 2015; Revised 29 December 2015; Accepted 10 January 2016

Recently, chess in school activities has attracted the attention of policy makers, teachers and researchers. Chess has been claimed to be an effective tool to enhance children’s mathematical skills. In this study, 931 primary school pupils were recruited and then assigned to two treatment groups attending chess lessons, or to a control group, and were tested on their mathematical problem-solving abilities. The two treatment groups differed from each other on the teaching method adopted: The trainers of one group taught the pupils heuristics to solve chess problems, whereas the trainers of the other treatment group did not teach any chess-specific problem-solving heuristic. Results showed that the former group outperformed the other two groups. These results foster the hypothesis that a specific type of chess training does improve children’s mathematical skills, and uphold the idea that teaching general heuristics can be an effective way to promote transfer of learning.

Keywords: chess; heuristics; mathematics; STEM education; transfer

INTRODUCTION

Recently, pupils’ poor achievement in mathematics has been the subject of debate both in the United States (Hanushek, Peterson, & Woessmann, 2012; Richland, Stigler, & Holyoak, 2012) and in Europe (Grek, 2009). The current market requires more graduates in Science, Technology, Engineering, and Mathematics (STEM) subjects than graduates in the humanities.