Simultaneous Multithreading

Recommended publications

High Performance Computing Through Parallel and Distributed Processing

Yadav S. et al., J. Harmoniz. Res. Eng., 2013, 1(2), 54-64. Journal Of Harmonized Research (JOHR), Journal Of Harmonized Research in Engineering, ISSN 2347 – 7393. Original Research Article.

High Performance Computing through Parallel and Distributed Processing. Shikha Yadav, Preeti Dhanda, Nisha Yadav, Department of Computer Science and Engineering, Dronacharya College of Engineering, Khentawas, Farukhnagar, Gurgaon, India. For correspondence: preeti.dhanda01ATgmail.com. Received on: October 2013.

Abstract: There is a very high need for High Performance Computing (HPC) in many applications, from space science to Artificial Intelligence. HPC can be attained through Parallel and Distributed Computing. In this paper, Parallel and Distributed algorithms are discussed based on Parallel and Distributed Processors to achieve HPC. Programming concepts such as threads, fork and sockets are discussed with some simple examples for HPC.

Keywords: High Performance Computing, Parallel and Distributed processing, Computer Architecture

Introduction: Computer Architecture and Programming play a significant role for High Performance Computing (HPC) in large applications, from space science to Artificial Intelligence. Algorithms are problem-solving procedures, and these algorithms are later translated into a particular programming language for HPC. There is a need to study algorithms for High Performance Computing. These algorithms are to be designed to compute in reasonable time to solve large problems like weather forecasting, Tsunami, Remote Sensing, National calamities, Defence, Mineral exploration, Finite-element, Cloud Computing, and Expert Systems etc. The algorithms are Non-Recursive Algorithms, Recursive Algorithms, Parallel Algorithms and Distributed Algorithms. The algorithms must be supported by the Computer Architecture. Computer Architecture is characterized by Flynn’s Classification: SISD, SIMD, MIMD, and MISD. Most of the Computer Architectures are supported with SIMD (Single Instruction Multiple Data Streams).

2.5 Classification of Parallel Computers

2.5.1 Granularity

In parallel computing, granularity means the amount of computation in relation to communication or synchronisation. Periods of computation are typically separated from periods of communication by synchronization events.

• fine level (same operations with different data)
  ◦ vector processors
  ◦ instruction level parallelism
  ◦ fine-grain parallelism:
    – Relatively small amounts of computational work are done between communication events
    – Low computation to communication ratio
    – Facilitates load balancing
    – Implies high communication overhead and less opportunity for performance enhancement
    – If granularity is too fine it is possible that the overhead required for communications and synchronization between tasks takes longer than the computation.
• operation level (different operations simultaneously)
• problem level (independent subtasks)
  ◦ coarse-grain parallelism:
    – Relatively large amounts of computational work are done between communication/synchronization events
    – High computation to communication ratio
    – Implies more opportunity for performance increase
    – Harder to load balance efficiently

2.5.2 Hardware: Pipelining (was used in supercomputers, e.g. Cray-1)

With N elements in the pipeline and L clock cycles for each element, the calculation takes L + N cycles; without a pipeline it takes L ∗ N cycles.

Example of good code for pipelining:

    do i = 1, k
      z(i) = x(i) + y(i)
    end do

Vector processors: fast vector operations (operations on arrays). The previous example is also good for a vector processor (vector addition), but recursion, for example, is hard to optimise for vector processors.

Example: Intel MMX – simple vector processor.
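The cycle counts quoted for pipelining can be checked with a short calculation. This is a minimal sketch that assumes an idealized pipeline with no stalls; the function names are illustrative and do not come from the slides. It uses the slides' estimate of L + N cycles (a stricter count for a pipeline that delivers one result per cycle after the first would be L + N - 1).

```python
def cycles_pipelined(n_elements: int, latency: int) -> int:
    """Slides' estimate for a pipelined unit: L + N cycles."""
    return latency + n_elements


def cycles_unpipelined(n_elements: int, latency: int) -> int:
    """Without a pipeline every element pays the full latency: L * N cycles."""
    return latency * n_elements


def pipeline_speedup(n_elements: int, latency: int) -> float:
    """Ratio of unpipelined to pipelined cycle counts."""
    return cycles_unpipelined(n_elements, latency) / cycles_pipelined(n_elements, latency)


if __name__ == "__main__":
    # Assumed latency of 4 cycles per element, chosen only for illustration.
    for n in (1, 10, 1000):
        print(n, cycles_pipelined(n, 4), cycles_unpipelined(n, 4),
              round(pipeline_speedup(n, 4), 3))
```

Under this model the ratio L ∗ N / (L + N) approaches L as N grows, which is why long loops over independent elements, such as the vector addition above, suit a pipeline well.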

Massively Parallel Computing with CUDA

Massively Parallel Computing with CUDA. Antonino Tumeo, Politecnico di Milano

"GPUs have evolved to the point where many real world applications are easily implemented on them and run significantly faster than on multi-core systems. Future computing architectures will be hybrid systems with parallel-core GPUs working in tandem with multi-core CPUs." Jack Dongarra, Professor, University of Tennessee; Author of “Linpack”

Why Use the GPU?
• The GPU has evolved into a very flexible and powerful processor:
  • It’s programmable using high-level languages
  • It supports 32-bit and 64-bit floating point IEEE-754 precision
  • It offers lots of GFLOPS
• GPU in every PC and workstation

What is behind such an Evolution?
• The GPU is specialized for compute-intensive, highly parallel computation (exactly what graphics rendering is about)
• So, more transistors can be devoted to data processing rather than data caching and flow control
  [Figure: CPU and GPU block diagrams showing Control, ALU, Cache and DRAM]
• The fast-growing video game industry exerts strong economic pressure that forces constant innovation

GPUs
• Each NVIDIA GPU has 240 parallel cores
• Within each core
  • Floating point unit
  • Logic unit (add, sub, mul, madd)
  • Move, compare unit
  • Branch unit
• Cores managed by thread manager
  • Thread manager can spawn and manage 12,000+ threads per core
  • Zero overhead thread switching
[Figure labels: NVIDIA GPU; 1.4 Billion Transistors; 1 Teraflop of processing power]

Heterogeneous Computing Domains
[Figure: GPU: Graphics, Massive Data Parallelism (Parallel Computing); CPU: Instruction Level (Sequential ...)]

Parallel Computer Architecture

Parallel Computer Architecture. Introduction to Parallel Computing, CIS 410/510, Department of Computer and Information Science, University of Oregon, IPCC. Lecture 2 – Parallel Architecture

Outline
• Parallel architecture types
• Instruction-level parallelism
• Vector processing
• SIMD
• Shared memory
  ◦ Memory organization: UMA, NUMA
  ◦ Coherency: CC-UMA, CC-NUMA
• Interconnection networks
• Distributed memory
• Clusters
• Clusters of SMPs
• Heterogeneous clusters of SMPs

Parallel Architecture Types
• Uniprocessor
  – Scalar processor
  – Vector processor
  – Single Instruction Multiple Data (SIMD)
• Shared Memory Multiprocessor (SMP)
  – Shared memory address space
  – Bus-based memory system
  – Interconnection network
[Figures: block diagrams of processors, memories, buses and networks for each type]

Parallel Architecture Types (2)
• Distributed Memory Multiprocessor
  – Message passing between nodes
  – Massively Parallel Processor (MPP)
    • Many, many processors
• Cluster of SMPs
  – Shared memory addressing within SMP node
  – Message passing between SMP nodes
  – Can also be regarded as MPP if processor number is large
[Figures: processors (P) and memories (M) connected through network interfaces and an interconnection network]

A Review of Multicore Processors with Parallel Programming

International Journal of Engineering Technology, Management and Applied Sciences, www.ijetmas.com, September 2015, Volume 3, Issue 9, ISSN 2349-4476

A Review of Multicore Processors with Parallel Programming
Anchal Thakur, Research Scholar, CSE Department, L.R Institute of Engineering and Technology, Solan, India
Ravinder Thakur, Assistant Professor, CSE Department, L.R Institute of Engineering and Technology, Solan, India

ABSTRACT: When computers were first introduced in the market, they came with single processors, which limited their performance and efficiency. The classic way of overcoming the performance issue was to use bigger processors to process data at higher speed. Big processors did improve performance to a certain extent, but they consumed a lot of power, which started overheating the internal circuits. To achieve efficiency and speed simultaneously, CPU architects developed multicore processor units in which two or more processors are used to execute the task. Multicore technology offered better response time while running big applications, better power management and faster execution time. Multicore processors also gave developers an opportunity to use parallel programming to execute tasks in parallel. These days parallel programming is used to execute a task by dividing it into smaller sets of instructions and executing them on different cores. By using parallel programming, the complex tasks that are carried out in a multicore environment can be executed with higher efficiency and performance.

Keywords: Multicore Processing, Multicore Utilization, Parallel Processing.

INTRODUCTION: Since the day computers were invented, great importance has been given to their efficiency in executing tasks.

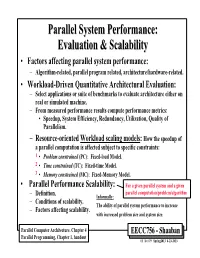

Parallel System Performance: Evaluation & Scalability

Parallel System Performance: Evaluation & Scalability

• Factors affecting parallel system performance:
  – Algorithm-related, parallel program related, architecture/hardware-related.
• Workload-Driven Quantitative Architectural Evaluation:
  – Select applications or suite of benchmarks to evaluate architecture either on real or simulated machine.
  – From measured performance results compute performance metrics:
    • Speedup, System Efficiency, Redundancy, Utilization, Quality of Parallelism.
  – Resource-oriented Workload scaling models: How the speedup of a parallel computation is affected subject to specific constraints:
    1. Problem constrained (PC): Fixed-load Model.
    2. Time constrained (TC): Fixed-time Model.
    3. Memory constrained (MC): Fixed-Memory Model.
• Parallel Performance Scalability: For a given parallel system and a given parallel computation/problem/algorithm
  – Definition. Informally: the ability of parallel system performance to increase with increased problem size and system size.
  – Conditions of scalability.
  – Factors affecting scalability.

Parallel Computer Architecture, Chapter 4; Parallel Programming, Chapter 1, handout #1. EECC756 - Shaaban, lec # 9, Spring 2013, 4-23-2013

Parallel Program Performance
• Parallel processing goal is to maximize speedup (fixed problem size speedup):

$$\text{Speedup} = \frac{\text{Time}(1)}{\text{Time}(p)} < \frac{\text{Sequential Work}}{\max\,(\text{Work} + \text{Synch Wait Time} + \text{Comm Cost} + \text{Extra Work})}$$

  where the maximum is taken over all processors, and the synchronization wait time, communication cost and extra work are the parallelizing overheads.
• By:
  1. Balancing computations/overheads (workload) on processors
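The speedup bound in this excerpt can be evaluated for a concrete set of per-processor costs. The sketch below is illustrative only: the class name `ProcessorCost`, the function names and the sample numbers are assumptions, and the efficiency function uses the standard definition (speedup divided by processor count), which the excerpt names but does not spell out.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class ProcessorCost:
    """Time spent by one processor, split as in the speedup bound."""
    work: float
    synch_wait: float
    comm_cost: float
    extra_work: float

    def total(self) -> float:
        return self.work + self.synch_wait + self.comm_cost + self.extra_work


def speedup_bound(sequential_work: float, costs: Sequence[ProcessorCost]) -> float:
    """Sequential work divided by the slowest processor's total time."""
    return sequential_work / max(c.total() for c in costs)


def efficiency(speedup: float, n_processors: int) -> float:
    """Standard definition: speedup per processor."""
    return speedup / n_processors


if __name__ == "__main__":
    # Hypothetical measurements for 4 processors sharing 100 units of work.
    costs = [
        ProcessorCost(25, 2, 1, 0),
        ProcessorCost(25, 0, 3, 1),
        ProcessorCost(25, 0, 0, 0),
        ProcessorCost(25, 1, 1, 1),
    ]
    bound = speedup_bound(100, costs)
    print(round(bound, 3), round(efficiency(bound, len(costs)), 3))
```

Because the denominator is the maximum over processors, a single overloaded processor limits the whole run, which is why balancing workload and overheads comes first in the list of remedies.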

Vector Vs. Scalar Processors: a Performance Comparison Using a Set of Computational Science Benchmarks

Vector vs. Scalar Processors: A Performance Comparison Using a Set of Computational Science Benchmarks. Mike Ashworth, Ian J. Bush and Martyn F. Guest, Computational Science & Engineering Department, CCLRC Daresbury Laboratory

ABSTRACT: Despite a significant decline in their popularity in the last decade, vector processors are still with us, and manufacturers such as Cray and NEC are bringing new products to market. We have carried out a performance comparison of three full-scale applications: the first, SBLI, a Direct Numerical Simulation code from Computational Fluid Dynamics; the second, DL_POLY, a molecular dynamics code; and the third, POLCOMS, a coastal-ocean model. Comparing the performance of the Cray X1 vector system with two massively parallel (MPP) micro-processor-based systems, we find three rather different results. The SBLI PCHAN benchmark performs excellently on the Cray X1 with no code modification, showing 100% vectorisation and significantly outperforming the MPP systems. The performance of DL_POLY was initially poor, but we were able to make significant improvements through a few simple optimisations. The POLCOMS code has been substantially restructured for cache-based MPP systems and now does not vectorise at all well on the Cray X1, leading to poor performance. We conclude that both vector and MPP systems can deliver high performance levels but that, depending on the algorithm, careful software design may be necessary if the same code is to achieve high performance on different architectures.

KEYWORDS: vector processor, scalar processor, benchmarking, parallel computing, CFD, molecular dynamics, coastal ocean modelling

1. Introduction

Vector computers entered the scene at a very early [...] All of the key computational science groups in the UK made use of vector supercomputers during their halcyon days of the 1970s, 1980s and into the early 1990s [1]-[3].

Scalable Task Parallel Programming in the Partitioned Global Address Space

Scalable Task Parallel Programming in the Partitioned Global Address Space. DISSERTATION. Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University. By James Scott Dinan, M.S., Graduate Program in Computer Science and Engineering, The Ohio State University, 2010. Dissertation Committee: P. Sadayappan (Advisor), Atanas Rountev, Paolo Sivilotti. © Copyright by James Scott Dinan 2010

ABSTRACT: Applications that exhibit irregular, dynamic, and unbalanced parallelism are growing in number and importance in the computational science and engineering communities. These applications span many domains including computational chemistry, physics, biology, and data mining. In such applications, the units of computation are often irregular in size and the availability of work may depend on the dynamic, often recursive, behavior of the program. Because of these properties, it is challenging for these programs to achieve high levels of performance and scalability on modern high performance clusters. A new family of programming models, called the Partitioned Global Address Space (PGAS) family, provides the programmer with a global view of shared data and allows for asynchronous, one-sided access to data regardless of where it is physically stored. In this model, the global address space is distributed across the memories of multiple nodes and, for any given node, is partitioned into local patches that have high affinity and low access cost and remote patches that have a high access cost due to communication. The PGAS data model relaxes conventional two-sided communication semantics and allows the programmer to access remote data without the cooperation of the remote processor.
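The split of a global address space into local and remote patches can be illustrated with a toy index calculation. This sketch assumes a simple block distribution of a one-dimensional global array, which the abstract does not specify, and every name in it is hypothetical; it models only the address arithmetic, not a real PGAS runtime or its communication.

```python
from typing import Tuple


def block_size(n_elements: int, n_nodes: int) -> int:
    """Elements per node under an assumed block distribution (ceiling division)."""
    return -(-n_elements // n_nodes)


def owner_and_offset(global_index: int, n_elements: int, n_nodes: int) -> Tuple[int, int]:
    """Map a global index to (owning node, offset inside that node's patch)."""
    if not 0 <= global_index < n_elements:
        raise IndexError("global index out of range")
    size = block_size(n_elements, n_nodes)
    return global_index // size, global_index % size


def is_local(global_index: int, my_node: int, n_elements: int, n_nodes: int) -> bool:
    """True when the element lives in the caller's own (low-cost) patch."""
    return owner_and_offset(global_index, n_elements, n_nodes)[0] == my_node


if __name__ == "__main__":
    # 10 elements over 4 nodes: each patch holds up to 3 elements.
    for i in range(10):
        node, offset = owner_and_offset(i, 10, 4)
        kind = "local" if is_local(i, 2, 10, 4) else "remote"
        print(f"index {i}: node {node}, offset {offset}, {kind} for node 2")
```

Every node can compute the owner of any index without asking that owner, which is the property that makes one-sided access possible; the cost difference between the local and remote cases is what the abstract calls affinity.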

CSE 30321 – Lecture 23 – Introduction to Parallel Processing

CSE 30321 – Lecture 23 – Introduction to Parallel Processing. University of Notre Dame

Suggested Readings
• Readings
  – H&P: Chapter 7
    • (Over next 2 weeks)

[Figure: CSE 30321 course overview with panels: Introduction and Overview; Processor components; Processor comparison; Multicore processors and programming; Writing more efficient code; HLL code translation; The right HW for the right application]

Goal: Explain and articulate why modern microprocessors now have more than one core and how software must adapt to accommodate the now prevalent multi-core approach to computing.

Pipelining and “Parallelism”
[Figure: pipeline timing diagram in which a Load and Instructions 1 to 4 each pass through the stages Mem, Reg, ALU, DM, Reg, offset along the time axis]
Instruction execution overlaps (pseudo-parallel), but instructions in the program are issued sequentially.

Multiprocessing (Parallel) Machines
Flynn's

Challenges for the Message Passing Interface in the Petaflops Era

Challenges for the Message Passing Interface in the Petaflops Era. William D. Gropp, Mathematics and Computer Science, Argonne National Laboratory, www.mcs.anl.gov/~gropp

What this Talk is About
• The title talks about MPI
  – Because MPI is the dominant parallel programming model in computational science
• But the issue is really
  – What are the needs of the parallel software ecosystem?
  – How does MPI fit into that ecosystem?
  – What are the missing parts (not just from MPI)?
  – How can MPI adapt or be replaced in the parallel software ecosystem?
  – Short version of this talk:
    • The problem with MPI is not with what it has but with what it is missing
Let's start with some history …

Quotes from “System Software and Tools for High Performance Computing Environments” (1993)
• “The strongest desire expressed by these users was simply to satisfy the urgent need to get applications codes running on parallel machines as quickly as possible”
• In a list of enabling technologies for mathematical software, “Parallel prefix for arbitrary user-defined associative operations should be supported. Conflicts between system and library (e.g., in message types) should be automatically avoided.”
  – Note that MPI-1 provided both
• Immediate Goals for Computing Environments:
  – Parallel computer support environment
  – Standards for same
  – Standard for parallel I/O
  – Standard for message passing on distributed memory machines
• “The single greatest hindrance to significant penetration of MPP technology in scientific computing is the absence of common programming interfaces across various parallel computing systems”

Quotes from “Enabling Technologies for Petaflops Computing” (1995):
• “The software for the current generation of 100 GF machines is not adequate to be scaled to a TF…”
• “The Petaflops computer is achievable at reasonable cost with technology available in about 20 years [2014].”
  – (estimated clock speed in 2004: 700MHz)*
• “Software technology for MPP’s must evolve new ways to design software that is portable across a wide variety of computer architectures.
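The 1993 report quoted in this excerpt asks for parallel prefix over arbitrary user-defined associative operations. As a rough illustration of what that operation computes, the sketch below simulates a distance-doubling inclusive scan in plain Python. It is not MPI code and not taken from the talk; the function name `doubling_scan` is hypothetical, and the inner loop stands in for work that separate processes would do concurrently in each round.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def doubling_scan(values: Sequence[T], op: Callable[[T, T], T]) -> List[T]:
    """Inclusive prefix scan using about log2(n) rounds of pairwise combines.

    `op` must be associative but need not be commutative: the left operand
    always covers earlier positions than the right operand.
    """
    result = list(values)
    distance = 1
    while distance < len(result):
        previous = result[:]  # snapshot: every position reads the last round's values
        for i in range(distance, len(result)):
            result[i] = op(previous[i - distance], previous[i])
        distance *= 2
    return result


if __name__ == "__main__":
    print(doubling_scan([1, 2, 3, 4, 5], lambda a, b: a + b))
    # String concatenation is associative but not commutative.
    print(doubling_scan(list("abcd"), lambda a, b: a + b))
```

In MPI itself the corresponding facility is `MPI_Scan` combined with an operation registered through `MPI_Op_create`; the simulation only shows why associativity is the one property the user-defined operation has to satisfy.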

A Survey on Parallel Multicore Computing: Performance & Improvement

Advances in Science, Technology and Engineering Systems Journal (ASTESJ), Vol. 3, No. 3, 152-160 (2018), www.astesj.com, ISSN: 2415-6698

A Survey on Parallel Multicore Computing: Performance & Improvement. Ola Surakhi*, Mohammad Khanafseh, Sami Sarhan, University of Jordan, King Abdullah II School for Information Technology, Computer Science Department, 11942, Jordan

ARTICLE INFO
Article history: Received: 18 May, 2018; Accepted: 11 June, 2018; Online: 26 June, 2018
Keywords: Distributed System, Dual core, Multicore, Quad core

ABSTRACT
A multicore processor combines two or more independent cores onto one integrated circuit. Although it offers good performance in terms of execution time, there are still many factors, such as number of cores, power and memory, that affect multicore performance and reduce it. This paper gives an overview of the evolution of the multicore architecture with a comparison between single, dual and quad core. We then summarize some recent related works implemented using multicore architecture and show the factors that have an effect on the performance of multicore parallel architecture based on their results. Finally, we cover some distributed parallel system concepts and present a comparison between them and the multiprocessor system characteristics.

1. Introduction
Multicore is one of the parallel computing architectures which consists of two or more individual units (cores) that read and write [...] [6]. The heterogeneous cores have more than one type of core; they are not identical, and each core can handle a different application. The latter has better performance in terms of lower power consumption, as will be shown later [2].

Oblivious Network RAM and Leveraging Parallelism to Achieve Obliviousness

Oblivious Network RAM and Leveraging Parallelism to Achieve Obliviousness. Dana Dachman-Soled (1,3), Chang Liu (2), Charalampos Papamanthou (1,3), Elaine Shi (4), Uzi Vishkin (1,3). 1: University of Maryland, Department of Electrical and Computer Engineering; 2: University of Maryland, Department of Computer Science; 3: University of Maryland Institute for Advanced Computer Studies (UMIACS); 4: Cornell University. January 12, 2017

Abstract: Oblivious RAM (ORAM) is a cryptographic primitive that allows a trusted CPU to securely access untrusted memory, such that the access patterns reveal nothing about sensitive data. ORAM is known to have broad applications in secure processor design and secure multi-party computation for big data. Unfortunately, due to a logarithmic lower bound by Goldreich and Ostrovsky (Journal of the ACM, '96), ORAM is bound to incur a moderate cost in practice. In particular, with the latest developments in ORAM constructions, we are quickly approaching this limit, and the room for performance improvement is small. In this paper, we consider new models of computation in which the cost of obliviousness can be fundamentally reduced in comparison with the standard ORAM model. We propose the Oblivious Network RAM model of computation, where a CPU communicates with multiple memory banks, such that the adversary observes only which bank the CPU is communicating with, but not the address offset within each memory bank. In other words, obliviousness within each bank comes for free, either because the architecture prevents a malicious party from observing the address accessed within a bank, or because another solution is used to obfuscate memory accesses within each bank, and hence we only need to obfuscate communication patterns between the CPU and the memory banks.