Massively Parallel Computers: Why Not Parallel Computers for the Masses?

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

High Performance Computing Through Parallel and Distributed Processing

Yadav S. et al., J. Harmoniz. Res. Eng., 2013, 1(2), 54-64. Journal Of Harmonized Research (JOHR), ISSN 2347 – 7393. Original Research Article.

High Performance Computing through Parallel and Distributed Processing. Shikha Yadav, Preeti Dhanda, Nisha Yadav, Department of Computer Science and Engineering, Dronacharya College of Engineering, Khentawas, Farukhnagar, Gurgaon, India. For correspondence: preeti.dhanda01ATgmail.com. Received on: October 2013.

Abstract: There is a very high need for High Performance Computing (HPC) in many applications, from space science to Artificial Intelligence. HPC can be attained through Parallel and Distributed Computing. In this paper, Parallel and Distributed algorithms are discussed based on Parallel and Distributed Processors to achieve HPC. Programming concepts like threads, fork and sockets are discussed with some simple examples for HPC.

Keywords: High Performance Computing, Parallel and Distributed processing, Computer Architecture

Introduction: Computer Architecture and Programming play a significant role for High Performance Computing (HPC) in large applications, from space science to Artificial Intelligence. Algorithms are problem-solving procedures, and these algorithms are later translated into a particular programming language for HPC. There is a need to study algorithms for High Performance Computing. These algorithms are to be designed to compute in reasonable time to solve large problems like weather forecasting, Tsunami, Remote Sensing, National calamities, Defence, Mineral exploration, Finite-element, Cloud Computing, and Expert Systems. The algorithms are Non-Recursive Algorithms, Recursive Algorithms, Parallel Algorithms and Distributed Algorithms. The algorithms must be supported by the computer architecture. Computer architecture is characterized by Flynn's classification: SISD, SIMD, MIMD, and MISD. Most computer architectures support SIMD (Single Instruction, Multiple Data streams).

2.5 Classification of Parallel Computers

2.5.1 Granularity

In parallel computing, granularity means the amount of computation in relation to communication or synchronisation. Periods of computation are typically separated from periods of communication by synchronization events.

• fine level (same operations with different data)
  ◦ vector processors
  ◦ instruction level parallelism
  ◦ fine-grain parallelism:
    – Relatively small amounts of computational work are done between communication events
    – Low computation to communication ratio
    – Facilitates load balancing
    – Implies high communication overhead and less opportunity for performance enhancement
    – If granularity is too fine, it is possible that the overhead required for communications and synchronization between tasks takes longer than the computation.
• operation level (different operations simultaneously)
• problem level (independent subtasks)
  ◦ coarse-grain parallelism:
    – Relatively large amounts of computational work are done between communication/synchronization events
    – High computation to communication ratio
    – Implies more opportunity for performance increase
    – Harder to load balance efficiently

2.5.2 Hardware: Pipelining (was used in supercomputers, e.g. Cray-1)

With N elements in the pipeline and L clock cycles needed for each element, the calculation takes L + N cycles; without pipelining it takes L * N cycles.

Example of good code for pipelining:

    do i = 1, k
      z(i) = x(i) + y(i)
    end do

Vector processors, fast vector operations (operations on arrays). The previous example is also good for a vector processor (vector addition), but, e.g., recursion is hard to optimise for vector processors.

Example: Intel MMX – simple vector processor.
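The pipelining cycle counts quoted in the excerpt above can be checked with a few lines of Python. This is a minimal sketch, not code from the slides: the function names are hypothetical, and the L + N figure is the excerpt's own estimate (an ideal pipeline is often counted as L + N - 1 cycles, which differs by one cycle and does not change the conclusion).

```python
def pipelined_cycles(n_elements: int, latency: int) -> int:
    """Estimate from the excerpt: N elements through an L-cycle pipeline take L + N cycles."""
    return latency + n_elements


def unpipelined_cycles(n_elements: int, latency: int) -> int:
    """Without pipelining each element occupies the unit for all L cycles: L * N."""
    return latency * n_elements


def pipeline_speedup(n_elements: int, latency: int) -> float:
    """Ratio of unpipelined to pipelined cycle counts; tends to L as N grows."""
    return unpipelined_cycles(n_elements, latency) / pipelined_cycles(n_elements, latency)


if __name__ == "__main__":
    # Hypothetical 5-cycle operation applied to vectors of increasing length.
    for n in (1, 10, 1000):
        print(n, pipelined_cycles(n, 5), unpipelined_cycles(n, 5),
              round(pipeline_speedup(n, 5), 2))
```

For long loops such as the vector addition above, the speedup approaches the pipeline depth L, which is why such loops suit pipelined and vector hardware.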

A Massively-Parallel Mixed-Mode Computer Designed to Support

This paper appeared in: International Parallel Processing Symposium, Proc. of Workshop on Heterogeneous Processing, Newport Beach, CA, April.

Triton: A Massively-Parallel Mixed-Mode Computer Designed to Support High Level Languages. Christian G. Herter, Thomas M. Warschko, Walter F. Tichy, and Michael Philippsen. University of Karlsruhe, Dept. of Informatics, Karlsruhe, Germany.

Abstract: We present the architecture of Triton, a scalable mixed-mode SIMD/MIMD parallel computer. The novel features of Triton are:

• Support for high-level machine-independent programming languages
• Fast SIMD/MIMD mode switching
• Special hardware for barrier synchronization of multiple process groups
• A self-routing deadlock-free perfect shuffle interconnect with latency hiding

The architecture is the outcome of an integrated de…

Modula*: Modula* (pronounced Modula-star) is a small extension of Modula for massively parallel programming. The programming model of Modula* incorporates both data and control parallelism and allows mixed synchronous and asynchronous execution. Modula* is problem-oriented in the sense that the programmer can choose the degree of parallelism and mix the control mode (SIMD or MIMD-like) as needed by the intended algorithm. Parallelism may be nested to arbitrary depth. Procedures may be called from sequential or parallel contexts and can themselves generate parallel activity without any restrictions. Most Modula* programs can be translated into efficient code for both SIMD and MIMD architectures.

Overview of language extensions: Modula* extends Modula…

Massively Parallel Computing with CUDA

Massively Parallel Computing with CUDA. Antonino Tumeo, Politecnico di Milano.

"GPUs have evolved to the point where many real world applications are easily implemented on them and run significantly faster than on multi-core systems. Future computing architectures will be hybrid systems with parallel-core GPUs working in tandem with multi-core CPUs." Jack Dongarra, Professor, University of Tennessee; Author of "Linpack"

Why Use the GPU?

• The GPU has evolved into a very flexible and powerful processor:
  ◦ It's programmable using high-level languages
  ◦ It supports 32-bit and 64-bit floating point IEEE-754 precision
  ◦ It offers lots of GFLOPS
• GPU in every PC and workstation

What is behind such an Evolution?

• The GPU is specialized for compute-intensive, highly parallel computation (exactly what graphics rendering is about)
• So, more transistors can be devoted to data processing rather than data caching and flow control
• The fast-growing video game industry exerts strong economic pressure that forces constant innovation

[Figure: CPU and GPU layouts showing ALUs, control, cache, and DRAM]

GPUs

• Each NVIDIA GPU has 240 parallel cores
• Within each core:
  ◦ Floating point unit
  ◦ Logic unit (add, sub, mul, madd)
  ◦ Move, compare unit
  ◦ Branch unit
• Cores managed by thread manager
  ◦ Thread manager can spawn and manage 12,000+ threads per core
  ◦ Zero overhead thread switching

[Figure: NVIDIA GPU, 1.4 Billion Transistors, 1 Teraflop of processing power]

Heterogeneous Computing Domains

• GPU (Parallel Computing): Graphics, Massive Data Parallelism
• CPU (Sequential …): Instruction Level …

CS 677: Parallel Programming for Many-Core Processors Lecture 1

CS 677: Parallel Programming for Many-core Processors, Lecture 1. Instructor: Philippos Mordohai. Webpage: mordohai.github.io. E-mail: [email protected]

Objectives

• Learn how to program massively parallel processors and achieve
  – High performance
  – Functionality and maintainability
  – Scalability across future generations
• Acquire technical knowledge required to achieve the above goals
  – Principles and patterns of parallel programming
  – Processor architecture features and constraints
  – Programming API, tools and techniques

Important Points

• This is an elective course. You chose to be here.
• Expect to work and to be challenged.
• If your programming background is weak, you will probably suffer.
• This course will evolve to follow the rapid pace of progress in GPU programming. It is bound to always be a little behind…

Important Points II

• At any point ask me WHY?
• You can ask me anything about the course in class, during a break, in my office, by email.
  – If you think a homework is taking too long or is wrong.
  – If you can’t decide on a project.

Logistics

• Class webpage: http://mordohai.github.io/classes/cs677_s20.html
• Office hours: Tuesdays 5-6pm and by email
• Evaluation:
  – Homework assignments (40%)
  – Quizzes (10%)
  – Midterm (15%)
  – Final project (35%)

Project

• Pick topic BEFORE middle of the semester
• I will suggest ideas and datasets, if you can’t decide
• Deliverables:
  – Project proposal
  – Presentation in class
  – Poster in CS department event
  – Final report (around 8 pages)

Project Examples

• k-means
• Perceptron
• Boosting
  – General
  – Face detector (group of 2)
• Mean Shift
• Normal estimation for 3D point clouds

More Ideas

• Look for parallelizable problems in:
  – Image processing
  – Cryptanalysis
  – Graphics
    • GPU Gems
  – Nearest neighbor search

Even More…

• Particle simulations
• Financial analysis
• MCMC
• Games/puzzles

Resources

• Textbook
  – Kirk & Hwu.

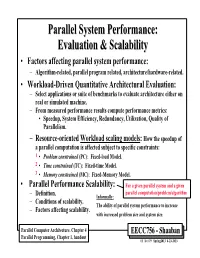

Parallel System Performance: Evaluation & Scalability

Parallel System Performance: Evaluation & Scalability

• Factors affecting parallel system performance:
  – Algorithm-related, parallel program related, architecture/hardware-related.
• Workload-Driven Quantitative Architectural Evaluation:
  – Select applications or suite of benchmarks to evaluate architecture either on real or simulated machine.
  – From measured performance results compute performance metrics:
    • Speedup, System Efficiency, Redundancy, Utilization, Quality of Parallelism.
  – Resource-oriented workload scaling models: how the speedup of a parallel computation is affected subject to specific constraints:
    1. Problem constrained (PC): Fixed-load Model.
    2. Time constrained (TC): Fixed-time Model.
    3. Memory constrained (MC): Fixed-Memory Model.
• Parallel Performance Scalability: for a given parallel system and a given parallel computation/problem/algorithm
  – Definition. Informally: the ability of parallel system performance to increase with increased problem size and system size.
  – Conditions of scalability.
  – Factors affecting scalability.

(Parallel Computer Architecture, Chapter 4; Parallel Programming, Chapter 1, handout. EECC756 - Shaaban, #1 lec # 9, Spring 2013, 4-23-2013)

Parallel Program Performance

• Parallel processing goal is to maximize speedup (fixed problem size speedup):

$$\text{Speedup} = \frac{\text{Time}(1)}{\text{Time}(p)} < \frac{\text{Sequential Work}}{\max\left(\text{Work} + \text{Synch Wait Time} + \text{Comm Cost} + \text{Extra Work}\right)}$$

  where the maximum is taken over all processors, and the synchronization wait time, communication cost, and extra work are the parallelizing overheads.
• By:
  1. Balancing computations/overheads (workload) on processors
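The speedup bound in the excerpt above (sequential work divided by the largest per-processor total of work, synchronization wait, communication cost, and extra work) can be evaluated numerically. The following is a minimal sketch under stated assumptions: the `ProcessorCost` class, the `speedup_bound` function, and all the numbers are hypothetical illustrations, not taken from the lecture notes.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class ProcessorCost:
    """Per-processor time components, all in the same (arbitrary) time unit."""
    work: float
    sync_wait: float = 0.0
    comm: float = 0.0
    extra_work: float = 0.0

    def total(self) -> float:
        # Useful work plus the three parallelizing overheads named in the excerpt.
        return self.work + self.sync_wait + self.comm + self.extra_work


def speedup_bound(sequential_work: float, costs: Iterable[ProcessorCost]) -> float:
    """Upper bound on speedup: sequential work over the slowest processor's total time."""
    return sequential_work / max(c.total() for c in costs)


if __name__ == "__main__":
    # Hypothetical 4-processor run of a job that takes 100 time units sequentially.
    costs = [
        ProcessorCost(work=25, comm=5),
        ProcessorCost(work=25, sync_wait=3, comm=2),
        ProcessorCost(work=25, sync_wait=1, comm=1, extra_work=1),
        ProcessorCost(work=25),
    ]
    print(round(speedup_bound(100, costs), 3))
```

Because the bound depends only on the slowest processor, trimming overhead on an already fast processor does not raise it, which is why the notes list workload balancing first.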

Scalable Task Parallel Programming in the Partitioned Global Address Space

Scalable Task Parallel Programming in the Partitioned Global Address Space. DISSERTATION. Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University. By James Scott Dinan, M.S. Graduate Program in Computer Science and Engineering, The Ohio State University, 2010. Dissertation Committee: P. Sadayappan, Advisor; Atanas Rountev; Paolo Sivilotti. © Copyright by James Scott Dinan 2010.

ABSTRACT: Applications that exhibit irregular, dynamic, and unbalanced parallelism are growing in number and importance in the computational science and engineering communities. These applications span many domains including computational chemistry, physics, biology, and data mining. In such applications, the units of computation are often irregular in size and the availability of work may depend on the dynamic, often recursive, behavior of the program. Because of these properties, it is challenging for these programs to achieve high levels of performance and scalability on modern high performance clusters. A new family of programming models, called the Partitioned Global Address Space (PGAS) family, provides the programmer with a global view of shared data and allows for asynchronous, one-sided access to data regardless of where it is physically stored. In this model, the global address space is distributed across the memories of multiple nodes and, for any given node, is partitioned into local patches that have high affinity and low access cost and remote patches that have a high access cost due to communication. The PGAS data model relaxes conventional two-sided communication semantics and allows the programmer to access remote data without the cooperation of the remote processor.
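The idea of a global address space partitioned into local and remote patches can be illustrated with a small index-mapping sketch. This is only an illustration under assumptions: the excerpt does not specify a data layout, so the block distribution used here, and the function names `owner_and_offset` and `is_local`, are hypothetical.

```python
from typing import Tuple


def owner_and_offset(global_index: int, n_elements: int, n_nodes: int) -> Tuple[int, int]:
    """Map a global array index to (owning node, offset in that node's local patch).

    Assumes a simple block distribution: each node owns one contiguous block of
    ceil(n_elements / n_nodes) elements (the last block may be shorter).
    """
    if not 0 <= global_index < n_elements:
        raise IndexError("global index out of range")
    block = -(-n_elements // n_nodes)  # ceiling division
    return divmod(global_index, block)


def is_local(global_index: int, my_node: int, n_elements: int, n_nodes: int) -> bool:
    """True if the element lives in this node's local patch (the low access cost case)."""
    owner, _ = owner_and_offset(global_index, n_elements, n_nodes)
    return owner == my_node


if __name__ == "__main__":
    # Hypothetical 10-element global array spread over 4 nodes (block size 3).
    for i in range(10):
        print(i, owner_and_offset(i, 10, 4))
```

In a real PGAS runtime the remote case would be served by a one-sided get or put; here the mapping only shows which accesses would incur communication.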

Core Processors

UNIVERSITY OF CALIFORNIA, Los Angeles. Parallel Algorithms for Medical Informatics on Data-Parallel Many-Core Processors. A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Computer Science by Maryam Moazeni, 2013. © Copyright by Maryam Moazeni 2013.

ABSTRACT OF THE DISSERTATION: Parallel Algorithms for Medical Informatics on Data-Parallel Many-Core Processors, by Maryam Moazeni, Doctor of Philosophy in Computer Science, University of California, Los Angeles, 2013. Professor Majid Sarrafzadeh, Chair.

The extensive use of medical monitoring devices has resulted in the generation of tremendous amounts of data. Storage, retrieval, and analysis of such data require platforms that can scale with data growth and adapt to the various behavior of the analysis and processing algorithms. In recent years, many-core processors and more specifically many-core Graphical Processing Units (GPUs) have become one of the most promising platforms for high performance processing of data, due to the massive parallel processing power they offer. However, many of the algorithms and data structures used in medical and bioinformatics systems do not follow a data-parallel programming paradigm, and hence cannot fully benefit from the parallel processing power of data-parallel many-core architectures. In this dissertation, we present three techniques to adapt several non-data parallel applications in different dwarfs to modern many-core GPUs. First, we present a load balancing technique to maximize parallelism in non-serial polyadic Dynamic Programming (DP), which is a family of dynamic programming algorithms with more non-uniform data access pattern. We show that a bottom-up approach to solving the DP problem exploits more parallelism and therefore yields higher performance.

Oblivious Network RAM and Leveraging Parallelism to Achieve Obliviousness

Oblivious Network RAM and Leveraging Parallelism to Achieve Obliviousness. Dana Dachman-Soled (1,3), Chang Liu (2), Charalampos Papamanthou (1,3), Elaine Shi (4), Uzi Vishkin (1,3). 1: University of Maryland, Department of Electrical and Computer Engineering. 2: University of Maryland, Department of Computer Science. 3: University of Maryland Institute for Advanced Computer Studies (UMIACS). 4: Cornell University. January 12, 2017.

Abstract: Oblivious RAM (ORAM) is a cryptographic primitive that allows a trusted CPU to securely access untrusted memory, such that the access patterns reveal nothing about sensitive data. ORAM is known to have broad applications in secure processor design and secure multi-party computation for big data. Unfortunately, due to a logarithmic lower bound by Goldreich and Ostrovsky (Journal of the ACM, '96), ORAM is bound to incur a moderate cost in practice. In particular, with the latest developments in ORAM constructions, we are quickly approaching this limit, and the room for performance improvement is small. In this paper, we consider new models of computation in which the cost of obliviousness can be fundamentally reduced in comparison with the standard ORAM model. We propose the Oblivious Network RAM model of computation, where a CPU communicates with multiple memory banks, such that the adversary observes only which bank the CPU is communicating with, but not the address offset within each memory bank. In other words, obliviousness within each bank comes for free (either because the architecture prevents a malicious party from observing the address accessed within a bank, or because another solution is used to obfuscate memory accesses within each bank), and hence we only need to obfuscate communication patterns between the CPU and the memory banks.

Compiling for a Multithreaded Dataflow Architecture : Algorithms, Tools, and Experience Feng Li

Compiling for a multithreaded dataflow architecture: algorithms, tools, and experience. Feng Li.

To cite this version: Feng Li. Compiling for a multithreaded dataflow architecture : algorithms, tools, and experience. Other [cs.OH]. Université Pierre et Marie Curie - Paris VI, 2014. English. NNT : 2014PA066102. tel-00992753v2

HAL Id: tel-00992753 https://tel.archives-ouvertes.fr/tel-00992753v2 Submitted on 10 Sep 2014

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Université Pierre et Marie Curie, École Doctorale Informatique, Télécommunications et Électronique. Compiling for a multithreaded dataflow architecture: algorithms, tools, and experience, by Feng LI. Doctoral thesis in Computer Science, supervised by Albert COHEN. Presented and publicly defended on 20 May 2014 before a jury composed of a president, two reviewers (rapporteurs), and three examiners.

I would like to dedicate this thesis to my mother Shuxia Li, for her love.

Acknowledgements: I would like to thank my supervisor Albert Cohen; there's nothing more exciting than working with him. Albert, you are an extraordinary person, full of ideas and motivation. The discussions with you always enlighten and help me. I am so lucky to have had you as my supervisor when I first came to research as a PhD student.

Introduction to Parallel Computing

INTRODUCTION TO PARALLEL COMPUTING. Plamen Krastev, Office: 38 Oxford, Room 117, Email: [email protected], FAS Research Computing, Harvard University.

OBJECTIVES:

• To introduce you to the basic concepts and ideas in parallel computing
• To familiarize you with the major programming models in parallel computing
• To provide you with guidance for designing efficient parallel programs

OUTLINE:

• Introduction to Parallel Computing / High Performance Computing (HPC)
• Concepts and terminology
• Parallel programming models
• Parallelizing your programs
• Parallel examples

What is High Performance Computing?

[Image: Pravetz 82 and 8M, Bulgarian Apple clones. Image credit: flickr]

Odyssey supercomputer is the major computational resource of FAS RC:

• 2,140 nodes / 60,000 cores
• 14 petabytes of storage

Using the world’s fastest and largest computers to solve large and complex problems.

Serial Computation: Traditionally software has been written for serial computations:

• To be run on a single computer having a single Central Processing Unit (CPU)
• A problem is broken into a discrete set of instructions
• Instructions are executed one after another
• Only one instruction can be executed at any moment in time

Parallel Computing: In the simplest sense, parallel

Scheduling on Asymmetric Parallel Architectures

Scheduling on Asymmetric Parallel Architectures. Filip Blagojevic. Dissertation submitted to the faculty of the Virginia Polytechnic Institute and State University in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Computer Science and Applications. Committee Members: Dimitrios S. Nikolopoulos (Chair), Kirk W. Cameron, Wu-chun Feng, David K. Lowenthal, Calvin J. Ribbens. May 30, 2008, Blacksburg, Virginia. Keywords: Multicore processors, Cell BE, process scheduling, high-performance computing, performance prediction, runtime adaptation. © Copyright 2008, Filip Blagojevic.

(ABSTRACT) We explore runtime mechanisms and policies for scheduling dynamic multi-grain parallelism on heterogeneous multi-core processors. Heterogeneous multi-core processors integrate conventional cores that run legacy codes with specialized cores that serve as computational accelerators. The term multi-grain parallelism refers to the exposure of multiple dimensions of parallelism from within the runtime system, so as to best exploit a parallel architecture with heterogeneous computational capabilities between its cores and execution units. To maximize performance on heterogeneous multi-core processors, programs need to expose multiple dimensions of parallelism simultaneously. Unfortunately, programming with multiple dimensions of parallelism is to date an ad hoc process, relying heavily on the intuition and skill of programmers. Formal techniques are needed to optimize multi-dimensional parallel program designs. We investigate user- and kernel-level schedulers that dynamically "rightsize" the dimensions and degrees of parallelism on the asymmetric parallel platforms. The schedulers address the problem of mapping application-specific concurrency to an architecture with multiple hardware layers of parallelism, without requiring programmer intervention or sophisticated compiler support.