Parallel Programming in Openmp About the Authors

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

A Case for High Performance Computing with Virtual Machines

A Case for High Performance Computing with Virtual Machines Wei Huangy Jiuxing Liuz Bulent Abaliz Dhabaleswar K. Panday y Computer Science and Engineering z IBM T. J. Watson Research Center The Ohio State University 19 Skyline Drive Columbus, OH 43210 Hawthorne, NY 10532 fhuanwei, [email protected] fjl, [email protected] ABSTRACT in the 1960s [9], but are experiencing a resurgence in both Virtual machine (VM) technologies are experiencing a resur- industry and research communities. A VM environment pro- gence in both industry and research communities. VMs of- vides virtualized hardware interfaces to VMs through a Vir- fer many desirable features such as security, ease of man- tual Machine Monitor (VMM) (also called hypervisor). VM agement, OS customization, performance isolation, check- technologies allow running different guest VMs in a phys- pointing, and migration, which can be very beneficial to ical box, with each guest VM possibly running a different the performance and the manageability of high performance guest operating system. They can also provide secure and computing (HPC) applications. However, very few HPC ap- portable environments to meet the demanding requirements plications are currently running in a virtualized environment of computing resources in modern computing systems. due to the performance overhead of virtualization. Further, Recently, network interconnects such as InfiniBand [16], using VMs for HPC also introduces additional challenges Myrinet [24] and Quadrics [31] are emerging, which provide such as management and distribution of OS images. very low latency (less than 5 µs) and very high bandwidth In this paper we present a case for HPC with virtual ma- (multiple Gbps). -

High Performance Computing Through Parallel and Distributed Processing

Yadav S. et al., J. Harmoniz. Res. Eng., 2013, 1(2), 54-64 Journal Of Harmonized Research (JOHR) Journal Of Harmonized Research in Engineering 1(2), 2013, 54-64 ISSN 2347 – 7393 Original Research Article High Performance Computing through Parallel and Distributed Processing Shikha Yadav, Preeti Dhanda, Nisha Yadav Department of Computer Science and Engineering, Dronacharya College of Engineering, Khentawas, Farukhnagar, Gurgaon, India Abstract : There is a very high need of High Performance Computing (HPC) in many applications like space science to Artificial Intelligence. HPC shall be attained through Parallel and Distributed Computing. In this paper, Parallel and Distributed algorithms are discussed based on Parallel and Distributed Processors to achieve HPC. The Programming concepts like threads, fork and sockets are discussed with some simple examples for HPC. Keywords: High Performance Computing, Parallel and Distributed processing, Computer Architecture Introduction time to solve large problems like weather Computer Architecture and Programming play forecasting, Tsunami, Remote Sensing, a significant role for High Performance National calamities, Defence, Mineral computing (HPC) in large applications Space exploration, Finite-element, Cloud science to Artificial Intelligence. The Computing, and Expert Systems etc. The Algorithms are problem solving procedures Algorithms are Non-Recursive Algorithms, and later these algorithms transform in to Recursive Algorithms, Parallel Algorithms particular Programming language for HPC. and Distributed Algorithms. There is need to study algorithms for High The Algorithms must be supported the Performance Computing. These Algorithms Computer Architecture. The Computer are to be designed to computer in reasonable Architecture is characterized with Flynn’s Classification SISD, SIMD, MIMD, and For Correspondence: MISD. Most of the Computer Architectures preeti.dhanda01ATgmail.com are supported with SIMD (Single Instruction Received on: October 2013 Multiple Data Streams). -

Heterogeneous Task Scheduling for Accelerated Openmp

Heterogeneous Task Scheduling for Accelerated OpenMP ? ? Thomas R. W. Scogland Barry Rountree† Wu-chun Feng Bronis R. de Supinski† ? Department of Computer Science, Virginia Tech, Blacksburg, VA 24060 USA † Center for Applied Scientific Computing, Lawrence Livermore National Laboratory, Livermore, CA 94551 USA [email protected] [email protected] [email protected] [email protected] Abstract—Heterogeneous systems with CPUs and computa- currently requires a programmer either to program in at tional accelerators such as GPUs, FPGAs or the upcoming least two different parallel programming models, or to use Intel MIC are becoming mainstream. In these systems, peak one of the two that support both GPUs and CPUs. Multiple performance includes the performance of not just the CPUs but also all available accelerators. In spite of this fact, the models however require code replication, and maintaining majority of programming models for heterogeneous computing two completely distinct implementations of a computational focus on only one of these. With the development of Accelerated kernel is a difficult and error-prone proposition. That leaves OpenMP for GPUs, both from PGI and Cray, we have a clear us with using either OpenCL or accelerated OpenMP to path to extend traditional OpenMP applications incrementally complete the task. to use GPUs. The extensions are geared toward switching from CPU parallelism to GPU parallelism. However they OpenCL’s greatest strength lies in its broad hardware do not preserve the former while adding the latter. Thus support. In a way, though, that is also its greatest weak- computational potential is wasted since either the CPU cores ness. To enable one to program this disparate hardware or the GPU cores are left idle. -

Technical Report Aaron Councilman

Extensible Parallel Programming in ableC Aaron Councilman Department of Computer Science and Engineering University of Minnesota, Twin Cities May 23, 2019 1 Introduction There are many different manners of parallelizing code, and many different languages that provide such features. Different types of computations are best suited by different types of parallelism. Simply whether a computation is compute bound or I/O bound determines whether the computation will benefit from being run with more threads than the machine has cores, and other properties of a computation will similarly affect how it performs when run in parallel. Thus, to provide parallel programmers the ability to deliver the best performance for their programs, the ability to choose the parallel programming abstractions they use is important. The ability to combine these abstractions however they need is also important, since different parts of a program will have different performance properties, and therefore may perform best using different abstractions. Unfortunately, parallel programming languages are often designed monolithically, built as an entire language with a specific set of features. Because of this, programmer's choice of parallel programming abstractions is generally limited to the choice of the language to use. Beyond limiting the available abstracts, this also means, that the choice of abstractions must be made ahead of time, since any attempt to change the parallel programming language at a later time is likely to be be prohibitive as it may require rewriting large portions of the codebase, if not the entire codebase. Extensible programming languages can offer a solution to these problems. With an extensible compiler, the programmer chooses a base programming language and can then select the set of \extensions" for that language that best fit their needs. -

Intel® Cilk™ Plus

Overview: Programming Environment for Intel® Xeon Phi™ Coprocessor One Source Base, Tuned to many Targets Source Compilers, Libraries, Parallel Models Multicore Many-core Cluster Multicore Multicore CPU CPU Intel® MIC Multicore Multicore and Architecture Cluster Many-core Cluster Copyright© 2014, Intel Corporation. All rights reserved. *Other brands and names are the property of their respective owners. Intel® Parallel Studio XE 2013 and Intel® Cluster Studio XE 2013 Phase Product Feature Benefit Intel® Threading design assistant • Simplifies, demystifies, and speeds Advisor XE (Studio products only) parallel application design • C/C++ and Fortran compilers • Intel® Threading Building Blocks • Enabling solution to achieve the Intel® • Intel® Cilk™ Plus application performance and Composer XE • Intel® Integrated Performance scalability benefits of multicore and Build Primitives forward scale to many-core • Intel® Math Kernel Library • Enabling High Performance Scalability, Interconnect Intel® High Performance Message Independence, Runtime Fabric † MPI Library Passing (MPI) Library Selection, and Application Tuning Capability ® Intel Performance Profiler for • Remove guesswork, saves time, VTune™ optimizing application makes it easier to find performance Amplifier XE performance and scalability and scalability bottlenecks Memory & threading dynamic • Increased productivity, code quality, ® Intel analysis for code quality and lowers cost, finds memory, Verify Inspector XE threading , and security defects & Tune Static Analysis for code quality -

Parallel Programming

Parallel Programming Libraries and implementations Outline • MPI – distributed memory de-facto standard • Using MPI • OpenMP – shared memory de-facto standard • Using OpenMP • CUDA – GPGPU de-facto standard • Using CUDA • Others • Hybrid programming • Xeon Phi Programming • SHMEM • PGAS MPI Library Distributed, message-passing programming Message-passing concepts Explicit Parallelism • In message-passing all the parallelism is explicit • The program includes specific instructions for each communication • What to send or receive • When to send or receive • Synchronisation • It is up to the developer to design the parallel decomposition and implement it • How will you divide up the problem? • When will you need to communicate between processes? Message Passing Interface (MPI) • MPI is a portable library used for writing parallel programs using the message passing model • You can expect MPI to be available on any HPC platform you use • Based on a number of processes running independently in parallel • HPC resource provides a command to launch multiple processes simultaneously (e.g. mpiexec, aprun) • There are a number of different implementations but all should support the MPI 2 standard • As with different compilers, there will be variations between implementations but all the features specified in the standard should work. • Examples: MPICH2, OpenMPI Point-to-point communications • A message sent by one process and received by another • Both processes are actively involved in the communication – not necessarily at the same time • Wide variety of semantics provided: • Blocking vs. non-blocking • Ready vs. synchronous vs. buffered • Tags, communicators, wild-cards • Built-in and custom data-types • Can be used to implement any communication pattern • Collective operations, if applicable, can be more efficient Collective communications • A communication that involves all processes • “all” within a communicator, i.e. -

Openmp API 5.1 Specification

OpenMP Application Programming Interface Version 5.1 November 2020 Copyright c 1997-2020 OpenMP Architecture Review Board. Permission to copy without fee all or part of this material is granted, provided the OpenMP Architecture Review Board copyright notice and the title of this document appear. Notice is given that copying is by permission of the OpenMP Architecture Review Board. This page intentionally left blank in published version. Contents 1 Overview of the OpenMP API1 1.1 Scope . .1 1.2 Glossary . .2 1.2.1 Threading Concepts . .2 1.2.2 OpenMP Language Terminology . .2 1.2.3 Loop Terminology . .9 1.2.4 Synchronization Terminology . 10 1.2.5 Tasking Terminology . 12 1.2.6 Data Terminology . 14 1.2.7 Implementation Terminology . 18 1.2.8 Tool Terminology . 19 1.3 Execution Model . 22 1.4 Memory Model . 25 1.4.1 Structure of the OpenMP Memory Model . 25 1.4.2 Device Data Environments . 26 1.4.3 Memory Management . 27 1.4.4 The Flush Operation . 27 1.4.5 Flush Synchronization and Happens Before .................. 29 1.4.6 OpenMP Memory Consistency . 30 1.5 Tool Interfaces . 31 1.5.1 OMPT . 32 1.5.2 OMPD . 32 1.6 OpenMP Compliance . 33 1.7 Normative References . 33 1.8 Organization of this Document . 35 i 2 Directives 37 2.1 Directive Format . 38 2.1.1 Fixed Source Form Directives . 43 2.1.2 Free Source Form Directives . 44 2.1.3 Stand-Alone Directives . 45 2.1.4 Array Shaping . 45 2.1.5 Array Sections . -

Openmp Made Easy with INTEL® ADVISOR

OpenMP made easy with INTEL® ADVISOR Zakhar Matveev, PhD, Intel CVCG, November 2018, SC’18 OpenMP booth Why do we care? Ai Bi Ai Bi Ai Bi Ai Bi Vector + Processing Ci Ci Ci Ci VL Motivation (instead of Agenda) • Starting from 4.x, OpenMP introduces support for both levels of parallelism: • Multi-Core (think of “pragma/directive omp parallel for”) • SIMD (think of “pragma/directive omp simd”) • 2 pillars of OpenMP SIMD programming model • Hardware with Intel ® AVX-512 support gives you theoretically 8x speed- up over SSE baseline (less or even more in practice) • Intel® Advisor is here to assist you in: • Enabling SIMD parallelism with OpenMP (if not yet) • Improving performance of already vectorized OpenMP SIMD code • And will also help to optimize for Memory Sub-system (Advisor Roofline) 3 Don’t use a single Vector lane! Un-vectorized and un-threaded software will under perform 4 Permission to Design for All Lanes Threading and Vectorization needed to fully utilize modern hardware 5 A Vector parallelism in x86 AVX-512VL AVX-512BW B AVX-512DQ AVX-512CD AVX-512F C AVX2 AVX2 AVX AVX AVX SSE SSE SSE SSE Intel® microarchitecture code name … NHM SNB HSW SKL 6 (theoretically) 8x more SIMD FLOP/S compared to your (–O2) optimized baseline x - Significant leap to 512-bit SIMD support for processors - Intel® Compilers and Intel® Math Kernel Library include AVX-512 support x - Strong compatibility with AVX - Added EVEX prefix enables additional x functionality Don’t leave it on the table! 7 Two level parallelism decomposition with OpenMP: image processing example B #pragma omp parallel for for (int y = 0; y < ImageHeight; ++y){ #pragma omp simd C for (int x = 0; x < ImageWidth; ++x){ count[y][x] = mandel(in_vals[y][x]); } } 8 Two level parallelism decomposition with OpenMP: fluid dynamics processing example B #pragma omp parallel for for (int i = 0; i < X_Dim; ++i){ #pragma omp simd C for (int m = 0; x < n_velocities; ++m){ next_i = f(i, velocities(m)); X[i] = next_i; } } 9 Key components of Intel® Advisor What’s new in “2019” release Step 1. -

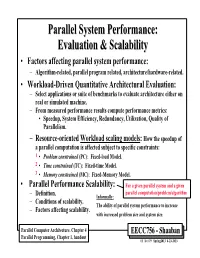

Parallel System Performance: Evaluation & Scalability

ParallelParallel SystemSystem Performance:Performance: EvaluationEvaluation && ScalabilityScalability • Factors affecting parallel system performance: – Algorithm-related, parallel program related, architecture/hardware-related. • Workload-Driven Quantitative Architectural Evaluation: – Select applications or suite of benchmarks to evaluate architecture either on real or simulated machine. – From measured performance results compute performance metrics: • Speedup, System Efficiency, Redundancy, Utilization, Quality of Parallelism. – Resource-oriented Workload scaling models: How the speedup of a parallel computation is affected subject to specific constraints: 1 • Problem constrained (PC): Fixed-load Model. 2 • Time constrained (TC): Fixed-time Model. 3 • Memory constrained (MC): Fixed-Memory Model. • Parallel Performance Scalability: For a given parallel system and a given parallel computation/problem/algorithm – Definition. Informally: – Conditions of scalability. The ability of parallel system performance to increase – Factors affecting scalability. with increased problem size and system size. Parallel Computer Architecture, Chapter 4 EECC756 - Shaaban Parallel Programming, Chapter 1, handout #1 lec # 9 Spring2013 4-23-2013 Parallel Program Performance • Parallel processing goal is to maximize speedup: Time(1) Sequential Work Speedup = < Time(p) Max (Work + Synch Wait Time + Comm Cost + Extra Work) Fixed Problem Size Speedup Max for any processor Parallelizing Overheads • By: 1 – Balancing computations/overheads (workload) on processors -

Exploring Massive Parallel Computation with GPU

Need for parallelism Graphical Processor Units Gravitational Microlensing Modelling Exploring Massive Parallel Computation with GPU Ian Bond Massey University, Auckland, New Zealand 2011 Sagan Exoplanet Workshop Pasadena, July 25-29 2011 Ian Bond | Microlensing parallelism 1/40 Need for parallelism Graphical Processor Units Gravitational Microlensing Modelling Assumptions/Purpose You are all involved in microlensing modelling and you have (or are working on) your own code this lecture shows how to get started on getting code to run on a GPU then its over to you . Ian Bond | Microlensing parallelism 2/40 Need for parallelism Graphical Processor Units Gravitational Microlensing Modelling Outline 1 Need for parallelism 2 Graphical Processor Units 3 Gravitational Microlensing Modelling Ian Bond | Microlensing parallelism 3/40 Need for parallelism Graphical Processor Units Gravitational Microlensing Modelling Paralel Computing Parallel Computing is use of multiple computers, or computers with multiple internal processors, to solve a problem at a greater computational speed than using a single computer (Wilkinson 2002). How does one achieve parallelism? Ian Bond | Microlensing parallelism 4/40 Need for parallelism Graphical Processor Units Gravitational Microlensing Modelling Grand Challenge Problems A grand challenge problem is one that cannot be solved in a reasonable amount of time with todays computers’ Examples: – Modelling large DNA structures – Global weather forecasting – N body problem (N very large) – brain simulation Has microlensing -

Introduction to Openmp Paul Edmon ITC Research Computing Associate

Introduction to OpenMP Paul Edmon ITC Research Computing Associate FAS Research Computing Overview • Threaded Parallelism • OpenMP Basics • OpenMP Programming • Benchmarking FAS Research Computing Threaded Parallelism • Shared Memory • Single Node • Non-uniform Memory Access (NUMA) • One thread per core FAS Research Computing Threaded Languages • PThreads • Python • Perl • OpenCL/CUDA • OpenACC • OpenMP FAS Research Computing OpenMP Basics FAS Research Computing What is OpenMP? • OpenMP (Open Multi-Processing) – Application Program Interface (API) – Governed by OpenMP Architecture Review Board • OpenMP provides a portable, scalable model for developers of shared memory parallel applications • The API supports C/C++ and Fortran on a wide variety of architectures FAS Research Computing Goals of OpenMP • Standardization – Provide a standard among a variety shared memory architectures / platforms – Jointly defined and endorsed by a group of major computer hardware and software vendors • Lean and Mean – Establish a simple and limited set of directives for programming shared memory machines – Significant parallelism can be implemented by just a few directives • Ease of Use – Provide the capability to incrementally parallelize a serial program • Portability – Specified for C/C++ and Fortran – Most majors platforms have been implemented including Unix/Linux and Windows – Implemented for all major compilers FAS Research Computing OpenMP Programming Model Shared Memory Model: OpenMP is designed for multi-processor/core, shared memory machines Thread Based Parallelism: OpenMP programs accomplish parallelism exclusively through the use of threads Explicit Parallelism: OpenMP provides explicit (not automatic) parallelism, offering the programmer full control over parallelization Compiler Directive Based: Parallelism is specified through the use of compiler directives embedded in the C/C++ or Fortran code I/O: OpenMP specifies nothing about parallel I/O. -

Dcuda: Hardware Supported Overlap of Computation and Communication

dCUDA: Hardware Supported Overlap of Computation and Communication Tobias Gysi Jeremia Bar¨ Torsten Hoefler Department of Computer Science Department of Computer Science Department of Computer Science ETH Zurich ETH Zurich ETH Zurich [email protected] [email protected] [email protected] Abstract—Over the last decade, CUDA and the underlying utilization of the costly compute and network hardware. To GPU hardware architecture have continuously gained popularity mitigate this problem, application developers can implement in various high-performance computing application domains manual overlap of computation and communication [23], [27]. such as climate modeling, computational chemistry, or machine learning. Despite this popularity, we lack a single coherent In particular, there exist various approaches [13], [22] to programming model for GPU clusters. We therefore introduce overlap the communication with the computation on an inner the dCUDA programming model, which implements device- domain that has no inter-node data dependencies. However, side remote memory access with target notification. To hide these code transformations significantly increase code com- instruction pipeline latencies, CUDA programs over-decompose plexity which results in reduced real-world applicability. the problem and over-subscribe the device by running many more threads than there are hardware execution units. Whenever a High-performance system design often involves trading thread stalls, the hardware scheduler immediately proceeds with off sequential performance against parallel throughput. The the execution of another thread ready for execution. This latency architectural difference between host and device processors hiding technique is key to make best use of the available hardware perfectly showcases the two extremes of this design space.