Oblivious Network RAM and Leveraging Parallelism to Achieve Obliviousness

Recommended publications

High Performance Computing Through Parallel and Distributed Processing

Yadav S. et al., J. Harmoniz. Res. Eng., 2013, 1(2), 54-64. Journal Of Harmonized Research (JOHR), Journal Of Harmonized Research in Engineering, ISSN 2347 – 7393. Original Research Article. High Performance Computing through Parallel and Distributed Processing. Shikha Yadav, Preeti Dhanda, Nisha Yadav, Department of Computer Science and Engineering, Dronacharya College of Engineering, Khentawas, Farukhnagar, Gurgaon, India. For correspondence: preeti.dhanda01ATgmail.com. Received on: October 2013. Abstract: There is a very high need for High Performance Computing (HPC) in many applications, from space science to Artificial Intelligence. HPC shall be attained through Parallel and Distributed Computing. In this paper, Parallel and Distributed algorithms are discussed based on Parallel and Distributed Processors to achieve HPC. Programming concepts like threads, fork and sockets are discussed with some simple examples for HPC. Keywords: High Performance Computing, Parallel and Distributed processing, Computer Architecture. Introduction: Computer Architecture and Programming play a significant role for High Performance Computing (HPC) in large applications, from space science to Artificial Intelligence. Algorithms are problem-solving procedures, and these algorithms are later transformed into a particular programming language for HPC. There is a need to study algorithms for High Performance Computing. These algorithms are to be designed to compute in reasonable time to solve large problems like weather forecasting, Tsunami, Remote Sensing, National calamities, Defence, Mineral exploration, Finite-element, Cloud Computing, and Expert Systems etc. The algorithms are Non-Recursive Algorithms, Recursive Algorithms, Parallel Algorithms and Distributed Algorithms. The algorithms must be supported by the Computer Architecture. The Computer Architecture is characterized with Flynn’s Classification: SISD, SIMD, MIMD, and MISD. Most of the Computer Architectures are supported with SIMD (Single Instruction Multiple Data Streams).

Chapter 4 Algorithm Analysis

In this chapter we look at how to analyze the cost of algorithms in a way that is useful for comparing algorithms to determine which is likely to be better, at least for large input sizes, but is abstract enough that we don’t have to look at minute details of compilers and machine architectures. There are several parts to such an analysis. Firstly we need to abstract the cost from details of the compiler or machine. Secondly we have to decide on a concrete model that allows us to formally define the cost of an algorithm. Since we are interested in parallel algorithms, the model needs to consider parallelism. Thirdly we need to understand how to analyze costs in this model. These are the topics of this chapter. 4.1 Abstracting Costs. When we analyze the cost of an algorithm formally, we need to be reasonably precise about the model we are performing the analysis in. Question 4.1. How precise should this model be? For example, would it help to know the exact running time for each instruction? The model can be arbitrarily precise but it is often helpful to abstract away from some details such as the exact running time of each (kind of) instruction. For example, the model can posit that each instruction takes a single step (unit of time) whether it is an addition, a division, or a memory access operation. Some more advanced models, which we will not consider in this class, distinguish between different classes of instructions; for example, a model may require analyzing separately calculation (e.g., an addition or a multiplication) and communication (e.g., a memory read).

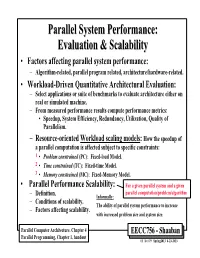

Parallel System Performance: Evaluation & Scalability

Parallel System Performance: Evaluation & Scalability
• Factors affecting parallel system performance:
– Algorithm-related, parallel program related, architecture/hardware-related.
• Workload-Driven Quantitative Architectural Evaluation:
– Select applications or suite of benchmarks to evaluate architecture either on real or simulated machine.
– From measured performance results compute performance metrics: Speedup, System Efficiency, Redundancy, Utilization, Quality of Parallelism.
– Resource-oriented Workload scaling models: How the speedup of a parallel computation is affected subject to specific constraints:
1. Problem constrained (PC): Fixed-load Model.
2. Time constrained (TC): Fixed-time Model.
3. Memory constrained (MC): Fixed-Memory Model.
• Parallel Performance Scalability: For a given parallel system and a given parallel computation/problem/algorithm
– Definition. Informally: The ability of parallel system performance to increase with increased problem size and system size.
– Conditions of scalability.
– Factors affecting scalability.
(Parallel Computer Architecture, Chapter 4; Parallel Programming, Chapter 1, handout #1. EECC756 - Shaaban, lec # 9, Spring 2013, 4-23-2013)
Parallel Program Performance
• Parallel processing goal is to maximize speedup (fixed problem size speedup):
$$\text{Speedup} = \frac{\text{Time}(1)}{\text{Time}(p)} < \frac{\text{Sequential Work}}{\max\,(\text{Work} + \text{Synch Wait Time} + \text{Comm Cost} + \text{Extra Work})}$$
where the Max is taken over any processor and the terms added to Work are the parallelizing overheads.
• By:
1. Balancing computations/overheads (workload) on processors
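The speedup bound quoted in the excerpt above can be checked numerically. The following is a minimal sketch, not taken from the slides: the `ProcessorCost` class, the `speedup_bound` function, and the sample numbers are all hypothetical, and every cost is assumed to be expressed in the same time unit.

```python
from dataclasses import dataclass


@dataclass
class ProcessorCost:
    """Per-processor cost terms from the speedup bound (hypothetical names)."""
    work: float        # useful computation assigned to this processor
    sync_wait: float   # time spent waiting at synchronization points
    comm: float        # communication cost
    extra_work: float  # redundant work introduced by parallelization

    def total(self) -> float:
        return self.work + self.sync_wait + self.comm + self.extra_work


def speedup_bound(sequential_work: float, costs: list[ProcessorCost]) -> float:
    """Upper bound on speedup: sequential work over the slowest processor's total."""
    if not costs:
        raise ValueError("need at least one processor")
    return sequential_work / max(c.total() for c in costs)


# Illustrative numbers only: 100 units of sequential work split over 4 processors.
costs = [
    ProcessorCost(work=25, sync_wait=2, comm=3, extra_work=0),
    ProcessorCost(work=25, sync_wait=0, comm=3, extra_work=2),
    ProcessorCost(work=25, sync_wait=5, comm=5, extra_work=5),  # the bottleneck
    ProcessorCost(work=25, sync_wait=1, comm=3, extra_work=1),
]
bound = speedup_bound(100.0, costs)
print(bound)
```

Because the denominator is a maximum over processors, a single overloaded processor caps the bound for the whole run, which is why the slide lists workload balancing first.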

Introduction to Parallel Computing, 2nd Edition

732A54 Big Data Analytics. Introduction to Parallel Computing. Christoph Kessler, IDA, Linköping University.
Traditional Use of Parallel Computing: Large-Scale HPC Applications
• High Performance Computing (HPC)
– Much computational work (in FLOPs, floating-point operations)
– Often, large data sets
– E.g. climate simulations, particle physics, engineering, sequence matching or protein docking in bioinformatics, …
• Single-CPU computers and even today’s multicore processors cannot provide such massive computation power
• Aggregate LOTS of computers → Clusters
– Need scalable parallel algorithms
– Need to exploit multiple levels of parallelism
(NSC Triolith)
More Recent Use of Parallel Computing: Big-Data Analytics Applications
• Big Data Analytics
– Data access intensive (disk I/O, memory accesses)
– Typically, very large data sets (GB … TB … PB … EB …)
– Also some computational work for combining/aggregating data
– E.g. data center applications, business analytics, click stream analysis, scientific data analysis, machine learning, …
– Soft real-time requirements on interactive queries
• Single-CPU and multicore processors cannot provide such massive computation power and I/O bandwidth+capacity
• Aggregate LOTS of computers → Clusters
– Need scalable parallel algorithms
– Need to exploit multiple levels of parallelism
– Fault tolerance
HPC vs Big-Data Computing
• Both need parallel computing
• Same kind of hardware – Clusters of (multicore) servers
• Same OS family (Linux)
• Different programming models, languages, and tools
– HPC application: HPC prog. languages: Fortran, C/C++ (Python). Programming models: MPI, OpenMP, … Scientific computing libraries: BLAS, … OS: Linux. HW: Cluster.
– Big-Data application: Big-Data prog. languages: Java, Scala, Python, … Programming models: MapReduce, Spark, … Big-data storage/access: HDFS, … OS: Linux. HW: Cluster.
→ Let us start with the common basis: Parallel computer architecture

Scalable Task Parallel Programming in the Partitioned Global Address Space

Scalable Task Parallel Programming in the Partitioned Global Address Space. DISSERTATION. Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University. By James Scott Dinan, M.S. Graduate Program in Computer Science and Engineering, The Ohio State University, 2010. Dissertation Committee: P. Sadayappan (Advisor), Atanas Rountev, Paolo Sivilotti. © Copyright by James Scott Dinan 2010. ABSTRACT: Applications that exhibit irregular, dynamic, and unbalanced parallelism are growing in number and importance in the computational science and engineering communities. These applications span many domains including computational chemistry, physics, biology, and data mining. In such applications, the units of computation are often irregular in size and the availability of work may depend on the dynamic, often recursive, behavior of the program. Because of these properties, it is challenging for these programs to achieve high levels of performance and scalability on modern high performance clusters. A new family of programming models, called the Partitioned Global Address Space (PGAS) family, provides the programmer with a global view of shared data and allows for asynchronous, one-sided access to data regardless of where it is physically stored. In this model, the global address space is distributed across the memories of multiple nodes and, for any given node, is partitioned into local patches that have high affinity and low access cost and remote patches that have a high access cost due to communication. The PGAS data model relaxes conventional two-sided communication semantics and allows the programmer to access remote data without the cooperation of the remote processor.

Compiling for a Multithreaded Dataflow Architecture : Algorithms, Tools, and Experience Feng Li

Compiling for a multithreaded dataflow architecture: algorithms, tools, and experience. Feng Li. To cite this version: Feng Li. Compiling for a multithreaded dataflow architecture: algorithms, tools, and experience. Other [cs.OH]. Université Pierre et Marie Curie - Paris VI, 2014. English. NNT: 2014PA066102. tel-00992753v2. HAL Id: tel-00992753, https://tel.archives-ouvertes.fr/tel-00992753v2. Submitted on 10 Sep 2014. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Université Pierre et Marie Curie, École Doctorale Informatique, Télécommunications et Électronique. Compiling for a multithreaded dataflow architecture: algorithms, tools, and experience, by Feng Li. Doctoral thesis in Computer Science, supervised by Albert Cohen, presented and publicly defended on 20 May 2014 before a jury composed of a President, two Rapporteurs, and three Examiners. I would like to dedicate this thesis to my mother Shuxia Li, for her love. Acknowledgements: I would like to thank my supervisor Albert Cohen; there's nothing more exciting than working with him. Albert, you are an extraordinary person, full of ideas and motivation. The discussions with you always enlighten me and help me. I am so lucky to have had you as my supervisor when I first came to research as a PhD student.

Massively Parallel Computers: Why Not Parallel Computers for the Masses?

Massively Parallel Computers: Why Not Parallel Computers for the Masses? Gordon Bell. Abstract: During the 1980s the computer engineering and science research community generally ignored parallel processing. With a focus on high performance computing embodied in the massive 1990s High Performance Computing and Communications (HPCC) program that has the short-term, teraflop peak performance goal using a network of thousands of computers, everyone with even passing interest in parallelism is involved with the massive parallelism "gold rush". Funding-wise the situation is bright; applications-wise massive parallelism is microscopic. While there are several programming models, the mainline is data parallel Fortran. However, new algorithms are required, negating the decades of progress in algorithms. Thus, utility will no doubt be the Achilles Heel of massive parallelism. The Teraflop: 1992. In 1989 I described the situation in high performance computers including several parallel architectures that could deliver teraflop power by 1995, but with no price constraint. I felt SIMDs and multicomputers could achieve this goal. A shared memory multiprocessor looked infeasible then. Traditional, multiple vector processor supercomputers such as Crays would simply not evolve to a teraflop until 2000. Here's what happened. 1. During the first half of 1992, NEC's four processor SX3 is the fastest computer, delivering 90% of its peak 22 Gflops for the Linpeak benchmark, and Cray's 16 processor YMP C90 has the greatest throughput. 2. The SIMD hardware approach of Thinking Machines was abandoned because it was only suitable for a few, very large scale problems, barely multiprogrammed, and uneconomical for workloads. It's unclear whether large SIMDs are "generation" scalable, and they are clearly not "size" scalable.

An External-Memory Work-Depth Model and Its Applications to Massively Parallel Join Algorithms

An External-Memory Work-Depth Model and Its Applications to Massively Parallel Join Algorithms. Xiao Hu, Ke Yi (Hong Kong University of Science and Technology), Paraschos Koutris (University of Wisconsin-Madison). (xhuam,yike)@cse.ust.hk [email protected]. ABSTRACT: The PRAM is a fundamental model in parallel computing, but it is seldom directly used in parallel algorithm design. Instead, work-depth models have enjoyed much more popularity, as they relieve algorithm designers and programmers from worrying about how various tasks should be assigned to each of the processors. Meanwhile, they also make it easy to study the fundamental parallel complexity of the algorithm, namely work and depth, which are irrelevant to the number of processors available. The massively parallel computation (MPC) model, which is a simplified version of the BSP model, has drawn a strong interest in recent years, due to the widespread popularity of many big data systems based on such a model. … available. This allows us to better focus on the inherent parallel complexity of the algorithm. On the other hand, given a particular p, an algorithm with work W and depth d can be optimally simulated on the PRAM in $O(d + W/p)$ steps [11–13]. Thirdly, work-depth models are syntactically similar to many programming languages supporting parallel computing, so algorithms designed in these models can be more easily implemented in practice. The PRAM can be considered as a fine-grained parallel model, where in each step, each processor carries out one unit of work. However, today’s massively parallel systems, such as MapReduce and Spark, are better captured by a coarse-grained model. The first coarse-grained parallel model is the bulk synchronous parallel (BSP)
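To make the simulation bound in the excerpt above concrete, here is a minimal sketch that evaluates d + W/p for a few processor counts. The function name `simulated_steps` and the sample values of W and d are hypothetical, and the constant factor hidden by the big-O notation is assumed to be 1 purely for illustration.

```python
def simulated_steps(work: float, depth: float, processors: int) -> float:
    """Evaluate d + W/p, the shape of the PRAM simulation bound.

    Constant factors hidden by the O(.) notation are ignored (assumed 1).
    """
    if processors < 1:
        raise ValueError("processors must be at least 1")
    return depth + work / processors


# Hypothetical algorithm: W = 1,000,000 units of work, depth d = 20.
W, d = 1_000_000, 20
for p in (1, 10, 1000, 100_000):
    print(p, simulated_steps(W, d, p))
```

Once p grows past W/d (here 50,000), the depth term dominates and additional processors barely reduce the step count, which is the sense in which work and depth describe an algorithm independently of the machine size.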

Scheduling on Asymmetric Parallel Architectures

Scheduling on Asymmetric Parallel Architectures. Filip Blagojevic. Dissertation submitted to the faculty of the Virginia Polytechnic Institute and State University in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Computer Science and Applications. Committee Members: Dimitrios S. Nikolopoulos (Chair), Kirk W. Cameron, Wu-chun Feng, David K. Lowenthal, Calvin J. Ribbens. May 30, 2008, Blacksburg, Virginia. Keywords: Multicore processors, Cell BE, process scheduling, high-performance computing, performance prediction, runtime adaptation. © Copyright 2008, Filip Blagojevic. (ABSTRACT) We explore runtime mechanisms and policies for scheduling dynamic multi-grain parallelism on heterogeneous multi-core processors. Heterogeneous multi-core processors integrate conventional cores that run legacy codes with specialized cores that serve as computational accelerators. The term multi-grain parallelism refers to the exposure of multiple dimensions of parallelism from within the runtime system, so as to best exploit a parallel architecture with heterogeneous computational capabilities between its cores and execution units. To maximize performance on heterogeneous multi-core processors, programs need to expose multiple dimensions of parallelism simultaneously. Unfortunately, programming with multiple dimensions of parallelism is to date an ad hoc process, relying heavily on the intuition and skill of programmers. Formal techniques are needed to optimize multi-dimensional parallel program designs. We investigate user- and kernel-level schedulers that dynamically "rightsize" the dimensions and degrees of parallelism on the asymmetric parallel platforms. The schedulers address the problem of mapping application-specific concurrency to an architecture with multiple hardware layers of parallelism, without requiring programmer intervention or sophisticated compiler support.

CUDA C++ Programming Guide

CUDA C++ Programming Guide. Design Guide, PG-02829-001_v11.4, September 2021.
Changes from Version 11.3
‣ Added Graph Memory Nodes.
‣ Formalized Asynchronous SIMT Programming Model.
Table of Contents
Chapter 1. Introduction
1.1. The Benefits of Using GPUs
1.2. CUDA®: A General-Purpose Parallel Computing Platform and Programming Model
1.3. A Scalable Programming Model
1.4. Document Structure
Chapter 2. Programming Model
2.1. Kernels
2.2. Thread Hierarchy
2.3. Memory Hierarchy
2.4. Heterogeneous Programming
2.5. Asynchronous

Introduction to Parallel Computing

732A54 / TDDE31 Big Data Analytics. Introduction to Parallel Computing. Christoph Kessler, IDA, Linköping University.
Traditional Use of Parallel Computing: Large-Scale HPC Applications
• High Performance Computing (HPC)
– Much computational work (in FLOPs, floating-point operations)
– Often, large data sets
– E.g. climate simulations, particle physics, engineering, sequence matching or protein docking in bioinformatics, …
• Single-CPU computers and even today’s multicore processors cannot provide such massive computation power
• Aggregate LOTS of computers → Clusters
– Need scalable parallel algorithms
– Need to exploit multiple levels of parallelism
(NSC Tetralith)
More Recent Use of Parallel Computing: Big-Data Analytics Applications
• Big Data Analytics
– Data access intensive (disk I/O, memory accesses)
– Typically, very large data sets (GB … TB … PB … EB …)
– Also some computational work for combining/aggregating data
– E.g. data center applications, business analytics, click stream analysis, scientific data analysis, machine learning, …
– Soft real-time requirements on interactive queries
• Single-CPU and multicore processors cannot provide such massive computation power and I/O bandwidth+capacity
• Aggregate LOTS of computers → Clusters
– Need scalable parallel algorithms
– Need to exploit multiple levels of parallelism
– Fault tolerance
(NSC Tetralith)
HPC vs Big-Data Computing
• Both need parallel computing
• Same kind of hardware – Clusters of (multicore) servers
• Same OS family (Linux)
• Different programming models, languages, and tools
– HPC application: HPC prog. languages: Fortran, C/C++ (Python). Par. programming models: MPI, OpenMP, … Scientific computing libraries: BLAS, … OS: Linux. HW: Cluster.
– Big-Data application: Big-Data prog. languages: Java, Scala, Python, … Par. programming models: MapReduce, Spark, … Big-data storage/access: HDFS, … OS: Linux. HW: Cluster.
→ Let us start with the common basis: Parallel computer architecture

Parallel Functional Arrays

Parallel Functional Arrays. Ananya Kumar, Guy E. Blelloch, Robert Harper (Carnegie Mellon University, USA). [email protected] [email protected] [email protected]. Abstract: The goal of this paper is to develop a form of functional arrays (sequences) that are as efficient as imperative arrays, can be used in parallel, and have well defined cost-semantics. The key idea is to consider sequences with functional value semantics but non-functional cost semantics. Because the value semantics is functional, “updating” a sequence returns a new sequence. We allow operations on “older” sequences (called interior sequences) to be more expensive than operations on the “most recent” sequences (called leaf sequences). We embed sequences in a language supporting fork-join parallelism. Due to the parallelism, operations can be interleaved non-deterministically, and, in conjunction with the different cost for interior and leaf sequences, this can lead to non-deterministic costs … ment with logarithmic time accesses and updates using balanced trees, but it seems that getting both accesses and updates in constant time cannot be achieved without some form of language extension. This means that algorithms for many fundamental problems are a logarithmic factor slower in functional languages than in imperative languages. This includes algorithms for basic problems such as generating a random permutation, and for many important graph problems (e.g., shortest-unweighted-paths, connected components, biconnected components, topological sort, and cycle detection). Simple algorithms for these problems take linear time in the imperative setting, but an additional logarithmic factor in time in the functional setting, at least without extensions. A variety of approaches have been suggested to alleviate this problem.