CUDA C++ Programming Guide

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

PRE PROCESSOR DIRECTIVES in –C LANGUAGE. 2401 – Course

PRE PROCESSOR DIRECTIVES IN –C LANGUAGE. 2401 – Course Notes. The C preprocessor is a macro processor that the C compiler runs automatically to transform a program before actual compilation takes place. It is called a macro processor because it allows the user to define macros, which are short abbreviations for longer constructs. This functionality provides several useful and practical tools for designing programs. One is including header files (files with predefined function definitions, user-defined data types, and declarations that can be included). Another is macro expansion: we can take arbitrary fragments of C code and abbreviate them into shorter definitions, namely macros, and once they are defined, either in an included header file or directly in the program itself, the user-defined definition can be used everywhere in the program. In other words, it works like a blank tile in the game Scrabble: if a player uses a blank tile to stand for a particular letter in a word, that tile represents that letter for the rest of the game. Special preprocessing directives can also be used to include or exclude parts of the program according to various conditions. To sum up, preprocessing directives are processed before program compilation, so their output can be regarded as code fragments prepared ahead of compilation. Some possible actions are the inclusion of other files in the file being compiled, the definition of symbolic constants and macros, the conditional compilation of program code, and the conditional execution of preprocessor directives.
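The symbolic constants, macros, and conditional compilation described in these course notes can be sketched in a few lines of C. This is a minimal illustration rather than code from the notes: `BUFFER_SIZE`, `SQUARE`, `ENABLE_LOGGING`, `LOG`, and `squared_buffer_size` are hypothetical names chosen for the example.

```c
#include <assert.h>
#include <stdio.h>

/* Symbolic constant: each later use of BUFFER_SIZE is replaced by 64
   before the compiler proper sees the code. (Hypothetical name.) */
#define BUFFER_SIZE 64

/* Function-like macro. The parentheses around the argument and around the
   whole body keep the expansion correct for inputs such as SQUARE(1 + 2). */
#define SQUARE(x) ((x) * (x))

/* Conditional compilation: ENABLE_LOGGING is a hypothetical flag.
   When it is not defined, LOG expands to a no-op expression. */
#ifdef ENABLE_LOGGING
#define LOG(msg) printf("log: %s\n", (msg))
#else
#define LOG(msg) ((void)0)
#endif

/* After preprocessing, the return statement reads: return ((64) * (64)); */
int squared_buffer_size(void)
{
    LOG("computing squared buffer size");
    return SQUARE(BUFFER_SIZE);
}
```

Building with `-DENABLE_LOGGING` (the define option accepted by GCC and Clang) makes the `LOG` call expand to `printf`; without it the call disappears from the compiled program.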

CUDA by Example

CUDA by Example AN INTRODUCTION TO GENERAL-PURPOSE GPU PROGRAMMING JASON SANDERS EDWARD KANDROT Upper Saddle River, NJ • Boston • Indianapolis • San Francisco New York • Toronto • Montreal • London • Munich • Paris • Madrid Capetown • Sydney • Tokyo • Singapore • Mexico City Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals. The authors and publisher have taken care in the preparation of this book, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. NVIDIA makes no warranty or representation that the techniques described herein are free from any Intellectual Property claims. The reader assumes all risk of any such claims based on his or her use of these techniques. The publisher offers excellent discounts on this book when ordered in quantity for bulk purchases or special sales, which may include electronic versions and/or custom covers and content particular to your business, training goals, marketing focus, and branding interests. For more information, please contact: U.S. Corporate and Government Sales (800) 382-3419 [email protected] For sales outside the United States, please contact: International Sales [email protected] Visit us on the Web: informit.com/aw Library of Congress Cataloging-in-Publication Data Sanders, Jason.

x86 Platform Coprocessor/PrPMC (PC on a PMC)

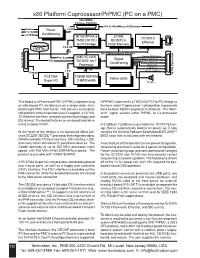

x86 Platform Coprocessor/PrPMC (PC on a PMC)

[Block diagram. Labels: 32b/33MHz PCI bus (PN1/PN2); TMS2250 PCI-to-PCI bridge; 32b/33MHz internal PCI bus; AMD SC2200 "GEODE (tm)" processor; 1K100 FPGA and PCI BIOS/PLA; 512KB BIOS/PLA flash memory; 10/100TX Ethernet (RJ45); Compact Flash site; IDE; analog SVGA video; COM 1 and COM 2 (RXD/TXD only); USB ports 1 and 2; LPC; PC87364 Super I/O (keyboard/mouse, floppy); 128MB SDRAM (16MWx64b); status LEDs; PC speaker; power conditioning and sequencing (+5V, +3.3V, +2.5V, Vcore); rear I/O on PN4; 36-pin connector.]

This board is a Processor PMC (PrPMC) implementing an x86-based PC architecture on a single-wide, standard height PMC form factor. This delivers a complete x86 platform with comprehensive I/O support, a 10/100-TX Ethernet interface, and disk access (both floppy and IDE drives). The board features an on-board hard drive using Compact Flash.

A PrPMC implements a TMS2250 PCI-to-PCI bridge to the host, and a “Coprocessor” configuration implements back-to-back 16550 compatible COM ports. The “Monarch” signal selects either PrPMC or Co-processor mode.

A 512Kbyte FLASH memory holds the 1K100 PLA image that is automatically loaded on power up. It also contains the General Software Embedded BIOS 2000™ BIOS code that is included with each board.

At the heart of the design is an Advanced Micro Devices SC2200 GEODE™ processor that integrates video,

Convey Overview

THE WORLD’S FIRST HYBRID-CORE COMPUTER. CONVEY HYBRID-CORE COMPUTING

Hybrid-core Computing (slide 3, 1/22/2010)
[Chart: performance/power efficiency (low to high) versus ease of deployment (difficult to easy).]
Heterogeneous solutions • can be much more efficient • still hard to program
Multicore solutions • don’t always scale well • parallel programming is hard
Convey HC-1: performance of application-specific hardware; programmability and deployment ease of an x86 server

Hybrid-Core Computing (slide 4, 7/12/2010)
Application-Specific Personalities • Extend the x86 instruction set • Implement key operations in hardware
[Diagram labels: Applications; Convey Compilers; x86-64 ISA; Custom ISA (Life Sciences, CAE, Custom, Financial, Oil & Gas); Shared Virtual Memory.]
Cache-coherent, shared memory • Both ISAs address common memory
*ISA: Instruction Set Architecture

HC-1 Hardware (slide 5, 1/22/2010)
[Diagram labels: PCI I/O; Intel chipset; personalities on four FPGAs; memory at 8 GB/s and 80 GB/s; cache coherent, shared virtual memory.]

Using Personalities (slide 6, 1/22/2010)
• Personalities are reloadable instruction sets
• Compiler generates x86 and coprocessor instructions from ANSI standard C/C++ & Fortran
• Executable can run on x86 nodes or Convey Hybrid-Core nodes
[Diagram labels: C/C++, Fortran; user specifies personality at compile time; Convey Software Development Suite; instruction descriptions; Personalities; Hybrid-Core Executable (x86-64 and coprocessor instructions); FPGA bitfiles; Convey HC-1 (Intel x86, coprocessor); personality loaded at runtime by OS.]

SYSTEM ARCHITECTURE HC-1 Architecture “Commodity” Intel Server Convey FPGA-based coprocessor Direct

Bitfusion Guide to CUDA Installation

WHITE PAPER – OCTOBER 2019 Bitfusion Guide to CUDA Installation

Table of Contents: Purpose; Introduction; Some Sources of Confusion; So Many Prerequisites; Installing the NVIDIA Repository; Installing the NVIDIA Driver; Installing NVIDIA CUDA; Installing cuDNN; Speed It Up!; Upgrading CUDA

Purpose: Bitfusion FlexDirect provides a GPU virtualization solution. It allows you to use the GPUs, or even partial GPUs (e.g., half of a GPU, or a quarter, or one-third of a GPU), on different servers as if they were attached to your local machine, a machine on which you can now run a CUDA application even though it has no GPUs of its own. Since Bitfusion FlexDirect software and ML/AI (machine learning/artificial intelligence) applications require other CUDA libraries and drivers, this document describes how to install the prerequisite software for both Bitfusion FlexDirect and for various ML/AI applications. If you already have your CUDA drivers and libraries installed, you can skip ahead to the chapter on installing Bitfusion FlexDirect. This document gives instruction and examples for Ubuntu, Red Hat, and CentOS systems.

Introduction: Bitfusion’s software tool is called FlexDirect. FlexDirect easily installs with a single command, but installing the third-party prerequisite drivers and libraries, plus any application and its prerequisite software, can seem a Gordian Knot to users new to CUDA code and ML/AI applications. We will begin untangling the knot with a table of the components involved.

C and C++ Preprocessor Directives #include #define Macros Inline

MODULE 10 PREPROCESSOR DIRECTIVES

My Training Period: hours

Abilities
▪ Able to understand and use #include.
▪ Able to understand and use #define.
▪ Able to understand and use macros and inline functions.
▪ Able to understand and use the conditional compilation – #if, #endif, #ifdef, #else, #ifndef and #undef.
▪ Able to understand and use #error, #pragma, # and ## operators and #line.
▪ Able to display error messages during conditional compilation.
▪ Able to understand and use assertions.

10.1 Introduction
- For the C/C++ preprocessor, preprocessing occurs before a program is compiled. The complete process involved in preprocessing, compiling and linking can be read in Module W.
- Some possible actions are:
  ▪ Inclusion of other files in the file being compiled.
  ▪ Definition of symbolic constants and macros.
  ▪ Conditional compilation of program code or code segment.
  ▪ Conditional execution of preprocessor directives.
- All preprocessor directives begin with #, and only white space characters may appear before a preprocessor directive on a line.

10.2 The #include Preprocessor Directive
- The #include directive causes a copy of a specified file to be included in place of the directive. The two forms of the #include directive are:
    //searches for header files and replaces this directive
    //with the entire contents of the header file here
    #include <header_file>
- Or
    #include "header_file"
  e.g.
    #include <stdio.h>
    #include "myheader.h"
- If the file name is enclosed in double quotes, the preprocessor searches in the same directory (local) as the source file being compiled for the file to be included; if it is not found there, the preprocessor then looks in the subdirectory associated with standard header files, as it does for the angle bracket form.
- This method is normally used to include user or programmer-defined header files.
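Several of the directives and operators listed in this module's abilities (`#ifndef`, `#error`, `#` and `##`) can be seen together in a short sketch. The names `STRINGIFY`, `XSTRINGIFY`, `CONCAT`, `MAX_USERS`, `user_count`, `limit_name`, and `limit_value` are hypothetical and are not taken from the module.

```c
#include <assert.h>
#include <string.h>

/* The # operator turns a macro argument into a string literal. */
#define STRINGIFY(x) #x

/* An extra level of indirection lets the argument be macro-expanded
   before it is turned into a string. */
#define XSTRINGIFY(x) STRINGIFY(x)

/* The ## operator pastes two tokens into a single token. */
#define CONCAT(a, b) a##b

/* Provide a default only if the build has not already defined the macro. */
#ifndef MAX_USERS
#define MAX_USERS 8
#endif

/* Stop compilation with a message if the configuration is invalid. */
#if MAX_USERS < 1
#error "MAX_USERS must be at least 1"
#endif

/* CONCAT(user, _count) becomes the identifier user_count. */
int CONCAT(user, _count)(void)
{
    return MAX_USERS;
}

/* The operand of # is not expanded, so this yields "MAX_USERS". */
const char *limit_name(void)
{
    return STRINGIFY(MAX_USERS);
}

/* With the default definition above, this yields "8". */
const char *limit_value(void)
{
    return XSTRINGIFY(MAX_USERS);
}
```

The two stringizing macros are kept separate on purpose: the difference between `limit_name` and `limit_value` shows that an argument used directly with `#` is taken as written, while an argument passed through another macro is expanded first.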

High Performance Computing Through Parallel and Distributed Processing

Yadav S. et al., J. Harmoniz. Res. Eng., 2013, 1(2), 54-64. Journal Of Harmonized Research (JOHR), Journal Of Harmonized Research in Engineering 1(2), 2013, 54-64. ISSN 2347 – 7393. Original Research Article.

High Performance Computing through Parallel and Distributed Processing. Shikha Yadav, Preeti Dhanda, Nisha Yadav. Department of Computer Science and Engineering, Dronacharya College of Engineering, Khentawas, Farukhnagar, Gurgaon, India. For Correspondence: preeti.dhanda01ATgmail.com. Received on: October 2013.

Abstract: There is a very high need for High Performance Computing (HPC) in many applications, from space science to Artificial Intelligence. HPC is attained through Parallel and Distributed Computing. In this paper, Parallel and Distributed algorithms are discussed, based on Parallel and Distributed Processors, to achieve HPC. Programming concepts like threads, fork and sockets are discussed with some simple examples for HPC.

Keywords: High Performance Computing, Parallel and Distributed processing, Computer Architecture

Introduction: Computer Architecture and Programming play a significant role for High Performance Computing (HPC) in large applications, from space science to Artificial Intelligence. Algorithms are problem solving procedures, and these algorithms are later transformed into a particular programming language for HPC. There is a need to study algorithms for High Performance Computing. These algorithms are to be designed to compute in reasonable time to solve large problems like weather forecasting, Tsunami, Remote Sensing, National calamities, Defence, Mineral exploration, Finite-element, Cloud Computing, and Expert Systems etc. The algorithms are Non-Recursive Algorithms, Recursive Algorithms, Parallel Algorithms and Distributed Algorithms. The algorithms must be supported by the Computer Architecture. The Computer Architecture is characterized with Flynn’s Classification: SISD, SIMD, MIMD, and MISD. Most of the Computer Architectures are supported with SIMD (Single Instruction Multiple Data Streams).

An Execution Model for Serverless Functions at the Edge

An Execution Model for Serverless Functions at the Edge. Adam Hall, Georgia Institute of Technology, Atlanta, Georgia, ach@gatech.edu. Umakishore Ramachandran, Georgia Institute of Technology, Atlanta, Georgia, rama@gatech.edu.

ABSTRACT Serverless computing platforms allow developers to host single-purpose applications that automatically scale with demand. In contrast to traditional long-running applications on dedicated, virtualized, or container-based platforms, serverless applications are intended to be instantiated when called, execute a single function, and shut down when finished. State-of-the-art serverless platforms achieve these goals by creating a new container instance to host a function when it is called and destroying the container when it completes. This design allows for cost and resource savings when hosting simple applications, such as those supporting IoT devices at the edge of the network. However, the use of containers introduces some overhead which may be unsuitable for applications

1 INTRODUCTION Enabling next generation technologies such as self-driving cars or smart cities via edge computing requires us to reconsider the way we characterize and deploy the services supporting those technologies. Edge/fog environments consist of many micro data centers spread throughout the edge of the network. This is in stark contrast to the cloud, where we assume the notion of unlimited resources available in a few centralized data centers. These micro data center environments must support large numbers of Internet of Things (IoT) devices on limited hardware resources, processing the massive amounts of data those devices generate while providing quick decisions to inform their actions [44]. One solution to supporting emerging technologies at the edge lies in serverless computing.

Parallel Architectures and Algorithms for Large-Scale Nonlinear Programming

Parallel Architectures and Algorithms for Large-Scale Nonlinear Programming. Carl D. Laird, Associate Professor, School of Chemical Engineering, Purdue University; Faculty Fellow, Mary Kay O’Connor Process Safety Center. Laird Research Group: http://allthingsoptimal.com

Landscape of Scientific Computing
[Figure: clock rate (GHz, logarithmic axis) versus year, 1980 to 2010, with growth-rate annotations of 50%/year and 20%/year. Source: Steven Edwards, Columbia University.]

Landscape of Scientific Computing
Clock rate, the source of past speed improvements, has stagnated. Hardware manufacturers are shifting their focus to energy efficiency (mobile, large data centers) and parallel architectures.
[Figure: clock rate (GHz) and number of cores versus year, 1980 to 2010.]

“… over a 15-year span… [problem] calculations improved by a factor of 43 million. [A] factor of roughly 1,000 was attributable to faster processor speeds, … [yet] a factor of 43,000 was due to improvements in the efficiency of software algorithms.” - attributed to Martin Grotschel [Steve Lohr, “Software Progress Beats Moore’s Law”, New York Times, March 7, 2011]

Landscape of Computing
High-Performance Parallel Architectures: Multi-core, Grid, HPC clusters, Specialized Architectures (GPU)

Landscape of Computing
data VM. iOS and Android device sales have surpassed PC. High-Performance Parallel Architectures. iPhone performance

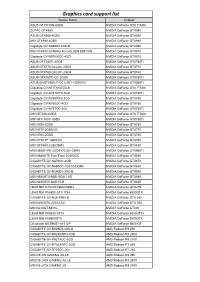

Graphics Card Support List

Graphics card support list

| Device Name | Chipset |
| --- | --- |
| ASUS GTXTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| ZOTAC GTX980 | NVIDIA GeForce GTX980 |
| ASUS GTX980-4GD5 | NVIDIA GeForce GTX980 |
| MSI GTX980-4GD5 | NVIDIA GeForce GTX980 |
| Gigabyte GV-N980D5-4GD-B | NVIDIA GeForce GTX980 |
| MSI GTX970 GAMING 4G GOLDEN EDITION | NVIDIA GeForce GTX970 |
| Gigabyte GV-N970IXOC-4GD | NVIDIA GeForce GTX970 |
| ASUS GTX780TI-3GD5 | NVIDIA GeForce GTX780Ti |
| ASUS GTX770-DC2OC-2GD5 | NVIDIA GeForce GTX770 |
| ASUS GTX760-DC2OC-2GD5 | NVIDIA GeForce GTX760 |
| ASUS GTX750TI-OC-2GD5 | NVIDIA GeForce GTX750Ti |
| ASUS ENGTX560-Ti-DCII/2D1-1GD5/1G | NVIDIA GeForce GTX560Ti |
| Gigabyte GV-NTITAN-6GD-B | NVIDIA GeForce GTX TITAN |
| Gigabyte GV-N78TWF3-3GD | NVIDIA GeForce GTX780Ti |
| Gigabyte GV-N780WF3-3GD | NVIDIA GeForce GTX780 |
| Gigabyte GV-N760OC-4GD | NVIDIA GeForce GTX760 |
| Gigabyte GV-N75TOC-2GI | NVIDIA GeForce GTX750Ti |
| MSI NTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| MSI GTX 780Ti 3GD5 | NVIDIA GeForce GTX780Ti |
| MSI N780-3GD5 | NVIDIA GeForce GTX780 |
| MSI N770-2GD5/OC | NVIDIA GeForce GTX770 |
| MSI N760-2GD5 | NVIDIA GeForce GTX760 |
| MSI N750 TF 1GD5/OC | NVIDIA GeForce GTX750 |
| MSI GTX680-2GB/DDR5 | NVIDIA GeForce GTX680 |
| MSI N660Ti-PE-2GD5-OC/2G-DDR5 | NVIDIA GeForce GTX660Ti |
| MSI N680GTX Twin Frozr 2GD5/OC | NVIDIA GeForce GTX680 |
| GIGABYTE GV-N670OC-2GD | NVIDIA GeForce GTX670 |
| GIGABYTE GV-N650OC-1GI/1G-DDR5 | NVIDIA GeForce GTX650 |
| GIGABYTE GV-N590D5-3GD-B | NVIDIA GeForce GTX590 |
| MSI N580GTX-M2D15D5/1.5G | NVIDIA GeForce GTX580 |
| MSI N465GTX-M2D1G-B | NVIDIA GeForce GTX465 |
| LEADTEK GTX275/896M-DDR3 | NVIDIA GeForce GTX275 |
| LEADTEK PX8800 GTX TDH | NVIDIA GeForce 8800GTX |
| GIGABYTE GV-N26-896H-B | |

NVIDIA Quadro RTX for V-Ray Next

NVIDIA QUADRO RTX V-RAY NEXT GPU. Image courtesy of © Dabarti Studio, rendered with V-Ray GPU.

Quadro RTX Accelerates V-Ray Next GPU Rendering. V-Ray Next GPU taps into the power of NVIDIA® Quadro® RTX™ to speed up production rendering with dedicated RT Cores for ray tracing and Tensor Cores for AI-accelerated denoising.¹ With up to 18X faster rendering than CPU-based solutions and enhanced performance with NVIDIA NVLink™, V-Ray Next GPU with RTX support provides incredible performance improvements for your rendering workloads.

Solutions for V-Ray Next GPU. NVIDIA Quadro® provides a wide range of RTX-enabled solutions for desktop, mobile, server-based rendering, and virtual workstations with NVIDIA Quadro Virtual Data Center Workstation (Quadro vDWS) software.² With up to 96 gigabytes (GB) of GPU memory available,³ Quadro RTX provides the power you need for the largest professional graphics and rendering workloads.

“Accelerating artist productivity is always our top priority, so we’re quick to take advantage of the latest ray-tracing hardware breakthroughs. By supporting NVIDIA RTX™ in V-Ray GPU, we’re bringing our customers an exciting new boost in their GPU production rendering speeds.” – Phillip Miller, Vice President, Product Management, Chaos Group

[Benchmark chart: V-Ray Next GPU Rendering Performance Increase on Quadro RTX GPUs. Horizontal axis: relative performance, 0 to 20. Bar labels as extracted: Quadro RTX 6000 x2 1885, Quadro RTX 6000 104, Quadro RTX 4000 783, CPU 1.] Desktop performance. Tests run on 1x Xeon Gold 6154 3 GHz (3.7 GHz Turbo), 64 GB DDR4 RAM, Win10 x64, Driver version 441.28. Performance results may vary depending on the scene.

NVIDIA Quadro professional graphics solutions are verified and recommended for the most demanding projects by Chaos Group.

NVIDIA Launches Tegra X1 Mobile Super Chip

NVIDIA Launches Tegra X1 Mobile Super Chip Maxwell GPU Architecture Delivers First Teraflops Mobile Processor, Powering Deep Learning and Computer Vision Applications NVIDIA today unveiled Tegra® X1, its next-generation mobile super chip with over one teraflops of processing power – delivering capabilities that open the door to unprecedented graphics and sophisticated deep learning and computer vision applications. Tegra X1 is built on the same NVIDIA Maxwell™ GPU architecture rolled out only months ago for the world's top-performing gaming graphics card, the GeForce® GTX 980. The 256-core Tegra X1 provides twice the performance of its predecessor, the Tegra K1, which is based on the previous-generation Kepler™ architecture and debuted at last year's Consumer Electronics Show. Tegra processors are built for embedded products, mobile devices, autonomous machines and automotive applications. Tegra X1 will begin appearing in the first half of the year. It will be featured in the newly announced NVIDIA DRIVE™ car computers. DRIVE PX is an auto-pilot computing platform that can process video from up to 12 onboard cameras to run capabilities providing Surround-Vision, for a seamless 360-degree view around the car, and Auto-Valet, for true self-parking. DRIVE CX is a complete cockpit platform designed to power the advanced graphics required across the increasing number of screens used for digital clusters, infotainment, head-up displays, virtual mirrors and rear-seat entertainment. "We see a future of autonomous cars, robots and drones that see and learn, with seeming intelligence that is hard to imagine," said Jen-Hsun Huang, CEO and co-founder, NVIDIA.