GPU-Based Deep Learning Inference

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

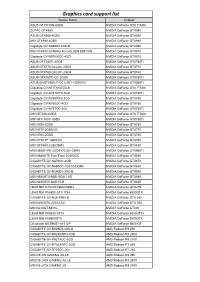

Graphics Card Support List

Graphics card support list

| Device Name | Chipset |
| --- | --- |
| ASUS GTXTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| ZOTAC GTX980 | NVIDIA GeForce GTX980 |
| ASUS GTX980-4GD5 | NVIDIA GeForce GTX980 |
| MSI GTX980-4GD5 | NVIDIA GeForce GTX980 |
| Gigabyte GV-N980D5-4GD-B | NVIDIA GeForce GTX980 |
| MSI GTX970 GAMING 4G GOLDEN EDITION | NVIDIA GeForce GTX970 |
| Gigabyte GV-N970IXOC-4GD | NVIDIA GeForce GTX970 |
| ASUS GTX780TI-3GD5 | NVIDIA GeForce GTX780Ti |
| ASUS GTX770-DC2OC-2GD5 | NVIDIA GeForce GTX770 |
| ASUS GTX760-DC2OC-2GD5 | NVIDIA GeForce GTX760 |
| ASUS GTX750TI-OC-2GD5 | NVIDIA GeForce GTX750Ti |
| ASUS ENGTX560-Ti-DCII/2D1-1GD5/1G | NVIDIA GeForce GTX560Ti |
| Gigabyte GV-NTITAN-6GD-B | NVIDIA GeForce GTX TITAN |
| Gigabyte GV-N78TWF3-3GD | NVIDIA GeForce GTX780Ti |
| Gigabyte GV-N780WF3-3GD | NVIDIA GeForce GTX780 |
| Gigabyte GV-N760OC-4GD | NVIDIA GeForce GTX760 |
| Gigabyte GV-N75TOC-2GI | NVIDIA GeForce GTX750Ti |
| MSI NTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| MSI GTX 780Ti 3GD5 | NVIDIA GeForce GTX780Ti |
| MSI N780-3GD5 | NVIDIA GeForce GTX780 |
| MSI N770-2GD5/OC | NVIDIA GeForce GTX770 |
| MSI N760-2GD5 | NVIDIA GeForce GTX760 |
| MSI N750 TF 1GD5/OC | NVIDIA GeForce GTX750 |
| MSI GTX680-2GB/DDR5 | NVIDIA GeForce GTX680 |
| MSI N660Ti-PE-2GD5-OC/2G-DDR5 | NVIDIA GeForce GTX660Ti |
| MSI N680GTX Twin Frozr 2GD5/OC | NVIDIA GeForce GTX680 |
| GIGABYTE GV-N670OC-2GD | NVIDIA GeForce GTX670 |
| GIGABYTE GV-N650OC-1GI/1G-DDR5 | NVIDIA GeForce GTX650 |
| GIGABYTE GV-N590D5-3GD-B | NVIDIA GeForce GTX590 |
| MSI N580GTX-M2D15D5/1.5G | NVIDIA GeForce GTX580 |
| MSI N465GTX-M2D1G-B | NVIDIA GeForce GTX465 |
| LEADTEK GTX275/896M-DDR3 | NVIDIA GeForce GTX275 |
| LEADTEK PX8800 GTX TDH | NVIDIA GeForce 8800GTX |
| GIGABYTE GV-N26-896H-B | … |

NVIDIA Quadro RTX for V-Ray Next

NVIDIA QUADRO RTX V-RAY NEXT GPU. Image courtesy of © Dabarti Studio, rendered with V-Ray GPU.

Quadro RTX Accelerates V-Ray Next GPU Rendering: V-Ray Next GPU taps into the power of NVIDIA® Quadro® RTX™ to speed up production rendering with dedicated RT Cores for ray tracing and Tensor Cores for AI-accelerated denoising.¹ With up to 18X faster rendering than CPU-based solutions and enhanced performance with NVIDIA NVLink™, V-Ray Next GPU with RTX support provides incredible performance improvements for your rendering workloads.

Solutions for V-Ray Next GPU: NVIDIA Quadro® provides a wide range of RTX-enabled solutions for desktop, mobile, server-based rendering, and virtual workstations with NVIDIA Quadro Virtual Data Center Workstation (Quadro vDWS) software.² With up to 96 gigabytes (GB) of GPU memory available,³ Quadro RTX provides the power you need for the largest professional graphics and rendering workloads.

“Accelerating artist productivity is always our top priority, so we’re quick to take advantage of the latest ray-tracing hardware breakthroughs. By supporting NVIDIA RTX in V-Ray GPU, we’re bringing our customers an exciting new boost in their GPU production rendering speeds.” – Phillip Miller, Vice President, Product Management, Chaos Group

[Figure: Benchmark: V-Ray Next GPU Rendering Performance Increase on Quadro RTX GPUs. Horizontal axis: Relative Performance. Bars: Quadro RTX 6000 x2, Quadro RTX 6000, Quadro RTX 4000, CPU.]

Desktop performance: Tests run on 1x Xeon Gold 6154 3 GHz (3.7 GHz Turbo), 64 GB DDR4 RAM, Win10 x64, Driver version 441.28. Performance results may vary depending on the scene.

NVIDIA Quadro professional graphics solutions are verified and recommended for the most demanding projects by Chaos Group.

NVIDIA Launches Tegra X1 Mobile Super Chip

NVIDIA Launches Tegra X1 Mobile Super Chip. Maxwell GPU Architecture Delivers First Teraflops Mobile Processor, Powering Deep Learning and Computer Vision Applications.

NVIDIA today unveiled Tegra® X1, its next-generation mobile super chip with over one teraflops of processing power – delivering capabilities that open the door to unprecedented graphics and sophisticated deep learning and computer vision applications.

Tegra X1 is built on the same NVIDIA Maxwell™ GPU architecture rolled out only months ago for the world's top-performing gaming graphics card, the GeForce® GTX 980. The 256-core Tegra X1 provides twice the performance of its predecessor, the Tegra K1, which is based on the previous-generation Kepler™ architecture and debuted at last year's Consumer Electronics Show.

Tegra processors are built for embedded products, mobile devices, autonomous machines and automotive applications. Tegra X1 will begin appearing in the first half of the year. It will be featured in the newly announced NVIDIA DRIVE™ car computers.

DRIVE PX is an auto-pilot computing platform that can process video from up to 12 onboard cameras to run capabilities providing Surround-Vision, for a seamless 360-degree view around the car, and Auto-Valet, for true self-parking. DRIVE CX is a complete cockpit platform designed to power the advanced graphics required across the increasing number of screens used for digital clusters, infotainment, head-up displays, virtual mirrors and rear-seat entertainment.

"We see a future of autonomous cars, robots and drones that see and learn, with seeming intelligence that is hard to imagine," said Jen-Hsun Huang, CEO and co-founder, NVIDIA.

Nvidia Cuda Getting Started Guide for Linux

NVIDIA CUDA GETTING STARTED GUIDE FOR LINUX. DU-05347-001_v7.0 | March 2015. Installation and Verification on Linux Systems.

TABLE OF CONTENTS

- Chapter 1. Introduction
  - 1.1. System Requirements
    - 1.1.1. x86 32-bit Support
  - 1.2. About This Document
- Chapter 2. Pre-installation Actions
  - 2.1. Verify You Have a CUDA-Capable GPU
  - 2.2. Verify You Have a Supported Version of Linux
  - 2.3. Verify the System Has gcc Installed
  - 2.4. Choose an Installation Method
  - 2.5. Download the NVIDIA CUDA Toolkit
  - 2.6. Handle Conflicting Installation Methods
- Chapter 3. Package Manager Installation
  - 3.1. Overview

Msi Nvidia Drivers Download Update MSI Graphics Card Driver on Windows 10 & 7

Update MSI Graphics Card Driver on Windows 10 & 7. Easily!

Updating your MSI graphics card driver can improve gaming performance, so it’s recommended that you keep the driver up to date. In this post, you’ll learn two ways to download and install the latest MSI graphics card driver:

- Download the MSI graphics card driver manually
- Download and install the MSI graphics card driver automatically

Way 1: Download the MSI graphics card driver manually. MSI provides graphics drivers on its website, for instance the NVIDIA graphics card driver and the AMD graphics card driver, so you can check for and download the latest driver for your graphics card there. Drivers are available in the SUPPORT section. Go to the MSI website, enter the name of your graphics card, and perform a quick search. Then follow the on-screen instructions to download the driver you need. MSI regularly uploads new drivers to its website, so it’s recommended that you check for driver releases often in order to get the latest driver promptly. If you don’t have the time and patience to download the driver manually, Way 2 may be a better option for you.

Way 2: Download and install the MSI graphics card driver automatically. If you don’t have the time, patience or computer skills to update the MSI graphics driver manually, you can do it automatically with Driver Easy. Driver Easy will automatically recognize your system and find the correct drivers for it.

Cuda C Programming Guide

CUDA C PROGRAMMING GUIDE. PG-02829-001_v10.0 | October 2018. Design Guide.

CHANGES FROM VERSION 9.0

- Documented restriction that operator-overloads cannot be `__global__` functions in Operator Function.
- Removed guidance to break 8-byte shuffles into two 4-byte instructions. 8-byte shuffle variants are provided since CUDA 9.0. See Warp Shuffle Functions.
- Passing `__restrict__` references to `__global__` functions is now supported. Updated comment in `__global__` functions and function templates.
- Documented `CUDA_ENABLE_CRC_CHECK` in CUDA Environment Variables.
- Warp matrix functions now support matrix products with m=32, n=8, k=16 and m=8, n=32, k=16 in addition to m=n=k=16.

TABLE OF CONTENTS

- Chapter 1. Introduction
  - 1.1. From Graphics Processing to General Purpose Parallel Computing
  - 1.2. CUDA®: A General-Purpose Parallel Computing Platform and Programming Model
  - 1.3. A Scalable Programming Model
  - 1.4. Document Structure
- Chapter 2. Programming Model
  - 2.1. Kernels
  - 2.2. Thread Hierarchy

Numerical Behavior of NVIDIA Tensor Cores

Numerical behavior of NVIDIA tensor cores. Massimiliano Fasi¹, Nicholas J. Higham², Mantas Mikaitis² and Srikara Pranesh². ¹ School of Science and Technology, Örebro University, Örebro, Sweden. ² Department of Mathematics, University of Manchester, Manchester, UK.

ABSTRACT: We explore the floating-point arithmetic implemented in the NVIDIA tensor cores, which are hardware accelerators for mixed-precision matrix multiplication available on the Volta, Turing, and Ampere microarchitectures. Using Volta V100, Turing T4, and Ampere A100 graphics cards, we determine what precision is used for the intermediate results, whether subnormal numbers are supported, what rounding mode is used, in which order the operations underlying the matrix multiplication are performed, and whether partial sums are normalized. These aspects are not documented by NVIDIA, and we gain insight by running carefully designed numerical experiments on these hardware units. Knowing the answers to these questions is important if one wishes to: (1) accurately simulate NVIDIA tensor cores on conventional hardware; (2) understand the differences between results produced by code that utilizes tensor cores and code that uses only IEEE 754-compliant arithmetic operations; and (3) build custom hardware whose behavior matches that of NVIDIA tensor cores. As part of this work we provide a test suite that can be easily adapted to test newer versions of the NVIDIA tensor cores as well as similar accelerators from other vendors, as they become available. Moreover, we identify a non-monotonicity issue …
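The abstract's question of "what precision is used for the intermediate results" can be made concrete with a small sketch. The Python below is only an illustration of why accumulator precision matters in a mixed-precision dot product; it is not a model of tensor-core behavior, which the paper determines experimentally. The helper names `round_to` and `dot` are hypothetical, and rounding is emulated with the standard `struct` formats `e` (binary16) and `f` (binary32).

```python
import struct

def round_to(fmt: str, x: float) -> float:
    """Round a Python float (binary64) to the format named by a struct code.

    'e' is IEEE 754 binary16 and 'f' is binary32; packing rounds to nearest.
    """
    return struct.unpack("<" + fmt, struct.pack("<" + fmt, x))[0]

def dot(a, b, acc_fmt: str) -> float:
    """Dot product whose products and partial sums are rounded to acc_fmt.

    Assumption for illustration: each product and each partial sum is
    computed in binary64 first and then rounded to the accumulator format.
    """
    acc = 0.0
    for x, y in zip(a, b):
        prod = round_to(acc_fmt, x * y)
        acc = round_to(acc_fmt, acc + prod)
    return acc

# 4096 products of 1.0 * 1.0; both inputs are exactly representable in binary16.
n = 4096
a = [round_to("e", 1.0)] * n
b = [round_to("e", 1.0)] * n

half_result = dot(a, b, "e")    # binary16 accumulator
single_result = dot(a, b, "f")  # binary32 accumulator

print(half_result)    # 2048.0: the sum stalls once 2048 + 1 rounds back to 2048
print(single_result)  # 4096.0
```

With a binary16 accumulator the running sum stops growing at 2048, because binary16 has an 11-bit significand and 2049 is not representable; the binary32 accumulator returns the exact result. Differences of this kind are what separate one choice of intermediate precision from another.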

NVIDIA Ampere GA102 GPU Architecture Whitepaper

NVIDIA AMPERE GA102 GPU ARCHITECTURE. Second-Generation RTX. Updated with NVIDIA RTX A6000 and NVIDIA A40 Information. V2.0.

Table of Contents

- Introduction
- GA102 Key Features
- 2x FP32 Processing
- Second-Generation RT Core
- Third-Generation Tensor Cores
- GDDR6X and GDDR6 Memory
- Third-Generation NVLink®
- PCIe Gen 4
- Ampere GPU Architecture In-Depth
- GPC, TPC, and SM High-Level Architecture
- ROP Optimizations
- GA10x SM Architecture
- 2x FP32 Throughput
- Larger and Faster Unified Shared Memory and L1 Data Cache
- Performance Per Watt
- Second-Generation Ray Tracing Engine in GA10x GPUs
- Ampere Architecture RTX Processors in Action
- GA10x GPU Hardware Acceleration for Ray-Traced Motion Blur
- Third-Generation Tensor Cores in GA10x GPUs
- Comparison of Turing vs GA10x GPU Tensor Cores
- NVIDIA Ampere Architecture Tensor Cores Support New DL Data Types
- Fine-Grained Structured Sparsity
- NVIDIA DLSS 8K
- GDDR6X Memory
- RTX IO
- Introducing NVIDIA RTX IO
- How NVIDIA RTX IO Works
- Display and Video Engine
- DisplayPort 1.4a with DSC 1.2a
- HDMI 2.1 with DSC 1.2a
- Fifth Generation NVDEC - Hardware-Accelerated Video Decoding
- AV1 Hardware Decode
- Seventh Generation NVENC - Hardware-Accelerated Video Encoding
- Conclusion
- Appendix A - Additional GeForce GA10x GPU Specifications
- GeForce RTX 3090
- GeForce RTX 3070
- Appendix B - New Memory Error Detection and Replay (EDR) Technology
- Appendix C - RTX A6000 GPU Performance

List of Figures

Figure 1. …

Manycore GPU Architectures and Programming, Part 1

Lecture 19: Manycore GPU Architectures and Programming, Part 1. Concurrent and Multicore Programming, CSE 436/536, [email protected], www.secs.oakland.edu/~yan

Topics (Part 2)
• Parallel architectures and hardware – Parallel computer architectures – Memory hierarchy and cache coherency
• Manycore GPU architectures and programming – GPU architectures – CUDA programming – Introduction to offloading model in OpenMP and OpenACC
• Programming on large scale systems (Chapter 6) – MPI (point to point and collectives) – Introduction to PGAS languages, UPC and Chapel
• Parallel algorithms (Chapter 8, 9 & 10) – Dense matrix, and sorting

Manycore GPU Architectures and Programming: Outline
• Introduction – GPU architectures, GPGPUs, and CUDA
• GPU Execution model
• CUDA Programming model
• Working with Memory in CUDA – Global memory, shared and constant memory
• Streams and concurrency
• CUDA instruction intrinsic and library
• Performance, profiling, debugging, and error handling
• Directive-based high-level programming model – OpenACC and OpenMP

Computer Graphics. GPU: Graphics Processing Unit

Graphics Processing Unit (GPU). Image: http://www.ntu.edu.sg/home/ehchua/programming/opengl/CG_BasicsTheory.html

Graphics Processing Unit (GPU)
• Enriching user visual experience
• Delivering energy-efficient computing
• Unlocking potentials of complex apps
• Enabling deeper scientific discovery

What is GPU Today?
• It is a processor optimized for 2D/3D graphics, video, visual computing, and display.
• It is highly parallel, highly multithreaded multiprocessor optimized for visual …

Geforce® Gtx 680 2Gb Vcggtx680xpb

FILENAME: PNY-GeForce-GTX-680-2GB MODIFIED: April 25, 2013 12:02 PM

GEFORCE® GTX 680 2GB VCGGTX680XPB

Game-Changing Innovation: The GeForce® GTX 680 delivers more than just state-of-the-art features and technology. It gives you truly game-changing performance that taps into the power of the next-generation GeForce® Kepler architecture to redefine smooth, seamless, lifelike gaming. NVIDIA GPU Boost technology dynamically maximizes clock speeds to push performance to new levels and bring out the best in every game. Ultra-smooth gaming with exciting new NVIDIA Adaptive V-Sync technology.

PRODUCT SPECIFICATIONS

| Specification | Value |
| --- | --- |
| GPU | GeForce® GTX 680 |
| CUDA Cores | 1536 |
| Core Speed | 1006 MHz |
| Boost Speed | 1058 MHz |
| Memory Size | 2048 MB GDDR5 |
| Memory Interface | 256 bit |
| Memory Frequency | 6.0 Gbps |
| TDP | 195W-Active |
| SLI | 3-Way SLI |
| Multi-Screen | 4 Concurrent Screens***, NVIDIA 3D Surround* |

PACKAGE INCLUDES

• GeForce® GTX 680 2GB graphics card
• Quick Installation Guide
• Installation DVD, which includes: Detailed installation guide, NVIDIA® Graphics Drivers, NVIDIA® GeForce® Demos, PhysX™ System Software
• DVI-to-VGA adapter
• 6-pin PCIe to Molex Power Adapter

REQUIREMENTS

• PCI Express compliant motherboard with one dual-width x16 graphics slot
• Two 6-pin PCI Express supplementary power connectors
• Minimum 550W or greater system power supply (with a minimum 12V current rating of 38A)
• 300 MB of available hard-disk space
• 2GB system memory (2GB or higher recommended)
• Microsoft Windows 7, Windows Vista, or Windows XP Operating System (32 or 64-bit)
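The listed specifications are enough for a rough back-of-the-envelope estimate of peak memory bandwidth and peak single-precision throughput. The sketch below uses only the numbers from the sheet, under two assumptions that the sheet does not state: that "6.0 Gbps" is the per-pin data rate of the memory interface, and that each CUDA core completes one fused multiply-add (counted as 2 floating-point operations) per clock. Function names are hypothetical.

```python
# Values taken from the specification sheet above.
CUDA_CORES = 1536
CORE_CLOCK_MHZ = 1006
BOOST_CLOCK_MHZ = 1058
MEMORY_BUS_BITS = 256
MEMORY_RATE_GBPS = 6.0  # assumed to be the per-pin data rate


def memory_bandwidth_gb_s(bus_bits: int, rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width in bits times per-pin rate, over 8 bits per byte."""
    return bus_bits * rate_gbps / 8


def peak_fp32_gflops(cores: int, clock_mhz: float, flops_per_cycle: int = 2) -> float:
    """Peak GFLOPS, assuming each core retires `flops_per_cycle` FLOPs per clock.

    The default of 2 (one fused multiply-add per cycle) is an assumption,
    not a figure from the specification sheet.
    """
    return cores * flops_per_cycle * clock_mhz / 1000


bandwidth = memory_bandwidth_gb_s(MEMORY_BUS_BITS, MEMORY_RATE_GBPS)
base_gflops = peak_fp32_gflops(CUDA_CORES, CORE_CLOCK_MHZ)
boost_gflops = peak_fp32_gflops(CUDA_CORES, BOOST_CLOCK_MHZ)

print(f"Memory bandwidth: {bandwidth:.1f} GB/s")     # 192.0 GB/s
print(f"Peak FP32 (base):  {base_gflops:.1f} GFLOPS")   # 3090.4 GFLOPS
print(f"Peak FP32 (boost): {boost_gflops:.1f} GFLOPS")  # 3250.2 GFLOPS
```

These are theoretical upper bounds; sustained performance in inference or rendering workloads is typically lower and depends on how well the workload uses the memory system.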

Geforce 500 Series Graphics Cards, Including Without Limitation the Geforce GTX

c) GeForce 500 series graphics cards, including without limitation the GeForce GTX 580, GeForce GTX 570, GeForce GTX 560 Ti, GeForce GTX 560, GeForce GTX 550 Ti, and GeForce GT 520; d) GeForce 400 series graphics cards, including without limitation the GeForce GTX 480, GeForce GTX 470, GeForce GTX 460, GeForce GTS 450, GeForce GTS 440, and GeForce GT 430; and e) GeForce 200 series graphics cards, including without limitation the GeForce GT 240, GeForce GT 220, and GeForce 210.

134. On information and belief, the Accused ’734 Biostar Products contain at least one of the following Accused NVIDIA ’734 Products that infringe at least claims 1-3, 7-9, 12-15, 17, and 19 of the ’734 patent, literally or under the doctrine of equivalents: the GF100, GF104, GF108, GF110, GF114, GF116, GF119, GK104, GK106, GK107, GK208, GM107, GT215, GT216, and GT218, which are infringing NVIDIA GPUs. (See Section VI.D.1 and claim charts attached as Exhibit 28.)

3. ECS

135. On information and belief, ECS imports, sells for importation, and/or sells after importation into the United States, Accused Products that incorporate Accused NVIDIA ’734 Products (the “Accused ECS ’734 Products”) and therefore infringe the ’734 patent, literally or under the doctrine of equivalents.

136. On information and belief, the Accused ECS ’734 Products include at least the following products that directly infringe at least claims 1-3, 7-9, 12-15, 17, and 19 of the ’734 patent, literally or under the doctrine of equivalents: a) GeForce 600 series graphics cards, including without limitation …

Numerical Behavior of NVIDIA Tensor Cores Fasi, Massimiliano And

Numerical Behavior of NVIDIA Tensor Cores. Fasi, Massimiliano and Higham, Nicholas J. and Mikaitis, Mantas and Pranesh, Srikara. 2020. MIMS EPrint: 2020.10. Manchester Institute for Mathematical Sciences, School of Mathematics, The University of Manchester. Reports available from: http://eprints.maths.manchester.ac.uk/ And by contacting: The MIMS Secretary, School of Mathematics, The University of Manchester, Manchester, M13 9PL, UK. ISSN 1749-9097.

Numerical Behavior of NVIDIA Tensor Cores. Massimiliano Fasi*¹, Nicholas J. Higham², Mantas Mikaitis², and Srikara Pranesh². ¹ School of Science and Technology, Örebro University, Örebro, Sweden. ² Department of Mathematics, University of Manchester, Manchester, United Kingdom. Corresponding author: Mantas Mikaitis². Email address: [email protected]

ABSTRACT: We explore the floating-point arithmetic implemented in the NVIDIA tensor cores, which are hardware accelerators for mixed-precision matrix multiplication available on the Volta, Turing, and Ampere microarchitectures. Using Volta V100, Turing T4 and Ampere A100 graphics cards, we determine what precision is used for the intermediate results, whether subnormal numbers are supported, what rounding mode is used, in which order the operations underlying the matrix multiplication are performed, and whether partial sums are normalized. These aspects are not documented by NVIDIA, and we gain insight by running carefully designed numerical experiments on these hardware units. Knowing the answers to these questions is important if one wishes to: 1) accurately simulate NVIDIA tensor cores on conventional hardware; 2) understand the differences between results produced by code that utilizes tensor cores and code that uses only IEEE 754-compliant arithmetic operations; and 3) build custom hardware whose behavior matches that of NVIDIA tensor cores.