(GPUs) for Department of Defense (DoD) Digital Signal Processing (DSP) Applications

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Physically Based Rendering: from Theory to Implementation Pdf, Epub, Ebook

Matt Pharr, Greg Humphreys, Wenzel Jakob | 1266 pages | 18 Oct 2016 | ELSEVIER SCIENCE & TECHNOLOGY | 9780128006450 | English | San Francisco, United States

The text should make more clear that we're making use of the tristimulus theory of color perception in that step. I wrote a technical perspective, The Ray-Tracing Engine That Could, that introduced the article and helped frame the work's achievements for a non-graphics audience. Design and novel uses of higher-dimensional rasterization. Lecture 7: Splines, Curves and Surfaces Shirley et al. Hardcover ISBN: Stanford csb I recently had a great time teaching the and installments of csb, the graduate-level rendering course at Stanford. A practical shading model for ray tracing. Then, in the code below, the negation of 'd' should be removed and in the places where 'd' is added in the computations of tx and ty, subtraction should be used. Feiner, and Kurt Akeley. Osgood this is an outstanding reference Lecture 4: Transforms Shirley et al. I was also fortunate to have the opportunity to teach the class. Given its unconventional preparation style, this textbook stands out because of its descriptions of the tradeoffs involved in developing a complete working renderer. Index of Miscellaneous Identifiers. Wenzel is also the lead developer of the Mitsuba renderer, a research-oriented rendering system. Physically Based Rendering, Second Edition, describes both the mathematical theory behind a modern photorealistic rendering system as well as its practical implementation.

Rendering Complex Scenes with Memory-Coherent Ray Tracing

To appear in Proceedings of SIGGRAPH 1997

Rendering Complex Scenes with Memory-Coherent Ray Tracing
Matt Pharr, Craig Kolb, Reid Gershbein, Pat Hanrahan
Computer Science Department, Stanford University

Abstract

Simulating realistic lighting and rendering complex scenes are usually considered separate problems with incompatible solutions. Accurate lighting calculations are typically performed using ray tracing algorithms, which require that the entire scene database reside in memory to perform well. Conversely, most systems capable of rendering complex scenes use scan-conversion algorithms that access memory coherently, but are unable to incorporate sophisticated illumination. We have developed algorithms that use caching and lazy creation of texture and geometry to manage scene complexity. To improve cache performance, we increase locality of reference by dynamically reordering the rendering computation based on the contents of the cache. We have used these algorithms to compute images of scenes containing millions of primitives, while storing ten percent of the scene description in memory.

... both. For example, Z-buffer rendering algorithms operate on a single primitive at a time, which makes it possible to build rendering hardware that does not need access to the entire scene. More recently, the Talisman architecture was designed to exploit frame-to-frame coherence as a means of accelerating rendering and reducing memory bandwidth requirements[21].

Kajiya has written a whitepaper that proposes an architecture for Monte Carlo ray tracing systems that is designed to improve coherence across all levels of the memory hierarchy, from processor caches to disk storage[13]. The rendering computation is decomposed into parts (ray-object intersections, shading calculations, and calculating spawned rays) that are performed independently. The coherence of memory references is increased through careful management of the interaction of the computation and the memory that it references, reducing overall running time and facil-

Course Overview

Course overview
Digital Image Synthesis
Yung-Yu Chuang, with slides by Mario Costa Sousa, Pat Hanrahan and Ravi Ramamoorthi

Logistics
• Meeting time: 2:20pm-5:20pm, Thursday
• Classroom: CSIE Room 111
• Instructor: Yung-Yu Chuang ([email protected])
• TA: 陳育聖
• Webpage: http://www.csie.ntu.edu.tw/~cyy/rendering id/password
• Mailing list: [email protected] Please subscribe via https://cmlmail.csie.ntu.edu.tw/mailman/listinfo/rendering/

Prerequisites
• C++ programming experience is required.
• Basic knowledge of algorithms and data structures is essential.
• Knowledge of linear algebra, probability, calculus and numerical methods is a plus.
• Though not required, it is recommended that you have background knowledge of computer graphics.

Requirements (subject to change)
• 3 programming assignments (60%)
• Class participation (5%)
• Final project (35%)

Textbook
Physically Based Rendering from Theory to Implementation, 2nd ed, by Matt Pharr and Greg Humphreys
• Authors have a lot of experience with ray tracing
• Complete (educational) code, more concrete
• Has been used in many courses and papers
• Implements some advanced or difficult-to-implement methods: subdivision surfaces, Metropolis sampling, BSSRDF, PRT.
• 3rd edition is coming next year!

pbrt won Oscar 2014
• To Matt Pharr, Greg Humphreys and Pat Hanrahan for their formalization and reference implementation of the concepts behind physically based rendering, as shared in their book Physically Based Rendering.

Physically based rendering has transformed computer graphics lighting by more accurately simulating materials and lights, allowing digital artists to focus on cinematography rather than the intricacies of rendering. First published in 2004, Physically Based Rendering is both a textbook and a complete source-code implementation that has provided a widely adopted practical roadmap for most physically based shading and lighting systems used in film production.

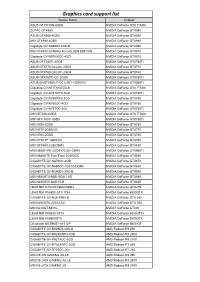

Graphics Card Support List

| Device Name | Chipset |
| --- | --- |
| ASUS GTXTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| ZOTAC GTX980 | NVIDIA GeForce GTX980 |
| ASUS GTX980-4GD5 | NVIDIA GeForce GTX980 |
| MSI GTX980-4GD5 | NVIDIA GeForce GTX980 |
| Gigabyte GV-N980D5-4GD-B | NVIDIA GeForce GTX980 |
| MSI GTX970 GAMING 4G GOLDEN EDITION | NVIDIA GeForce GTX970 |
| Gigabyte GV-N970IXOC-4GD | NVIDIA GeForce GTX970 |
| ASUS GTX780TI-3GD5 | NVIDIA GeForce GTX780Ti |
| ASUS GTX770-DC2OC-2GD5 | NVIDIA GeForce GTX770 |
| ASUS GTX760-DC2OC-2GD5 | NVIDIA GeForce GTX760 |
| ASUS GTX750TI-OC-2GD5 | NVIDIA GeForce GTX750Ti |
| ASUS ENGTX560-Ti-DCII/2D1-1GD5/1G | NVIDIA GeForce GTX560Ti |
| Gigabyte GV-NTITAN-6GD-B | NVIDIA GeForce GTX TITAN |
| Gigabyte GV-N78TWF3-3GD | NVIDIA GeForce GTX780Ti |
| Gigabyte GV-N780WF3-3GD | NVIDIA GeForce GTX780 |
| Gigabyte GV-N760OC-4GD | NVIDIA GeForce GTX760 |
| Gigabyte GV-N75TOC-2GI | NVIDIA GeForce GTX750Ti |
| MSI NTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| MSI GTX 780Ti 3GD5 | NVIDIA GeForce GTX780Ti |
| MSI N780-3GD5 | NVIDIA GeForce GTX780 |
| MSI N770-2GD5/OC | NVIDIA GeForce GTX770 |
| MSI N760-2GD5 | NVIDIA GeForce GTX760 |
| MSI N750 TF 1GD5/OC | NVIDIA GeForce GTX750 |
| MSI GTX680-2GB/DDR5 | NVIDIA GeForce GTX680 |
| MSI N660Ti-PE-2GD5-OC/2G-DDR5 | NVIDIA GeForce GTX660Ti |
| MSI N680GTX Twin Frozr 2GD5/OC | NVIDIA GeForce GTX680 |
| GIGABYTE GV-N670OC-2GD | NVIDIA GeForce GTX670 |
| GIGABYTE GV-N650OC-1GI/1G-DDR5 | NVIDIA GeForce GTX650 |
| GIGABYTE GV-N590D5-3GD-B | NVIDIA GeForce GTX590 |
| MSI N580GTX-M2D15D5/1.5G | NVIDIA GeForce GTX580 |
| MSI N465GTX-M2D1G-B | NVIDIA GeForce GTX465 |
| LEADTEK GTX275/896M-DDR3 | NVIDIA GeForce GTX275 |
| LEADTEK PX8800 GTX TDH | NVIDIA GeForce 8800GTX |
| GIGABYTE GV-N26-896H-B | |

NVIDIA Quadro RTX for V-Ray Next

NVIDIA QUADRO RTX V-RAY NEXT GPU

Image courtesy of © Dabarti Studio, rendered with V-Ray GPU

Quadro RTX Accelerates V-Ray Next GPU Rendering

V-Ray Next GPU taps into the power of NVIDIA® Quadro® RTX™ to speed up production rendering with dedicated RT Cores for ray tracing and Tensor Cores for AI-accelerated denoising.¹ With up to 18X faster rendering than CPU-based solutions and enhanced performance with NVIDIA NVLink™, V-Ray Next GPU with RTX support provides incredible performance improvements for your rendering workloads.

Solutions for V-Ray Next GPU

NVIDIA Quadro® provides a wide range of RTX-enabled solutions for desktop, mobile, server-based rendering, and virtual workstations with NVIDIA Quadro Virtual Data Center Workstation (Quadro vDWS) software.² With up to 96 gigabytes (GB) of GPU memory available,³ Quadro RTX provides the power you need for the largest professional graphics and rendering workloads.

"Accelerating artist productivity is always our top priority, so we're quick to take advantage of the latest ray-tracing hardware breakthroughs. By supporting NVIDIA RTX™ in V-Ray GPU, we're bringing our customers an exciting new boost in their GPU production rendering speeds."
– Phillip Miller, Vice President, Product Management, Chaos Group

[Figure: Benchmark: V-Ray Next GPU Rendering Performance Increase on Quadro RTX GPUs. Relative Performance: Quadro RTX 6000 x2, 18.85; Quadro RTX 6000, 10.4; Quadro RTX 4000, 7.83; CPU, 1.]

Desktop performance. Tests run on 1x Xeon Gold 6154 3 GHz (3.7 GHz Turbo), 64 GB DDR4 RAM, Win10 x64, Driver version 441.28. Performance results may vary depending on the scene.

NVIDIA Quadro professional graphics solutions are verified and recommended for the most demanding projects by Chaos Group.

NVIDIA Launches Tegra X1 Mobile Super Chip

Maxwell GPU Architecture Delivers First Teraflops Mobile Processor, Powering Deep Learning and Computer Vision Applications

NVIDIA today unveiled Tegra® X1, its next-generation mobile super chip with over one teraflops of processing power – delivering capabilities that open the door to unprecedented graphics and sophisticated deep learning and computer vision applications.

Tegra X1 is built on the same NVIDIA Maxwell™ GPU architecture rolled out only months ago for the world's top-performing gaming graphics card, the GeForce® GTX 980. The 256-core Tegra X1 provides twice the performance of its predecessor, the Tegra K1, which is based on the previous-generation Kepler™ architecture and debuted at last year's Consumer Electronics Show.

Tegra processors are built for embedded products, mobile devices, autonomous machines and automotive applications. Tegra X1 will begin appearing in the first half of the year. It will be featured in the newly announced NVIDIA DRIVE™ car computers. DRIVE PX is an auto-pilot computing platform that can process video from up to 12 onboard cameras to run capabilities providing Surround-Vision, for a seamless 360-degree view around the car, and Auto-Valet, for true self-parking. DRIVE CX is a complete cockpit platform designed to power the advanced graphics required across the increasing number of screens used for digital clusters, infotainment, head-up displays, virtual mirrors and rear-seat entertainment.

"We see a future of autonomous cars, robots and drones that see and learn, with seeming intelligence that is hard to imagine," said Jen-Hsun Huang, CEO and co-founder, NVIDIA.

GPU-Based Deep Learning Inference

Whitepaper
GPU-Based Deep Learning Inference: A Performance and Power Analysis
November 2015

Contents
Abstract
Introduction
Inference versus Training
GPUs Excel at Neural Network Inference
Inference Optimizations in Caffe and cuDNN 4
Experimental Setup and Testing Methodology
Inference on Small and Large GPUs
Conclusion
References

Abstract
Deep learning methods are revolutionizing various areas of machine perception. On a

NVIDIA CUDA Getting Started Guide for Linux

NVIDIA CUDA GETTING STARTED GUIDE FOR LINUX
DU-05347-001_v7.0 | March 2015
Installation and Verification on Linux Systems

TABLE OF CONTENTS
Chapter 1. Introduction
1.1. System Requirements
1.1.1. x86 32-bit Support
1.2. About This Document
Chapter 2. Pre-installation Actions
2.1. Verify You Have a CUDA-Capable GPU
2.2. Verify You Have a Supported Version of Linux
2.3. Verify the System Has gcc Installed
2.4. Choose an Installation Method
2.5. Download the NVIDIA CUDA Toolkit
2.6. Handle Conflicting Installation Methods
Chapter 3. Package Manager Installation
3.1. Overview

CUDA C Programming Guide

CUDA C PROGRAMMING GUIDE
PG-02829-001_v10.0 | October 2018
Design Guide

CHANGES FROM VERSION 9.0
‣ Documented restriction that operator-overloads cannot be __global__ functions in Operator Function.
‣ Removed guidance to break 8-byte shuffles into two 4-byte instructions. 8-byte shuffle variants are provided since CUDA 9.0. See Warp Shuffle Functions.
‣ Passing __restrict__ references to __global__ functions is now supported. Updated comment in __global__ functions and function templates.
‣ Documented CUDA_ENABLE_CRC_CHECK in CUDA Environment Variables.
‣ Warp matrix functions now support matrix products with m=32, n=8, k=16 and m=8, n=32, k=16 in addition to m=n=k=16.

TABLE OF CONTENTS
Chapter 1. Introduction
1.1. From Graphics Processing to General Purpose Parallel Computing
1.2. CUDA®: A General-Purpose Parallel Computing Platform and Programming Model
1.3. A Scalable Programming Model
1.4. Document Structure
Chapter 2. Programming Model
2.1. Kernels
2.2. Thread Hierarchy

An Advanced Path Tracing Architecture for Movie Rendering

RenderMan: An Advanced Path Tracing Architecture for Movie Rendering

PER CHRISTENSEN, JULIAN FONG, JONATHAN SHADE, WAYNE WOOTEN, BRENDEN SCHUBERT, ANDREW KENSLER, STEPHEN FRIEDMAN, CHARLIE KILPATRICK, CLIFF RAMSHAW, MARC BANNISTER, BRENTON RAYNER, JONATHAN BROUILLAT, and MAX LIANI, Pixar Animation Studios

Fig. 1. Path-traced images rendered with RenderMan: Dory and Hank from Finding Dory (© 2016 Disney•Pixar). McQueen's crash in Cars 3 (© 2017 Disney•Pixar). Shere Khan from Disney's The Jungle Book (© 2016 Disney). A destroyer and the Death Star from Lucasfilm's Rogue One: A Star Wars Story (© & ™ 2016 Lucasfilm Ltd. All rights reserved. Used under authorization.)

Pixar's RenderMan renderer is used to render all of Pixar's films, and by many film studios to render visual effects for live-action movies. RenderMan started as a scanline renderer based on the Reyes algorithm, and was extended over the years with ray tracing and several global illumination algorithms.

This paper describes the modern version of RenderMan, a new architecture for an extensible and programmable path tracer with many features that are essential to handle the fiercely complex scenes in movie production. Users can write their own materials using a bxdf interface, and their own light transport algorithms using an integrator interface – or they can use the materials and light transport algorithms provided with RenderMan.

1 INTRODUCTION

Pixar's movies and short films are all rendered with RenderMan. The first computer-generated (CG) animated feature film, Toy Story, was rendered with an early version of RenderMan in 1995. The most recent Pixar movies – Finding Dory, Cars 3, and Coco – were rendered using RenderMan's modern path tracing architecture. The two left images in Figure 1 show high-quality rendering of two challenging CG movie scenes with many bounces of specular reflections and

NVIDIA Ampere GA102 GPU Architecture Whitepaper

NVIDIA AMPERE GA102 GPU ARCHITECTURE
Second-Generation RTX
Updated with NVIDIA RTX A6000 and NVIDIA A40 Information
V2.0

Table of Contents
Introduction
GA102 Key Features
2x FP32 Processing
Second-Generation RT Core
Third-Generation Tensor Cores
GDDR6X and GDDR6 Memory
Third-Generation NVLink®
PCIe Gen 4
Ampere GPU Architecture In-Depth
GPC, TPC, and SM High-Level Architecture
ROP Optimizations
GA10x SM Architecture
2x FP32 Throughput
Larger and Faster Unified Shared Memory and L1 Data Cache
Performance Per Watt
Second-Generation Ray Tracing Engine in GA10x GPUs
Ampere Architecture RTX Processors in Action
GA10x GPU Hardware Acceleration for Ray-Traced Motion Blur
Third-Generation Tensor Cores in GA10x GPUs
Comparison of Turing vs GA10x GPU Tensor Cores
NVIDIA Ampere Architecture Tensor Cores Support New DL Data Types
Fine-Grained Structured Sparsity
NVIDIA DLSS 8K
GDDR6X Memory
RTX IO
Introducing NVIDIA RTX IO
How NVIDIA RTX IO Works
Display and Video Engine
DisplayPort 1.4a with DSC 1.2a
HDMI 2.1 with DSC 1.2a
Fifth Generation NVDEC - Hardware-Accelerated Video Decoding
AV1 Hardware Decode
Seventh Generation NVENC - Hardware-Accelerated Video Encoding
Conclusion
Appendix A - Additional GeForce GA10x GPU Specifications
GeForce RTX 3090
GeForce RTX 3070
Appendix B - New Memory Error Detection and Replay (EDR) Technology
Appendix C - RTX A6000 GPU Performance

List of Figures
Figure 1.

GeForce® GTX 680 2GB VCGGTX680XPB

FILENAME: PNY-GeForce-GTX-680-2GB MODIFIED: April 25, 2013 12:02 PM

GEFORCE® GTX 680 2GB VCGGTX680XPB

Game-Changing Innovation

The GeForce® GTX 680 delivers more than just state-of-the-art features and technology. It gives you truly game-changing performance that taps into the power of the next-generation GeForce® Kepler architecture to redefine smooth, seamless, lifelike gaming. NVIDIA GPU Boost technology dynamically maximizes clock speeds to push performance to new levels and bring out the best in every game. Ultra-smooth gaming comes with exciting new NVIDIA Adaptive V-Sync technology.

PRODUCT SPECIFICATIONS

| Specification | Value |
| --- | --- |
| GPU | GeForce® GTX 680 |
| CUDA Cores | 1536 |
| Core Speed | 1006 MHz |
| Boost Speed | 1058 MHz |
| Memory Size | 2048 MB GDDR5 |
| Memory Interface | 256 bit |
| Memory Frequency | 6.0 Gbps |
| TDP | 195W-Active |
| SLI | 3-Way SLI |
| Multi-Screen | 4 Concurrent Screens***, NVIDIA 3D Surround* |

PACKAGE INCLUDES
• GeForce® GTX 680 2GB graphics card
• Quick Installation Guide
• Installation DVD, which includes:
  - Detailed installation guide
  - NVIDIA® Graphics Drivers
  - NVIDIA® GeForce® Demos
  - PhysX™ System Software
• DVI-to-VGA adapter
• 6-pin PCIe to Molex Power Adapter

REQUIREMENTS
• PCI Express compliant motherboard with one dual-width x16 graphics slot
• Two 6-pin PCI Express supplementary power connectors
• Minimum 550W or greater system power supply (with a minimum 12V current rating of 38A)
• 300 MB of available hard-disk space
• 2GB system memory (2GB or higher recommended)
• Microsoft Windows 7, Windows Vista, or Windows XP Operating System (32 or 64-bit)