A Brief History of Just-In-Time Compilation

Recommended publications

-

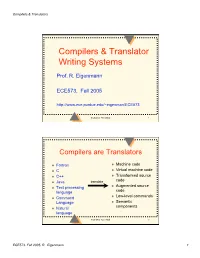

Compilers & Translator Writing Systems

Compilers & Translator Writing Systems. Prof. R. Eigenmann, ECE573, Fall 2005, http://www.ece.purdue.edu/~eigenman/ECE573. Compilers are Translators: from Fortran, C, C++, Java, text processing languages, command languages, or natural language into machine code, virtual machine code, transformed source code, augmented source code, low-level commands, or semantic components. Compilers are Increasingly Important: user interfaces for specifying a computer problem/solution are increasingly high level (assembly languages → high-level languages → specification languages), while machines are increasingly complex (non-pipelined processors, pipelined processors, speculative processors, worldwide “Grid”). The compiler is the translator between these two diverging ends. Assembly code and Assemblers: Compiler → assembly code → Assembler → machine code. Assemblers are often used at the compiler back-end. Assemblers are low-level translators. They are machine-specific, and perform mostly 1:1 translation between mnemonics and machine code, except for symbolic names for storage locations (program locations such as branches and subroutine calls; variable names) and macros. Interpreters “execute” the source language directly. Interpreters directly produce the result of a computation, whereas compilers produce executable code that can produce this result. Each language construct executes by invoking a subroutine of the interpreter, rather than a machine instruction. Examples of interpreters? Properties of Interpreters: “execution” is immediate; elaborate error checking is possible; bookkeeping is possible, e.g. for garbage collection; the program can be changed on the fly, e.g., switching libraries or dynamically changing data types; machine independence. -

Source-To-Source Translation and Software Engineering

Journal of Software Engineering and Applications, 2013, 6, 30-40 http://dx.doi.org/10.4236/jsea.2013.64A005 Published Online April 2013 (http://www.scirp.org/journal/jsea) Source-to-Source Translation and Software Engineering David A. Plaisted Department of Computer Science, University of North Carolina at Chapel Hill, Chapel Hill, USA. Email: [email protected] Received February 5th, 2013; revised March 7th, 2013; accepted March 15th, 2013 Copyright © 2013 David A. Plaisted. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. ABSTRACT Source-to-source translation of programs from one high level language to another has been shown to be an effective aid to programming in many cases. By the use of this approach, it is sometimes possible to produce software more cheaply and reliably. However, the full potential of this technique has not yet been realized. It is proposed to make source-to-source translation more effective by the use of abstract languages, which are imperative languages with a simple syntax and semantics that facilitate their translation into many different languages. By the use of such abstract languages and by translating only often-used fragments of programs rather than whole programs, the need to avoid writing the same program or algorithm over and over again in different languages can be reduced. It is further proposed that programmers be encouraged to write often-used algorithms and program fragments in such abstract languages. Libraries of such abstract programs and program fragments can then be constructed, and programmers can be encouraged to make use of such libraries by translating their abstract programs into application languages and adding code to join things together when coding in various application languages. -

US 2002/0066086 A1 Linden (43) Pub

(19) United States (12) Patent Application Publication (10) Pub. No.: US 2002/0066086 A1, Linden (43) Pub. Date: May 30, 2002. (54) DYNAMIC RECOMPILER. (76) Inventor: Randal N. Linden, Los Angeles, CA (US). Correspondence Address: LU & LIU LLP, 811 WEST SEVENTH STREET, SUITE 1100, LOS ANGELES, CA 90017 (US). (21) Appl. No.: 09/755,542. (22) Filed: Jan. 5, 2001. Related U.S. Application Data: (63) Non-provisional of provisional application No. 60/175,008, filed on Jan. 7, 2000. Publication Classification: (51) Int. Cl. G06F 9/45; (52) U.S. Cl. 717/145; 717/151. (57) ABSTRACT: A method for dynamic recompilation of source software instructions for execution by a target processor, which considers not only the specific source instructions, but also the intent and purpose of the instructions, to translate and optimize a set of equivalent code for the target processor. The dynamic recompiler determines what the source operation code is trying to accomplish and the optimum way of doing it at the target processor, in an “interpolative” and context sensitive fashion. The source instructions are processed in blocks of varying sizes by the dynamic recompiler, which considers the instructions that come before and after a current instruction to determine the most efficient approach out of several available approaches for encoding the operation code for the target processor to perform the equivalent tasks specified by the source instructions. The dynamic compiler comprises a decoding stage, an optimization stage and an encoding stage.
[Drawing sheets 1 to 3 of 3, Patent Application Publication, May 30, 2002.] DYNAMIC RECOMPILER [...] more target instructions to be executed in response to a given source instruction. -

Tdb: a Source-Level Debugger for Dynamically Translated Programs

Tdb: A Source-level Debugger for Dynamically Translated Programs. Naveen Kumar†, Bruce R. Childers†, and Mary Lou Soffa‡. †Department of Computer Science, University of Pittsburgh, Pittsburgh, Pennsylvania 15260, {naveen, childers}@cs.pitt.edu. ‡Department of Computer Science, University of Virginia, Charlottesville, Virginia 22904, [email protected]. Abstract: Debugging techniques have evolved over the years in response to changes in programming languages, implementation techniques, and user needs. A new type of implementation vehicle for software has emerged that, once again, requires new debugging techniques. Software dynamic translation (SDT) has received much attention due to compelling applications of the technology, including software security checking, binary translation, and dynamic optimization. Using SDT, program code changes dynamically, and thus, debugging techniques developed for statically generated code cannot be used to debug these applications. In this paper, we describe a new debug architecture for applications executing with SDT sys- [...] single stepping through program execution, watching for particular conditions and requests to add and remove breakpoints. In order to respond, the debugger has to map the values and statements that the user expects using the source program viewpoint, to the actual values and locations of the statements as found in the executable program. As programming languages have evolved, new debugging techniques have been developed. For example, checkpointing and time stamping techniques have been developed for languages with concurrent constructs [6,19,30]. The pervasive use of code optimizations to improve performance has necessitated techniques that can respond to queries even though the optimization may have changed the number of statement instances and the order of execution [15,25,29]. -

An Execution Model for Serverless Functions at the Edge

An Execution Model for Serverless Functions at the Edge. Adam Hall (Georgia Institute of Technology, Atlanta, Georgia, ach@gatech.edu) and Umakishore Ramachandran (Georgia Institute of Technology, Atlanta, Georgia, rama@gatech.edu). ABSTRACT: Serverless computing platforms allow developers to host single-purpose applications that automatically scale with demand. In contrast to traditional long-running applications on dedicated, virtualized, or container-based platforms, serverless applications are intended to be instantiated when called, execute a single function, and shut down when finished. State-of-the-art serverless platforms achieve these goals by creating a new container instance to host a function when it is called and destroying the container when it completes. This design allows for cost and resource savings when hosting simple applications, such as those supporting IoT devices at the edge of the network. However, the use of containers introduces some overhead which may be unsuitable for applications [...] 1 INTRODUCTION: Enabling next generation technologies such as self-driving cars or smart cities via edge computing requires us to reconsider the way we characterize and deploy the services supporting those technologies. Edge/fog environments consist of many micro data centers spread throughout the edge of the network. This is in stark contrast to the cloud, where we assume the notion of unlimited resources available in a few centralized data centers. These micro data center environments must support large numbers of Internet of Things (IoT) devices on limited hardware resources, processing the massive amounts of data those devices generate while providing quick decisions to inform their actions [44]. One solution to supporting emerging technologies at the edge lies in serverless computing. -

A Dynamically Recompiling ARM Emulator POWERED

POWERED TACARM. A Dynamically Recompiling ARM Emulator. 3rd year project report, 2000-2001, University of Warwick. Written by David Sharp. Supervised by Graham Martin. Abstract: Dynamically recompiling from one machine code to another can be used to emulate modern microprocessors at realistic speeds. This report is a discussion of the techniques used in implementing a dynamically recompiling emulator of an ARM processor for use in an emulation of a complete computer system. Keywords: Emulation, Dynamic Recompilation, Binary Translation, Just-In-Time compilation, Virtual Machine, Microprocessor Simulation, Intermediate Code, ARM. Contents: 1. Introduction: 1.1. What is emulation?; 1.2. Applications of emulation; 1.3. Processor emulation techniques; 1.4. This Project. 2. Analysis: 2.1. Introduction to the ARM; 2.2. Identifying the problem; 2.3. Getting started; 2.4. The System Design. 3. Disassembler: 3.1. Purpose; 3.2. ARM decoding; 3.3. Design. 4. Interpreter: 4.1. Purpose; 4.2. The problem with JIT; 4.3. The HotSpot™ alternative; 4.4. Quantifying the JIT problem; 4.5. Faster decoding; 4.6. Interfaces; 4.7. The emulation loop; 4.8. Implementation; 4.9. Debugging; 4.10. Compatibility. 5. Recompilation: 5.1. Overview; 5.2. Methods of generating native code; 5.3. The use of intermediate code. 6. Armlets – An Intermediate Code: 6.1. Purpose; 6.2. The ‘explicit-implicit problem’; 6.3. Options; 6.4. -

Virtual Machine Part II: Program Control

Virtual Machine Part II: Program Control. Building a Modern Computer From First Principles, www.nand2tetris.org. Elements of Computing Systems, Nisan & Schocken, MIT Press, www.nand2tetris.org, Chapter 8: Virtual Machine, Part II. Where we are at [figure: a chain of layers, each exposing an abstract interface to the one above. Software hierarchy: Human Thought → Abstract design (Chapters 9, 12) → H.L. Language & Operating Sys. → Compiler (Chapters 10 - 11) → Virtual Machine → VM Translator (Chapters 7 - 8) → Assembly Language → Assembler (Chapter 6) → Machine Language. Hardware hierarchy: Computer Architecture (Chapters 4 - 5) → Hardware Platform → Gate Logic (Chapters 1 - 3) → Chips & Logic Gates → Electrical Engineering → Physics]. The big picture [figure: Some language, Some Other language, and the Jack language are each translated by their own compiler (the Jack compiler in Chapters 9-13) into the VM language. The VM language is implemented (Chapters 7-8, Projects 7-8) by VM implementations over CISC platforms, over RISC platforms, and over the Hack platform, and by a VM emulator written in a high-level language (a Java-based emulator is included in the course software suite). These target CISC machine language, RISC machine language, and Hack machine language (Chapters 1-6), running on a CISC machine, a RISC machine, other digital platforms each equipped with its VM implementation, any computer, and the Hack computer]. -

Toward IFVM Virtual Machine: a Model Driven IFML Interpretation

Toward IFVM Virtual Machine: A Model Driven IFML Interpretation. Sara Gotti and Samir Mbarki, MISC Laboratory, Faculty of Sciences, Ibn Tofail University, BP 133, Kenitra, Morocco. Keywords: Interaction Flow Modelling Language IFML, Model Execution, Unified Modeling Language (UML), IFML Execution, Model Driven Architecture MDA, Bytecode, Virtual Machine, Model Interpretation, Model Compilation, Platform Independent Model PIM, User Interfaces, Front End. Abstract: UML is the first international modeling language standardized since 1997. It aims at providing a standard way to visualize the design of a system, but it can't model the complex design of user interfaces and interactions. However, according to MDA approach, it is necessary to apply the concept of abstract models to user interfaces too. IFML is the OMG adopted (in March 2013) standard Interaction Flow Modeling Language designed for abstractly expressing the content, user interaction and control behaviour of the software applications front-end. IFML is a platform independent language, it has been designed with an executable semantic and it can be mapped easily into executable applications for various platforms and devices. In this article we present an approach to execute the IFML. We introduce a IFVM virtual machine which translate the IFML models into bytecode that will be interpreted by the java virtual machine. 1 INTRODUCTION: The software development has been affected by the apparition of the MDA (OMG, 2015) approach. The trend of the 21st century (BRAMBILLA et al., 2014) which has allowed developers to build their [...] a fundamental standard fUML (OMG, 2011), which is a subset of UML that contains the most relevant part of class diagrams for modeling the data structure and activity diagrams to specify system behavior; it contains all UML elements that are helpful for the execution of the models. -

A Parallel Program Execution Model Supporting Modular Software Construction

A Parallel Program Execution Model Supporting Modular Software Construction. Jack B. Dennis, Laboratory for Computer Science, Massachusetts Institute of Technology, Cambridge, MA 02139 U.S.A. [email protected] Abstract: A watershed is near in the architecture of computer systems. There is overwhelming demand for systems that support a universal format for computer programs and software components so users may benefit from their use on a wide variety of computing platforms. At present this demand is being met by commodity microprocessors together with standard operating system interfaces. However, current systems do not offer a standard API (application program interface) for parallel programming, and the popular interfaces for parallel computing violate essential principles of modular or component-based software construction. Moreover, microprocessor architecture is reaching the limit of what can be done usefully within the framework of superscalar and VLIW processor models. The next step is to put several processors (or the equivalent) on a single chip. [...] as a guide for computer system design, follows from basic requirements for supporting modular software construction. The fundamental theme of this paper is: The architecture of computer systems should reflect the requirements of the structure of programs. The programming interface provided should address software engineering issues, in particular, the ability to practice the modular construction of software. The positions taken in this presentation are contrary to much conventional wisdom, so I have included a question/answer dialog at appropriate places to highlight points of debate. We start with a discussion of the nature and purpose of a program execution model. Our Parallelism -

CNT6008 Network Programming Module - 11 Objectives

CNT6008 Network Programming, Module - 11 Objectives. Skills/Concepts/Assignments and their objectives: ASP.NET Overview (learn the framework; understand the different platforms; compare to the Java platform). Final Project (define your final project requirements). Section 21 – Web App Development and ASP.NET, and Section 27 – Web App Development with ASP.NET (read Sections 21 and 27, pages 649 to 694 and 854 to 878). Overview of ASP.NET, Section Goals (goal and course presentation): Understanding Windows Foundation Project Concepts; Understanding .NET Framework; Creating an ASP.NET Client and Server Application; Understanding the Visual Studio Development Environment; Creating an ASP Project. .NET – What Is It? A software platform; language neutral. In other words, .NET is not a language (it is a runtime and a library for writing and executing programs written in any compliant language). What Is .NET: .NET is a new framework for developing web-based and Windows-based applications within the Microsoft environment. The framework offers a fundamental shift in Microsoft strategy: it moves application development from client-centric to server-centric. .NET – What Is It? [figure: a .NET Application runs on the .NET Framework, which runs on the Operating System + Hardware]. Framework, Languages, And Tools [figure: languages VB, VC++, VC#, JScript, …; Common Language Specification; ASP.NET: Web Services and Web Forms; Windows Forms; ADO.NET: Data and XML; Base Class Library; Common Language Runtime; Visual Studio.NET alongside all layers]. The .NET Framework, .NET Framework Services: Common Language Runtime; Windows Communication Foundation (WCF); Windows® Forms; ASP.NET (Active Server Pages); Web Forms; Web Services; ADO.NET, evolution of ADO; Visual Studio.NET. Common Language Runtime (CLR): CLR works like a virtual machine in executing all languages. All .NET languages must obey the rules and standards imposed by CLR. -

Language Translators

Student Notes, Theory. LANGUAGE TRANSLATORS. A. HIGH AND LOW LEVEL LANGUAGES. Programming languages: Low-Level Languages (example: Assembly Language) and High-Level Languages (examples: Pascal, Basic, Java). Characteristics of LOW Level Languages: They are machine oriented: an assembly language program written for one machine will not work on any other type of machine unless they happen to use the same processor chip. Each assembly language statement generally translates into one machine code instruction, therefore the program becomes long and time-consuming to create. Example: 10100101 01110001 = LDA &71; 01101001 00000001 = ADD #&01; 10000101 01110001 = STA &71. Characteristics of HIGH Level Languages: They are not machine oriented: in theory they are portable, meaning that a program written for one machine will run on any other machine for which the appropriate compiler or interpreter is available. They are problem oriented: most high level languages have structures and facilities appropriate to a particular use or type of problem. For example, FORTRAN was developed for use in solving mathematical problems. Some languages, such as PASCAL, were developed as general-purpose languages. Statements in high-level languages usually resemble English sentences or mathematical expressions and these languages tend to be easier to learn and understand than assembly language. Each statement in a high level language will be translated into several machine code instructions. Example: number := number + 1; translates into 10100101 01110001, 01101001 00000001, 10000101 01110001. B. GENERATIONS OF PROGRAMMING LANGUAGES. 4th generation: 4GLs; 3rd generation: High Level Languages; 2nd generation: Low-level Languages; 1st generation: Machine Code. K Aquilina. 1. MACHINE LANGUAGE – 1ST GENERATION. In the early days of computer programming all programs had to be written in machine code. -

Program Dynamic Analysis Overview

4/14/16 Program Dynamic Analysis. Overview: Dynamic Analysis; JVM & Java Bytecode [2]; A Java bytecode engineering library: ASM [1]. What is dynamic analysis? [3] The investigation of the properties of a running software system over one or more executions. Has anyone done dynamic analysis? [3] Loggers, debuggers, profilers, … Why dynamic analysis? [3] Gap between run-time structure and code structure in OO programs: Trying to understand one [structure] from the other is like trying to understand the dynamism of living ecosystems from the static taxonomy of plants and animals, and vice-versa. -- Erich Gamma et al., Design Patterns. Why dynamic analysis? Collect runtime execution information (resource usage, execution profiles); program comprehension (find bugs in applications, identify hotspots); program transformation (optimize or obfuscate programs, insert debugging or monitoring code, modify program behaviors on the fly). How to do dynamic analysis? Instrumentation: modify code or runtime to monitor specific components in a system and collect data. Instrumentation approaches: source code modification, byte code modification, VM modification. Data analysis. A Running Example: method call instrumentation. Given a program’s source code, how do you modify the code to record which method is called by main() in what order? public class Test { public static void main(String[] args) { if (args.length == 0) return; if (args.length % 2 == 0) printEven(); else printOdd(); } public