Parallel Programming with Openmp

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Heterogeneous Task Scheduling for Accelerated Openmp

Heterogeneous Task Scheduling for Accelerated OpenMP ? ? Thomas R. W. Scogland Barry Rountree† Wu-chun Feng Bronis R. de Supinski† ? Department of Computer Science, Virginia Tech, Blacksburg, VA 24060 USA † Center for Applied Scientific Computing, Lawrence Livermore National Laboratory, Livermore, CA 94551 USA [email protected] [email protected] [email protected] [email protected] Abstract—Heterogeneous systems with CPUs and computa- currently requires a programmer either to program in at tional accelerators such as GPUs, FPGAs or the upcoming least two different parallel programming models, or to use Intel MIC are becoming mainstream. In these systems, peak one of the two that support both GPUs and CPUs. Multiple performance includes the performance of not just the CPUs but also all available accelerators. In spite of this fact, the models however require code replication, and maintaining majority of programming models for heterogeneous computing two completely distinct implementations of a computational focus on only one of these. With the development of Accelerated kernel is a difficult and error-prone proposition. That leaves OpenMP for GPUs, both from PGI and Cray, we have a clear us with using either OpenCL or accelerated OpenMP to path to extend traditional OpenMP applications incrementally complete the task. to use GPUs. The extensions are geared toward switching from CPU parallelism to GPU parallelism. However they OpenCL’s greatest strength lies in its broad hardware do not preserve the former while adding the latter. Thus support. In a way, though, that is also its greatest weak- computational potential is wasted since either the CPU cores ness. To enable one to program this disparate hardware or the GPU cores are left idle. -

Distributed Algorithms with Theoretic Scalability Analysis of Radial and Looped Load flows for Power Distribution Systems

Electric Power Systems Research 65 (2003) 169Á/177 www.elsevier.com/locate/epsr Distributed algorithms with theoretic scalability analysis of radial and looped load flows for power distribution systems Fangxing Li *, Robert P. Broadwater ECE Department Virginia Tech, Blacksburg, VA 24060, USA Received 15 April 2002; received in revised form 14 December 2002; accepted 16 December 2002 Abstract This paper presents distributed algorithms for both radial and looped load flows for unbalanced, multi-phase power distribution systems. The distributed algorithms are developed from tree-based sequential algorithms. Formulas of scalability for the distributed algorithms are presented. It is shown that computation time dominates communication time in the distributed computing model. This provides benefits to real-time load flow calculations, network reconfigurations, and optimization studies that rely on load flow calculations. Also, test results match the predictions of derived formulas. This shows the formulas can be used to predict the computation time when additional processors are involved. # 2003 Elsevier Science B.V. All rights reserved. Keywords: Distributed computing; Scalability analysis; Radial load flow; Looped load flow; Power distribution systems 1. Introduction Also, the method presented in Ref. [10] was tested in radial distribution systems with no more than 528 buses. Parallel and distributed computing has been applied More recent works [11Á/14] presented distributed to many scientific and engineering computations such as implementations for power flows or power flow based weather forecasting and nuclear simulations [1,2]. It also algorithms like optimizations and contingency analysis. has been applied to power system analysis calculations These works also targeted power transmission systems. [3Á/14]. -

Scalability and Performance Management of Internet Applications in the Cloud

Hasso-Plattner-Institut University of Potsdam Internet Technology and Systems Group Scalability and Performance Management of Internet Applications in the Cloud A thesis submitted for the degree of "Doktors der Ingenieurwissenschaften" (Dr.-Ing.) in IT Systems Engineering Faculty of Mathematics and Natural Sciences University of Potsdam By: Wesam Dawoud Supervisor: Prof. Dr. Christoph Meinel Potsdam, Germany, March 2013 This work is licensed under a Creative Commons License: Attribution – Noncommercial – No Derivative Works 3.0 Germany To view a copy of this license visit http://creativecommons.org/licenses/by-nc-nd/3.0/de/ Published online at the Institutional Repository of the University of Potsdam: URL http://opus.kobv.de/ubp/volltexte/2013/6818/ URN urn:nbn:de:kobv:517-opus-68187 http://nbn-resolving.de/urn:nbn:de:kobv:517-opus-68187 To my lovely parents To my lovely wife Safaa To my lovely kids Shatha and Yazan Acknowledgements At Hasso Plattner Institute (HPI), I had the opportunity to meet many wonderful people. It is my pleasure to thank those who sup- ported me to make this thesis possible. First and foremost, I would like to thank my Ph.D. supervisor, Prof. Dr. Christoph Meinel, for his continues support. In spite of his tight schedule, he always found the time to discuss, guide, and motivate my research ideas. The thanks are extended to Dr. Karin-Irene Eiermann for assisting me even before moving to Germany. I am also grateful for Michaela Schmitz. She managed everything well to make everyones life easier. I owe a thanks to Dr. Nemeth Sharon for helping me to improve my English writing skills. -

Introduction to Multi-Threading and Vectorization Matti Kortelainen Larsoft Workshop 2019 25 June 2019 Outline

Introduction to multi-threading and vectorization Matti Kortelainen LArSoft Workshop 2019 25 June 2019 Outline Broad introductory overview: • Why multithread? • What is a thread? • Some threading models – std::thread – OpenMP (fork-join) – Intel Threading Building Blocks (TBB) (tasks) • Race condition, critical region, mutual exclusion, deadlock • Vectorization (SIMD) 2 6/25/19 Matti Kortelainen | Introduction to multi-threading and vectorization Motivations for multithreading Image courtesy of K. Rupp 3 6/25/19 Matti Kortelainen | Introduction to multi-threading and vectorization Motivations for multithreading • One process on a node: speedups from parallelizing parts of the programs – Any problem can get speedup if the threads can cooperate on • same core (sharing L1 cache) • L2 cache (may be shared among small number of cores) • Fully loaded node: save memory and other resources – Threads can share objects -> N threads can use significantly less memory than N processes • If smallest chunk of data is so big that only one fits in memory at a time, is there any other option? 4 6/25/19 Matti Kortelainen | Introduction to multi-threading and vectorization What is a (software) thread? (in POSIX/Linux) • “Smallest sequence of programmed instructions that can be managed independently by a scheduler” [Wikipedia] • A thread has its own – Program counter – Registers – Stack – Thread-local memory (better to avoid in general) • Threads of a process share everything else, e.g. – Program code, constants – Heap memory – Network connections – File handles -

Scalability and Optimization Strategies for GPU Enhanced Neural Networks (Genn)

Scalability and Optimization Strategies for GPU Enhanced Neural Networks (GeNN) Naresh Balaji1, Esin Yavuz2, Thomas Nowotny2 {T.Nowotny, E.Yavuz}@sussex.ac.uk, [email protected] 1National Institute of Technology, Tiruchirappalli, India 2School of Engineering and Informatics, University of Sussex, Brighton, UK Abstract: Simulation of spiking neural networks has been traditionally done on high-performance supercomputers or large-scale clusters. Utilizing the parallel nature of neural network computation algorithms, GeNN (GPU Enhanced Neural Network) provides a simulation environment that performs on General Purpose NVIDIA GPUs with a code generation based approach. GeNN allows the users to design and simulate neural networks by specifying the populations of neurons at different stages, their synapse connection densities and the model of individual neurons. In this report we describe work on how to scale synaptic weights based on the configuration of the user-defined network to ensure sufficient spiking and subsequent effective learning. We also discuss optimization strategies particular to GPU computing: sparse representation of synapse connections and occupancy based block-size determination. Keywords: Spiking Neural Network, GPGPU, CUDA, code-generation, occupancy, optimization 1. Introduction The computational performance of traditional single-core CPUs has steadily increased since its arrival, primarily due to the combination of increased clock frequency, process technology advancements and compiler optimizations. The clock frequency was pushed higher and higher, from 5 MHz to 3 GHz in the years from 1983 to 2002, until it reached a stall a few years ago [1] when the power consumption and dissipation of the transistors reached peaks that could not compensated by its cost [2]. -

Parallel Programming

Parallel Programming Libraries and implementations Outline • MPI – distributed memory de-facto standard • Using MPI • OpenMP – shared memory de-facto standard • Using OpenMP • CUDA – GPGPU de-facto standard • Using CUDA • Others • Hybrid programming • Xeon Phi Programming • SHMEM • PGAS MPI Library Distributed, message-passing programming Message-passing concepts Explicit Parallelism • In message-passing all the parallelism is explicit • The program includes specific instructions for each communication • What to send or receive • When to send or receive • Synchronisation • It is up to the developer to design the parallel decomposition and implement it • How will you divide up the problem? • When will you need to communicate between processes? Message Passing Interface (MPI) • MPI is a portable library used for writing parallel programs using the message passing model • You can expect MPI to be available on any HPC platform you use • Based on a number of processes running independently in parallel • HPC resource provides a command to launch multiple processes simultaneously (e.g. mpiexec, aprun) • There are a number of different implementations but all should support the MPI 2 standard • As with different compilers, there will be variations between implementations but all the features specified in the standard should work. • Examples: MPICH2, OpenMPI Point-to-point communications • A message sent by one process and received by another • Both processes are actively involved in the communication – not necessarily at the same time • Wide variety of semantics provided: • Blocking vs. non-blocking • Ready vs. synchronous vs. buffered • Tags, communicators, wild-cards • Built-in and custom data-types • Can be used to implement any communication pattern • Collective operations, if applicable, can be more efficient Collective communications • A communication that involves all processes • “all” within a communicator, i.e. -

Openmp API 5.1 Specification

OpenMP Application Programming Interface Version 5.1 November 2020 Copyright c 1997-2020 OpenMP Architecture Review Board. Permission to copy without fee all or part of this material is granted, provided the OpenMP Architecture Review Board copyright notice and the title of this document appear. Notice is given that copying is by permission of the OpenMP Architecture Review Board. This page intentionally left blank in published version. Contents 1 Overview of the OpenMP API1 1.1 Scope . .1 1.2 Glossary . .2 1.2.1 Threading Concepts . .2 1.2.2 OpenMP Language Terminology . .2 1.2.3 Loop Terminology . .9 1.2.4 Synchronization Terminology . 10 1.2.5 Tasking Terminology . 12 1.2.6 Data Terminology . 14 1.2.7 Implementation Terminology . 18 1.2.8 Tool Terminology . 19 1.3 Execution Model . 22 1.4 Memory Model . 25 1.4.1 Structure of the OpenMP Memory Model . 25 1.4.2 Device Data Environments . 26 1.4.3 Memory Management . 27 1.4.4 The Flush Operation . 27 1.4.5 Flush Synchronization and Happens Before .................. 29 1.4.6 OpenMP Memory Consistency . 30 1.5 Tool Interfaces . 31 1.5.1 OMPT . 32 1.5.2 OMPD . 32 1.6 OpenMP Compliance . 33 1.7 Normative References . 33 1.8 Organization of this Document . 35 i 2 Directives 37 2.1 Directive Format . 38 2.1.1 Fixed Source Form Directives . 43 2.1.2 Free Source Form Directives . 44 2.1.3 Stand-Alone Directives . 45 2.1.4 Array Shaping . 45 2.1.5 Array Sections . -

Openmp Made Easy with INTEL® ADVISOR

OpenMP made easy with INTEL® ADVISOR Zakhar Matveev, PhD, Intel CVCG, November 2018, SC’18 OpenMP booth Why do we care? Ai Bi Ai Bi Ai Bi Ai Bi Vector + Processing Ci Ci Ci Ci VL Motivation (instead of Agenda) • Starting from 4.x, OpenMP introduces support for both levels of parallelism: • Multi-Core (think of “pragma/directive omp parallel for”) • SIMD (think of “pragma/directive omp simd”) • 2 pillars of OpenMP SIMD programming model • Hardware with Intel ® AVX-512 support gives you theoretically 8x speed- up over SSE baseline (less or even more in practice) • Intel® Advisor is here to assist you in: • Enabling SIMD parallelism with OpenMP (if not yet) • Improving performance of already vectorized OpenMP SIMD code • And will also help to optimize for Memory Sub-system (Advisor Roofline) 3 Don’t use a single Vector lane! Un-vectorized and un-threaded software will under perform 4 Permission to Design for All Lanes Threading and Vectorization needed to fully utilize modern hardware 5 A Vector parallelism in x86 AVX-512VL AVX-512BW B AVX-512DQ AVX-512CD AVX-512F C AVX2 AVX2 AVX AVX AVX SSE SSE SSE SSE Intel® microarchitecture code name … NHM SNB HSW SKL 6 (theoretically) 8x more SIMD FLOP/S compared to your (–O2) optimized baseline x - Significant leap to 512-bit SIMD support for processors - Intel® Compilers and Intel® Math Kernel Library include AVX-512 support x - Strong compatibility with AVX - Added EVEX prefix enables additional x functionality Don’t leave it on the table! 7 Two level parallelism decomposition with OpenMP: image processing example B #pragma omp parallel for for (int y = 0; y < ImageHeight; ++y){ #pragma omp simd C for (int x = 0; x < ImageWidth; ++x){ count[y][x] = mandel(in_vals[y][x]); } } 8 Two level parallelism decomposition with OpenMP: fluid dynamics processing example B #pragma omp parallel for for (int i = 0; i < X_Dim; ++i){ #pragma omp simd C for (int m = 0; x < n_velocities; ++m){ next_i = f(i, velocities(m)); X[i] = next_i; } } 9 Key components of Intel® Advisor What’s new in “2019” release Step 1. -

Unit: 4 Processes and Threads in Distributed Systems

Unit: 4 Processes and Threads in Distributed Systems Thread A program has one or more locus of execution. Each execution is called a thread of execution. In traditional operating systems, each process has an address space and a single thread of execution. It is the smallest unit of processing that can be scheduled by an operating system. A thread is a single sequence stream within in a process. Because threads have some of the properties of processes, they are sometimes called lightweight processes. In a process, threads allow multiple executions of streams. Thread Structure Process is used to group resources together and threads are the entities scheduled for execution on the CPU. The thread has a program counter that keeps track of which instruction to execute next. It has registers, which holds its current working variables. It has a stack, which contains the execution history, with one frame for each procedure called but not yet returned from. Although a thread must execute in some process, the thread and its process are different concepts and can be treated separately. What threads add to the process model is to allow multiple executions to take place in the same process environment, to a large degree independent of one another. Having multiple threads running in parallel in one process is similar to having multiple processes running in parallel in one computer. Figure: (a) Three processes each with one thread. (b) One process with three threads. In former case, the threads share an address space, open files, and other resources. In the latter case, process share physical memory, disks, printers and other resources. -

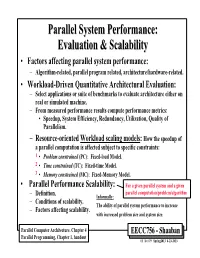

Parallel System Performance: Evaluation & Scalability

ParallelParallel SystemSystem Performance:Performance: EvaluationEvaluation && ScalabilityScalability • Factors affecting parallel system performance: – Algorithm-related, parallel program related, architecture/hardware-related. • Workload-Driven Quantitative Architectural Evaluation: – Select applications or suite of benchmarks to evaluate architecture either on real or simulated machine. – From measured performance results compute performance metrics: • Speedup, System Efficiency, Redundancy, Utilization, Quality of Parallelism. – Resource-oriented Workload scaling models: How the speedup of a parallel computation is affected subject to specific constraints: 1 • Problem constrained (PC): Fixed-load Model. 2 • Time constrained (TC): Fixed-time Model. 3 • Memory constrained (MC): Fixed-Memory Model. • Parallel Performance Scalability: For a given parallel system and a given parallel computation/problem/algorithm – Definition. Informally: – Conditions of scalability. The ability of parallel system performance to increase – Factors affecting scalability. with increased problem size and system size. Parallel Computer Architecture, Chapter 4 EECC756 - Shaaban Parallel Programming, Chapter 1, handout #1 lec # 9 Spring2013 4-23-2013 Parallel Program Performance • Parallel processing goal is to maximize speedup: Time(1) Sequential Work Speedup = < Time(p) Max (Work + Synch Wait Time + Comm Cost + Extra Work) Fixed Problem Size Speedup Max for any processor Parallelizing Overheads • By: 1 – Balancing computations/overheads (workload) on processors -

Introduction to Openmp Paul Edmon ITC Research Computing Associate

Introduction to OpenMP Paul Edmon ITC Research Computing Associate FAS Research Computing Overview • Threaded Parallelism • OpenMP Basics • OpenMP Programming • Benchmarking FAS Research Computing Threaded Parallelism • Shared Memory • Single Node • Non-uniform Memory Access (NUMA) • One thread per core FAS Research Computing Threaded Languages • PThreads • Python • Perl • OpenCL/CUDA • OpenACC • OpenMP FAS Research Computing OpenMP Basics FAS Research Computing What is OpenMP? • OpenMP (Open Multi-Processing) – Application Program Interface (API) – Governed by OpenMP Architecture Review Board • OpenMP provides a portable, scalable model for developers of shared memory parallel applications • The API supports C/C++ and Fortran on a wide variety of architectures FAS Research Computing Goals of OpenMP • Standardization – Provide a standard among a variety shared memory architectures / platforms – Jointly defined and endorsed by a group of major computer hardware and software vendors • Lean and Mean – Establish a simple and limited set of directives for programming shared memory machines – Significant parallelism can be implemented by just a few directives • Ease of Use – Provide the capability to incrementally parallelize a serial program • Portability – Specified for C/C++ and Fortran – Most majors platforms have been implemented including Unix/Linux and Windows – Implemented for all major compilers FAS Research Computing OpenMP Programming Model Shared Memory Model: OpenMP is designed for multi-processor/core, shared memory machines Thread Based Parallelism: OpenMP programs accomplish parallelism exclusively through the use of threads Explicit Parallelism: OpenMP provides explicit (not automatic) parallelism, offering the programmer full control over parallelization Compiler Directive Based: Parallelism is specified through the use of compiler directives embedded in the C/C++ or Fortran code I/O: OpenMP specifies nothing about parallel I/O. -

Parallel Computing

Parallel Computing Announcements ● Midterm has been graded; will be distributed after class along with solutions. ● SCPD students: Midterms have been sent to the SCPD office and should be sent back to you soon. Announcements ● Assignment 6 due right now. ● Assignment 7 (Pathfinder) out, due next Tuesday at 11:30AM. ● Play around with graphs and graph algorithms! ● Learn how to interface with library code. ● No late submissions will be considered. This is as late as we're allowed to have the assignment due. Why Algorithms and Data Structures Matter Making Things Faster ● Choose better algorithms and data structures. ● Dropping from O(n2) to O(n log n) for large data sets will make your programs faster. ● Optimize your code. ● Try to reduce the constant factor in the big-O notation. ● Not recommended unless all else fails. ● Get a better computer. ● Having more memory and processing power can improve performance. ● New option: Use parallelism. How Your Programs Run Threads of Execution ● When running a program, that program gets a thread of execution (or thread). ● Each thread runs through code as normal. ● A program can have multiple threads running at the same time, each of which performs different tasks. ● A program that uses multiple threads is called multithreaded; writing a multithreaded program or algorithm is called multithreading. Threads in C++ ● The newest version of C++ (C++11) has libraries that support threading. ● To create a thread: ● Write the function that you want to execute. ● Construct an object of type thread to run that function. – Need header <thread> for this. ● That function will run in parallel alongside the original program.