Challenges for the Message Passing Interface in the Petaflops Era

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Memory Consistency

Lecture 9: Memory Consistency. Parallel Computing, Stanford CS149, Winter 2019. Midterm ▪ Feb 12 ▪ Open notes ▪ Practice midterm. Shared Memory Behavior ▪ Intuition says loads should return latest value written - What is latest? - Coherence: only one memory location - Consistency: apparent ordering for all locations - Order in which memory operations performed by one thread become visible to other threads ▪ Affects - Programmability: how programmers reason about program behavior - Allowed behavior of multithreaded programs executing with shared memory - Performance: limits HW/SW optimizations that can be used - Reordering memory operations to hide latency. Today: what you should know ▪ Understand the motivation for relaxed consistency models ▪ Understand the implications of relaxing W→R ordering. Today: who should care ▪ Anyone who: - Wants to implement a synchronization library - Will ever work a job in kernel (or driver) development - Seeks to implement lock-free data structures * - Does any of the above on ARM processors ** (* Topic of a later lecture; ** For reasons to be described later). Memory coherence vs. memory consistency ▪ Memory coherence defines requirements for the observed behavior of reads and writes to the same memory location - All processors must agree on the order of reads/writes to X - In other words: it is possible to put all operations involving X on a timeline such that the observations of all processors are consistent with that timeline ▪ Memory consistency defines the behavior of reads and writes to different locations (as observed by other processors) - Coherence only guarantees that writes to address X will eventually propagate to other processors - Consistency deals with when writes to X propagate to other processors, relative to reads and writes to other addresses. [Figure: observed chronology of operations on address X: P0 write: 5; P1 read (5); P2 write: 10; P2 write: 11; P1 read (11)] Coherence vs. -
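The excerpt above mentions the implications of relaxing W→R ordering. As a rough illustration (not taken from the lecture), the sketch below enumerates every sequentially consistent interleaving of the classic store-buffering litmus test. The thread programs, register names `r0`/`r1`, and helper functions are all hypothetical names chosen for this example. Under sequential consistency the outcome `(0, 0)` never appears; hardware that lets a read pass an earlier write to a different address can produce it.

```python
# Store-buffering litmus test, explored under sequential consistency (SC).
# Each thread is a list of operations in program order.
# ("W", addr, value) writes; ("R", addr, reg) reads into a named register.
THREAD_0 = [("W", "x", 1), ("R", "y", "r0")]
THREAD_1 = [("W", "y", 1), ("R", "x", "r1")]


def interleavings(a, b):
    """Yield every merge of a and b that preserves each thread's program order."""
    if not a:
        yield list(b)
        return
    if not b:
        yield list(a)
        return
    for rest in interleavings(a[1:], b):
        yield [a[0]] + rest
    for rest in interleavings(a, b[1:]):
        yield [b[0]] + rest


def run(schedule):
    """Execute one interleaving against a single shared memory (x = y = 0 initially)."""
    memory = {"x": 0, "y": 0}
    regs = {}
    for kind, addr, arg in schedule:
        if kind == "W":
            memory[addr] = arg
        else:
            regs[arg] = memory[addr]
    return regs["r0"], regs["r1"]


def sc_outcomes():
    """Set of (r0, r1) results reachable under sequential consistency."""
    return {run(s) for s in interleavings(THREAD_0, THREAD_1)}


if __name__ == "__main__":
    print(sorted(sc_outcomes()))
```

The enumeration treats memory as one shared dictionary, which is exactly the SC assumption; modelling a relaxed machine would need per-thread store buffers, which this sketch deliberately leaves out.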

Memory Consistency: in a Distributed Outline for Lecture 20 Memory System, References to Memory in Remote Processors Do Not Take Place I

Outline for Lecture 20: I. Memory consistency. II. Consistency models not requiring synch. operations: Strict consistency; Sequential consistency; PRAM & processor consist. III. Consistency models requiring synch. operations: Weak consistency; Release consistency. Memory consistency: In a distributed memory system, references to memory in remote processors do not take place immediately. This raises a potential problem. Suppose that: • The value of a particular memory word in processor 2’s local memory is 0. • Then processor 1 writes the value 1 to that word of memory. Note that this is a remote write. • Processor 2 then reads the word. But, being local, the read occurs quickly, and the value 0 is returned. What’s wrong with this? This situation can be diagrammed like this (the horizontal axis represents time): P1: W(x)1 P2: R(x)0 Depending upon how the program is written, it may or may not be able to tolerate a situation like this. But, in any case, the programmer must understand what can happen when memory is accessed in a DSM system. Consistency models: A consistency model is essentially a contract between the software and the memory. It says that if the software agrees to obey certain rules, the memory promises to store and retrieve the expected values. © 1997 Edward F. Gehringer CSC/ECE 501 Lecture Notes Page 220 Strict consistency: The most obvious consistency model is strict consistency. Strict consistency: Any read to a memory location x returns the value stored by the most recent write operation to x. This definition implicitly assumes the existence of a global clock, so that the determination of “most recent” is unambiguous. -
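The strict-consistency definition above can be checked mechanically once every operation carries a global timestamp. The following is a minimal sketch under that assumption; the tuple layout `(time, kind, location, value)` and the function name `is_strictly_consistent` are hypothetical and not part of the lecture notes.

```python
def is_strictly_consistent(history, initial=0):
    """Return True if every read sees the most recent write by global time.

    history: iterable of (time, kind, location, value) tuples, where kind is
    "W" or "R" and the times are unique readings of an assumed global clock.
    Locations that have not been written yet hold `initial`.
    """
    latest = {}
    for _time, kind, loc, value in sorted(history):
        if kind == "W":
            latest[loc] = value
        elif latest.get(loc, initial) != value:
            return False
    return True


if __name__ == "__main__":
    # The scenario from the text: P1 writes 1 to x, then P2 reads a stale 0.
    stale_read = [(1, "W", "x", 1), (2, "R", "x", 0)]
    fresh_read = [(1, "W", "x", 1), (2, "R", "x", 1)]
    print(is_strictly_consistent(stale_read))
    print(is_strictly_consistent(fresh_read))
```

The check leans entirely on the global clock that the definition presupposes; in a real distributed shared memory system no such clock is available, which is one reason weaker models are used.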

2.5 Classification of Parallel Computers

2.5 Classification of Parallel Computers 2.5.1 Granularity In parallel computing, granularity means the amount of computation in relation to communication or synchronisation. Periods of computation are typically separated from periods of communication by synchronization events. • fine level (same operations with different data) ◦ vector processors ◦ instruction level parallelism ◦ fine-grain parallelism: – Relatively small amounts of computational work are done between communication events – Low computation to communication ratio – Facilitates load balancing – Implies high communication overhead and less opportunity for performance enhancement – If granularity is too fine it is possible that the overhead required for communications and synchronization between tasks takes longer than the computation. • operation level (different operations simultaneously) • problem level (independent subtasks) ◦ coarse-grain parallelism: – Relatively large amounts of computational work are done between communication/synchronization events – High computation to communication ratio – Implies more opportunity for performance increase – Harder to load balance efficiently 2.5.2 Hardware: Pipelining (was used in supercomputers, e.g. Cray-1) With N elements in the pipeline and L clock cycles per element, the calculation takes L + N cycles; without pipelining it takes L * N cycles. Example of good code for pipelining: `do i = 1, k; z(i) = x(i) + y(i); end do` Vector processors, fast vector operations (operations on arrays). The previous example is also good for a vector processor (vector addition), but, e.g., recursion is hard to optimise for vector processors. Example: Intel MMX – simple vector processor. -
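To make the pipelining estimate above concrete, here is a small sketch that evaluates both cycle counts as stated in the excerpt (L + N with a pipeline, L * N without). The function names and the sample values are assumptions chosen for illustration.

```python
def pipelined_cycles(n_elements, latency):
    """Cycle estimate with pipelining, using the excerpt's L + N approximation."""
    return latency + n_elements


def unpipelined_cycles(n_elements, latency):
    """Cycle estimate without pipelining: each element takes the full L cycles."""
    return latency * n_elements


def pipeline_speedup(n_elements, latency):
    """Ratio of unpipelined to pipelined cycles; approaches L as N grows."""
    return unpipelined_cycles(n_elements, latency) / pipelined_cycles(n_elements, latency)


if __name__ == "__main__":
    # Hypothetical workload: 1000 vector elements through a 4-stage pipeline.
    print(pipelined_cycles(1000, 4), unpipelined_cycles(1000, 4))
    print(round(pipeline_speedup(1000, 4), 3))
```

For long vectors the ratio tends toward the pipeline depth L, which is why loops with independent iterations such as the vector addition above suit pipelined and vector hardware.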

Distributed Algorithms with Theoretic Scalability Analysis of Radial and Looped Load Flows for Power Distribution Systems

Electric Power Systems Research 65 (2003) 169-177 www.elsevier.com/locate/epsr Distributed algorithms with theoretic scalability analysis of radial and looped load flows for power distribution systems Fangxing Li *, Robert P. Broadwater ECE Department Virginia Tech, Blacksburg, VA 24060, USA Received 15 April 2002; received in revised form 14 December 2002; accepted 16 December 2002 Abstract This paper presents distributed algorithms for both radial and looped load flows for unbalanced, multi-phase power distribution systems. The distributed algorithms are developed from tree-based sequential algorithms. Formulas of scalability for the distributed algorithms are presented. It is shown that computation time dominates communication time in the distributed computing model. This provides benefits to real-time load flow calculations, network reconfigurations, and optimization studies that rely on load flow calculations. Also, test results match the predictions of derived formulas. This shows the formulas can be used to predict the computation time when additional processors are involved. © 2003 Elsevier Science B.V. All rights reserved. Keywords: Distributed computing; Scalability analysis; Radial load flow; Looped load flow; Power distribution systems 1. Introduction Parallel and distributed computing has been applied to many scientific and engineering computations such as weather forecasting and nuclear simulations [1,2]. It also has been applied to power system analysis calculations [3-14]. [...] Also, the method presented in Ref. [10] was tested in radial distribution systems with no more than 528 buses. More recent works [11-14] presented distributed implementations for power flows or power flow based algorithms like optimizations and contingency analysis. These works also targeted power transmission systems. -

Scalability and Performance Management of Internet Applications in the Cloud

Hasso-Plattner-Institut University of Potsdam Internet Technology and Systems Group Scalability and Performance Management of Internet Applications in the Cloud A thesis submitted for the degree of "Doktors der Ingenieurwissenschaften" (Dr.-Ing.) in IT Systems Engineering Faculty of Mathematics and Natural Sciences University of Potsdam By: Wesam Dawoud Supervisor: Prof. Dr. Christoph Meinel Potsdam, Germany, March 2013 This work is licensed under a Creative Commons License: Attribution – Noncommercial – No Derivative Works 3.0 Germany To view a copy of this license visit http://creativecommons.org/licenses/by-nc-nd/3.0/de/ Published online at the Institutional Repository of the University of Potsdam: URL http://opus.kobv.de/ubp/volltexte/2013/6818/ URN urn:nbn:de:kobv:517-opus-68187 http://nbn-resolving.de/urn:nbn:de:kobv:517-opus-68187 To my lovely parents To my lovely wife Safaa To my lovely kids Shatha and Yazan Acknowledgements At Hasso Plattner Institute (HPI), I had the opportunity to meet many wonderful people. It is my pleasure to thank those who supported me to make this thesis possible. First and foremost, I would like to thank my Ph.D. supervisor, Prof. Dr. Christoph Meinel, for his continuous support. In spite of his tight schedule, he always found the time to discuss, guide, and motivate my research ideas. The thanks are extended to Dr. Karin-Irene Eiermann for assisting me even before moving to Germany. I am also grateful to Michaela Schmitz. She managed everything well to make everyone's life easier. I owe thanks to Dr. Nemeth Sharon for helping me to improve my English writing skills. -

Towards Shared Memory Consistency Models for GPUs

TOWARDS SHARED MEMORY CONSISTENCY MODELS FOR GPUS by Tyler Sorensen A thesis submitted to the faculty of The University of Utah in partial fulfillment of the requirements for the degree of Bachelor of Science School of Computing The University of Utah March 2013 FINAL READING APPROVAL I have read the thesis of Tyler Sorensen in its final form and have found that (1) its format, citations, and bibliographic style are consistent and acceptable; (2) its illustrative materials including figures, tables, and charts are in place. Date Ganesh Gopalakrishnan ABSTRACT With the widespread use of GPUs, it is important to ensure that programmers have a clear understanding of their shared memory consistency model i.e. what values can be read when issued concurrently with writes. While memory consistency has been studied for CPUs, GPUs present very different memory and concurrency systems and have not been well studied. We propose a collection of litmus tests that shed light on interesting visibility and ordering properties. These include classical shared memory consistency model properties, such as coherence and write atomicity, as well as GPU specific properties e.g. memory visibility differences between intra and inter block threads. The results of the litmus tests are determined by feedback from industry experts, the limited documentation available and properties common to all modern multi-core systems. Some of the behaviors remain unresolved. Using the results of the litmus tests, we establish a formal state transition model using intuitive data structures and operations. We implement our model in the Murphi modeling language and verify the initial litmus tests. -

Scalability and Optimization Strategies for GPU Enhanced Neural Networks (GeNN)

Scalability and Optimization Strategies for GPU Enhanced Neural Networks (GeNN) Naresh Balaji1, Esin Yavuz2, Thomas Nowotny2 {T.Nowotny, E.Yavuz}@sussex.ac.uk, [email protected] 1National Institute of Technology, Tiruchirappalli, India 2School of Engineering and Informatics, University of Sussex, Brighton, UK Abstract: Simulation of spiking neural networks has been traditionally done on high-performance supercomputers or large-scale clusters. Utilizing the parallel nature of neural network computation algorithms, GeNN (GPU Enhanced Neural Network) provides a simulation environment that performs on General Purpose NVIDIA GPUs with a code generation based approach. GeNN allows the users to design and simulate neural networks by specifying the populations of neurons at different stages, their synapse connection densities and the model of individual neurons. In this report we describe work on how to scale synaptic weights based on the configuration of the user-defined network to ensure sufficient spiking and subsequent effective learning. We also discuss optimization strategies particular to GPU computing: sparse representation of synapse connections and occupancy based block-size determination. Keywords: Spiking Neural Network, GPGPU, CUDA, code-generation, occupancy, optimization 1. Introduction The computational performance of traditional single-core CPUs has steadily increased since their arrival, primarily due to the combination of increased clock frequency, process technology advancements and compiler optimizations. The clock frequency was pushed higher and higher, from 5 MHz to 3 GHz in the years from 1983 to 2002, until it reached a stall a few years ago [1] when the power consumption and dissipation of the transistors reached peaks that could not be compensated by its cost [2]. -

Massively Parallel Computing with CUDA

Massively Parallel Computing with CUDA. Antonino Tumeo, Politecnico di Milano. "GPUs have evolved to the point where many real world applications are easily implemented on them and run significantly faster than on multi-core systems. Future computing architectures will be hybrid systems with parallel-core GPUs working in tandem with multi-core CPUs." Jack Dongarra, Professor, University of Tennessee; Author of “Linpack”. Why Use the GPU? • The GPU has evolved into a very flexible and powerful processor: • It’s programmable using high-level languages • It supports 32-bit and 64-bit floating point IEEE-754 precision • It offers lots of GFLOPS • GPU in every PC and workstation. What is behind such an Evolution? • The GPU is specialized for compute-intensive, highly parallel computation (exactly what graphics rendering is about) • So, more transistors can be devoted to data processing rather than data caching and flow control [Figure: CPU vs. GPU chip layout showing ALUs, control, cache, and DRAM] • The fast-growing video game industry exerts strong economic pressure that forces constant innovation. GPUs • Each NVIDIA GPU has 240 parallel cores • 1.4 Billion Transistors • 1 Teraflop of processing power • Within each core: • Floating point unit • Logic unit (add, sub, mul, madd) • Move, compare unit • Branch unit • Cores managed by thread manager • Thread manager can spawn and manage 12,000+ threads per core • Zero overhead thread switching. Heterogeneous Computing Domains Graphics Massive Data GPU Parallelism (Parallel Computing) Instruction CPU Level (Sequential -

Parallel Computer Architecture

Parallel Computer Architecture. Introduction to Parallel Computing, CIS 410/510, Department of Computer and Information Science, University of Oregon, IPCC. Lecture 2 – Parallel Architecture. Outline • Parallel architecture types • Instruction-level parallelism • Vector processing • SIMD • Shared memory ◦ Memory organization: UMA, NUMA ◦ Coherency: CC-UMA, CC-NUMA • Interconnection networks • Distributed memory • Clusters • Clusters of SMPs • Heterogeneous clusters of SMPs. Parallel Architecture Types • Uniprocessor – Scalar processor – Vector processor – Single Instruction Multiple Data (SIMD) • Shared Memory Multiprocessor (SMP) – Shared memory address space – Bus-based memory system – Interconnection network [Figures: processor and memory block diagrams for each type]. Parallel Architecture Types (2) • Distributed Memory Multiprocessor – Message passing between nodes – Massively Parallel Processor (MPP) • Many, many processors • Cluster of SMPs – Shared memory addressing within SMP node – Message passing between SMP nodes – Can also be regarded as MPP if processor number is large [Figures: processors and memories connected through network interfaces and an interconnection network] -

A Review of Multicore Processors with Parallel Programming

International Journal of Engineering Technology, Management and Applied Sciences www.ijetmas.com September 2015, Volume 3, Issue 9, ISSN 2349-4476 A Review of Multicore Processors with Parallel Programming Anchal Thakur, Research Scholar, CSE Department, L.R Institute of Engineering and Technology, Solan, India. Ravinder Thakur, Assistant Professor, CSE Department, L.R Institute of Engineering and Technology, Solan, India. ABSTRACT When computers were first introduced to the market, they came with single processors, which limited their performance and efficiency. The classic way of overcoming the performance issue was to use bigger processors to process data at higher speed. Bigger processors did improve performance to a certain extent, but they consumed a lot of power, which started overheating the internal circuits. To achieve efficiency and speed simultaneously, CPU architectures moved to multicore processor units in which two or more processors are used to execute a task. Multicore technology offered better response time while running big applications, better power management and faster execution. Multicore processors also gave developers an opportunity to use parallel programming to execute tasks in parallel. These days parallel programming is used to execute a task by dividing it into smaller sets of instructions and executing them on different cores. By using parallel programming, the complex tasks carried out in a multicore environment can be executed with higher efficiency and performance. Keywords: Multicore Processing, Multicore Utilization, Parallel Processing. INTRODUCTION From the day computers were invented, great importance has been given to their efficiency in executing tasks. -

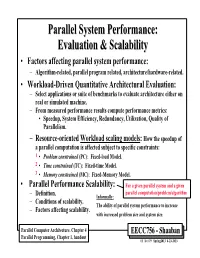

Parallel System Performance: Evaluation & Scalability

Parallel System Performance: Evaluation & Scalability • Factors affecting parallel system performance: – Algorithm-related, parallel program related, architecture/hardware-related. • Workload-Driven Quantitative Architectural Evaluation: – Select applications or suite of benchmarks to evaluate architecture either on real or simulated machine. – From measured performance results compute performance metrics: • Speedup, System Efficiency, Redundancy, Utilization, Quality of Parallelism. – Resource-oriented Workload scaling models: How the speedup of a parallel computation is affected subject to specific constraints: 1. Problem constrained (PC): Fixed-load Model. 2. Time constrained (TC): Fixed-time Model. 3. Memory constrained (MC): Fixed-Memory Model. • Parallel Performance Scalability: For a given parallel system and a given parallel computation/problem/algorithm – Definition. Informally: The ability of parallel system performance to increase with increased problem size and system size. – Conditions of scalability. – Factors affecting scalability. Parallel Computer Architecture, Chapter 4; Parallel Programming, Chapter 1, handout #1. EECC756 - Shaaban, lec # 9, Spring 2013, 4-23-2013. Parallel Program Performance • Parallel processing goal is to maximize speedup (fixed problem size speedup): $\text{Speedup} = \frac{\text{Time}(1)}{\text{Time}(p)} < \frac{\text{Sequential Work}}{\max(\text{Work} + \text{Synch Wait Time} + \text{Comm Cost} + \text{Extra Work})}$, where the max is taken over all processors and the synchronization, communication, and extra-work terms are the parallelizing overheads. • By: 1 – Balancing computations/overheads (workload) on processors -
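The speedup bound quoted above divides the sequential work by the load of the most heavily burdened processor. The sketch below evaluates that bound for a small made-up workload; the function name `speedup_bound`, the dictionary keys, and all numbers are hypothetical and only illustrate how the four per-processor terms combine.

```python
def speedup_bound(sequential_work, per_processor_costs):
    """Upper bound on fixed-problem-size speedup.

    per_processor_costs: one dict per processor with the keys "work",
    "sync_wait", "comm" and "extra", all in the same time unit as
    sequential_work. The bound is limited by the slowest processor.
    """
    slowest = max(
        c["work"] + c["sync_wait"] + c["comm"] + c["extra"]
        for c in per_processor_costs
    )
    return sequential_work / slowest


if __name__ == "__main__":
    # Hypothetical two-processor run of a job that takes 100 units sequentially.
    costs = [
        {"work": 50, "sync_wait": 5, "comm": 5, "extra": 0},
        {"work": 40, "sync_wait": 0, "comm": 5, "extra": 5},
    ]
    print(round(speedup_bound(100, costs), 3))
```

In this made-up case the first processor carries 60 units in total, so the bound falls well short of the ideal factor of 2, which is the motivation for balancing work and overheads across processors.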

On the Coexistence of Shared-Memory and Message-Passing in The

On the Coexistence of Shared-Memory and Message-Passing in the Programming of Parallel Applications J. Cordsen¹ and W. Schröder-Preikschat² ¹GMD FIRST, Rudower Chaussee, D Berlin, Germany ²University of Potsdam, Am Neuen Palais, D Potsdam, Germany Abstract. Interoperability in non-sequential applications requires communication to exchange information using either the shared-memory or message-passing paradigm. In the past, the communication paradigm in use was determined through the architecture of the underlying computing platform. Shared-memory computing systems were programmed to use shared-memory communication, whereas distributed-memory architectures were running applications communicating via message-passing. Current trends in the architecture of parallel machines are based on shared-memory and distributed-memory. For scalable parallel applications, in order to maintain transparency and efficiency, both communication paradigms have to coexist. Users should not be obliged to know when to use which of the two paradigms. On the other hand, the user should be able to exploit either of the paradigms directly in order to achieve the best possible solution. The paper presents the VOTE communication support system. VOTE provides coexistent implementations of shared-memory and message-passing communication. Applications can change the communication paradigm dynamically at runtime, thus are able to employ the underlying computing system in the most convenient and application-oriented way. The presented case study and detailed performance analysis underpins the