Scalability and Performance Management of Internet Applications in the Cloud

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Distributed Algorithms with Theoretic Scalability Analysis of Radial and Looped Load Flows for Power Distribution Systems

Electric Power Systems Research 65 (2003) 169-177, www.elsevier.com/locate/epsr. Distributed algorithms with theoretic scalability analysis of radial and looped load flows for power distribution systems. Fangxing Li *, Robert P. Broadwater, ECE Department, Virginia Tech, Blacksburg, VA 24060, USA. Received 15 April 2002; received in revised form 14 December 2002; accepted 16 December 2002. Abstract: This paper presents distributed algorithms for both radial and looped load flows for unbalanced, multi-phase power distribution systems. The distributed algorithms are developed from tree-based sequential algorithms. Formulas of scalability for the distributed algorithms are presented. It is shown that computation time dominates communication time in the distributed computing model. This provides benefits to real-time load flow calculations, network reconfigurations, and optimization studies that rely on load flow calculations. Also, test results match the predictions of derived formulas. This shows the formulas can be used to predict the computation time when additional processors are involved. © 2003 Elsevier Science B.V. All rights reserved. Keywords: Distributed computing; Scalability analysis; Radial load flow; Looped load flow; Power distribution systems. 1. Introduction. Parallel and distributed computing has been applied to many scientific and engineering computations such as weather forecasting and nuclear simulations [1,2]. It also has been applied to power system analysis calculations [3-14]. [...] Also, the method presented in Ref. [10] was tested in radial distribution systems with no more than 528 buses. More recent works [11-14] presented distributed implementations for power flows or power flow based algorithms like optimizations and contingency analysis. These works also targeted power transmission systems.

Scalability and Optimization Strategies for GPU Enhanced Neural Networks (GeNN)

Scalability and Optimization Strategies for GPU Enhanced Neural Networks (GeNN). Naresh Balaji1, Esin Yavuz2, Thomas Nowotny2. {T.Nowotny, E.Yavuz}@sussex.ac.uk, [email protected]. 1National Institute of Technology, Tiruchirappalli, India. 2School of Engineering and Informatics, University of Sussex, Brighton, UK. Abstract: Simulation of spiking neural networks has traditionally been done on high-performance supercomputers or large-scale clusters. Utilizing the parallel nature of neural network computation algorithms, GeNN (GPU Enhanced Neural Network) provides a simulation environment that runs on general-purpose NVIDIA GPUs with a code generation based approach. GeNN allows users to design and simulate neural networks by specifying the populations of neurons at different stages, their synapse connection densities and the model of individual neurons. In this report we describe work on how to scale synaptic weights based on the configuration of the user-defined network to ensure sufficient spiking and subsequent effective learning. We also discuss optimization strategies particular to GPU computing: sparse representation of synapse connections and occupancy based block-size determination. Keywords: Spiking Neural Network, GPGPU, CUDA, code-generation, occupancy, optimization. 1. Introduction. The computational performance of traditional single-core CPUs has steadily increased since their arrival, primarily due to the combination of increased clock frequency, process technology advancements and compiler optimizations. The clock frequency was pushed higher and higher, from 5 MHz to 3 GHz in the years from 1983 to 2002, until it reached a stall a few years ago [1] when the power consumption and dissipation of the transistors reached peaks that could not be compensated by its cost [2].

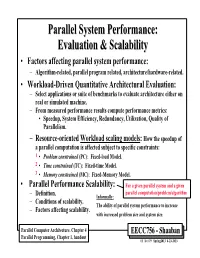

Parallel System Performance: Evaluation & Scalability

Parallel System Performance: Evaluation & Scalability

• Factors affecting parallel system performance:
  – Algorithm-related, parallel program related, architecture/hardware-related.
• Workload-Driven Quantitative Architectural Evaluation:
  – Select applications or suite of benchmarks to evaluate architecture either on real or simulated machine.
  – From measured performance results compute performance metrics:
    • Speedup, System Efficiency, Redundancy, Utilization, Quality of Parallelism.
  – Resource-oriented workload scaling models: how the speedup of a parallel computation is affected subject to specific constraints:
    1. Problem constrained (PC): Fixed-load Model.
    2. Time constrained (TC): Fixed-time Model.
    3. Memory constrained (MC): Fixed-Memory Model.
• Parallel Performance Scalability: for a given parallel system and a given parallel computation/problem/algorithm:
  – Definition. Informally: the ability of parallel system performance to increase with increased problem size and system size.
  – Conditions of scalability.
  – Factors affecting scalability.

Parallel Computer Architecture, Chapter 4; Parallel Programming, Chapter 1, handout #1. EECC756 - Shaaban, lec # 9, Spring 2013, 4-23-2013.

Parallel Program Performance

• Parallel processing goal is to maximize speedup (fixed problem size speedup):

$$\text{Speedup} = \frac{\text{Time}(1)}{\text{Time}(p)} < \frac{\text{Sequential Work}}{\max\,(\text{Work} + \text{Synch Wait Time} + \text{Comm Cost} + \text{Extra Work})}$$

  where the maximum is taken over all processors, and Synch Wait Time, Comm Cost and Extra Work are the parallelizing overheads.
• By:
  1. Balancing computations/overheads (workload) on processors
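The speedup bound in the slide excerpt above can be made concrete with a small calculation. The following is a minimal Python sketch, not code from the lecture: the names `ProcessorCost`, `speedup`, `efficiency` and `speedup_bound` are hypothetical, the sample numbers are invented for illustration, and efficiency is taken as speedup divided by processor count, which is the usual textbook definition and is not spelled out in the excerpt.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class ProcessorCost:
    """Time spent by one processor, split as in the slide's denominator."""
    work: float        # useful computation
    sync_wait: float   # time waiting at synchronization points
    comm_cost: float   # communication time
    extra_work: float  # redundant work introduced by parallelization

    def total(self) -> float:
        return self.work + self.sync_wait + self.comm_cost + self.extra_work


def speedup(time_1: float, time_p: float) -> float:
    """Measured speedup: Time(1) / Time(p)."""
    return time_1 / time_p


def efficiency(time_1: float, time_p: float, p: int) -> float:
    """Assumed definition: speedup divided by the number of processors."""
    return speedup(time_1, time_p) / p


def speedup_bound(sequential_work: float, costs: Sequence[ProcessorCost]) -> float:
    """Bound from the slide: sequential work over the slowest processor's total."""
    return sequential_work / max(c.total() for c in costs)


if __name__ == "__main__":
    # Illustrative numbers only: 100 units of work split evenly over 4 processors.
    costs = [
        ProcessorCost(25, 2, 3, 0),
        ProcessorCost(25, 0, 3, 0),
        ProcessorCost(25, 5, 3, 2),  # slowest processor: 35 units in total
        ProcessorCost(25, 1, 3, 0),
    ]
    print(round(speedup_bound(100, costs), 3))  # 2.857
    print(efficiency(100, 40, 4))               # 0.625
```

Even with a perfectly even split of the work, the processor with the largest overhead sets the bound, which is why the slide lists balancing computations and overheads as the first step.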

Multiprocessing and Scalability

Multiprocessing and Scalability. A.R. Hurson, Computer Science and Engineering, The Pennsylvania State University. Fall 2004.

Large-scale multiprocessor systems have long held the promise of substantially higher performance than traditional uni-processor systems. However, due to a number of difficult problems, the potential of these machines has been difficult to realize. This is because of the:

• Advances in technology: rate of increase in performance of uni-processors.
• Complexity of multiprocessor system design: this drastically affected the cost and implementation cycle.
• Programmability of multiprocessor systems: design complexity of parallel algorithms and parallel programs.

Programming a parallel machine is more difficult than programming a sequential one. In addition, it takes much more effort to port an existing sequential program to a parallel machine than to a newly developed sequential machine. The lack of good parallel programming environments and standard parallel languages has further aggravated this issue.

As a result, the absolute performance of many early concurrent machines was not significantly better than available or soon-to-be available uni-processors.

Recently, there has been an increased interest in large-scale or massively parallel processing systems. This interest stems from many factors, including: Advances in integrated technology. Very

PaScal Viewer: a Tool for the Visualization of Parallel Scalability Trends

PaScal Viewer: a Tool for the Visualization of Parallel Scalability Trends. 1st Anderson B. N. da Silva, Pró-Reitoria de Pesquisa, Inovação e Pós-Graduação, Instituto Federal da Paraiba, Joao Pessoa, Brazil, [email protected]. 2nd Daniel A. M. Cunha, Dept. de Eng. de Comp. e Automação, Univ. Federal do Rio Grande do Norte, Natal, Brazil, [email protected]. 3rd Vitor R. G. Silva, Dept. de Eng. de Comp. e Automação, Univ. Federal do Rio Grande do Norte, Natal, Brazil, [email protected]. 4th Alex F. de A. Furtunato, Diretoria de Tecnologia da Informação, Instituto Federal do Rio Grande do Norte, Natal, Brazil, [email protected]. 5th Samuel Xavier de Souza, Dept. de Eng. de Comp. e Automação, Univ. Federal do Rio Grande do Norte, Natal, Brazil, [email protected]. Abstract: Taking advantage of the growing number of cores in supercomputers to increase the scalability of parallel programs is an increasing challenge. Many advanced profiling tools have been developed to assist programmers in the process of analyzing data related to the execution of their program. Programmers can act upon the information generated by these data and make their programs reach higher performance levels. However, the information provided by profiling tools is generally designed [...] particular environment. The configuration of this environment includes the number of cores, their operating frequency, and the size of the input data or the problem size. Among the various collected information, the elapsed time in each function of the code, the number of function calls, and the memory consumption of the program can be cited to name just a few [2].

Computer Architecture: Parallel Processing Basics

Computer Architecture: Parallel Processing Basics. Onur Mutlu & Seth Copen Goldstein, Carnegie Mellon University, 9/9/13.

Today: What is Parallel Processing? Why? Kinds of Parallel Processing. Multiprocessing and Multithreading. Measuring success: Speedup, Amdahl's Law. Bottlenecks to parallelism.

Concurrent Systems: Embedded-Physical Distributed (Sensor Networks, Claytronics); Geographically Distributed (Power Grid, Internet); Cloud Computing (EC2, Tashi); Parallel. PDL'09 © 2007-9 Goldstein

Concurrent Systems

| | Physical | Geographical | Cloud | Parallel |
|---|---|---|---|---|
| Geophysical location | +++ | ++ | --- | --- |
| Relative location | +++ | +++ | + | - |
| Faults | ++++ | +++ | ++++ | -- |
| Number of Processors | +++ | +++ | + | - + |
| Network structure | varies | varies | fixed | fixed |
| Network connectivity | --- | --- | + | + |

Concurrent System Challenge: Programming. The old joke: How long does it take to write a parallel program? One Graduate Student Year.

Parallel Programming Again?? Increased demand (multicore). Increased scale (cloud). Improved compute/communicate. Change in Application focus: Irregular, Recursive data structures.

Why Parallel Computers? Parallelism: Doing multiple things at a time. Things: instructions,

Parallel Programming with OpenMP

Parallel Programming with OpenMP

Introduction: OpenMP Programming Model. Thread-based parallelism is utilized on shared-memory platforms. Parallelization is either explicit, where the programmer has full control over parallelization, or achieved through compiler directives placed in the source code. A thread is a sequence of instructions being executed; a thread of execution is the smallest unit of processing. Multiple threads can exist within the same process and share resources such as memory. The master thread is a single thread that runs sequentially; parallel execution occurs inside parallel regions, and between two parallel regions only the master thread executes the code. This is called the fork-join model.

OpenMP Parallel Computing Hardware. Shared memory allows immediate access to all data from all processors without explicit communication. In a shared memory machine, multiple CPUs are attached to the bus; all processors share the same primary memory; the same memory address on different CPUs refers to the same memory location. The CPU-to-memory connection becomes a bottleneck, so shared memory computers cannot scale very well.

OpenMP versus MPI. OpenMP (Open Multi-Processing): easy to use; loop-level parallelism; non-loop-level parallelism is more difficult; limited to shared memory computers; cannot handle very large problems. An alternative is MPI (Message Passing Interface): requires low-level programming; more difficult programming
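The fork-join model described in the excerpt above can be illustrated outside OpenMP. The following is a minimal Python sketch of the control flow only, under stated assumptions: OpenMP itself is a set of compiler directives for C, C++ and Fortran, so this is an analogy rather than OpenMP code, and the names `parallel_region` and `fork_join_sum` are hypothetical. It uses the standard-library `concurrent.futures.ThreadPoolExecutor`; because of CPython's global interpreter lock, it should not be read as a demonstration of speedup.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def parallel_region(chunks: Sequence[Sequence[int]], num_threads: int) -> List[int]:
    """Fork worker threads, sum each chunk, then join before returning."""
    with ThreadPoolExecutor(max_workers=num_threads) as pool:  # fork
        partial_sums = list(pool.map(sum, chunks))
    # Leaving the with-block waits for every worker to finish (join).
    return partial_sums


def fork_join_sum(data: Sequence[int], num_threads: int = 4) -> int:
    # Sequential part: only the main ("master") thread runs here.
    chunk_size = max(1, (len(data) + num_threads - 1) // num_threads)
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]

    # Parallel region: work is shared among the worker threads.
    partial_sums = parallel_region(chunks, num_threads)

    # Sequential part again: the main thread combines the partial results.
    return sum(partial_sums)


if __name__ == "__main__":
    print(fork_join_sum(list(range(1, 101))))  # 5050
```

The split into a sequential prologue, a parallel region and a sequential epilogue mirrors the description that only the master thread executes between parallel regions.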

Challenges for the Message Passing Interface in the Petaflops Era

Challenges for the Message Passing Interface in the Petaflops Era. William D. Gropp, Mathematics and Computer Science, www.mcs.anl.gov/~gropp. Argonne National Laboratory.

What this Talk is About. The title talks about MPI:
– Because MPI is the dominant parallel programming model in computational science
But the issue is really:
– What are the needs of the parallel software ecosystem?
– How does MPI fit into that ecosystem?
– What are the missing parts (not just from MPI)?
– How can MPI adapt or be replaced in the parallel software ecosystem?
– Short version of this talk:
  • The problem with MPI is not with what it has but with what it is missing
Let's start with some history …

Quotes from “System Software and Tools for High Performance Computing Environments” (1993): “The strongest desire expressed by these users was simply to satisfy the urgent need to get applications codes running on parallel machines as quickly as possible.” In a list of enabling technologies for mathematical software: “Parallel prefix for arbitrary user-defined associative operations should be supported. Conflicts between system and library (e.g., in message types) should be automatically avoided.”
– Note that MPI-1 provided both
Immediate Goals for Computing Environments:
– Parallel computer support environment
– Standards for same
– Standard for parallel I/O
– Standard for message passing on distributed memory machines
“The single greatest hindrance to significant penetration of MPP technology in scientific computing is the absence of common programming interfaces across various parallel computing systems.”

Quotes from “Enabling Technologies for Petaflops Computing” (1995): “The software for the current generation of 100 GF machines is not adequate to be scaled to a TF…” “The Petaflops computer is achievable at reasonable cost with technology available in about 20 years [2014].”
– (estimated clock speed in 2004: 700 MHz)*
“Software technology for MPP’s must evolve new ways to design software that is portable across a wide variety of computer architectures.

Scalable SIMD-Efficient Graph Processing on GPUs

2015 International Conference on Parallel Architecture and Compilation. Scalable SIMD-Efficient Graph Processing on GPUs. Farzad Khorasani, Rajiv Gupta, Laxmi N. Bhuyan, Computer Science and Engineering Department, University of California Riverside, CA, USA, {fkhor001, gupta, bhuyan}@cs.ucr.edu. Abstract: The vast computing power of GPUs makes them an attractive platform for accelerating large scale data parallel computations such as popular graph processing applications. However, the inherent irregularity and large sizes of real-world power law graphs makes effective use of GPUs a major challenge. In this paper we develop techniques that greatly enhance the performance and scalability of vertex-centric graph processing on GPUs. First, we present Warp Segmentation, a novel method that greatly enhances GPU device utilization by dynamically assigning appropriate number of SIMD threads to process a vertex with irregular-sized neighbors while employing compact CSR representation to maximize the graph size that can be kept inside the GPU global memory. Prior works can either maximize graph sizes (VWC [11] uses the CSR representation) or device utilization [...] In this paper we present techniques that maximize the scalability and performance of vertex-centric graph processing on multi-GPU systems by fully exploiting the available resources as follows: SIMD hardware: The irregular nature of power law graphs makes it difficult to balance load across threads, leading to underutilization of SIMD resources. We address the device underutilization problem of a GPU by developing Warp Segmentation that dynamically assigns appropriate number of SIMD threads to process a vertex with irregular-sized neighbors. Our experiments show that the warp execution efficiency of warp segmentation exceeds 70% while for the well known VWC [11] technique it is around 40%.

Computing at Massive Scale: Scalability and Dependability Challenges

This is a repository copy of Computing at massive scale: Scalability and dependability challenges. White Rose Research Online URL for this paper: http://eprints.whiterose.ac.uk/105671/ Version: Accepted Version Proceedings Paper: Yang, R and Xu, J orcid.org/0000-0002-4598-167X (2016) Computing at massive scale: Scalability and dependability challenges. In: Proceedings - 2016 IEEE Symposium on Service-Oriented System Engineering, SOSE 2016. 2016 IEEE Symposium on Service-Oriented System Engineering (SOSE), 29 Mar - 02 Apr 2016, Oxford, United Kingdom. IEEE , pp. 386-397. ISBN 9781509022533 https://doi.org/10.1109/SOSE.2016.73 (c) 2016 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other users, including reprinting/ republishing this material for advertising or promotional purposes, creating new collective works for resale or redistribution to servers or lists, or reuse of any copyrighted components of this work in other works. Reuse Unless indicated otherwise, fulltext items are protected by copyright with all rights reserved. The copyright exception in section 29 of the Copyright, Designs and Patents Act 1988 allows the making of a single copy solely for the purpose of non-commercial research or private study within the limits of fair dealing. The publisher or other rights-holder may allow further reproduction and re-use of this version - refer to the White Rose Research Online record for this item. Where records identify the publisher as the copyright holder, users can verify any specific terms of use on the publisher’s website. Takedown If you consider content in White Rose Research Online to be in breach of UK law, please notify us by emailing [email protected] including the URL of the record and the reason for the withdrawal request. -

CUDA Basics Murphy Stein New York University

CUDA Basics. Murphy Stein, New York University.

Overview
● Device Architecture
● CUDA Programming Model
● Matrix Transpose in CUDA
● Further Reading

What is CUDA? CUDA stands for “Compute Unified Device Architecture”. It is 2 things:
1. Device Architecture Specification
2. A small extension to C = New Syntax + Built-in Variables – Restrictions + Libraries

Device Architecture: Streaming Multiprocessor (SM). 1 SM contains 8 scalar cores.
● Up to 8 cores can run simultaneously
● Each core executes identical instruction set, or sleeps
● SM schedules instructions across cores with 0 overhead
● Up to 32 threads may be scheduled at a time, called a warp, but max 24 warps active in 1 SM
● Thread-level memory-sharing supported via Shared Memory
● Register memory is local to thread, and divided amongst all blocks on SM
[Figure: SM block diagram: Instruction Fetch/Dispatch; Streaming Cores #1 to #8; Shared Memory 16KB; Registers 8KB; Texture Memory Cache 5-8 KB; Constant Memory Cache 8KB]

Transparent Scalability
• Hardware is free to assign blocks to any processor at any time
  – A kernel scales across any number of parallel processors
Each block can execute in any order relative to other blocks.
[Figure: a kernel grid of Blocks 0 to 7 scheduled over time on two devices]
© David Kirk/NVIDIA and Wen-mei W. Hwu, 2007-2009

SM Warp Scheduling
• SM hardware implements zero-overhead warp scheduling
  – Warps whose next instruction has its operands ready for consumption are eligible for execution
  – Eligible warps are selected for execution on a prioritized scheduling policy
  – All threads in a warp execute the same instruction when selected
• 4 clock cycles needed to dispatch
[Figure: SM multithreaded warp scheduler timeline: warp 8 instruction 11, warp 1 instruction 42, warp 3 instruction 95, ...]

Evaluation of a Multithreaded Architecture for Cellular Computing

Evaluation of a Multithreaded Architecture for Cellular Computing. Călin Caşcaval, José G. Castaños, Luis Ceze, Monty Denneau, Manish Gupta, Derek Lieber, José E. Moreira, Karin Strauss, Henry S. Warren, Jr. IBM Thomas J. Watson Research Center, Yorktown Heights, NY 10598-0218. {cascaval,castanos,lceze,denneau,mgupta,lieber,jmoreira,kstrauss,hankw}@us.ibm.com. Abstract: Cyclops is a new architecture for high performance parallel computers being developed at the IBM T. J. Watson Research Center. The basic cell of this architecture is a single-chip SMP system with multiple threads of execution, embedded memory, and integrated communications hardware. Massive intra-chip parallelism is used to tolerate memory and functional unit latencies. Large systems with thousands of chips can be built by replicating this basic cell in a regular pattern. In this paper we describe the Cyclops architecture and evaluate two of its new hardware features: memory hierarchy with flexible cache organization and fast barrier hardware. [...] to reduce the impact of memory hierarchy and functional unit latencies. Approaches like out-of-order execution, multiple instruction issue, and deep pipelines have been successful in the past. However, the average number of machine cycles to execute an instruction has not improved significantly in the past few years. This indicates that we are reaching a point of diminishing returns as measured by the increase in performance obtained from additional transistors and power. This paper describes the Cyclops architecture, a new approach to effectively use the transistor and power budget of a piece of silicon. The primary reasoning behind Cyclops is that computer architecture and organization has become