Evaluation of a Multithreaded Architecture for Cellular Computing

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


NVIDIA Tesla Personal Supercomputer, Please Visit

NVIDIA TESLA PERSONAL SUPERCOMPUTER | TESLA DATASHEET. Get your own supercomputer. Experience cluster level computing performance, up to 250 times faster than standard PCs and workstations, right at your desk. The NVIDIA® Tesla™ Personal Supercomputer is based on the revolutionary NVIDIA CUDA™ parallel computing architecture and powered by up to 960 parallel processing cores. TESLA C1060 COMPUTING PROCESSORS ARE THE CORE OF THE TESLA PERSONAL SUPERCOMPUTER. YOUR OWN SUPERCOMPUTER: Get nearly 4 teraflops of compute capability and the ability to perform computations 250 times faster than a multi-CPU core PC or workstation. ACCESSIBLE TO EVERYONE: Available from OEMs and resellers worldwide, the Tesla Personal Supercomputer operates quietly and plugs into a standard power strip so you can take advantage of cluster level performance anytime you want, right from your desk. NVIDIA CUDA UNLOCKS THE POWER OF GPU PARALLEL COMPUTING: The CUDA™ parallel computing architecture enables developers to utilize C programming with NVIDIA GPUs to run the most complex computationally-intensive applications. CUDA is easy to learn and has become widely adopted by thousands of application developers worldwide to accelerate the most performance demanding applications. TESLA PERSONAL SUPERCOMPUTER | DATASHEET | MAR09 | FINAL. FEATURES AND BENEFITS. Your own Supercomputer: Dedicated computing resource for every computational researcher and technical professional. Cluster Performance on your Desktop: The performance of a cluster in a desktop system. Four Tesla computing processors deliver nearly 4 teraflops of performance. Designed for Office Use: Plugs into a standard office power socket and quiet enough for use at your desk. Massively Parallel Many Core GPU Architecture: 240 parallel processor cores per GPU that can execute thousands of concurrent threads. -

Distributed Algorithms with Theoretic Scalability Analysis of Radial and Looped Load flows for Power Distribution Systems

Electric Power Systems Research 65 (2003) 169–177 www.elsevier.com/locate/epsr Distributed algorithms with theoretic scalability analysis of radial and looped load flows for power distribution systems Fangxing Li *, Robert P. Broadwater ECE Department Virginia Tech, Blacksburg, VA 24060, USA Received 15 April 2002; received in revised form 14 December 2002; accepted 16 December 2002 Abstract This paper presents distributed algorithms for both radial and looped load flows for unbalanced, multi-phase power distribution systems. The distributed algorithms are developed from tree-based sequential algorithms. Formulas of scalability for the distributed algorithms are presented. It is shown that computation time dominates communication time in the distributed computing model. This provides benefits to real-time load flow calculations, network reconfigurations, and optimization studies that rely on load flow calculations. Also, test results match the predictions of derived formulas. This shows the formulas can be used to predict the computation time when additional processors are involved. © 2003 Elsevier Science B.V. All rights reserved. Keywords: Distributed computing; Scalability analysis; Radial load flow; Looped load flow; Power distribution systems 1. Introduction Parallel and distributed computing has been applied to many scientific and engineering computations such as weather forecasting and nuclear simulations [1,2]. It also has been applied to power system analysis calculations [3–14]. [...] Also, the method presented in Ref. [10] was tested in radial distribution systems with no more than 528 buses. More recent works [11–14] presented distributed implementations for power flows or power flow based algorithms like optimizations and contingency analysis. These works also targeted power transmission systems. -

Scalability and Performance Management of Internet Applications in the Cloud

Hasso-Plattner-Institut University of Potsdam Internet Technology and Systems Group Scalability and Performance Management of Internet Applications in the Cloud A thesis submitted for the degree of "Doktors der Ingenieurwissenschaften" (Dr.-Ing.) in IT Systems Engineering Faculty of Mathematics and Natural Sciences University of Potsdam By: Wesam Dawoud Supervisor: Prof. Dr. Christoph Meinel Potsdam, Germany, March 2013 This work is licensed under a Creative Commons License: Attribution – Noncommercial – No Derivative Works 3.0 Germany To view a copy of this license visit http://creativecommons.org/licenses/by-nc-nd/3.0/de/ Published online at the Institutional Repository of the University of Potsdam: URL http://opus.kobv.de/ubp/volltexte/2013/6818/ URN urn:nbn:de:kobv:517-opus-68187 http://nbn-resolving.de/urn:nbn:de:kobv:517-opus-68187 To my lovely parents To my lovely wife Safaa To my lovely kids Shatha and Yazan Acknowledgements At Hasso Plattner Institute (HPI), I had the opportunity to meet many wonderful people. It is my pleasure to thank those who supported me to make this thesis possible. First and foremost, I would like to thank my Ph.D. supervisor, Prof. Dr. Christoph Meinel, for his continuous support. In spite of his tight schedule, he always found the time to discuss, guide, and motivate my research ideas. The thanks are extended to Dr. Karin-Irene Eiermann for assisting me even before moving to Germany. I am also grateful to Michaela Schmitz. She managed everything well to make everyone's life easier. I owe thanks to Dr. Nemeth Sharon for helping me to improve my English writing skills. -

The Intro to GPGPU CPU Vs

12/12/11. The Intro to GPGPU. Dr. Chokchai (Box) Leangsuksun, PhD, Louisiana Tech University, Ruston, LA. CPU vs. GPU • CPU – Fast caches – Branching adaptability – High performance • GPU – Multiple ALUs – Fast onboard memory – High throughput on parallel tasks • Executes program on each fragment/vertex • CPUs are great for task parallelism • GPUs are great for data parallelism (Supercomputing 2008 Education Program). CPU vs. GPU – Hardware • More transistors devoted to data processing (CUDA programming guide 3.1). CPU vs. GPU – Computation Power (CUDA programming guide 3.1). CPU vs. GPU – Memory Bandwidth (CUDA programming guide 3.1). What is GPGPU? • General Purpose computation using GPU in applications other than 3D graphics – GPU accelerates critical path of application • Data parallel algorithms leverage GPU attributes – Large data arrays, streaming throughput – Fine-grain SIMD parallelism – Low-latency floating point (FP) computation (© David Kirk/NVIDIA and Wen-mei W. Hwu, 2007, ECE 498AL, University of Illinois, Urbana-Champaign). Why GPGPU? • Large number of cores – 100-1000 cores in a single card • Low cost – less than $100-$1500 • Green computing – Low power consumption – 135 watts/card – 135 W vs 30000 W (300 watts * 100) • 1 card can perform > 100 desktops – $750 vs $50000 ($500 * 100). Two major players. Parallel Computing on a GPU • NVIDIA GPU Computing Architecture – Via a HW device interface – In laptops, desktops, workstations, servers • Tesla T10 1070 from 1-4 TFLOPS • AMD/ATI 5970 x2 3200 cores; ATI 4850 • NVIDIA Tegra is an all-in-one (system-on-a-chip) processor architecture derived from the ARM family • GPU parallelism is better than Moore’s law, more doubling every year • GPGPU is a GPU that allows user to process both graphics and non-graphics applications. -
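The power and cost comparison in the slide excerpt above is simple arithmetic, and a minimal Python sketch makes it explicit. The function name `compare_card_to_desktops` and the dictionary keys are hypothetical; the figures (135 W and $750 per card, 300 W and $500 per desktop, one card standing in for 100 desktops) are the slides' own claims from that period, not measurements.

```python
def compare_card_to_desktops(card_watts, card_cost, desktop_watts, desktop_cost, n_desktops):
    """Compare one GPU card with n_desktops desktops assumed to deliver the same throughput."""
    cluster_watts = desktop_watts * n_desktops  # total power of the desktop group
    cluster_cost = desktop_cost * n_desktops    # total purchase cost of the desktop group
    return {
        "cluster_watts": cluster_watts,
        "cluster_cost": cluster_cost,
        "power_ratio": cluster_watts / card_watts,  # how many times more power the desktops draw
        "cost_ratio": cluster_cost / card_cost,     # how many times more the desktops cost
    }


# Figures quoted on the slide: 135 W and $750 per card, 300 W and $500 per desktop.
result = compare_card_to_desktops(135, 750, 300, 500, 100)
print(result)
```

Under the slide's assumption that one card matches 100 desktops, the desktops draw roughly 222 times the power and cost roughly 67 times as much. The whole comparison rests on that equivalence, which only holds for workloads that are strongly data parallel.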

System-On-A-Chip (SoC) & ARM Architecture

System-on-a-Chip (SoC) & ARM Architecture. EE2222 Computer Interfacing and Microprocessors. Partially based on System-on-Chip Design by Hao Zheng. 2020 EE2222. Overview • A system-on-a-chip (SoC): • a computing system on a single silicon substrate that integrates both hardware and software. • Hardware packages all necessary electronics for a particular application, • which is implemented by SW running on HW. • Aim for low power and low cost. • Also more reliable than multi-component systems. Driven by semiconductor advances. Basic SoC Model. SoC vs Processors (system on a chip vs. processors on a chip) • processor: multiple, simple, heterogeneous vs. few, complex, homogeneous • cache: one level, small vs. 2-3 levels, extensive • memory: embedded, on chip vs. very large, off chip • functionality: special purpose vs. general purpose • interconnect: wide, high bandwidth vs. often through cache • power, cost: both low vs. both high • operation: largely stand-alone vs. need other chips. Embedded Systems • 98% of processors sold annually are used in embedded applications. Embedded Systems: Design Challenges • Power/energy efficient: • mobile & battery powered • Highly reliable: • Extreme environment (e.g. temperature) • Real-time operations: • predictable performance • Highly complex • A modern automobile with 55 electronic control units • Tightly coupled Software & Hardware • Rapid development at low price. EECS222A: SoC Description and Modeling Lecture 1 Design Complexity Challenge Design• Productivity Complexity -

Scalability and Optimization Strategies for GPU Enhanced Neural Networks (GeNN)

Scalability and Optimization Strategies for GPU Enhanced Neural Networks (GeNN) Naresh Balaji1, Esin Yavuz2, Thomas Nowotny2 {T.Nowotny, E.Yavuz}@sussex.ac.uk, [email protected] 1National Institute of Technology, Tiruchirappalli, India 2School of Engineering and Informatics, University of Sussex, Brighton, UK Abstract: Simulation of spiking neural networks has been traditionally done on high-performance supercomputers or large-scale clusters. Utilizing the parallel nature of neural network computation algorithms, GeNN (GPU Enhanced Neural Network) provides a simulation environment that performs on General Purpose NVIDIA GPUs with a code generation based approach. GeNN allows the users to design and simulate neural networks by specifying the populations of neurons at different stages, their synapse connection densities and the model of individual neurons. In this report we describe work on how to scale synaptic weights based on the configuration of the user-defined network to ensure sufficient spiking and subsequent effective learning. We also discuss optimization strategies particular to GPU computing: sparse representation of synapse connections and occupancy based block-size determination. Keywords: Spiking Neural Network, GPGPU, CUDA, code-generation, occupancy, optimization 1. Introduction The computational performance of traditional single-core CPUs has steadily increased since their arrival, primarily due to the combination of increased clock frequency, process technology advancements and compiler optimizations. The clock frequency was pushed higher and higher, from 5 MHz to 3 GHz in the years from 1983 to 2002, until it reached a stall a few years ago [1] when the power consumption and dissipation of the transistors reached peaks that could not be compensated by its cost [2]. -

Comparative Study of Various Systems on Chips Embedded in Mobile Devices

Innovative Systems Design and Engineering www.iiste.org ISSN 2222-1727 (Paper) ISSN 2222-2871 (Online) Vol.4, No.7, 2013 - National Conference on Emerging Trends in Electrical, Instrumentation & Communication Engineering Comparative Study of Various Systems on Chips Embedded in Mobile Devices Deepti Bansal(Assistant Professor) BVCOE, New Delhi Tel N: +919711341624 Email: [email protected] ABSTRACT Systems-on-chips (SoCs) are the latest incarnation of very large scale integration (VLSI) technology. A single integrated circuit can contain over 100 million transistors. Harnessing all this computing power requires designers to move beyond logic design into computer architecture, meet real-time deadlines, ensure low-power operation, and so on. These opportunities and challenges make SoC design an important field of research. So in the paper we will try to focus on the various aspects of SOC and the applications offered by it. Also the different parameters to be checked for functional verification like integration and complexity are described in brief. We will focus mainly on the applications of system on chip in mobile devices and then we will compare various mobile vendors in terms of different parameters like cost, memory, features, weight, and battery life, audio and video applications. A brief discussion on the upcoming technologies in SoC used in smart phones as announced by Intel, Microsoft, Texas etc. is also taken up. Keywords: System on Chip, Core Frame Architecture, Arm Processors, Smartphone. 1. Introduction: What Is SoC? We first need to define system-on-chip (SoC). A SoC is a complex integrated circuit that implements most or all of the functions of a complete electronic system. -

System-On-Chip Design with Virtual Components

FEATURE ARTICLE. Thomas Anderson. System-on-Chip Design with Virtual Components. Here in the Recycling Age, designing for reuse may sound like a great idea. Design reuse for semiconductor projects has evolved from an interesting concept to a requirement. Today’s huge system-on-a-chip (SOC) designs routinely require millions of transistors. Silicon geometry continues to shrink [...] past designs can a huge chip be completed within a reasonable time. This solution usually entails reusing designs from previous generations of products and often leverages design work done by other groups in the same company. Various forms of intercompany cross licensing and technology sharing can provide access to design technology that may be reused in new ways. Many large companies have established central organizations to promote design reuse and sharing, and to look for external IP sources. One challenge faced by IP acquisition teams is that many designs aren’t well suited for reuse. Designing with reuse in mind requires extra time and effort, and often more logic as well, requirements likely to be at odds with the time-to-market goals of a product design team. Therefore, a merchant semiconductor IP industry has arisen to provide designs that were developed specifically for reuse in a wide range of applications. These designs are backed by documentation and support similar to that provided by a semiconductor supplier. The terms “virtual component” and “core” commonly denote reusable semiconductor IP that is offered for license as a product. The latter term is promoted extensively by the Virtual Socket Interface (VSI) Alliance, a joint -

Lecture Notes

Lecture #4-5: Computer Hardware (Overview and CPUs) CS106E Spring 2018, Young In these lectures, we begin our three-lecture exploration of Computer Hardware. We start by looking at the different types of computer components and how they interact during basic computer operations. Next, we focus specifically on the CPU (Central Processing Unit). We take a look at the Machine Language of the CPU and discover it’s really quite primitive. We explore how Compilers and Interpreters allow us to go from the High-Level Languages we are used to programming in to the Low-Level machine language actually used by the CPU. Most modern CPUs are multicore. We take a look at when multicore provides big advantages and when it doesn’t. We also take a short look at Graphics Processing Units (GPUs) and what they might be used for. We end by taking a look at Reduced Instruction Set Computing (RISC) and Complex Instruction Set Computing (CISC). Stanford President John Hennessy won the Turing Award (Computer Science’s equivalent of the Nobel Prize) for his work on RISC computing. Hardware and Software: Hardware refers to the physical components of a computer. Software refers to the programs or instructions that run on the physical computer. - We can entirely change the software on a computer without changing the hardware, and it will transform how the computer works. I can take an Apple MacBook, for example, remove the Apple software and install Microsoft Windows, and I now have a Windows computer. - In the next two lectures we will focus entirely on Hardware. -

EE Concentration: System-On-A-Chip (SoC)

EE Concentration: System-on-a-Chip (SoC) Requirements: Complete ESE350, ESE370, CIS371, ESE532 Requirement Flow: Impact: The chip at the heart of your smartphone, tablet, or mp3 player (including the Apple A11, A12) is an SoC. The chips that run almost all of your gadgets today are SoCs. These are the current culmination of miniaturization and part count reduction that allows such systems to be built inexpensively and from small part counts. These chips democratize innovation by providing a platform for the deployment of novel ideas without requiring hundreds of millions of dollars to build new custom ICs. Description: Modern computational and control chips contain billions of transistors and run software that has millions of lines of code. They integrate complete systems including multiple, potentially heterogeneous, processing elements, sophisticated memory hierarchies, communications, and rich interfaces for inputs and outputs including sensing and actuations. To design these systems, engineers must understand IC technology, digital circuits, processor and accelerator architectures, networking, and composition and interfacing, and be able to manage hardware/software trade-offs. This concentration prepares students both to participate in the design of these SoC architectures and to use SoC architectures as implementation vehicles for novel embedded computing tasks. Sample industries and companies: ● Integrated Circuit Design: ARM, IBM, Intel, Nvidia, Samsung, Qualcomm, Xilinx ● Consumer Electronics: Apple, Samsung, NEST, Hewlett Packard ● Systems: Amazon, CISCO, Google, Facebook, Microsoft ● Automotive and Aerospace: Boeing, Ford, Space-X, Tesla, Waymo ● Your startup Sample Job Titles: ● Hardware Engineer, Chip Designer, Chip Architect, Architect, Verification Engineer, Software Engineering, Embedded Software Engineer, Member of Technical Staff, VP Engineering, CTO Graduate research in: computer systems and architecture. -

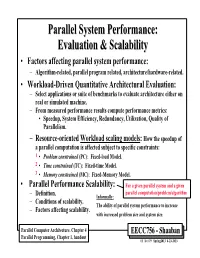

Parallel System Performance: Evaluation & Scalability

Parallel System Performance: Evaluation & Scalability • Factors affecting parallel system performance: – Algorithm-related, parallel program related, architecture/hardware-related. • Workload-Driven Quantitative Architectural Evaluation: – Select applications or suite of benchmarks to evaluate architecture either on real or simulated machine. – From measured performance results compute performance metrics: • Speedup, System Efficiency, Redundancy, Utilization, Quality of Parallelism. – Resource-oriented Workload scaling models: How the speedup of a parallel computation is affected subject to specific constraints: 1. Problem constrained (PC): Fixed-load Model. 2. Time constrained (TC): Fixed-time Model. 3. Memory constrained (MC): Fixed-Memory Model. • Parallel Performance Scalability: For a given parallel system and a given parallel computation/problem/algorithm – Definition. Informally: the ability of parallel system performance to increase with increased problem size and system size. – Conditions of scalability. – Factors affecting scalability. (Parallel Computer Architecture, Chapter 4; Parallel Programming, Chapter 1, handout #1.) EECC756 - Shaaban, lec # 9, Spring 2013, 4-23-2013. Parallel Program Performance • Parallel processing goal is to maximize speedup (fixed problem size): $\text{Speedup} = \frac{\text{Time}(1)}{\text{Time}(p)} < \frac{\text{Sequential Work}}{\max(\text{Work} + \text{Synch Wait Time} + \text{Comm Cost} + \text{Extra Work})}$, where the max is taken over all processors and the terms added to Work are the parallelizing overheads. • By: 1 – Balancing computations/overheads (workload) on processors -
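The fixed-problem-size speedup bound quoted in the excerpt above can be evaluated directly. The following is a minimal Python sketch, not code from the lecture notes: the function name `speedup_bound` and the per-processor cost figures are made up for illustration, and all costs are assumed to be in the same time unit as the sequential work.

```python
def speedup_bound(sequential_work, per_processor_costs):
    """Upper bound on speedup for a fixed problem size.

    per_processor_costs holds one (work, synch_wait, comm_cost, extra_work)
    tuple per processor. The slowest processor determines the parallel time.
    """
    slowest = max(sum(costs) for costs in per_processor_costs)
    return sequential_work / slowest


# Hypothetical example: 100 units of sequential work split evenly over 4 processors,
# each paying different synchronization, communication, and extra-work overheads.
costs = [(25, 2, 3, 0), (25, 1, 3, 1), (25, 4, 3, 0), (25, 0, 3, 2)]
bound = speedup_bound(100, costs)
efficiency = bound / len(costs)
print(bound, efficiency)
```

With these illustrative numbers the slowest processor takes 32 units, so the bound is 3.125 rather than the ideal 4. This is why the notes list balancing the workload and overheads across processors as the first way to raise speedup.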

Multiprocessing and Scalability

Multiprocessing and Scalability. A.R. Hurson, Computer Science and Engineering, The Pennsylvania State University. Fall 2004. Large-scale multiprocessor systems have long held the promise of substantially higher performance than traditional uni-processor systems. However, due to a number of difficult problems, the potential of these machines has been difficult to realize. This is because of the: Advances in technology ─ Rate of increase in performance of uni-processor. Complexity of multiprocessor system design ─ This drastically affected the cost and implementation cycle. Programmability of multiprocessor system ─ Design complexity of parallel algorithms and parallel programs. Programming a parallel machine is more difficult than a sequential one. In addition, it takes much more effort to port an existing sequential program to a parallel machine than to a newly developed sequential machine. Lack of good parallel programming environments and standard parallel languages has further aggravated this issue. As a result, absolute performance of many early concurrent machines was not significantly better than available or soon-to-be available uni-processors. Recently, there has been an increased interest in large-scale or massively parallel processing systems. This interest stems from many factors, including: Advances in integrated technology. Very