Building a State-of-the-Art Enterprise Supercomputer

Total Pages: 16

File Type: pdf, Size: 1020 KB


Recommended publications


Analysis of Server-Smartphone Application Communication Patterns

Aalto University School of Science, Degree Programme in Computer Science and Engineering. Péter Somogyi: Analysis of server-smartphone application communication patterns. Master’s Thesis, Budapest, June 15, 2014. Supervisors: Professor Jukka Nurminen, Aalto University; Professor Tamás Kozsik, Eötvös Loránd University. Instructor: Máté Szalay-Bekő, M.Sc. Ph.D. Number of pages: 83. Language: English. Professorship: Data Communication Software. Code: T-110. Abstract: The spread of smartphone devices, Internet of Things technologies and the popularity of web services require real-time and always-on applications. The aim of this thesis is to identify a suitable communication technology for server and smartphone communication which fulfills the main requirements for transferring real-time data to handheld devices. For the analysis I selected three popular communication technologies that can be used on mobile devices as well as from commonly used browsers. These are client polling, long polling and HTML5 WebSocket. For the assessment I developed an Android application that receives real-time sensor data from a WildFly application server using the aforementioned technologies. Industry-specific requirements were selected in order to verify the usability of these communication forms. The first one covers the message size, which is relevant because most smartphone users have a limited data plan.

IBM BladeCenter S Types 7779 and 8886 12-Disk Storage Module

IBM BladeCenter S Types 7779 and 8886 12-Disk Storage Module. Note: Before using this information and the product it supports, read the general information in Notices; and read the IBM Safety Information and the IBM Systems Environmental Notices and User Guide on the IBM Documentation CD. First Edition (July 2013) © Copyright IBM Corporation 2013. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents: Chapter 1. BladeCenter S Types 7779 and 8886 disk storage module (Disk storage modules); Chapter 2. Installation guidelines (System reliability guidelines; Handling static-sensitive devices); Chapter 3. Installing a disk storage module; Chapter 4. Removing a disk storage module; Chapter 5. Replacing 6-Disk Storage; Electronic emission notices (Federal Communications Commission (FCC) statement; Industry Canada Class A emission compliance statement; Avis de conformité à la réglementation d'Industrie Canada; Australia and New Zealand Class A statement; European Union EMC Directive conformance statement; Germany Class A statement; Japan VCCI Class A statement; Japan Electronics and Information Technology Industries Association (JEITA) statement)

HPE D2220sb Storage Blade Overview

QuickSpecs: HPE D2220sb Storage Blade. Overview: Do you need a direct attached or shared storage solution within your BladeSystem enclosure? Direct attached storage: The D2220sb Storage Blade delivers direct attached storage for c-Class Gen9 blade servers, with support for up to twelve hot plug small form factor (SFF) SAS or SATA hard disk drives or SAS/SATA SSDs. The enclosure backplane provides a PCI Express connection to the adjacent c-Class server blade and enables high performance storage access without any additional cables. The D2220sb Storage Blade features an onboard Smart Array P420i controller with 2GB flash-backed write cache, for increased performance and data protection. Up to eight D2220sb storage devices can be supported in a single BladeSystem c7000 enclosure. Two ways to create shared storage with the D2220sb: Use HPE StoreVirtual VSA software to turn the D2220sb into an iSCSI SAN for use by all servers in the enclosure and any server on the network. HPE StoreVirtual VSA software is installed in a virtual machine on a VMware ESX host server adjacent to the D2220sb. HPE StoreVirtual VSA turns the D2220sb into a scalable and robust iSCSI SAN, featuring storage clustering for scalability, Network RAID for storage failover, thin provisioning, snapshots, remote replication, and cloning. Expand capacity within the same enclosure or to other BladeSystem enclosures by adding D2220sb Storage Blades and HPE VSA licenses. A cost-effective bundle of the D2220sb Storage Blade and an HPE StoreVirtual VSA license makes purchasing convenient. If storage needs increase, add HPE P4300 or P4500 systems externally and manage everything via a single pane of glass.

Distributed Algorithms with Theoretic Scalability Analysis of Radial and Looped Load Flows for Power Distribution Systems

Electric Power Systems Research 65 (2003) 169–177, www.elsevier.com/locate/epsr. Distributed algorithms with theoretic scalability analysis of radial and looped load flows for power distribution systems. Fangxing Li *, Robert P. Broadwater, ECE Department, Virginia Tech, Blacksburg, VA 24060, USA. Received 15 April 2002; received in revised form 14 December 2002; accepted 16 December 2002. Abstract: This paper presents distributed algorithms for both radial and looped load flows for unbalanced, multi-phase power distribution systems. The distributed algorithms are developed from tree-based sequential algorithms. Formulas of scalability for the distributed algorithms are presented. It is shown that computation time dominates communication time in the distributed computing model. This provides benefits to real-time load flow calculations, network reconfigurations, and optimization studies that rely on load flow calculations. Also, test results match the predictions of derived formulas. This shows the formulas can be used to predict the computation time when additional processors are involved. © 2003 Elsevier Science B.V. All rights reserved. Keywords: Distributed computing; Scalability analysis; Radial load flow; Looped load flow; Power distribution systems. 1. Introduction: Parallel and distributed computing has been applied to many scientific and engineering computations such as weather forecasting and nuclear simulations [1,2]. It also has been applied to power system analysis calculations [3–14]. Also, the method presented in Ref. [10] was tested in radial distribution systems with no more than 528 buses. More recent works [11–14] presented distributed implementations for power flows or power flow based algorithms like optimizations and contingency analysis. These works also targeted power transmission systems.

Scalability and Performance Management of Internet Applications in the Cloud

Hasso-Plattner-Institut, University of Potsdam, Internet Technology and Systems Group. Scalability and Performance Management of Internet Applications in the Cloud. A thesis submitted for the degree of "Doktors der Ingenieurwissenschaften" (Dr.-Ing.) in IT Systems Engineering, Faculty of Mathematics and Natural Sciences, University of Potsdam. By: Wesam Dawoud. Supervisor: Prof. Dr. Christoph Meinel. Potsdam, Germany, March 2013. This work is licensed under a Creative Commons License: Attribution – Noncommercial – No Derivative Works 3.0 Germany. To view a copy of this license visit http://creativecommons.org/licenses/by-nc-nd/3.0/de/ Published online at the Institutional Repository of the University of Potsdam: URL http://opus.kobv.de/ubp/volltexte/2013/6818/ URN urn:nbn:de:kobv:517-opus-68187 http://nbn-resolving.de/urn:nbn:de:kobv:517-opus-68187 To my lovely parents. To my lovely wife Safaa. To my lovely kids Shatha and Yazan. Acknowledgements: At Hasso Plattner Institute (HPI), I had the opportunity to meet many wonderful people. It is my pleasure to thank those who supported me to make this thesis possible. First and foremost, I would like to thank my Ph.D. supervisor, Prof. Dr. Christoph Meinel, for his continuous support. In spite of his tight schedule, he always found the time to discuss, guide, and motivate my research ideas. The thanks are extended to Dr. Karin-Irene Eiermann for assisting me even before moving to Germany. I am also grateful to Michaela Schmitz. She managed everything well to make everyone's life easier. I owe thanks to Dr. Nemeth Sharon for helping me to improve my English writing skills.

Implementation of Embedded Web Server Based on ARM11 and Linux Using Raspberry Pi

International Journal of Recent Technology and Engineering (IJRTE), ISSN: 2277-3878, Volume-3 Issue-3, July 2014. Implementation of Embedded Web Server Based on ARM11 and Linux using Raspberry Pi. Girish Birajdar. Abstract: Because ARM processor based web servers do not use a computer directly, they help considerably in reducing cost. In this project our aim is to implement an Embedded Web Server (EWS) based on an ARM11 processor and the Linux operating system using Raspberry Pi. It will provide a powerful networking solution with a wide range of application areas over the internet. We will run a web server on an embedded system having limited resources to serve an embedded web page to a web browser. Index Terms: Embedded Web Server, Raspberry Pi, ARM, Ethernet etc. I. INTRODUCTION: With the evolution of the World-Wide Web (WWW), its application areas are increasing day by day. Web access functionality can be embedded in a low cost device which. III. HARDWARE USED: We will use different hardware to implement this embedded web server, which is described in this section. 1. Raspberry Pi: The Raspberry Pi is a low cost, ARM based, palm-size computer. The Raspberry Pi has an ARM1176JZF-S microprocessor, which is a member of the ARM11 family and has the ARMv6 architecture. It is built around a Broadcom BCM2835 processor. The ARM processor operates at 700 MHz and it has 512 MB RAM. It draws 1 A at 5 V, so the power consumption of the Raspberry Pi is low. It has many peripherals such as a USB port, 10/100 Ethernet, GPIO, HDMI and composite video outputs, and an SD card slot. The SD card slot is used to connect the SD card which holds the Raspberry Pi Linux operating system.

Data Management for Portable Media Players

Data Management for Portable Media Players. Table of Contents: Introduction; The New Role of Database; Design Considerations; Hardware Limitations; Value of a Lightweight Relational Database; Why Choose a Database; An ITTIA Solution: ITTIA DB; Tailoring ITTIA DB for a Specific Device; Tables and Indexes; Transactions and Recovery; Simplifying the Software Development Process; Flexible Deployment; Working with ITTIA Toward a Successful Deployment; Conclusion. Copyright © 2009 ITTIA, L.L.C. Introduction: Portable media players have evolved significantly in the decade that has

Scalability and Optimization Strategies for GPU Enhanced Neural Networks (GeNN)

Scalability and Optimization Strategies for GPU Enhanced Neural Networks (GeNN). Naresh Balaji (1), Esin Yavuz (2), Thomas Nowotny (2). {T.Nowotny, E.Yavuz}@sussex.ac.uk, [email protected]. (1) National Institute of Technology, Tiruchirappalli, India. (2) School of Engineering and Informatics, University of Sussex, Brighton, UK. Abstract: Simulation of spiking neural networks has been traditionally done on high-performance supercomputers or large-scale clusters. Utilizing the parallel nature of neural network computation algorithms, GeNN (GPU Enhanced Neural Network) provides a simulation environment that performs on General Purpose NVIDIA GPUs with a code generation based approach. GeNN allows the users to design and simulate neural networks by specifying the populations of neurons at different stages, their synapse connection densities and the model of individual neurons. In this report we describe work on how to scale synaptic weights based on the configuration of the user-defined network to ensure sufficient spiking and subsequent effective learning. We also discuss optimization strategies particular to GPU computing: sparse representation of synapse connections and occupancy based block-size determination. Keywords: Spiking Neural Network, GPGPU, CUDA, code-generation, occupancy, optimization. 1. Introduction: The computational performance of traditional single-core CPUs has steadily increased since its arrival, primarily due to the combination of increased clock frequency, process technology advancements and compiler optimizations. The clock frequency was pushed higher and higher, from 5 MHz to 3 GHz in the years from 1983 to 2002, until it reached a stall a few years ago [1] when the power consumption and dissipation of the transistors reached peaks that could not be compensated by its cost [2].

Colloquium Report

FUTURE INSTITUTE OF ENGG. & TECHNOLOGY, BAREILLY. COLLOQUIUM REPORT ON Blade Servers, MCA-611, of MASTER OF COMPUTER APPLICATION. SUBMITTED BY SUMIT KUMAR, 1047614044, 2012-2013. Under the supervision of Ms. Neha Agrawal, Department of Computer Applications, Future Institute of Engineering and Technology, Bareilly, NH-24. CERTIFICATE: This is to certify that the colloquium entitled has been carried out by Mr./Ms. of M.C.A Semester-VI, Approval No., as a partial fulfillment of the course, for the Academic Year 2012-2013. COLLOQUIUM OF MCA. Academic Year. Approved By. Internal Guide. Name and Sign (Examiners). ACKNOWLEDGEMENT: One must be grateful for the co-operation and assistance given in this self-oriented, individualistic world, where it is very difficult to obtain from each and everyone. I cannot, in full measure, reciprocate the kindness shown and the contributions made by various persons in this endeavor, though I shall always remember them with high gratitude. I must, however, specially acknowledge my indebtedness to Mr. Abhishek Saxena (Asst. Director and H.O.D., FIET, Bareilly), who provided me the opportunity to do my research at FIET Bareilly. I am also very thankful to Ms. Neha Agarwal (Internal Project Advisor appointed by the Department), who gave me continuous guidance and encouragement during my training. I shall always be very much thankful to. I extend my heartiest gratitude for providing me the opportunity to be a part of an esteemed research like Blade Servers. Abstract: Blade servers are self-contained computer servers, designed for high density. Slim, hot swappable blade servers fit in a single chassis like books in a bookshelf, and each is an independent server, with its own processors, memory, storage, network controllers, operating system and applications.

IBM BladeCenter HS22

IBM BladeCenter HS22: Versatile, easy-to-use blade optimized for performance, energy and cooling. Product Guide, August 2010. Contents: Product Overview; Selling Features; Key Features; Key Options; HS22 Images; HS22 Specifications; The Bottom Line; Server Comparison. Product Overview: No-compromise, truly balanced, 2-socket blade server for infrastructure, virtualization and enterprise business applications. Suggested uses: Front-end and mid-tier applications requiring high performance, enterprise-class availability and extreme flexibility and power efficiency. Today’s data center environment is tougher than ever. You’re looking to reduce IT cost, complexity, space requirements, energy consumption and heat output, while increasing flexibility, utilization and manageability. Incorporating IBM X-Architecture™ features, the IBM® BladeCenter® HS22 blade server, combined with the various BladeCenter chassis, can help you accomplish all of these goals. Reducing an entire server into as little as .5U of rack space (i.e., up to 14 servers in 7U) does not mean trading away features and capabilities for smaller size. Each HS22 blade server offers features comparable to many 1U rack-optimized full-featured servers: The HS22 supports up to two of the latest high-performance or low-voltage 6-core, 4-core, and 2-core Intel® Xeon® 5500 series and 5600 series processors. The Xeon processors are designed with up to 12MB of shared cache and leading-edge memory performance (up to 1333MHz, depending on processor model) to help provide the computing power you require to match your business needs and growth. The HS22 supports up to 192GB of registered double data rate III (DDR3) ECC (Error Checking and Correcting) memory in 12 DIMM slots, with optional Chipkill™ protection, for high performance and reliability.

Organizations Turn to Blade Servers to Boost Power, Save Space and Cut Costs

Blade Servers Cut Paths to Progress. Organizations turn to blade servers to boost power, save space and cut costs. In government agencies and educational institutions, few challenges loom as large as managing information-technology resources. Organizations that lack adequate computing power often find that it is impossible to manage mountains of information. Yet, those who throw technology at every problem often wind up creating an unmanageable and expensive IT environment, particularly when numerous applications and servers enter the picture. These days, things aren’t getting any simpler, especially as the demand for information technology grows and more sophisticated data requirements take hold. “Organizations are looking for ways to optimize their computing environments without spending a great deal more money,” observes Anil Vasudeva, president of IMEX Research, a San Jose, Calif.-based IT consulting and market research firm. “They want to leverage industry-standard, low-cost servers, yet be able to maintain high availability while achieving high performance. Clustering and virtualization technologies achieve these goals by using resources more efficiently and effectively.” As a result, many organizations are turning to blade servers. These units, which consist of multiple server cards enclosed in a specialized chassis, offer a more efficient architecture for managing multiple applications, databases and storage devices. Blades, consisting of several servers within a single chassis, are rapidly moving into the mainstream. In the process, they are replacing more expensive and complicated mid-range and mainframe computers. IMEX Research reports that sales of blade servers grew from near zero in 2001 to about $2.2 billion in 2005. At present, about 7 percent of servers used are blades, but the figure is expected to hit 32 percent by 2009.

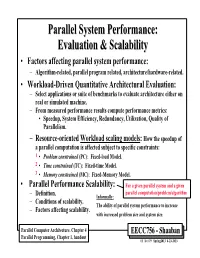

Parallel System Performance: Evaluation & Scalability

Parallel System Performance: Evaluation & Scalability
• Factors affecting parallel system performance:
– Algorithm-related, parallel program related, architecture/hardware-related.
• Workload-Driven Quantitative Architectural Evaluation:
– Select applications or suite of benchmarks to evaluate architecture either on real or simulated machine.
– From measured performance results compute performance metrics:
• Speedup, System Efficiency, Redundancy, Utilization, Quality of Parallelism.
– Resource-oriented workload scaling models: how the speedup of a parallel computation is affected subject to specific constraints:
1 • Problem constrained (PC): Fixed-load Model.
2 • Time constrained (TC): Fixed-time Model.
3 • Memory constrained (MC): Fixed-Memory Model.
• Parallel Performance Scalability: for a given parallel system and a given parallel computation/problem/algorithm
– Definition.
– Conditions of scalability.
– Factors affecting scalability.
Informally: the ability of parallel system performance to increase with increased problem size and system size.
Parallel Computer Architecture, Chapter 4; Parallel Programming, Chapter 1, handout. EECC756 - Shaaban, lec # 9, Spring 2013, 4-23-2013.
Parallel Program Performance
• Parallel processing goal is to maximize speedup (fixed problem size speedup):
Speedup = Time(1) / Time(p) < Sequential Work / Max(Work + Synch Wait Time + Comm Cost + Extra Work)
where the Max is taken over any processor, and Synch Wait Time, Comm Cost and Extra Work are the parallelizing overheads.
• By:
1 – Balancing computations/overheads (workload) on processors