Jitter Detection and Image Restoration Based on Generative Adversarial Networks in Satellite Images



Recommended publications


China's Space Program

China’s Space Program An Introduction China’s Space Program ● Motivations ● Organization ● Programs ○ Satellites ○ Manned Space flight ○ Lunar Exploration Program ○ International Relations ● Summary China’s Space Program Motivations Stated Purpose ● Explore outer space and to enhance understanding of the Earth and the cosmos ● Utilize outer space for peaceful purposes, promote human civilization and social progress, and to benefit the whole of mankind ● Meet the demands of economic development, scientific and technological development, national security and social progress ● Improve the scientific and cultural knowledge of the Chinese people ● Protect China's national rights and interests ● Build up China’s national comprehensive strength National Space Motivations • Preservation of its political system is overriding goal • The CCP prioritizes investments into space technology ○ Establish PRC as an equal among world powers ○ Space for international competition and cooperation ○ Manned spaceflight ● Foster national pride ● Enhance the domestic and international legitimacy of the CCP. ○ Space technology is metric of political legitimacy, national power, and status globally China’s Space Program Organization The China National Space Administration (CNSA) ● The China National Space Administration (CNSA, GuóJiā HángTiān Jú,) ○ National space agency of the People's Republic of China ○ Responsible for the national space program. ■ Planning and development of space activities. The China National Space Administration ● CNSA and China Aerospace -

Orbital Debris Program Office Figure 1

National Aeronautics and Space Administration Orbital Debris Quarterly News Volume 13, Issue 1 January 2009 Inside... New Debris Seen from Decommissioned Fengyun-1C Debris: Two Years Later 2 Satellite with Nuclear Power Source The ESA’s ATV-1 Reentry Event 3 A 21-year-old satellite containing a dormant According to Russian reports, the nuclear reactors nuclear reactor was the source of an unexpected on Cosmos 1818 and Cosmos 1867 functioned for Two New Microsatellite debris cloud in early July 2008. Launched by the approximately 5 and 11 months, respectively. For Impact Tests 4 former Soviet Union in February 1987, Cosmos the next two decades, the two inactive spacecraft 1818 (International Designator 1987-011A, circled the Earth without significant incident. Review of Different U.S. Satellite Number 17369) was the first of two Following the fragmentation event on or about Solar Cycle 24 vehicles designed to test a new, more advanced 4 July 2008, the U.S. Space Surveillance Network Predictions 7 nuclear power supply in low Earth orbit. Dozens was able to produce orbital data on 30 small debris of small particles were released during the still- (Figure 2). The majority of these debris were ejected Don Kessler Wins unexplained debris generation event. in a posigrade direction with velocities of less Space Safety Pioneer Cosmos 1818 and its sister spacecraft, than 15 meters per second, suggesting a relatively Award 8 Cosmos 1867 (Figure 1), carried a thermionic low energy event. From radar detections, a larger nuclear power supply, in contrast to the simpler, number of very small debris appear to have also Abstracts from the thermoelectric nuclear device which provided been released, but routine tracking of these debris NASA OD Program energy to the well-known RORSATs (Radar Ocean has proven difficult. -

25 April 2021

25 APRIL 2021 THE FINAL FRONTIER FLASH Developments & Analysis of the Space Domain In This Issue China Launches Shiyan-6 China to Build Ningbo Commercial Spaceport Russia Counterspace Lasers Mission Extension Vehicle-2 (MEV-2) Docking Upcoming China Space Station Missions Airbus to Lead On-Orbit Manufacturing Study 1411 Frontier, Suite 1A Spring Branch, TX 78070 isruniversity.com integrityisr.com #WeKnowISR China Launches Shiyan 6 (03) Shiyan 6 (03) Launch from Taiyuan 8 April 2021: China launched the third in a series of experimental Shiyan 6 satellites April 8 aboard a Long March 4B rocket. Not much is known about the satellite other than it will test new space technologies, including a new super-black coating to absorb stray light and improve the sensitivity of on-board optics. - The Long March 4B rocket carried the Shiyan 6 spacecraft into a polar orbit about 620 miles (1,000 km) above Earth, with an inclination of 99.5 degrees to the equator. - This is the third in a series of Shiyan 6-type satellites. The first two Shiyan 6 satellites launched on Long March 2D rockets from the Jiuquan space center in northwestern China in November 2018 and July 2020. The first two Shiyan 6 satellites were deployed at lower altitudes. - Shiyan means “experiment” in Chinese. [Figure: Shiyan 6 (01, 02 & 03) Orbits] - One of the technologies to be tested on the Shiyan 6 spacecraft is a new super-black coating designed to prevent stray light from disrupting optical cameras. -

China's Evolving Reconnaissance- Strike Capabilities

DRAFT China’s Evolving Reconnaissance- Strike Capabilities Implications for the U.S.-Japan Alliance Ian Easton February 2014 |China’s Evolving Reconnaissance-Strike Capabilities | Ian Easton is a Research Fellow at the Project 2049 Institute. He also recently served as a visiting fellow at the Japan Institute of International Affairs (JIIA) in Tokyo. Before his current fellowship at the Project 2049 Institute, he spent some two years at the Center for Naval Analyses (CNA) as a China analyst. Prior to that, he consulted for a Taiwanese software company, the Defense News Asia Bureau Chief, and the Foundation on Asia-Pacific Peace Studies. Mr. Easton holds an M.A. in China studies from National Chengchi University in Taipei, a B.A. in international studies from the University of Illinois at Urbana-Champaign, and a certification in advanced Mandarin Chinese. He received his formal language training at National Taiwan Normal University’s Mandarin Training Center in Taipei, and Fudan University in Shanghai. *** The author would like to acknowledge and thank JIIA for its generous support of this project. He is especially thankful for the guidance provided by Tetsuo Kotani, and in particular for his leadership during three research trips to Yokosuka, Ishigaki and Okinawa. In addition, the author would like to thank Toshiro Iijima, Nobuyasu Abe, Seiichiro Takagi, Kyoichi Wakayama, Yasuyuki Ishida, Asuka Mastsumoto, and Alan Vick for their comments and suggestions, which served to greatly improve this paper’s analysis and conclusions. Finally, the author is indebted to the men and women of the U.S. State Department, the U.S. -

Chinese Intelligence, Surveillance, and Reconnaissance Systems Lt Col Thomas R

DIGITAL-ONLY COMMENTARY Chinese Intelligence, Surveillance, and Reconnaissance Systems LT COL THOMAS R. MCCABE, USAFR, RETIRED The best weapons in the world are useless unless aimed accurately, which requires a sophisticated intelligence, surveillance, and reconnaissance (ISR) system to detect and track targets, preferably in as close to real time as possible. Even more important, at the strategic level, the People’s Republic of China (PRC) views war between modern states as a conflict between systems of systems,1 which means weapons and targeting require an accurate and comprehensive view of those enemy systems to target them. China has been building a wide variety of ISR systems to provide its forces with such capabilities, including systems that we must expect will be available for military use even if nominally civilian. (China has said its policy of military-civil fusion will include the outer space and maritime domains; so, we must assume that all the surveillance resources PRC civilian agencies have will be integrated into crisis/wartime military ISR.2) These systems include the following: Satellites. China has developed and deployed constellations of dual-use and military satellite reconnaissance systems, especially the Yaogan (“China remote-sensing satellite”) systems, with both electro-optical imagery reconnaissance satellites and synthetic aperture radar satellites.3 Many of the Yaogan satellites are also reported to be electronic intelligence satellites,4 intended to track and locate foreign warships by their -

Chang'e Flying to the Moon

Issue 7 January 2013 All about the Chinese Space Programme GO TAIKONAUTS! Editor’s Note COVER STORY If you are a fan of the Chinese space programme, you must have heard about Brian Harvey, who is the first Western writer to publish a book on the Chinese space programme. We are very happy that Mr. Harvey contributed an article to Go Taikonauts! The article about Chinese ... page 2 Quarterly Report October - December 2012 Launch Events China made six space launches in the last three months of 2012, setting a new annual launch record of 19 and overtaking U.S. in number of successful annual space launches for the first time. In 2011, China also ... page 3 Deep Space Adventure of Chang’e 2 From A Backup Lunar Orbiter to An Asteroid Probe Observation Just before Chang’e 1 (CE-1)’s successful mission to the Moon was completed, Echo of the Curiosity in China China announced that they would send the second lunar probe Chang’e 2 (CE-2) The 6 August 2012 was a special day to an to the Moon in 2010. No one at that time could anticipate the surprises that CE-2 American-Chinese girl. She is Clara Ma, would bring a few years later since it was just a backup ... page 8 a 15-year-old middle school student from Lenexa, Kansas. She waited for this day for more than three years. In May 2009, History Ma won a NASA essay contest for naming the Mars Science Laboratory, the most Chang’e Flying to the Moon complicated machine .. -

China Dream, Space Dream: China's Progress in Space Technologies and Implications for the United States

China Dream, Space Dream 中国梦,航天梦 China’s Progress in Space Technologies and Implications for the United States A report prepared for the U.S.-China Economic and Security Review Commission Kevin Pollpeter Eric Anderson Jordan Wilson Fan Yang Acknowledgements: The authors would like to thank Dr. Patrick Besha and Dr. Scott Pace for reviewing a previous draft of this report. They would also like to thank Lynne Bush and Bret Silvis for their master editing skills. Of course, any errors or omissions are the fault of authors. Disclaimer: This research report was prepared at the request of the Commission to support its deliberations. Posting of the report to the Commission's website is intended to promote greater public understanding of the issues addressed by the Commission in its ongoing assessment of U.S.-China economic relations and their implications for U.S. security, as mandated by Public Law 106-398 and Public Law 108-7. However, it does not necessarily imply an endorsement by the Commission or any individual Commissioner of the views or conclusions expressed in this commissioned research report. CONTENTS Acronyms; Executive Summary; Introduction -

Vayu Issue IV July Aug 2017

IV/2017 Aerospace & Defence Review The Dragon’s Claws India-Israel Relationship China’s Military Modernisation Israel’s Defence Industry Paris Air Show 2017 The Baltic Connection boeing.co.in TOGETHER. BUILDING THE FUTURE. Boeing is proud of its longstanding partnership with India. A partnership India can depend upon to meet its developing requirements, from surveillance, strike and mobility platforms to C4ISR, unmanned systems and support services. The most advanced systems and technologies providing the greatest value for India today and tomorrow. IV/2017 Aerospace & Defence Review A New Era for Sameer Joshi reviews status of the including Bombardier and Embraer, 38 PLAAF restructuring and reforms, even as ATR notched steady orders. -

ESPI Insights Space Sector Watch

ESPI Insights Space Sector Watch Issue 13 February 2021 THIS MONTH IN THE SPACE SECTOR… SPACE INSURERS LOOK FOR PROFITABILITY AFTER THREE YEARS OF LOSS. POLICY & PROGRAMMES: Mars missions’ arrival bring major successes for space exploration; European Commission’s Action Plan on synergies between civil, defence and space industries; UK and Australia sign agreement to increase bilateral cooperation in space sector; Spain publishes new Defence Technology and Innovation Strategy; Thales Group selected by French Armed Forces for the delivery of Syracuse IV ground stations; In other news. INDUSTRY & INNOVATION: Telesat awards contract to Thales Alenia Space for delivery of broadband constellation; European New Space companies ask European Commission to update bidding procedures; The European Court of Justice suspends Galileo second generation contract -

China's Military Role in Space

China’s Military Role in Space Dean Cheng As the United States tries to square its commitments in Asia with declining budgetary resources, it is essential American decision makers tread carefully with regard to its space capabilities. These global assets are the backbone that allows the US military to fight in the manner to which it is accustomed. Consequently, in the event of a conflict involving the People’s Republic of China (PRC), they are likely to be a primary target. Over the past two decades, the PRC has paid careful attention to how other nations, but especially the United States, fight their wars. Space has consistently been part of the People’s Liberation Army’s (PLA) thinking about future conflict. At the same time, the PRC has grown from a developing country to the second largest economy in the world, with sufficient resources to create its own substantial space presence. Unlike previous conflicts in the Middle East, the Balkans, and Central Asia, if the United States engages in a conflict in the western Pacific, it will be confronted by a nation with a comprehensive set of space capabilities to counter America’s own. This article reviews the evolution of China’s military thinking and the changed role of space within that context. It briefly examines China’s space capabilities and development before discussing its concepts for military space operations and concludes with future Chinese space operations. Evolution of Chinese Thinking about Military Space While China’s space program dates from the 1956 founding of the Fifth Academy of the Ministry of Defense, little public information is available on PLA thinking about space in the early years. -

06 Yuan-Chou Jing.Indd

The Korean Journal of Defense Analysis Vol. 31, No. 1, March 2019, 99-120 The Study of China’s Military Strategy and Satellite Development: Moving Toward “Winning the Informationized War Globally”? Yuan-Chou Jing* National Defense University, Taoyuan City, Taiwan China’s military strategy has evolved across different leaders’ eras. Even though China’s military strategic guideline always stays on the track of “active defense,” the essence has been shifting from tradition to informationization. Since satellites are able to provide space information support so as to fulfill informationization, once informationization becomes the focus of military strategy, it is supposed to bring momentum to the research and development of satellites. Therefore, satellite development can be considered as an index to check whether the military strategy is being implemented or not. China is continuously making progress in satellite development. Especially since the informationization was included in the guideline in the Hu Jintao era, satellites started to be able to conduct space information support missions. In conclusion, China is sincerely fulfilling its military strategy through consistent satellite development. On the other hand, “local war” is another focus of strategic guidelines, it implies that China considers its national interests mainly concentrated on this region so far. However, while China’s interests are expanding globally, China will need to cultivate its global dominance based on rapid satellite development to safeguard the global interest; -

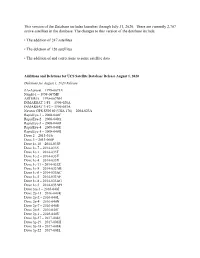

This Version of the Database Includes Launches Through July 31, 2020

This version of the Database includes launches through July 31, 2020. There are currently 2,787 active satellites in the database. The changes to this version of the database include: • The addition of 247 satellites • The deletion of 126 satellites • The addition of and corrections to some satellite data Additions and Deletions for UCS Satellite Database Release August 1, 2020 Deletions for August 1, 2020 Release ZA-Aerosat – 1998-067LU Nsight-1 – 1998-067MF ASTERIA – 1998-067NH INMARSAT 3-F1 – 1996-020A INMARSAT 3-F2 – 1996-053A Navstar GPS SVN 60 (USA 178) – 2004-023A RapidEye-1 – 2008-040C RapidEye-2 – 2008-040A RapidEye-3 – 2008-040D RapidEye-4 – 2008-040E RapidEye-5 – 2008-040B Dove 2 – 2013-015c Dove 3 – 2013-066P Dove 1c-10 – 2014-033P Dove 1c-7 – 2014-033S Dove 1c-1 – 2014-033T Dove 1c-2 – 2014-033V Dove 1c-4 – 2014-033X Dove 1c-11 – 2014-033Z Dove 1c-9 – 2014-033AB Dove 1c-6 – 2014-033AC Dove 1c-5 – 2014-033AE Dove 1c-8 – 2014-033AG Dove 1c-3 – 2014-033AH Dove 3m-1 – 2016-040J Dove 2p-11 – 2016-040K Dove 2p-2 – 2016-040L Dove 2p-4 – 2016-040N Dove 2p-7 – 2016-040S Dove 2p-5 – 2016-040T Dove 2p-1 – 2016-040U Dove 3p-37 – 2017-008F Dove 3p-19 – 2017-008H Dove 3p-18 – 2017-008K Dove 3p-22 – 2017-008L Dove 3p-21 – 2017-008M Dove 3p-28 – 2017-008N Dove 3p-26 – 2017-008P Dove 3p-17 – 2017-008Q Dove 3p-27 – 2017-008R Dove 3p-25 – 2017-008S Dove 3p-1 – 2017-008V Dove 3p-6 – 2017-008X Dove 3p-7 – 2017-008Y Dove 3p-5 – 2017-008Z Dove 3p-9 – 2017-008AB Dove 3p-10 – 2017-008AC Dove 3p-75 – 2017-008AH Dove 3p-73 – 2017-008AK Dove 3p-36 –